What's new in visionOS 2

This was predicted to be a big year for visionOS. Now that the dust has settled on the version 2 announcement at WWDC 2024, here’s what it means for developers.

“Worldwide” Release

Finally, Apple Vision Pro is going global, with availability in China, Hong Kong, Japan, and Singapore starting last month. Australia, Canada, France, Germany, and the United Kingdom follow on 12th July.

This is the single biggest piece of news for the future of the device. My concern is that, now the initial excitement has worn off, Apple will have to work hard to drum up new interest to fuel those sales.

WWDC 2024

As hoped, many new features have been added to visionOS 2: much-needed improvements to Volumetric Windows, (Enterprise-only) APIs for camera access, TabletopKit, and object tracking.

There’s a selection of videos from WWDC that cover the various topics, and I encourage you to watch them all (Apple, or YouTube). I particularly enjoyed the design-focussed sessions, as we all (including Apple) explore ways to get the most out of this new platform.

Hidden Gems

Here are some of the (perhaps) less obvious things announced that should be on your radar as you explore what’s possible.

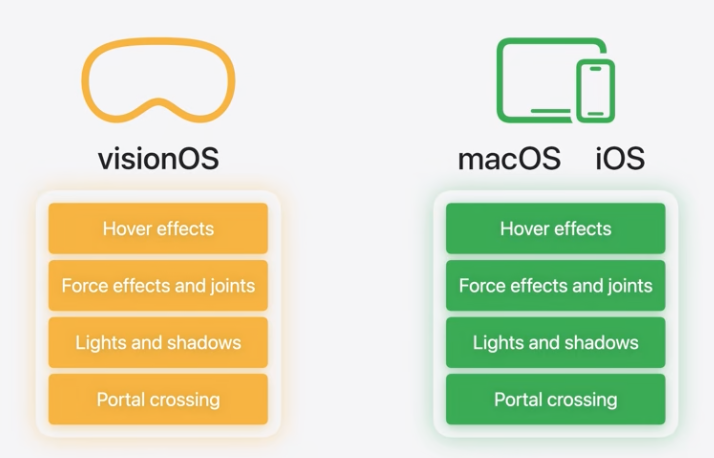

RealityKit 4 is available across iOS, iPadOS, macOS and visionOS, so it’ll be easier to share code and experiences across the different systems. It also gains features such as anchoring that were previously only available in ARKit, so this is the beginning of the end for that API.

Plane anchors have received other updates too, with more flexibility and more information available about them. It should be possible to be more confident about where things are being placed around the user, particularly when combined with the new room detection features.

Window positioning and opening have been improved. It’s now possible to push a window, which replaces the current window rather than opening a new window instance as openWindow does. With pushWindow, when you close the new window, the old one is automatically re-opened. You also have control over where a window opens relative to its parent. This allows you to position new windows next to their presenter, or even right in front of the user as a “utility” window.
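As a rough sketch of how these window APIs fit together, assuming a visionOS 2 target: the app, view and window identifiers below (WindowDemoApp, MainView, "main", "detail", "inspector", "tools") are made up for illustration. Only pushWindow, openWindow, defaultWindowPlacement and WindowPlacement come from SwiftUI.

```swift
import SwiftUI

// Hypothetical app: every name and window id here is illustrative.
@main
struct WindowDemoApp: App {
    var body: some Scene {
        WindowGroup(id: "main") {
            MainView()
        }

        // Shown with pushWindow, so it takes the place of the main window.
        WindowGroup(id: "detail") {
            Text("Detail")
        }

        // Opens next to the main window when it is on screen.
        WindowGroup(id: "inspector") {
            Text("Inspector")
        }
        .defaultWindowPlacement { _, context in
            if let main = context.windows.first(where: { $0.id == "main" }) {
                return WindowPlacement(.trailing(main))
            }
            return WindowPlacement(.none)
        }

        // Opens close to the user as a utility panel.
        WindowGroup(id: "tools") {
            Text("Tools")
        }
        .defaultWindowPlacement { _, _ in
            WindowPlacement(.utilityPanel)
        }
    }
}

struct MainView: View {
    @Environment(\.pushWindow) private var pushWindow
    @Environment(\.openWindow) private var openWindow

    var body: some View {
        VStack {
            // Replaces this window; closing "detail" brings it back.
            Button("Show detail") { pushWindow(id: "detail") }
            // Opens additional windows alongside this one.
            Button("Show inspector") { openWindow(id: "inspector") }
            Button("Show tools") { openWindow(id: "tools") }
        }
        .padding()
    }
}
```

Pushing suits drill-down flows where the parent has nothing useful to show while the child is open. A placed openWindow is the better fit when both windows need to stay visible.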

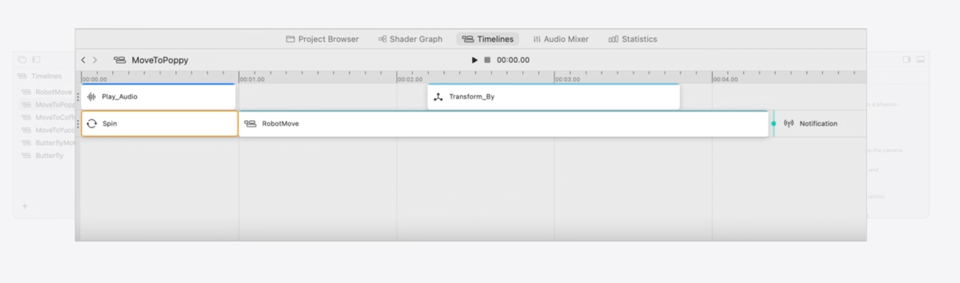

Bones, IK (Inverse Kinematics) and blend shapes have all been added to Reality Composer Pro, which is now turning into a fully fledged tool. Apple has also added a Timeline view, which is very reminiscent of authoring Adobe Flash experiences back in the day. I’m interested to see how far this feature can be pushed. The more RCP can bridge the gap between source material and the app, the easier it will be for more developers to create spatial experiences, so I encourage this direction.

Hand tracking has gained a predicted tracking mode, which improves latency at the expense of accuracy. It’ll be interesting to see if this helps with some of the issues with slow hand tracking in visionOS 1.
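One way to try the predicted mode is through a RealityKit anchor. This is a minimal sketch, assuming the view is shown inside an immersive space on visionOS 2. The view name and the small sphere marker are made up for illustration, and the fingertip location is just one of the available hand locations.

```swift
import SwiftUI
import RealityKit

// Hypothetical view: attaches a small sphere to the right index fingertip.
struct FingertipMarkerView: View {
    var body: some View {
        RealityView { content in
            // .predicted trades some accuracy for lower perceived latency;
            // the default tracking mode is .continuous.
            let anchor = AnchorEntity(
                .hand(.right, location: .indexFingerTip),
                trackingMode: .predicted
            )

            let marker = ModelEntity(
                mesh: .generateSphere(radius: 0.01),
                materials: [SimpleMaterial(color: .green, isMetallic: false)]
            )

            anchor.addChild(marker)
            content.add(anchor)
        }
    }
}
```

Switching the same anchor back to .continuous makes a quick side-by-side comparison of the latency and accuracy trade-off.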

Lastly, customising the immersion experience is now possible, with control over how much immersion an immersive space allows using ImmersionStyle. When combined with tinting the camera passthrough with a custom colour through SurroundingsEffect, this will add more flexibility to immersive experiences.
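The two can be combined along these lines. This is a sketch rather than a recommended setup: the app name, space id, immersion range and tint colour are arbitrary choices for illustration. The progressive style with a range and the colorMultiply surroundings effect are the visionOS 2 additions.

```swift
import SwiftUI

// Hypothetical app: names, range and colour are illustrative.
@main
struct ImmersionDemoApp: App {
    // Lets the user dial immersion between 20% and 100%, starting at 50%.
    @State private var style: ImmersionStyle = .progressive(0.2...1.0, initialAmount: 0.5)

    var body: some Scene {
        WindowGroup {
            Text("Open the immersive space to see the effect")
        }

        ImmersiveSpace(id: "tinted") {
            Text("Immersive content")
                // Tints the passthrough around the content.
                .preferredSurroundingsEffect(.colorMultiply(.purple))
        }
        .immersionStyle(selection: $style, in: style)
    }
}
```

Narrowing the range is a simple way to stop an experience from ever fully hiding the user’s surroundings.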

What’s Next?

I was pleased to see Apple opening up the sensors on the device further, even if it’s only to Enterprise users at the moment. Realistically, beyond entertainment and productivity there’s such a small market for spatial consumer apps that it makes sense to focus on the enterprise in the immediate future. I do hope, though, that this is a path towards greater access to the device for all use cases.

Even with the worldwide release, I don’t see Apple Vision Pro being a dominant force in the market this year, but at least we have more tools and features as developers to continue bridging the gap.

Although this year wasn’t a leap forward for visionOS, it brought enough new features and incremental updates to keep the journey exciting.

Happy Developing!