c is for computer

each issue of new terms & conditions is an entry toward building abc glossary — a glossary for an anti-colonial black feminist critical media ecology. contribute your own new term & condition at abcglossary.xyz to be part of this project.

computer. compute. computing. computing computer computes.

i’ve come to love the word computer: the press followed by the pursing of the lips, and, depending on your regional style, the roll of the tongue at the roof of your mouth or the release of air between your teeth to sound out the letter “t.” in just three syllables, it is a word that already holds layers of meaning as a noun and as a verb, one that came into being through the birth of data science, militaristic research, and mathematical computation.

a current definition describes a computer as a machine that is programmed to carry out a set of functions (the core software that runs it is called an operating system, hence the “os” in iOS). however, the actual computing of computers was once performed by humans.

In contrast to the singular notion of the “computer” we think of today, early computers required programmers to reorient arrays of cable connections, tediously input binary figures through physical switches, feed a roll of paper tape into the machine or type the code into a typewriter. These varied methods of interface had different benefits and were developed at different times for the same machines — there was no singular format for interfacing with a computer (the single-channel monitor or phone screen interface that has come to define PCs and smartphones was designed later on, and for laypeople).1

— American Artist, “Black Gooey Universe”

before machines, we were the first computers, and more than that, “there was no singular format for interfacing with a computer.” this sense of dynamism gets lost in reductive definitions of computers as inanimate objects whose sole purpose is to function as part of a system. in earlier understandings of computers, the active relationship between humans and the technology was highly visible. with the evolution of the computer into a single-channel monitor, what also gets lost are the relations of power and labor that were and are embedded in computing:

The computer was a person. The term “computer” was first documented being used in the early 17th century to refer to a person who computes. Master of computing Alan Turing described the “human computer” as someone who is supposed to follow fixed rules with no authority to deviate from them in the slightest bit.

Teams of human computers who, in the beginning, were usually women, were commanded to perform long and tedious calculations by their masters, who were always men. Like slaves tethered to the switch, the movements of human computers were imposed on them by the calculated commands of their masters.

The slave is a human computer.

The human computer is a machine.2

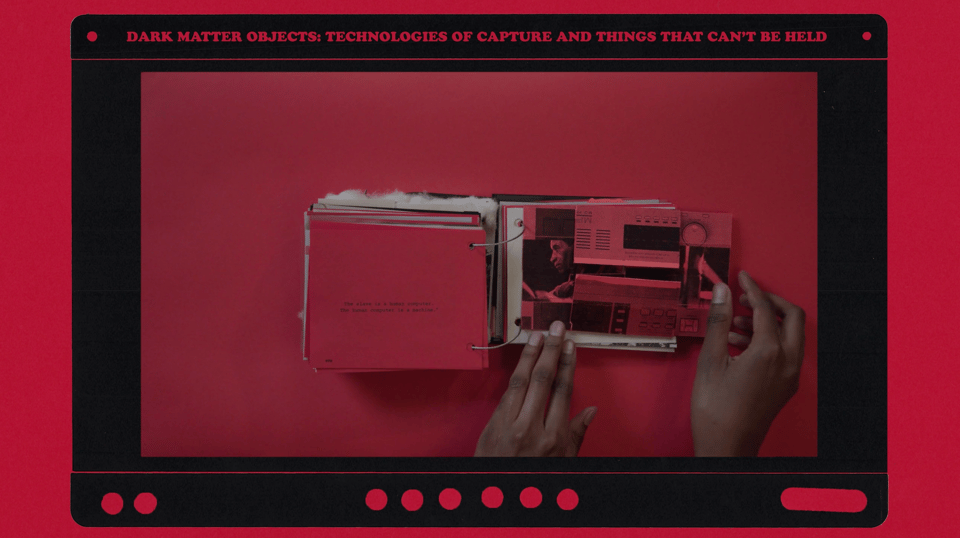

— Neta Bomani, Dark matter objects: Technologies of capture and things that can't be held


as the computer shrank, the enormity of the power relations and labor economies in computing could be boxed up and distributed globally, through a little black screen. and we, the customers, simply became “users.”

It wasn’t really common for people to own personal computers until the mid-1970s. But when they did, the term “computer owner” never really took off. Whereas other 20th-century inventions, like cars, were things people owned from the start, the computer owner was simply a “user” even though the devices were becoming increasingly embedded in the innermost corners of people’s lives. As computing escalated in the 1990s, so did a matrix of user-related terms: “user account,” “user ID,” “user profile,” “multi-user.”3

— Taylor Majewski, “It’s time to retire the term ‘user’”

recognizing our capabilities as human computers should not mean adopting the western patriarchal sense that ranks these abilities in a hierarchy.

i’m remembering my first trip last year to the dominican republic (DR), my wife’s homeland. we traveled from the capital to the countryside, and along the way we passed stalls selling produce. the stalls were often fashioned from the wood and branches of the surrounding trees, with natural fibers woven into baskets and into the mechanics of the stall itself.

at the time, i couldn’t find the words for something that seemed so trivial. i can now articulate that my awe and appreciation came from witnessing what often gets overlooked as technology. it is the technology our hands and bodies carry and build; it is the human computing that has created agricultural systems, that can program trees to become stalls that hold produce and feed our communities.

enslaved peoples in the deep South were early human computers, growing crops where only swamps existed and forming clay to architect buildings. they forged relationships with this land to yield produce, herbs, and cotton. computing is an ancestral science that exists in nature: understanding what grows around us, practicing the science of nurturing it, and taking only what we need so as not to disrupt the abundance and the cycles of time in which nature computes.

An ant colony, or a model of one, could be a computer. A tree could be a computer, its roots functionalized to measure changes in the soil and report back to us. A cluster of brain cells hooked up to sensors could be a computer. A computer could be liquid, or bacterial. If we could throw out our existing paradigms and look farther afield, we might really be surprised. Intelligence is everywhere, in different degrees and combinations. The question is finding applications that are suitable and mutually-beneficial.4

— Claire L. Evans, “From Silicon to Slime”

related: user, e-colonialism

Works Cited

“Black Gooey Universe,” American Artist, Unbag, 2018.

“Dark matter objects: Technologies of capture and things that can’t be held,” a riff on Fred Moten, Neta Bomani et al., 2021.

“It’s time to retire the term ‘user’,” Taylor Majewski, MIT Technology Review, April 19, 2024.

“From Silicon to Slime,” Willa Köerner, interview with Claire L. Evans, Ecologies of Entanglement (a collaborative series between Are.na Editorial and Dark Properties), August 5, 2024.
