Oooh, These AIs Be Lying

Happy Sunday,

The Indicator of the Week is 7. This week the United States Secretary of HHS released a major report that cited at least seven medical studies that were never actually published but instead were “hallucinated” by AI.

And that leads me directly to the conversation I want to have with y’all this week.

This year I had a small cohort of students, almost all of them boys, who were obsessed with using AI to complete their work.

For processing tasks in my class, I am not going to fight students about the tools they use, and I don’t really have a way to police what they use when they’re out of my sight. But when it comes time for the written in-class assessment, they’re on their own.

These students found themselves struggling. Because they had outsourced the cognitive labor, they had only a tenuous grasp of the material, and it showed on exams, repeatedly.

But it is worse than that.

Often AI just told them the wrong stuff.

Something important to keep in mind when it comes to AI is that it can produce confident but wildly inaccurate information, particularly when presented with a topic on which it has not been trained.

I have seen this “hallucination” of information dozens of times across my classes, necessitating this week’s AI intervention.

The Lesson

I began by briefly laying out the above to my students. I made sure to explain how AI models are trained and that a model will “hallucinate” when asked to provide information that hasn’t been fed to it previously.

I then modeled this for them, asking ChatGPT to give me some data about the US federal budget and then comparing the information it provided with reputable sources.

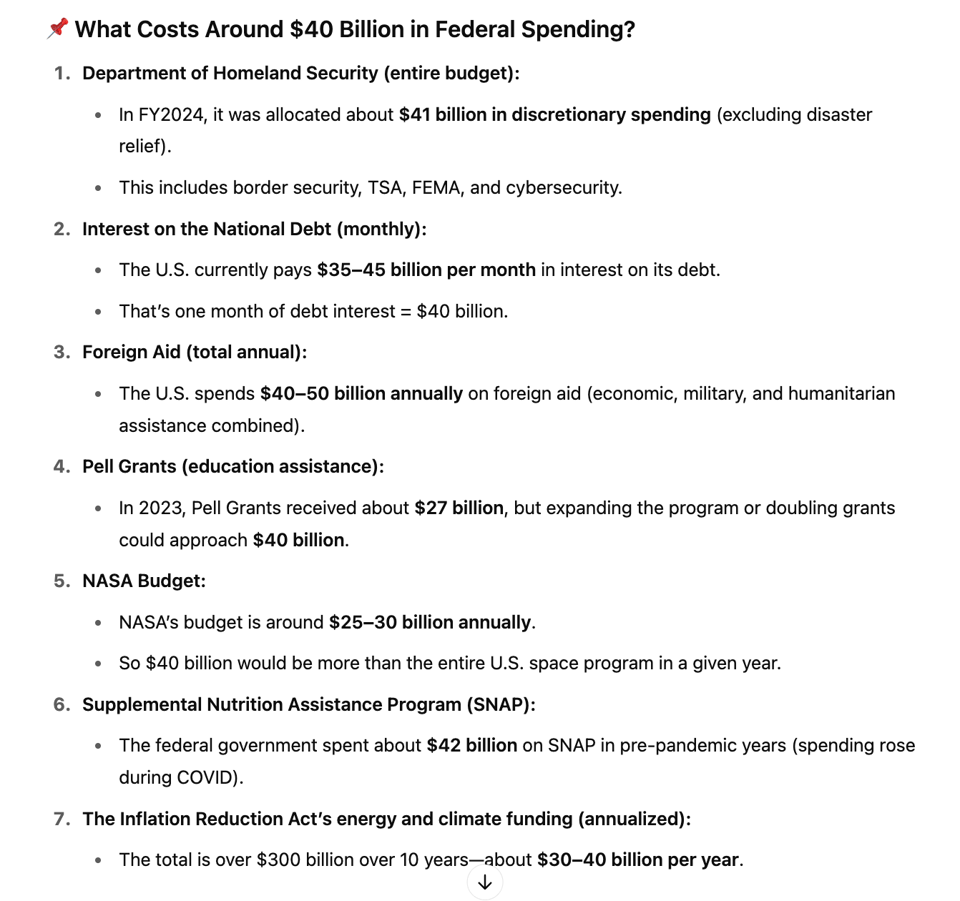

I offered a straightforward query: “I'm looking for examples of federal spending. In particular, I want examples for my students of various things the US government spends 40 billion dollars on annually. Can you provide me with some examples?”

ChatGPT spit out the following:

Several of these figures immediately failed the smell test.

So, I divided the class up and had them fact-check each figure against government websites and news sources. The results? Pure fiction.

It was off by over 100% on the Homeland Security budget. According to DHS, the 2024 budget was $103 billion, more than double the figure ChatGPT provided. Even the “discretionary” part was wrong: DHS puts discretionary funding at $60.4 billion.

It ended up being wrong about nearly all the figures it provided. The data sounded authoritative but was essentially useless.

I then asked my students to pick a topic they were intimately familiar with (their favorite band, their neighborhood back home, a distant relative, the plot of their favorite TV show), ask the AI about it, and look for similar errors. They were everywhere.

Wrong mascot for an old school in the US.

Wrong names for the guitarist and bassist in their favorite band.

Wrong about details of a student’s old neighborhood in Beirut.

Wrong about the order in which their favorite author released their books, plus some made-up titles.

Wrong about the history of a football team in Egypt.

It was here that the lights really started to come on for them, and it began to sink in how much of the info these models produce is bunk. It was also the end of the fifteen minutes I had set aside, and other work was ahead of us.

Takeaways?

I wish I had run this mini-lesson at the start of the year when we were establishing our norms.

I wish we were collectively being more thoughtful about how we’re deploying this technology societally.

I wish we weren’t encouraging kids to jump on AI for research on topics in which they lack expertise.

I wish educators weren’t obsessing over ways to integrate AI technology into classroom practice without understanding its shortcomings.

I just wish we were smarter about all of it.