AI Tools Are Getting Better and AI Companies Are Getting Worse

The great shortcoming of the American system in our time is the inability of Congress to legislate, especially when the proposed laws threaten large corporations. Because Congress is captive to corporate dollars, we are captive to Congressional inaction. We can’t even pass a federal budget. We either pass continuing resolutions or face a shutdown when the prior CR expires. Then we pass a new CR after the shutdown and reset the clock.

The most meaningful pieces of legislation in my lifetime are the Americans with Disabilities Act (1990) and the Affordable Care Act (2010). So we’re averaging a major new US policy at a glacial pace, nearly every other decade. At this point, Congress can pass tax cuts when Republicans take power and fund the military-industrial complex on a bipartisan basis. Our leaders are allergic to meaningful problem solving for the big issues facing their constituents.

I have repeatedly, here and elsewhere, expressed my concerns about mainstreaming AI in our society without safeguards in place for personal privacy, intellectual property, and public safety. However, as if none of us had ever read a piece of dystopian literature, we continue down this path with no regulations or meaningful legislation anywhere in sight.

The line from an episode of the Ezra Klein Show that “AI technology will never be worse than it is right now” lives rent-free in my head. Below is a recent episode of the podcast where we talked about the murky moral minefield of AI.

This is a long way of saying the inaction from Congress on AI is predictable and dangerous. These companies may make our lives easier, but they will also do irreparable harm with their act-first, reason-second business practices.

For example, continuing Google’s tradition of rolling out half-baked products, their new AI Search product (that literally no one asked for) is telling people that Barack Obama is a Muslim, that they should eat rocks as part of a well-balanced diet, and that they should put glue in their pizza sauce. There are screenshots of worse search results out there, but I’m training my brain to not believe everything it sees online.

Earlier this week, OpenAI, the AI company co-founded by Musk and backed by Microsoft, revealed its newest “free to use” AI model. According to OpenAI:

GPT-4o is much better than any existing model at understanding and discussing the images you share. For example, you can now take a picture of a menu in a different language and talk to GPT-4o to translate it, learn about the food's history and significance, and get recommendations. In the future, improvements will allow for more natural, real-time voice conversation and the ability to converse with ChatGPT via real-time video.

For example, you could show ChatGPT a live sports game and ask it to explain the rules to you. We plan to launch a new Voice Mode with these new capabilities in an alpha in the coming weeks, with early access for Plus users as we roll out more broadly.

GPT-4o is faster, has been trained on more (and more recent) information, and, most notably, will talk to you. At one point, it talked to you in a clone of Scarlett Johansson’s voice, inspired by the 2013 film Her. If you haven’t seen Her, peep the trailer below.

They initially offered to pay Johansson to voice their AI and, when she declined, they produced a soundalike version anyway. It is indicative of the culture of most of the AI companies: stealing the intellectual property and scholarship of the entire internet and selling it back to us as convenience. It’s theft on the grandest scale imaginable.

Yet, at the same time, I find (some) utility in these AI tools.

Last week, we attended a home “escape room” hosted by a friend. He bought an online kit. We tried to solve the mystery of a kidnapped artist using clues hidden around his living room. We failed. The artist drowned. But we all enjoyed playing the game and solving the clues.

Inspired by that experience, this week I started writing a murder mystery of my own. Using Claude 3 (for text) and Llama (for images), I wrote character profiles for the murder mystery dinner that Hope and I will host in the fall.

The game is set at the Al Hijar Hotel, an opulent location the AI and I conjured on the outskirts of Baghdad. It is set in 1921 as the British consolidated their rule over the former Ottoman Empire. Each character has a bio and fully fleshed out background.

This would have taken me weeks to complete on my own. I did most of it in two thirty-ish minute sessions. It wasn’t without hiccups. Llama’s image generator is buggy AF and I found myself telling it “yes, you actually can render images” repeatedly.

On Saturday morning, I completed the first phase of the game, assigning missions to each character in the hotel bar. I've created a set of interactions for each player on night one before the first body drops.

So I find myself betwixt and between.

I know these tools are vehicles of theft.

I know these companies employ warehouses of workers, some paid as little as $2 per hour, to train their language models.

I know that these companies have weirdo techno-triumphalist wet dreams about putting AI into every device that we use on a daily basis.

I know that these tools are in their infancy, with the worst harm likely still to come.

And I know when that harm comes, our leaders will all say “no one could have seen this coming.”

—

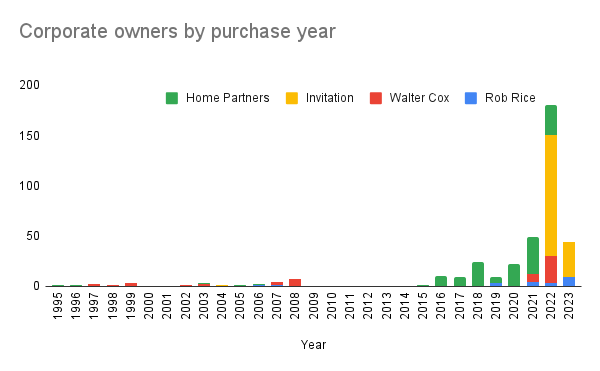

Lastly, on the podcast Monday, we’ll have a conversation with blogger Emmett O’Connell about how corporate and institutional landlords are hoovering up single-family homes in Thurston County, Washington, mirroring trends elsewhere. He wrote about it here.

Look for that conversation on The Nerd Farmer Podcast Monday.