To Understand Correctness, You Must First Understand Incorrectness

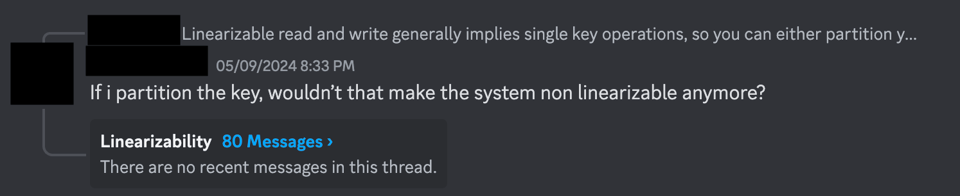

Recently I was in a Discord channel where someone wrote something akin to “I have a question about linearizability” which had an attached thread with 80 replies.

This is what game designers call "environmental storytelling."

Anyway, this exchange got me thinking again about why this is a famously confusing topic despite being so fundamental. I think the answer is that it’s very hard to wrap your head around something that is the “default.” Or rather, when you learn about a correctness condition which has always been met in any context you’ve ever interacted with, it feels like it’s saying nothing at all.

So let's concoct a real-world scenario that violates linearizability.

Art and Patrick and Tashi are trying to organize a get-together.

- The three have already agreed on a location to meet.

- Patrick calls Tashi to tell her he's chosen a new place to meet and gives her the address.

- Art calls Patrick, Patrick tells him that he just talked to Tashi and they're in fact going somewhere different, and that Art should call Tashi and ask for the new address.

- Art calls Tashi to ask for the address where they're going to meet, and she gives it to him.

This is a perfectly acceptable sequence of events and the three of them will now meet in the right place. But! It being correct depends on the communication between the three participants as being linearizable.

You can't really imagine this communication scheme not working, right? Like, your monkey brain whose job it is to find you nuts and berries sort of crumbles trying to envision a situation where this doesn't work. That's normal and natural: this is how our real world works! It's only in the messy world of concurrency and distributed systems where this sort of thing can fail.

Imagine the following situation where Tashi's knowledge of the world is asynchronously replicated to a secondary. The metaphor has become a bit tortured here but work with me and suspend some disbelief.

- Patrick calls Tashi to tell her he's chosen a new place to meet.

- Art calls Patrick and gets told to call Tashi.

- Art calls Tashi, but this time he speaks to her replica, which hasn't yet learned about the new location. Tashi gives him the old address.

This of course doesn't make any sense in reality, but it does make sense with computer systems talking to each other over a network. But a lot of our metaphors for understanding computer systems are based off of real world analogies. And mostly those are good. But sometimes they generalize sufficiently far that the analogies that once worked fine no longer hold up.

In my experience this is a fairly universal phenomenon. If you read, say, databases literature that predates the confluence of databases with distributed systems, you'll often find that they take linearizability as a given. If there was no circumstances where linearizability could be violated, then it's nonsensical to assume it in the first place. Nowadays, most databases are also distributed systems.

One way to think about things being linearizable is that a piece of data has a "home." A location that must be consulted in order for that data to be interacted with. This makes sense in the world of single-node databases where this is literally true: one machine "owns" the data and so a local mutex is sufficient for linearization over the data. This gets a little more abstract when we introduce consensus algorithms like Paxos, where the "home" for the data is some kind of superposition (I don't know what that word means but it felt right in my heart here) of all the consensus participants, and the consensus algorithm forces all interactions to go through at least one common machine.

Anyway, this is my advice to anyone who is attempting to understand any kind of correctness conditions like linearizability and serializability, which is to focus on the situations where it fails, and concoct real-world analogues where those things don't hold.

Add a comment: