Thoughts on DuckDB's Grammar Patching Thing

It is the job of modern programming languages to be amenable to abstractions. It should be easy for users to take a little bundle of functionality and reuse it, or build something more complex on top of it, or give it to someone else to use. A good programming language gives you a set of primitives and tools to combine them into more complex functionality.

So, let's be clear, this:

is not something you should want to do! The development of programming languages over the past few decades has been, at least in part, a debate on how best to allow users to express ways of building new functionality out of the semantics that the language provides: functions, generics, modules.

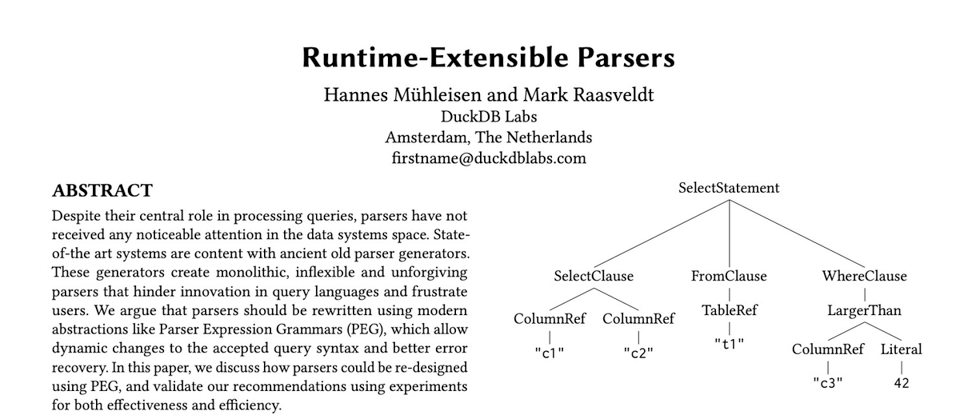

What are you supposed to do, though, when you have a language (SQL) that is incredibly popular, unkillable, and complete hostile to abstraction? You go down dark, demonic pathways like "users should be able to change the syntax of the language at runtime." This DuckDB blog post and associated paper propose that you give extensions (written in a language that is not SQL) an abstraction that lets them rewrite the way the language itself is parsed.

I think this is...basically a good idea? Particularly for analytics queries which are much more likely to be one-offs and don't need active maintenance, it's actually sort of appealing to be able to syntactically extend the query language to express some new idea or to interface with some external tool.

They propose, specifically, doing this via Parsing-Expression Grammars (PEGs), but that is basically an implementation detail. You could build the same dynamic hooks into a handwritten parser, but using something like PEGs gives you a consistent abstraction and API that you can expose to users.

PEGs are just a formalism for describing parsers. They are somewhat limited compared to full on monadic parsers*, but have some nice properties.

While I think this whole paradigm is an interesting idea as far as "I am providing a language and I want to give people hooks into it to extend it," perhaps we could go a level deeper. Every language I've ever worked on has mimicked the basic structure you see from an intro compilers course:

syntactic analysis for entire language

->

semantic analysis for entire language

That is, a monolithic parser which takes a string and returns a syntax tree for the language, which then gets interpreted in some way by the monolithic semantic layer of the system. That is, there are two main components, the parser, which understands all the syntax, and the semantic layer, which understands all the semantics.

If you accept that a grammar is something you are able to build up algebraically and dynamically, maybe there's another route you can go, which is to break up the language, somehow, by features which know both how they are to be described syntactically and how that syntax is to be interpreted semantically:

syntactic analysis for feature A

->

semantic analysis for feature A

----

syntactic analysis for feature B

->

semantic analysis for feature B

----

...

Is this a better architecture? I'm not sure!

Obviously this is not without its problems, there is some hairiness to be resolved here as the DuckDB paper calls out, and the SQL semantics are not really orthogonal enough to make this feasible for the common, baseline parts of the language. But I think this is an interesting idea! SQL itself has a lot of weird extensions even in the standard that have some level of utility but speaking from experience, you would not want clogging up your monolithic grammar file or AST definition.

I implemented a prototype of this idea (it's very hacked together, I slapped it together in a few hours, but it illustrates the basic idea) which you can find here. The best thing I can say about it is...it's at least kind of neat? You can see an example here of adding an array literal to the language after the fact:

func main() {

sys := NewSystem()

sys.RunCommand("SELECT 1 FROM b")

InstallSelect(sys)

fmt.Println("installed select extension")

fmt.Println()

sys.RunCommand("SELECT 1 FROM b")

sys.RunCommand("SELECT ARRAY[1, 2, 3] FROM b")

InstallArrayLiteral(sys)

fmt.Println("installed array literal extension")

fmt.Println()

sys.RunCommand("SELECT ARRAY[1, 2, 3] FROM b")

}

> SELECT 1 FROM b

error: no parser matched

installed select extension

> SELECT 1 FROM b

result: SELECT 1 FROM b

> SELECT ARRAY[1, 2, 3] FROM b

error: no parser matched

installed array literal extension

> SELECT ARRAY[1, 2, 3] FROM b

result: SELECT ARRAY[1, 2, 3] FROM b

Without going too deep into the parsing strategy, it's a pretty simplistic set of parser combinators derived from the grammar in the DuckDB post. For instance, here's how a SELECT gets parsed:

sys.Install("Select", Map(Sequence(

Optional(sys.Parser("WithClause")),

Word("SELECT"),

CommaSeparated(sys.Parser("AliasExpression")),

Word("FROM"),

Identifier,

Optional(sys.Parser("WhereClause")),

Optional(sys.Parser("GroupByClause")),

Optional(sys.Parser("HavingClause")),

Optional(sys.Parser("OrderByClause")),

Optional(LimitClauseParser),

), func(matches any) any {

ms := matches.([]any)

var with *WithClause

if ms[0] != nil {

with = ms[0].(*WithClause)

}

exprsA := ms[2].([]any)

exprs := make([]AliasExpression, len(exprsA))

for i := range exprs {

exprs[i] = exprsA[i].(AliasExpression)

}

tables := []string{ms[4].(string)}

var where *WhereClause

if ms[5] != nil {

where = ms[5].(*WhereClause)

}

var groupBy *GroupByClause

if ms[6] != nil {

groupBy = ms[6].(*GroupByClause)

}

var having *HavingClause

if ms[7] != nil {

having = ms[7].(*HavingClause)

}

var orderBy *OrderByClause

if ms[8] != nil {

orderBy = ms[8].(*OrderByClause)

}

var limit *LimitClause

if ms[9] != nil {

limit = ms[9].(*LimitClause)

}

return &Select{

with: with,

exprs: exprs,

tables: tables,

where: where,

groupBy: groupBy,

having: having,

orderBy: orderBy,

limit: limit,

}

}))

You can see an example of how an extension looks from the InstallArrayLiteral function:

func InstallArrayLiteral(sys *System) {

sys.InstallFront("Literal", Map(

Sequence(Word("ARRAY["), CommaSeparated(sys.Parser("ScalarExpr")), Word("]")),

func(matches any) any {

ms := matches.([]any)

exprs := ms[1].([]any)

result := make(ArrayExpr, len(exprs))

for i, expr := range exprs {

result[i] = expr.(Expr)

}

return &result

},

))

}

You might notice we have to use InstallFront (which adds a new rule with higher priority than the existing ones) to add a new derivation for Literal—this is because the ARRAY syntax is not prefix-free with column names, and if the existing parsers had priority over ARRAY, we'd parse ARRAY as a column name.

The prototype uses a kind of PEG-y set of parser combinators, but it's generic enough that you could slot in any kind of generated or handwritten parser into a nonterminal, as long as it adheres to the interface:

type Parser func(input string) (any, string, error)

Where a Parser parses some prefix of the input and returns some value along with the unparsed remainder. This stuff doesn't work as well as it would in a language friendlier to abstractions, but it's pretty usable, even in Go.

This has a lot of unanswered questions—how do you handle multiple extensions in a nice way, how do you define which nonterminals are accessible for extension (and how do talk about what the API of what a new installation should do), how do you make installing extensions typesafe, how do you provide good error messages, and so on. But I think it's a cool idea! And possibly a logical way forward for something like SQL.

* If you have never worked through Functional Pearl: Monadic parsing in Haskell you are depriving yourself of what I would call a religious experience.

Add a comment: