The Prequel to SQL is SEQUEL

Meta note: follow me on Bluesky if you are so inclined.

I've been going through some historical stuff lately. Been on a history kick. We went to see the Robert Caro Power Broker exhibit at The New York Historical and it really got me in a mood to be digging through some old musty documents. The paper SEQUEL: A STRUCTURED ENGLISH QUERY LANGUAGE by Don Chamberlin and Ray Boyce is as old and as musty as they come and describes the first real incarnation of what would later become SQL. Apparently someone else held the copyright on the name "SEQUEL" and so they had to shorten it. Jokes on whoever that was, because we still pronounce it way anyway.

This paper was really far ahead of its time, as far as an understanding of the importance of declarative programming and abstraction in data-processing. That said, reading it in 2024 it does show its age a little bit.

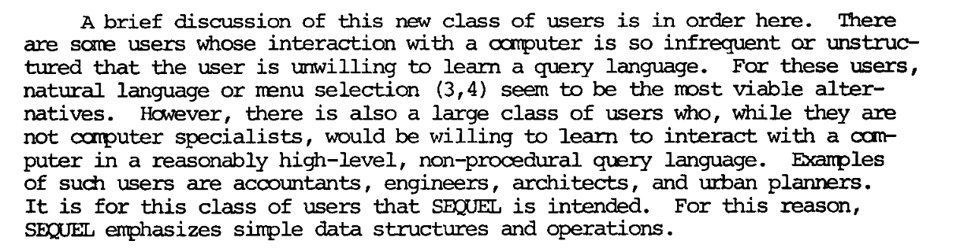

This one goes out to the real ones: the accountants, the engineers, the architects, and you know it, the urban planners.

It's funny to look at this and to remark, as the authors have in recent times, that as successful as SQL was, it turns out that they weren't really making it for the audience they thought they were. Certainly more people nowadays can write a SQL query than can write a C program, but I doubt that anyone for a moment would contend that it's not an activity firmly in the realm of programmers. I mean, are data people programmers (I'm not trying to gatekeep, I just don't really know what you people do)?

This paper is justified by way of improving on the authors's previous attempt at a language for relational queries that was called SQUARE. They say that SQUARE had too much mathematical notation and was too hard to type on a keyboard for the average user. But like, man, could any user type these things? What were you all doing in those days:

Was this the actual textual representation? I don't buy for a second that you lot had the parsing technology to parse these things. More on that later.

I want to clarify again, that this is obviously a landmark paper and, with the power of hindsight, one of the most influential papers of all time, and is a work of great engineering. That said, reading it in 2024 there is some obvious goofiness that I think anyone would admit to. But I say that with all due respect.

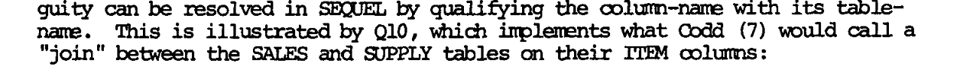

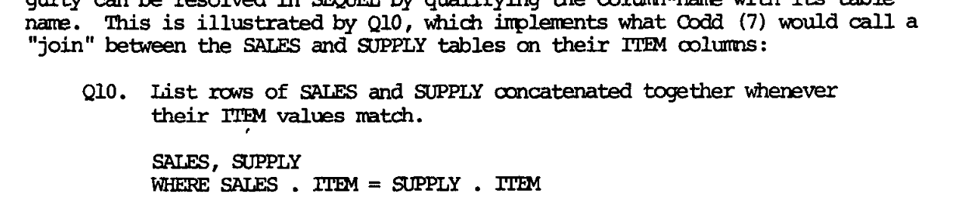

Remarkably, the word "join" appears only once in the entire paper, and it hilariously is with a tone of mild derision:

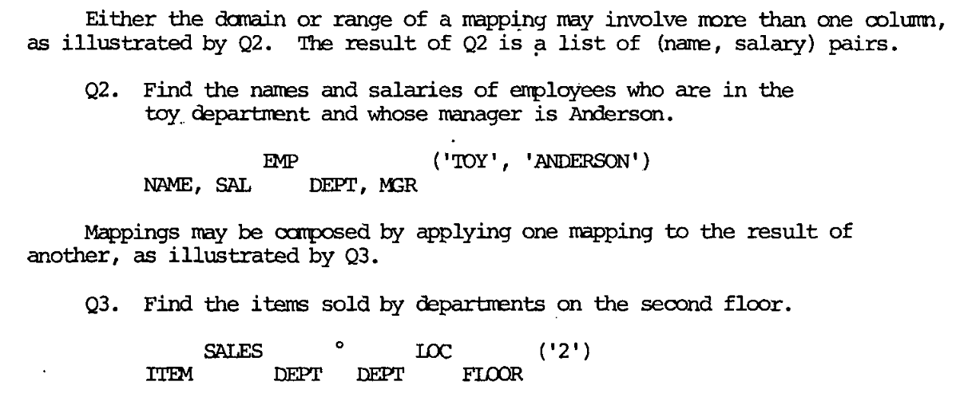

but the SQUARE query from above in fact also describes a join:

SALES ◦ LOC ('2')

ITEM DEPT DEPT FLOOR

This is saying: find the values in LOC that have FLOOR = 2, then pick the DEPT column from there. Then find all the values in SALES having those DEPT values and pick the ITEM column from there. Data is flowing right-to-left here which is a bit interesting. I don't know if the layout they have here is just an artifact of the typesetting or if the intention was actually to write them like this, or what.

In modern SQL you might write this like this:

SELECT DISTINCT

item

FROM sales, loc

WHERE sales.dept = loc.dept

AND loc.floor = '2'

Interestingly they didn't go even further with this and write it as the three-way join between SALES, DEPT, and the relation [FLOOR=2], instead still choosing to express the constraining as a kind of predicate.

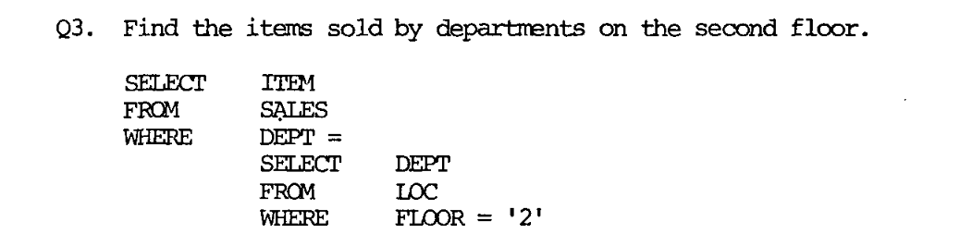

The query expressed in their SEQUEL looks more different from the SQL than you might expect:

Yes! One of the earliest examples they use involves an honest-to-goodness subquery. Though a weird, unparenthesized one that would not parse today. Presumably they were aware at the time that this could be rewritten to use an explicit join, although perhaps not, as, like I said, they call out their use of an actual join later, without drawing a connection to Q3:

So it's not really clear to me, the extent to which they understood the connection between subqueries and joins. I'd be willing to bet, based on the earliness of their work (System R was still six years away, at this point) that some things that might seem obvious now were not so clear at the time. But we now understand that transforming queries in the position they pose there into explicit, flat joins is an important job of a query planner.

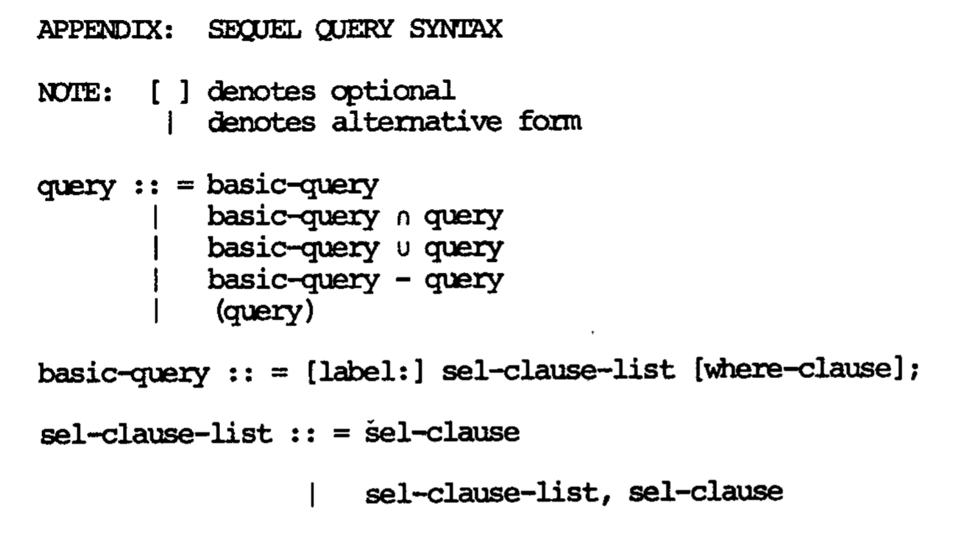

One remarkable thing about this paper is that they provide a BNF grammar at the end:

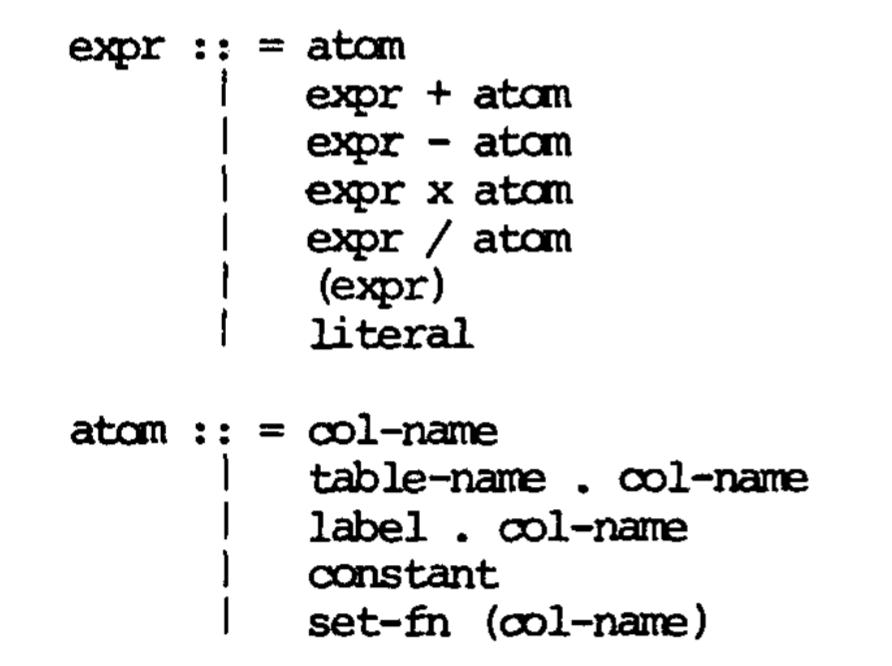

How nice! Now, hang on a second... What's their "expression" syntax?

Okay, so, if you're not used to reading and evaluating these kinds of grammars, it might not be obvious to you: this grammar is...pretty bad. It doesn't really make sense for a handful of reasons (you can check the paper yourself for the full grammar):

- It is left-recursive, which means it's not easily parseable by a recursive-descent parser. It is pretty typical nowadays that if you have the luxury of designing a grammar from scratch, you should make it context-free and easily parseable by a recursive-descent parser (the classic example of "bad syntax design" is that C requires a symbol table to be parsed, since you need to be able to distinguish a type name from a variable name).

- Actually it's...not even really recursive? You can't have complex expressions on the RHS.

1 / (2 + 3)is not a valid expression in this grammar:atomdoesn't loop back around toexpr. - It doesn't do any kind of operator precedence! Especially for a language aimed at non-technical users this is crazy.

Now, I have a couple hypotheses on why such a grammar made its way into this important paper. But I'm just talking out of my ass here.

- They just didn't know any better. Parsing was still pretty early days in 1974, and it wouldn't be shocking to me to learn that good grammar practices hadn't permeated the broader computer science consciousness.

- They didn't really care. Nobody was using this for industrial purposes yet (and these problems would be fixed in the full System R paper, which contains a much more thorough and well thought-out BNF) and the sense I get from reading a lot of papers in those days was that reviewers sometimes wanted a BNF for box-checking purposes.

- This didn't actually represent the grammar they were using. I've been told that it was common to just pop out of a generic parser into the shunting yard algorithm or similar in order to parse precedence expressions (as it is common to do today with precedence climbing [1]).

Anyway. This is a fun paper, and while its differences from modern SQL are pretty entertaining, it's sort of shocking how fully-formed the overall concept of the language was even in its first iteration. I should again emphasize, that the problems I've outlined here were largely fixed in the first real system bearing this language, which was System R.

[1]: See this video for my favourite presentation of the idea.

Add a comment: