Pushing Values to Zero

There is a popular presentation of the exponential distribution that goes through the geometric distribution. The geometric distribution arises from flipping a weighted coin until you see a heads, and reporting how long it took. It can be simulated with this code:

def geo(p):

count = 1

while rand() < p:

count++

return count

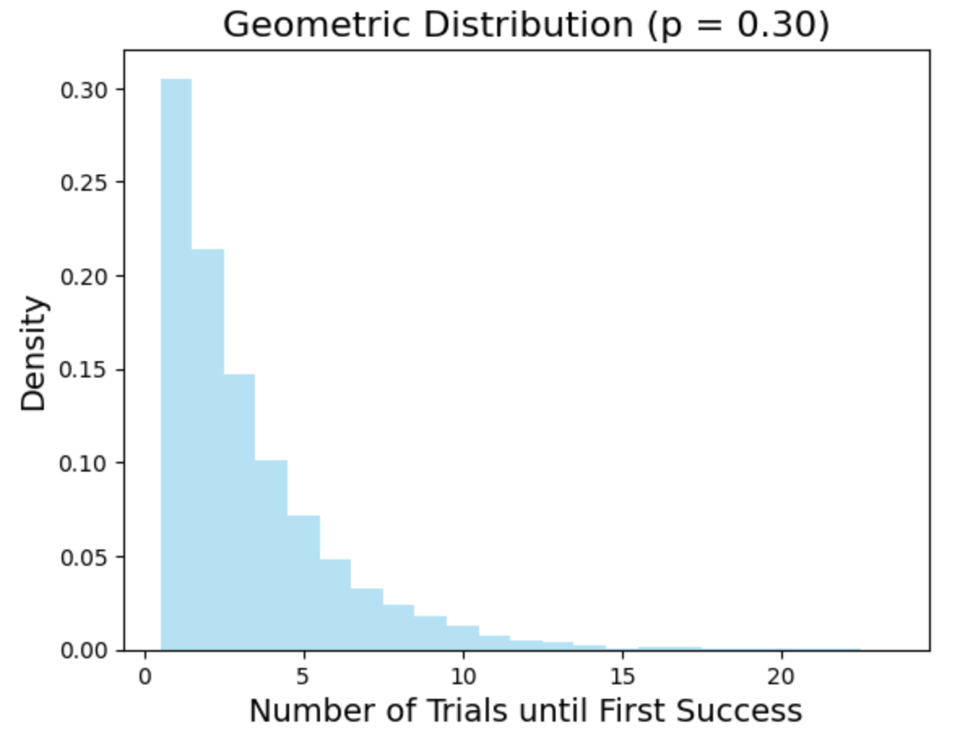

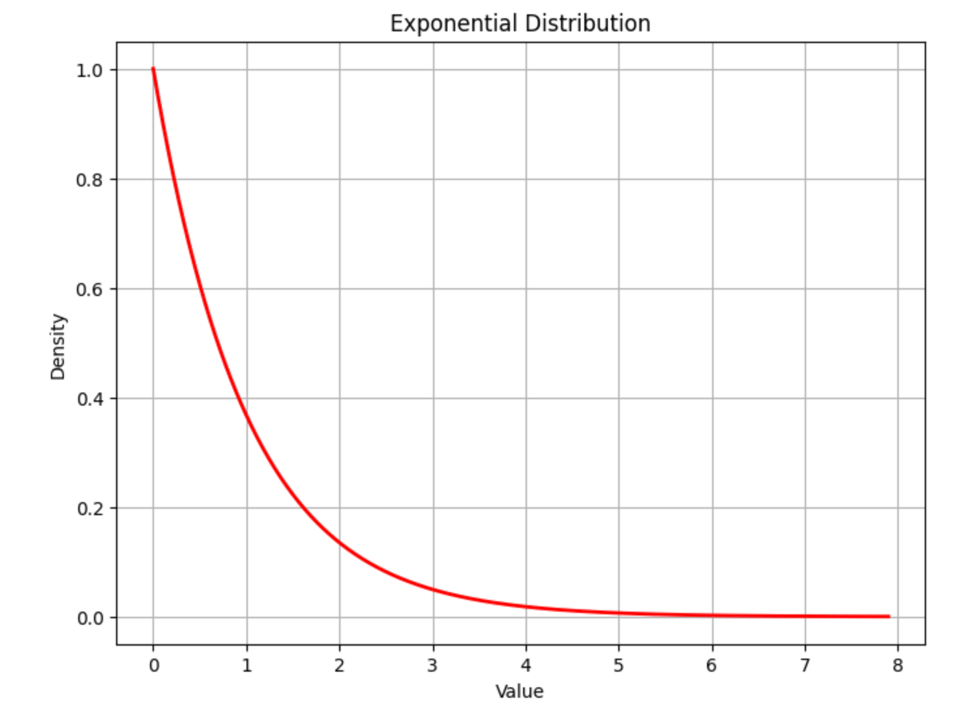

The geometric distribution is very natural and very easy to analyze and has all sorts of nice properties. Its continuous analogue is the exponential distribution, which can be thought of as decreasing the probability of a success to almost zero and increasing the number of trials performed in a particular period of "time."

As the probability that any particular trial succeeds drops to zero and the number of trial rises, it smoothes out and in the limit, we get a continuous distribution.

There's other ways to understand and define the exponential distribution, but a lot of teachers will go this way either to introduce the concept, or after introducing the concept to show that despite the fact that it's weird and continuous and scary, it can be understood in terms of something you already understand by driving one of its parameters to some extreme value.

I think this pushing around of parameters can be a good way to understand ideas in general: in CockroachDB (when I was there) we used 64MB as the target size of a "range" (the unit of data that would be replicated and moved around between nodes). A discussion I had with someone more familiar with the system once was about what the factors are that stop this value from being much larger or much smaller.

If the range size is too big:

It takes starts taking a long time to migrate data from node to node and increases the probability that such an operation will fail.

You don't have the level of granularity you might otherwise want to bin-pack the ranges onto different nodes, or to load balance hot data away from other hot data.

Increased contention as writes to the same range all need to be crammed through a single raft log.

If the range size is too small:

Increased overhead, since each range has its own consensus group.

Decreased locality, since CockroachDB is range-sharded and ideally data that's accessed together lives on the same range.

Most of the time, if you look at a number in a system and do this exercise of prodding it up and down, you can tease out the forces that are pushing on it in each direction that make its actual, present value appropriate (and sometimes you can find that it can be comfortably much larger or smaller).

Sometimes you don't find those forces, though! Or you find that the forces can be ignored in some cases.

I recently was discussing a system with a colleague that works the following way: data is read from Kafka and stored, aggregated, in a local LSM. Every d interval of time (d being like, O(minutes)), any new files are uploaded to cloud storage along with an updated manifest and the latest Kafka offset that the system read from. If the system dies, a new one comes up, downloads the manifest and all the files it needs, and begins reading from the same Kafka offset.

What are the factors pushing d to be bigger or smaller?

If d is too big, you have to read more data from Kafka on startup, which means a slower time getting back to processing live data.

If d is too small, you're more likely to upload small, short-lived SSTs that won't actually be relevant for very long (and still pay the 1 day minimum S3 charge).

But sometimes, like in this case, driving the values down to zero presents an alternative: what if we didn't care about uploading small, short-lived SSTs. What if we buffered data for longer in memory and only made larger SSTs? If we did this, and we knew d was basically zero, we could just upload them to cloud at the same time that we wrote them out to disk. And once we're doing that, well, maybe we don't even need to write them to disk in the first place: we can just write them straight to cloud storage and then periodically compact them. And at that point we've rederived something like SlateDB.

This has some problems, like it has bad read latency, so if you often need to read the data, or it's larger than memory, or something, it might not be appropriate. And I'm not trying to suggest that this is actually a reliable way to like, generate new ideas, but I think it is a reliable way to get a handle on the design space for an existing idea.

Links

I know I have been so extremely unreliable when linking to stuff, to the point where it’s not really a fixture of this newsletter, but I couldn’t resist with this post from Tom Moertel. It’s got it all: SQL, probability, randomized algorithms, everything a canonical NULL BITMAP reader enjoys. Highly recommend checking it out as I’d never heard of the algorithm described and it comes with an excellent explanation of why it works.

Add a comment: