In Codd we Trust (or not)

Get in loser, we’re doing another Codd philosophizing session. Codd’s paper introducing the relational model opens up like so:

Future users of large data banks must be protected from having to know how the data is organized in the machine (the internal representation).

This is a very strong statement. And why am I quoting it? Why should I care what some guy with a mustache who never even had to type rails new has to say about software engineering? Well, his point is pretty simple, actually, and mostly comes down to a conviction that the logical schema of a database and its physical schema are two distinct concerns that merit separation. If you start from the perspective that you want to be able to tweak those two things independently of one another, you’d probably arrive at much the same place as Codd. The relational model works well for this because its level of abstraction is high enough that it doesn’t really allow you to express physical concerns in its queries, or at least, lets you avoid doing so most of the time. This is where we get the need for a sophisticated query planner: even if the programmer could tell you how to compute a particular query, we want them not to, because we want to decouple “what are you retrieving” from “how do you retrieve it.”

Anyone who has worked on more than one software system will probably tell you that this is an acceptable mode of operating sometimes. Sometimes it’s good, for design purposes, that we express all of our queries logically and then let the computer sort out the messy, goopy, sticky bits. Especially if we have a system that’s not performance sensitive, it’s a really nice property to be able to keep your queries focused on their semantic meaning.

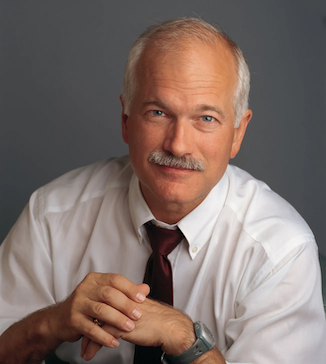

Sometimes that’s not appropriate though. Nelson Elhage has a post that I think is a fair, well explained discussion of why someone would object to this mode of interaction with a database system (referring specifically to the section “I dislike query planners”). In particular, systems with tight performance and predictability requirements have a stronger desire to do minimal “magic” on a query. This was part of why I was careful in this post not to call out any of these databases as “deficient” for not doing a particular query: there is inherent risk in any optimization you perform and turning what the user wrote into something bad is much less forgivable than not turning what the user wrote into something good.

This is part of why I find it so odd that while compilers have had standards for “optimization levels” for…as long as I’ve been interacting with them, there isn’t really any kind of similar thing in any databases (that I know of). The answer I tend to give to people is “different databases are for different purposes.” As in, you expect SQLite to perform different kinds of optimizations than PostgreSQL or Snowflake. But at least some of these databases are intended to be flexible tools that aren’t just for one particular workload, and as such, you might expect them to expose different levels of aggressiveness when it comes to doing query optimization. But beyond some stuff like, some databases allow you to disable specific optimization rules, I’m not aware of many systems that actually expose that sort of thing, like, “no trust me, I have thought about it, do not reorder my joins here, babe.”

When you’re really into this whole world of “declarative! Declarative!!!” It’s really easy to get caught up in ideas like, “it’s good for people to just describe what they want their programs to do, because then future versions of the compiler or planner or whatever will be able to optimize it without the programmer having to update it.” I think in practice this is…not really particularly valid. A lot of mature databases will allow you to mitigate the risk of using a query optimizer by shipping all the older versions of it. In Oracle, you can use the OPTIMIZER_FEATURES_ENABLE parameter to lock in your queries to a particular version of the optimizer, so if some new transformation comes along, it doesn’t break your carefully crafted query. Basically query optimization is a very chaotic, very risky process and basically every decision your query planner makes needs to weigh the potential benefits of it against the potential dangers of it.

I’ll admit to never having met Codd, I was already ten by the time he died so I suppose I don’t really have much of an excuse but the version of him that has been constructed in my personal mind palace, a bit of a lovable, insufferable nerd, rolls around in his grave when he sees people specifying how their declarative SQL queries should be run. Actually by my estimation of his writing he probably rolls around when he sees we’re specifying the queries in English rather than algebra, but that’s besides the point.

Databases, more than probably any other piece of software, are a synthesis of a lot of different ideas in computing. People (database people, specifically) always say that databases are the synthesis of all the different disciplines of computing, and alongside operating systems, there are few tools that are expected to serve as wide an array of roles as a general-purpose SQL database. There’s a lot of push and pull in the world of “what is a database” between people who want them to be smart* and solve all kinds of problems automatically and people who want them to just do what they’re told, and I’m not sure that databases today do a good job of filling both roles.

* When I first wrote this I linked to the OtterTune website but in the interim that link has taken on something of a different texture.

Add a comment: