Are Correctness Conditions Descriptive or Prescriptive?

Please see the bottom of this issue for a programming note!

Correctness conditions constrain the sequences of events that are allowed to occur in a system like a database. If we observe this series of events:

READ x -> 8

SET x = 3

READ x -> 8

READ x -> 3

We might say that consistency has been violated (it's important to remember: this is the "consistency" in the CAP theorem, not the "consistency" in ACID).

If we observe this series of events:

x = 2

Begin transaction A

A writes x = 6

Begin transaction B

B reads x = 6

A aborts

B commits

We might say that isolation has been violated.

These together fall under the umbrella term of a correctness condition and one way of thinking of them is as sets of histories. A history is just a sequence of events, as laid out above. A correctness condition is a way of classifying histories as "okay" or "not okay."

Lots of correctness conditions are fairly natural. A history is "linearizable" if it respects causality (in some sense that can be made precise). A history is "serializable" if no participant can tell that there was any concurrency. These are both concepts you would naturally come up with if you were sitting at a table with a piece of paper, trying to think of what are reasonable guarantees to enforce.

Others are less natural. A history is snapshot-isolated if everyone reads from a specific point in time and no two committed concurrent transactions have overlapping write sets. By contrast to linearizability and serializable isolation, this doesn't sound natural at all! Why the write sets and not the read sets? Why have that requirement at all? It seems like nobody should care about this to the same extent they care about other conditions?

Nonetheless, they do, and the reason is that snapshot isolation is very natural from an implementation standpoint. It is the easiest isolation level to implement with MVCC that still provides some amount of ability to control whether transactions are allowed to commit or not.

So, you might not be able to invent snapshot isolation in a vacuum, but looking at the rest of the world around it, it makes sense to come up with.

This raises a philosophical question in general about what we're saying when we talk about correctness conditions. In a precise, technical sense, we're talking about sets of histories, which provides a good theoretical foundation: it's easy to say "correctness condition A is stronger than correctness condition B if A's allowed histories are a subset of B's allowed histories."

In a looser sense what is happening here can be interpreted two ways. One is, "we'd like our systems to work this way, so here is a spec for implementors to target," and the other is "our system seems to work this way, here is what you can expect." And sometimes they start as the latter and become the former as people get used to them. Second-type conditions aren't just like, we threw transactions at the wall willy-nilly and out popped some correctness. If the systems weren't created with any guarantees at all then everything would just be not concurrency-controlled and we wouldn't have these complex conditions in the first place.

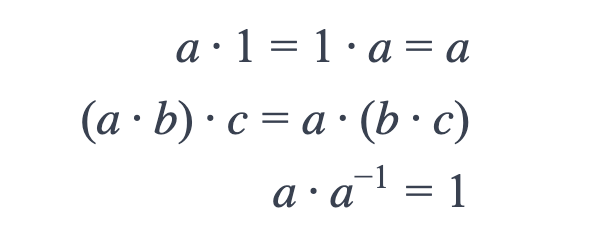

A lot of things can be approached from these two perspectives: consider the group axioms:

Are these valuable because someone dreamed them up one day and realized they can then derive lots of useful things from them? Or are they valuable because we observed that a lot of things we cared about behaved this way? The way we teach undergrads is that it's the former, because we need them to just take on faith from the get-go that this is an important concept. But the more historically accurate approach is that it's the latter (...sort of): we use this definition because we found that it accurately described a lot of things we already deemed important (specifically the symmetry of polynomials, but doing the generalization taught us new things). The formalism followed the problems we were already trying to solve.

Every database introduction book introduces the SQL isolation levels as though they are something foundational and important and I think that is a misleading perspective: they are a handful of points that got picked because they are convenient and because they mapped onto implementation strategies and that's important to keep in mind when thinking about them.

Programming note. I've been curious at the thought of what a newsletter-guided book club would look like. Would you be interested in reading along with one of the following books, occasionally mailing in commentary?

- Extensible Query Optimizers in Practice,

- Feedback Control for Computer Systems: Introducing Control Theory to Enterprise Programmers,

- Concurrency Control and Recovery in Database Systems.

I have chosen these books because I think they are all aesthetically NULL BITMAP and because I've read most of all of them so I have a good sense of them. Two of them are freely available online and I found a used copy of the third online for $15.

Do any of the following apply to you?

- Would be interested in reading commentary on a book on a slower, week-by-week read,

- would be interested in contributing commentary on a book on a week-by-week basis,

- would be interested in participating in discussion groups on a book on a week-by-week basis,

- would be interested in connecting with other NULL BITMAP readers generally in this or some other way.

(I'm not sure any of that is something I actually want to pursue but I do want to gauge interest!)

Please either comment or reply to this email if this is something you would be interested in, which books sound more or less compelling, and what level of engagement sounds appealing.

Add a comment: