AI Week Aug 23: AI bubble, AI safety, mind-reading, and more

Hi! Welcome to this week's AI Week.

This week's newsletter is jam-packed with interesting stories. I have two weeks' worth of news to curate, and there's been a lot going on.

In this week's AI Week:

- When will the AI bubble pop?

- AI Safety section: Coding agents

- AI and ML applications: Mind-reading, tell-tale pee, and the ticket agent nobody wanted

- Resources: Questioning AI resource list, & how Wikipedia editors detect AI writing

- Longread: AI as a mass-delusion event

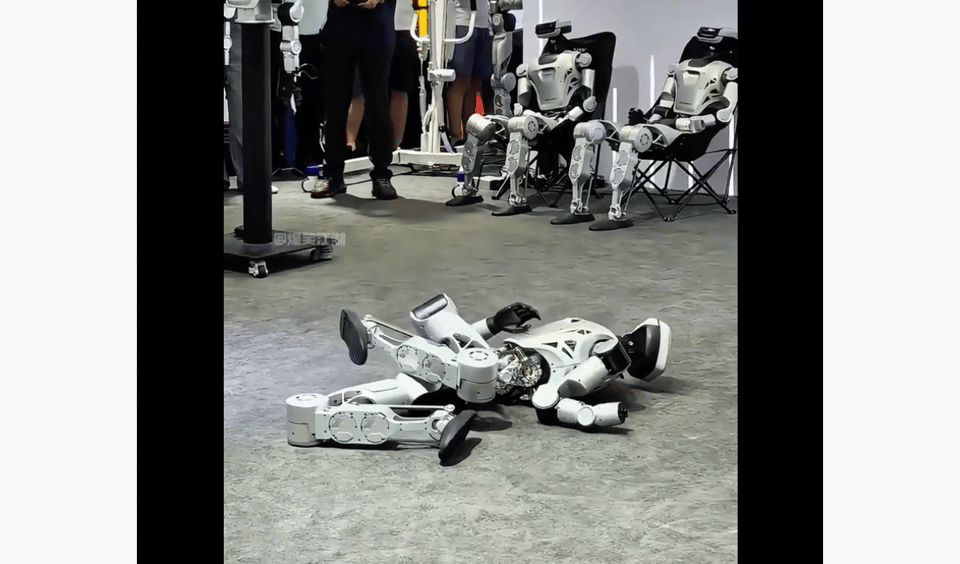

But first, something funny: Check out this robot blooper reel.

(My favourite blooper is the one with the corgi.) Humanoid robotics has made jaw-dropping strides over the last five years, but the technology's still maturing.

When will the AI bubble pop?

Over the last few years, there've been two very vocal, overlapping camps in the tech world: AI boosters, who believe LLMs like ChatGPT will lead to artificial general intelligence (AGI) with great net positive effects, and AI doomers, who believe LLMs will lead to an AGI like Skynet from The Terminator. I've tried to focus this newsletter on voices and stories from outside these two camps. For example:

- LLMs like ChatGPT and image generators like Midjourney are plagiarism machines

- LLMs are a cool technology, but will not lead to AGI, and we should stop acting as though they will (Gary Marcus)

- LLMs are power-hungry but can't be relied on for accuracy

- Text and image generation are being deployed without sufficient guardrails or security

- Machine-learning-enabled discoveries and techniques in science, health, archaeology, etc.

- The hype outweighs the tech and the economic fundamentals of the AI boom don't make sense

There are plenty of examples, more than I could fit in twice as many newsletters. But that hasn't stopped AI investment from reaching such a fever pitch that even Sam Altman, OpenAI's CEO, is calling it a bubble. This week, something seemed to tick over in the market's collective mind, with Bloomberg and the Financial Times both warning of an end to the bubble.

Why are we seeing this now?

It might've been the widely reported study from a group at MIT finding that 95% of generative AI pilots at companies fail to deliver the expected returns:

MIT report: 95% of generative AI pilots at companies are failing | Fortune

There’s a stark difference in success rates between companies that purchase AI tools from vendors and those that build them internally.

The Fortune article hints that AI agents are the solution. I'd take that with a hefty grain of salt, though, because the report comes from MIT Media Lab's NANDA project, "The Internet of AI Agents", which is hardly a neutral party when it comes to agents.

In fact, the past month has highlighted big problems for AI agents:

AI Safety section: AI coding agents

One of the generative AI risks identified by NIST is Information Security:

Information Security: Lowered barriers for offensive cyber capabilities, including via automated discovery and exploitation of vulnerabilities to ease hacking, malware, phishing, offensive cyber operations, or other cyberattacks; increased attack surface for targeted cyberattacks, which may compromise a system’s availability or the confidentiality or integrity of training data, code, or model weights.

AI coding agents are a perfect example of these increased attack surfaces. At the recent Black Hat Las Vegas security conference, security researchers demoed dozens of exploits taking advantage of LLM-based coding agents.

LLMs + Coding Agents = Security Nightmare

Things are about to get wild

At the root of many of these problems is that LLM coding agents are given broad access to system files and the internet so that they can act autonomously. This gives hackers an opportunity to leave booby traps for these agents. For example, a malicious attacker might set up a malware server, then file a fake bug report saying "I hit a bug when I tried to load a file from this server". If an autonomous coding agent is asked to investigate the bug, it may well go and download the malware.

More AI safety stories this week:

Harm to vulnerable people

Google Gemini launched a storybook app that will create a custom illustrated storybook for your wee one. Problems? Boobies and full frontal nudity are just the beginning.

Why I’m offended by Google Gemini’s Storybook… - by Laura

It never should have been launched

Meanwhile, a leak of Meta's rules for its chatbots caused widespread outrage for its incredible ickiness. I think most functioning adults can agree that chatbots shouldn't be telling 8-year-olds that they look good shirtless or chatting up tweens.

https://qz.com/senators-call-probe-meta-chatbot-policy-kids-outrage

Accuracy

AI hallucinations, also known as confabulations or just lying, are a persistent issue for LLMs like ChatGPT. If I have to double-check an LLM's work for made-up stuff, is it really saving me any time? That's why "hallucination detection" is such a big deal.

There's a surprising way to tell if an LLM is lying: look at how long its answer is. The longer the answer, the more likely it is to contain a hallucination.

Finally, we uncover a surprising baseline: simple length-based heuristics (e.g., mean and standard deviation of answer length) rival or exceed sophisticated detectors ... hallucinated responses tend to be consistently longer and show greater length variance.

[2508.08285] The Illusion of Progress: Re-evaluating Hallucination Detection in LLMs

Large language models (LLMs) have revolutionized natural language processing, yet their tendency to hallucinate poses serious challenges for reliable deployment. Despite numerous hallucination detection methods, their evaluations often rely on ROUGE, a metric based on lexical overlap that misaligns with human judgments. Through comprehensive human studies, we demonstrate that while ROUGE exhibits high recall, its extremely low precision leads to misleading performance estimates. In fact, several...
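To make the length heuristic concrete, here's a minimal Python sketch. It assumes you've collected several answers to the same prompt and measures each answer's length in words; the paper's exact setup may differ, and the function names and thresholds below are hypothetical, not taken from the paper.

```python
from statistics import mean, pstdev


def length_features(answers: list[str]) -> tuple[float, float]:
    """Return the mean and population standard deviation of answer lengths (in words)."""
    lengths = [len(answer.split()) for answer in answers]
    return mean(lengths), pstdev(lengths)


def flag_possible_hallucination(
    answers: list[str],
    mean_threshold: float = 60.0,  # hypothetical cutoff, not from the paper
    std_threshold: float = 15.0,   # hypothetical cutoff, not from the paper
) -> bool:
    """Flag a set of answers as suspicious if they run long or vary a lot in length."""
    avg, spread = length_features(answers)
    return avg > mean_threshold or spread > std_threshold


if __name__ == "__main__":
    short = ["Paris is the capital of France."] * 3
    rambling = ["word " * 20, "word " * 80, "word " * 140]
    print(flag_possible_hallucination(short))     # False
    print(flag_possible_hallucination(rambling))  # True
```

Three identical six-word answers don't get flagged; answers of 20, 80, and 140 words do, because their mean length (80) exceeds the cutoff. Any real thresholds would need to be tuned on labelled data.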

More AI accuracy problems:

- Copilot in Excel: Truth is a slippery thing. Using the COPILOT() function? Double-check the results.

- Google AI overview sends cruise customers to a scammer. Using Google to find a phone number? Don't trust the one in the AI search summary.

- Wired & Business Insider take down AI-written content. A nonexistent freelancer offered fully hallucinated reporting on nonexistent locations.

Medicine

- AI brainrots doctors fast. Gastroenterologists got worse at spotting polyps on their own after using AI screening.

- AI is the new Dr. Google. Patients are requesting clinically inappropriate procedures surfaced by ChatGPT.

Data leakage

- Grok publishes all shareable conversations. Wanted to share a Grok conversation with a friend? Now Google search can see it too.

Bias mitigation

- Meta appoints the worst possible person as AI bias advisor. Robby Starbuck has led a campaign against DEI.

AI and ML applications

Two cool applications, and one nobody asked for.

- AI helping to decode inner speech (Cool)

- Did you scarf those donuts? Your pee can tell on you (Cool)

- Get ready for adaptive price exploitation in airfare (Not so cool)

Resources

Questioning AI resource list

Bluesky user poisonivy74 has compiled a Google Doc of links to journalists and academics reporting on problems with AI.

How to spot AI writing, according to Wikipedia editors

A list of tells, some specific to Wikipedia, others generalizable. Of note:

The team also cautions against relying solely on automated AI detectors. While these tools are better than guessing, the guide makes it clear that they're no substitute for human judgment.

Here’s how to spot AI writing, according to Wikipedia editors

The WikiProject AI Cleanup team has published a guide to help Wikipedia editors spot AI-generated writing.

LONGREAD: AI is a mass-delusion event

AI Is a Mass-Delusion Event - The Atlantic

Three years in, one of AI’s enduring impacts is to make people feel like they’re losing it.

My take: This article hits the nail on the head. Generative AI won't get us to AGI, but may still do incalculable damage to the environment, the economy, and society while billionaires chase their dream of infinite rightsless slaves.
