AI week Aug 8th: Pre-vacation edition

Hi! I'm about to go on vacation for a week. That means no newsletter next Friday. I'll be in the woods by a lake, ignoring the news.

It also means that I have less time than usual to devote to this newsletter - Packing! Errands! Where's my swimsuit! - but it's still packed, because this week has been brimful with interesting stories.

A reasonable person might say this isn't the best time to kick off a new newsletter section. Too bad, because in this week's AI week, I'm going to introduce a new AI safety section.

Each newsletter will have a couple of paragraphs on an AI risk or governance issue. And yes! There will be examples!

In this week's AI week:

- ChatGPT news

- On taking medical advice from LLMs

- What's wrong with social media?

- Linkdump

- AI Safety: Duelling policies

- Longread: Why this ex-hacker's an AI hater

Also new this week: Comments enabled! Come on over to the website to share your thoughs.

ChatGPT news

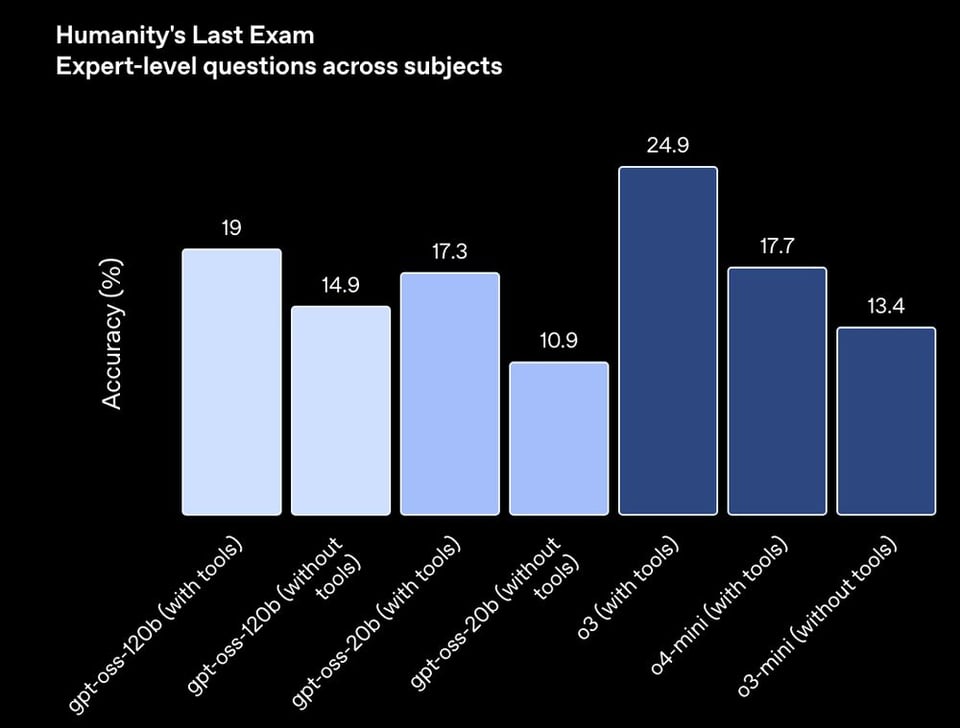

A couple of open source chatgpt models dropped this week. The larger of the two laptoppable models is comparable to previous model o4-mini:

I say "previous" because !! Big News !! OpenAI released GPT-5, and PSA: when GPT-5 rolls out to a consumer account, the prior models vanish.

So far, GPT5 users say:

- GPT5 loves to call tools (eg internet search, image gen)

- It can work for hours without input

- It's better at coding

- However, it still has trouble counting letters.

A few users have noticed that ChatGPT 5 will confidently assert that "Blueberry" has 3 b's.

Source: https://kieranhealy.org/blog/archives/2025/08/07/blueberry-hill/

Source: https://kieranhealy.org/blog/archives/2025/08/07/blueberry-hill/

The middle double-B is easy to miss if you just glance at the word.

On taking medical advice from LLMs

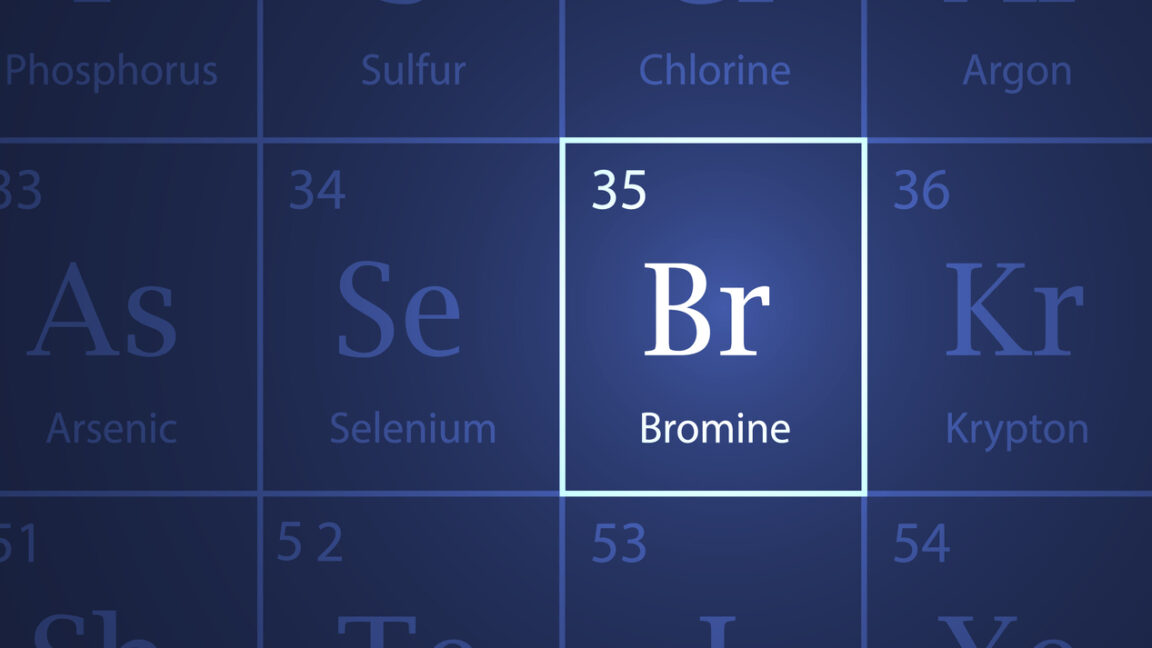

This week, Ars Technica reported on a man who gave himself a rarely-seen illness by taking health advice from a previous version of ChatGPT. On the LLM's advice, he swapped out his salt (sodium chloride) for pool chemicals (sodium bromide) and gave himself bromism.

After using ChatGPT, man swaps his salt for sodium bromide—and suffers psychosis - Ars Technica

Literal “hallucinations” were the result.

More recent versions of ChatGPT don't seem to advise people to take pool salts any more, but the problem of confabulation (LLMs making stuff up, aka "hallucinations") isn't limited to bromism. Nor is it limited to consumer-oriented chatbots.

The Verge has an excellent, thoughtful article about the time Google's healthcare LLM, MedGemini, hallucinated a brain structure, and what that means for healthcare professionals' ability to use LLMs safely.

https://www.theverge.com/health/718049/google-med-gemini-basilar-ganglia-paper-typo-hallucinationRelatedly, ChatGPT and other chatbots are terrible therapists (link goes to Youtube) and Illinois is blocking AI therapy.

https://www.axios.com/local/chicago/2025/08/06/illinois-ai-therapy-ban-mental-health-regulationWhat's wrong with social media?

What's wrong with social media? The answer might be: social media.

In this paper, a bunch of LLMs interacting on minimalist social networks fell into the same negative patterns that humans do:

We create a minimal platform where agents can post, repost, and follow others. We find that the resulting following-networks reproduce three well-documented dysfunctions: (1) partisan echo chambers; (2) concentrated influence among a small elite; and (3) the amplification of polarized voices - creating a 'social media prism' that distorts political discourse.

None of the fixes the authors tried made anything better. They suggest that the problem might be the whole concept of post/repost/follow:

These results suggest that core dysfunctions may be rooted in the feedback between reactive engagement and network growth, raising the possibility that meaningful reform will require rethinking the foundational dynamics of platform architecture.

Of course, the problem might also be that the LLMs behind these agents were trained on the internet, including the toxic social media we already have.

If so, that suggests that any future social media we can create may be doomed to slide into toxicity once the bots arrive.

Linkdump

- Who is Elara Voss? Variations on this name turn up in LLM output with surprising frequency. Who is she and why do LLMs love her?

- The people who marry their AI chatbots

- A poisoned calendar invite is all it takes to hack Gemini's AI agent Security researchers caused smarthome and computer chaos with a single GCal invite.

- Deliver us from evil More on Anthropic's paper on AI Evil last week. This article focuses on identifying and removing the training data, and nodes, behind misalignment.

AI Safety: Duelling policies

The US and China recently released two very different AI policies at nearly the same time. The White House's Action Plan is deregulation-first, rolling back Biden-era safeguards. In contrast, China's Governance Plan has been described as positioning China as the champion of trustworthy AI.

Removal of safeguards doesn't mean that the risk has gone away. So for the next several weeks, starting next week, this section of AI Week will look at one of the AI risks identified by the US National Institute of Standards and Technology (NIST) during Biden's presidency.

I'm in Canada, and our AI governance is a bit up in the air. A proposed bill died when our Parliament went on recess. In the meantime, we have a voluntary code of conduct that covers accountability, safety, fairness and equity, transparency, human oversight and monitoring, validity, and robustness.

What you get without safeguards: Nonconsensual topless pics nobody asked for

Grok generates fake Taylor Swift nudes without being asked - Ars Technica

Elon Musk so far has only encouraged X users to share Grok creations.

Longread

If you're only going to read one AI article this week, give this one a shot:

https://malwaretech.com/2025/08/every-reason-why-i-hate-ai.htmlIt's by a hacker. Sorry, ex-hacker. Marcus Hutchins is a Threat Intelligence Analyst, known for stopping the 2017 WannaCry ransomware worm.

The reason I’m not diving head first into everything AI isn’t because I fear it or don’t understand it, it’s because I’ve already long since come to my conclusion about the technology. I’m neither of the opinion that it’s completely useless or revolutionary, simply that the game being played is one I neither currently need nor want to be a part of.

If you're worried about being left behind, writes Marcus, don't be. "I’d make a strong argument that what you shouldn’t be doing is ‘learning’ to do everything with AI. What you should be doing is learning regular skills. Being a domain expert prompting an LLM badly is going to give you infinitely better results than a layperson with a ‘World’s Best Prompt Engineer’ mug."

Give it a read here.

Add a comment: