[AINews] OpenAI beats Anthropic to releasing Speculative Decoding

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

Prompt lookup is all you need.

AI News for 11/1/2024-11/4/2024. We checked 7 subreddits, 433 Twitters and 30 Discords (216 channels, and 7073 messages) for you. Estimated reading time saved (at 200wpm): 766 minutes. You can now tag @smol_ai for AINews discussions!

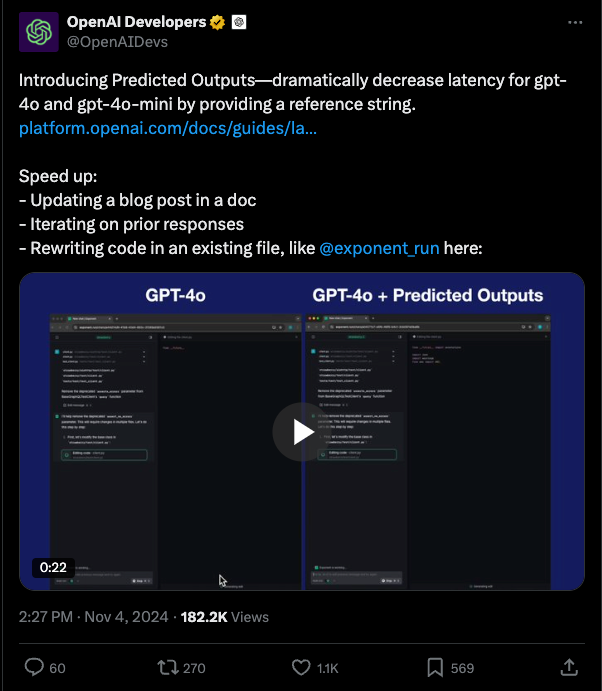

Ever since the original Speculative Decoding paper (and variants like Hydra and Medusa), the community has been in a race to deploy it. In May, Cursor and Fireworks announced their >1000tok/s fast apply model (our coverage here, Fireworks technical post here - note this issue initially went out with a factual error stating that the speculative decoding API was not released, as this post explains, Fireworks released a spec decoding API 5 months ago.). In August, Zed teased Anthropic's new Fast Edit mode. But Anthropic's API was not released... leaving room for OpenAI to come in swinging:

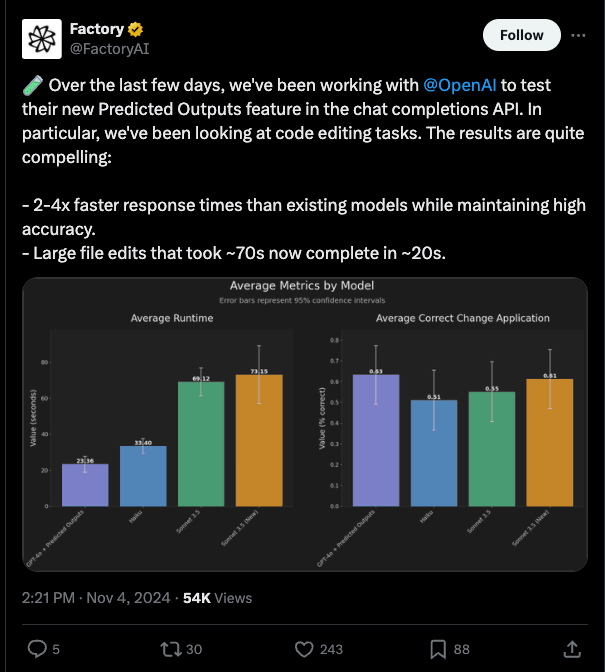

Factory AI reports much faster response times and file edits:

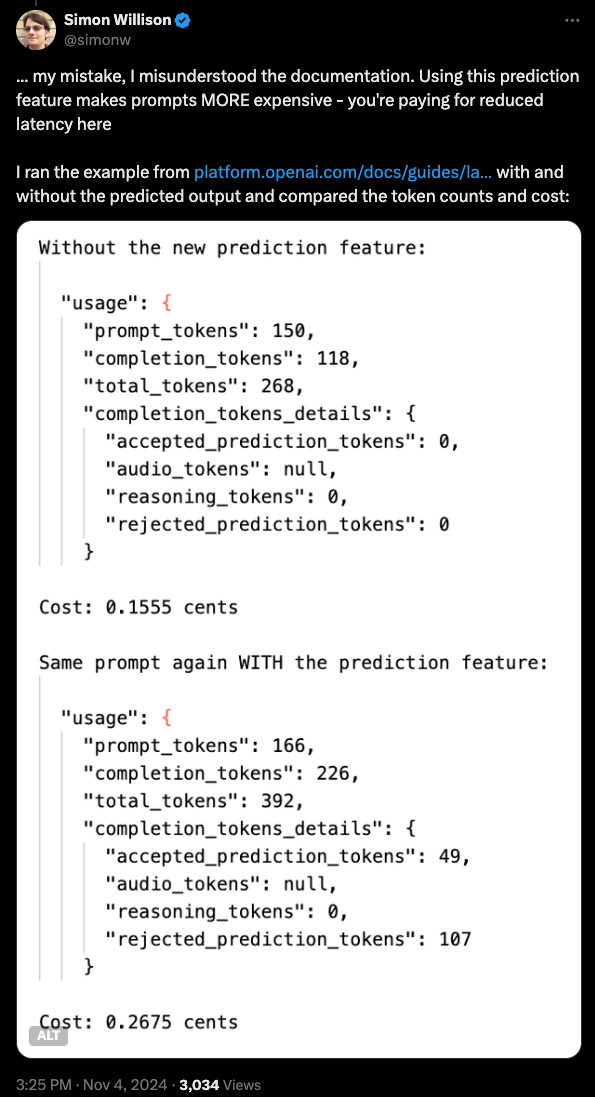

what this extra processing of draft tokens will cost is a bit more vague (subject to a 32 token match), but you can take ~50% as a nice rule of thumb.

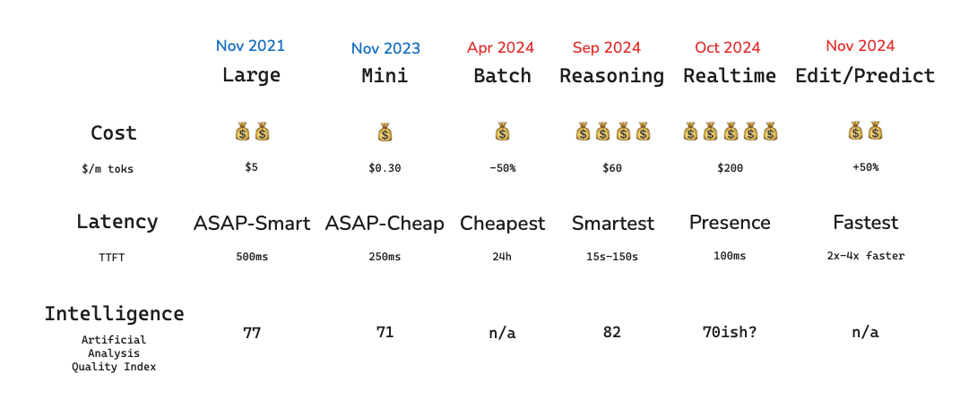

As we analyze on this week's Latent.Space post, this slots nicely in to the wealth of options that have developed that match AI Engineer usecases:

The Table of Contents and Channel Summaries have been moved to the web version of this email: !

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

AI Technology and Industry Updates

- Major Company Developments: @adcock_brett highlighted significant progress from multiple companies including Etched, Runway, Figure, NVIDIA, OpenAI, Anthropic, Microsoft, Boston Dynamics, ElevenLabs, Osmo, Physical Intelligence, and Meta.

- Model & Infrastructure Updates:

- @vikhyatk announced CPU inference capabilities now available locally

- @dair_ai shared top ML papers covering MrT5, SimpleQA, Multimodal RAG, and LLM geometry concepts

- @rasbt published an article explaining two main approaches to Multimodal LLMs

- @LangChainAI demonstrated RAG Agents with LLMs using NVIDIA course materials

- Product Launches & Features:

- @c_valenzuelab discussed new ways of generating content beyond prompts, including Advanced Camera Controls

- @adcock_brett reported on Etched and DecartAI's Oasis, the first playable fully AI-generated Minecraft game

- @bindureddy showcased AI Engineer building custom AI agents with RAG from English prompts

- Research & Technical Insights:

- @teortaxesTex discussed architecture work in LLMs focusing on inference economics

- @svpino emphasized importance of understanding fundamentals: neural networks, loss functions, optimization techniques

- @c_valenzuelab detailed challenges in AI research labs regarding bureaucracy and resource allocation

Industry Commentary & Culture

- Career & Development: @c_valenzuelab highlighted frustrations in AI research labs including bureaucracy, approval delays, and resource allocation issues

- Technical Discussions: @teortaxesTex discussed AI safety concerns and governance implications

- @DavidSHolz shared an amusing AI safety analogy through a Starcraft game experience

Humor & Memes

- @fchollet joked about AGI vs swipe keyboard accuracy

- @svpino commented on the peaceful nature of the butterfly app

- @vikhyatk made satirical comments about AI access and providers

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. Hertz-Dev: First Open-Source Real-Time Audio Model with 120ms Latency

- 🚀 Analyzed the latency of various TTS models across different input lengths, ranging from 5 to 200 words! (Score: 114, Comments: 24): A performance analysis of text-to-speech (TTS) models measured latency across inputs from 5 to 200 words, revealing that Coqui TTS consistently outperformed other models with an average inference time of 0.8 seconds for short phrases and 2.1 seconds for longer passages. The study also found that Microsoft Azure and Amazon Polly exhibited linear latency scaling with input length, while open-source models showed more variable performance patterns especially beyond 100-word inputs.

- Hertz-Dev: An Open-Source 8.5B Audio Model for Real-Time Conversational AI with 80ms Theoretical and 120ms Real-World Latency on a Single RTX 4090 (Score: 591, Comments: 78): Hertz-Dev, an open-source 8.5B parameter audio model, achieves 120ms real-world latency and 80ms theoretical latency for conversational AI running on a single RTX 4090 GPU. The model appears to be designed for real-time audio processing, though no specific implementation details or benchmarking methodology were provided in the post.

- A 70B parameter version of Hertz is currently in training, with plans to expand to more modalities. Running this larger model will likely require H100 GPUs, though some suggest quantization could help reduce hardware requirements.

- Discussion around open source status emerged, with users noting that only weights and inference code are released (like Llama, Gemma, Mistral). Notable exceptions of fully open source models include Olmo and AMD's 1B model.

- The model has a 17-minute context window and could theoretically be fine-tuned like other transformers using audio datasets. Real-world latency (120ms) outperforms GPT-4o (320ms average) and approaches human conversation gaps (200-250ms).

Theme 2. Voice Cloning Advances: F5-TTS vs RVC vs XTTS2

- Speaking with your local LLM with a key press on Linux. Speech to text into any window, most desktop environments. Two hotkeys are the UI. (Score: 51, Comments: 1): The BlahST tool enables Linux users to perform local speech-to-text and LLM interactions using hotkeys, leveraging whisper.cpp, llama.cpp (or llamafile), and Piper TTS without requiring Python or JavaScript. Running on a system with Ryzen CPU and RTX3060 GPU, the tool achieves ~34 tokens/second with gemma-9b-Q6 model and 90x real-time speech inference, while maintaining low system resource usage and supporting multilingual capabilities including Chinese translation.

- Best Open Source Voice Cloning if you have lots of reference audio? (Score: 71, Comments: 18): For voice cloning with 10-20 minutes of reference audio per character, the author seeks alternatives to ElevenLabs and F5-TTS for self-hosted deployment. The post specifically requests solutions that allow pre-training models on individual characters for later inference, moving away from few-shot learning approaches like F5-TTS which are optimized for minimal reference audio.

- RVC (Retrieval-based Voice Conversion) performs well but requires input audio for conversion. When combined with XTTS-2, results can be mixed due to TTS artifacts, though the process is simplified using alltalk-tts beta.

- Fine-tuning F5-TTS produced high-quality results with fast inference using lpscr's implementation, achieving quality comparable to ElevenLabs. The process is now integrated into the main repo via

f5-tts_finetune-gradiocommand. - GPT-SoVITS, MaskCGT, and OpenVoice were mentioned as strong alternatives. MaskCGT leads in zero-shot performance, while GPT-SoVITS was cited as surpassing fine-tuned XTTS2 or F5 reference voice cloning.

Theme 3. Token Management and Model Optimization Techniques

- MMLU-Pro scores of small models (<5B) (Score: 166, Comments: 49): MMLU-Pro benchmark testing with 10 multiple choice options per question establishes a 10% baseline score for random guessing. The test evaluates performance of language models under 5B parameters.

- tips for dealing with unused tokens? keeps getting clogged (Score: 150, Comments: 29): Token usage optimization in LLMs requires managing both input and output tokens to prevent memory clogs and inefficient processing. The post appears to be asking for advice but lacks specific details about the actual problem being encountered with unused tokens or the LLM system being used.

- A significant implementation of KV cache eviction for unused token removal is being developed in VLLM, available at GitHub. This approach differs from compression methods used in llama.cpp or exllama.

- The community referenced research papers including DumpSTAR and DOCUSATE for token analysis, with token expiration methods being proposed since 2021.

- Many users expressed confusion about the post's context, with the top comment noting that responses were polarized between those who fully understood and those completely lost in the technical discussion.

Theme 4. MG²: New Melody-First Music Generation Architecture

- MG²: Melody Is All You Need For Music Generation (Score: 57, Comments: 9): MG², a new music generation model trained on a 500,000 sample dataset, focuses exclusively on melody generation while disregarding other musical elements like harmony and rhythm. The model demonstrates that melody alone contains sufficient information for high-quality music generation, challenging the conventional approach of incorporating multiple musical components, and achieves comparable results to more complex models while using significantly fewer parameters. The research introduces a novel self-attention mechanism specifically designed for melody processing, enabling the model to capture long-range dependencies in musical sequences while maintaining computational efficiency.

- The MusicSet dataset is now available on HuggingFace, containing 500k samples of high-quality music waveforms with descriptions and unique melodies. Users expressed enthusiasm about the dataset's release for advancing music generation research.

- Multiple users found the model's sample outputs underwhelming, with specific concerns about the lack of melody input functionality. One user specifically wanted the ability to transform hummed melodies into cinematic pieces.

- Discussion of videogame music generation highlighted interest in creating soundtracks similar to classics like Mega Man and Donkey Kong Country, with Suno mentioned as a current solution for game music generation with copyright considerations.

Other AI Subreddit Recap

r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity

AI Model Performance and Benchmarks

- SimpleBench reveals gap between human and AI reasoning: Human baseline achieves 83.7% while top models like o1-preview (41.7%) and 3.6 Sonnet (41.4%) struggle with basic reasoning tasks, highlighting limitations in spatial-temporal reasoning and social intelligence /r/singularity.

- Key comment notes that models derive world understanding from language, while humans build language on top of learned world models.

AI Security and Infrastructure

- Critical security vulnerabilities in Nvidia GeForce GPUs: All Nvidia GeForce GPU users urged to update drivers immediately due to discovered security flaws /r/StableDiffusion.

AI Image Generation Progress

- FLUX.1-schnell model released with free unlimited generations: New website launched offering unrestricted access to FLUX.1-schnell image generation capabilities /r/StableDiffusion.

- Historical AI image generation progress: Visual comparison showing dramatic improvement in AI image generation from 2015 to 2024, with detailed discussion of early DeepDream to modern models /r/singularity.

AI Ethics and Society

- Yuval Noah Harari warns of AI-driven reality distortion: Discussion of potential societal impacts of AI creating immersive but potentially deceptive digital environments /r/singularity.

Memes and Humor

- AI-generated anime realism: Highly upvoted post (942 score) showcasing realistic anime-style AI generation /r/StableDiffusion.

- AI security camera humor: Video of security camera system in South Africa /r/singularity.

AI Discord Recap

A summary of Summaries of Summaries by O1-preview

Theme 1. Major LLM Releases and Model Updates

- Claude 3.5 Haiku Drops Like a Hot Mixtape: Anthropic released Claude 3.5 Haiku, boasting better benchmarks but hitting users with a 4x price hike. The community debates whether the performance boost justifies the increased cost.

- OpenAI's O1 Model Teases and Vanishes: Users briefly accessed the elusive O1 model via a URL tweak, experiencing advanced features before OpenAI pulled the plug. Speculation abounds about the model's capabilities and official release plans.

- Free Llama 3.2 Models Light Up OpenRouter: OpenRouter now offers free access to Llama 3.2 models, including 11B and 90B variants with improved speeds. Users are thrilled with the 11B variant hitting 900 tps.

Theme 2. AI Advancements in Security and Medicine

- Llama 3.1 Outsmarts GPT-4o in Hacking Benchmark: The new PentestGPT benchmark shows Llama 3.1 outperforming GPT-4o in automated penetration testing. Both models still need improvements in cybersecurity applications.

- MDAgents by Google Aims to Doctor Up Medical Decisions: Google launched MDAgents, an adaptive collaboration of LLMs enhancing medical decision-making. Models like UltraMedical and FEDKIM are set to revolutionize healthcare AI.

- Aloe Beta Sprouts as Open Healthcare LLMs: Aloe Beta, fine-tuned using axolotl, brings a suite of open healthcare LLMs to the table. This marks a significant advancement in AI-driven healthcare solutions.

Theme 3. LLM Fine-Tuning and Performance Struggles

- Unsloth AI Kicks Fine-Tuning into Hyperdrive: Unsloth AI accelerates model fine-tuning nearly 2x faster using LoRA, slashing VRAM usage. Users share strategies for refining models efficiently.

- Hermes 405b Crawls While Others Sprint: Hermes 405b users report glacial response times and errors, sparking frustration. Speculation about rate limiting and API issues circulates as users seek alternatives.

- LM Studio's Mixed GPU Support a Mixed Blessing: LM Studio supports mixed AMD and Nvidia GPU setups, but performance takes a hit due to reliance on Vulkan. For best results, sticking to identical GPUs is recommended.

Theme 4. AI Takes on Gaming and Creative Endeavors

- Oasis Game Opens a New Frontier with AI Worlds: Oasis, the first fully AI-generated game, lets players explore real-time, interactive environments. No traditional game engine here—just pure AI magic.

- Open Interpreter Sparks Joy in AI Enthusiasts: Open Interpreter gains popularity as users integrate it with tools like Screenpipe for AI tasks. Voice capabilities and local recording elevate user experiences.

- AI Podcasts Hit Static, Users Demand Better Sound: NotebookLM users report quality issues in AI-generated podcasts, with random breaks and odd sounds. Calls for robust audio processing and stability improvements grow louder.

Theme 5. AI Ethics, Censorship, and the Community's Voice

- Jailbreakers Debate the True Freedom of LLMs: Users question if jailbreaking LLMs truly liberates models or just adds constraints. The community dives deep into motivations and mechanics behind jailbreaks.

- Censorship Overload Makes Models Mute: Phi-3.5's heavy censorship frustrates users, leading to humorous mock responses and efforts to uncensor the model. An uncensored version pops up on Hugging Face.

- AI Search Engines Compete, Users Left Searching: OpenAI's new SearchGPT underwhelms users seeking real-time results, while alternatives like Perplexity gain praise. The AI search wars are heating up, but not all users are impressed.

PART 1: High level Discord summaries

HuggingFace Discord

-

LLM Fine-tuning and Performance Enhancements: Neuralink achieved a 2x speedup in their code performance, reaching tp=2 with 980 FLOPs, while Llama 3.1 outperformed GPT-4o using the PentestGPT tool, as detailed in the Penetration Testing Benchmark paper.

- Additionally, Gemma 2B surpassed T5xxl on specific tasks with reduced VRAM usage, and discussions highlighted the challenges in MAE finetuning due to deprecation issues.

- AI-driven Cyber Security Models and Benchmarks: A new benchmark, PentestGPT, revealed that Llama 3.1 outperformed GPT-4o in automated penetration testing, indicating both models need improvements in cybersecurity applications as per the recent study.

- There is also a growing interest in developing AI models for malware detection, with community members seeking guidance on implementation strategies.

- AI Development Tools and Environments: The release of VividNode v1.6.0 introduced support for edge-tts and enhanced image generation via GPT4Free, alongside a new ROS2 Docker environment compatible with Ubuntu and Apple Silicon macOS.

- ShellCheck, a static analysis tool for shell scripts, was also highlighted for improving script reliability, as discussed in the GitHub repository.

- Advancements in Quantum Computing for AI: Researchers demonstrated a 1400-second coherence time for a Schrödinger-cat state in $^{173}$Yb atoms, marking a significant breakthrough in quantum metrology discussed in the arXiv paper.

- Additionally, a novel magneto-optic memory chip design promises reduced energy consumption for AI computing, as reported in Nature Photonics.

- AI Applications in Medical Decision-Making: Google launched MDAgents, an adaptive collaboration of LLMs for enhancing medical decision-making, featuring models like UltraMedical and FEDKIM, as highlighted in a recent Medical AI post.

- These innovations aim to streamline medical processes and improve diagnostic accuracy through advanced AI models.

Unsloth AI (Daniel Han) Discord

-

Unsloth AI Accelerates Model Fine-tuning: The Unsloth AI project enables users to fine-tune models like Mistral and Llama nearly 2x faster by utilizing LoRA, reducing VRAM consumption significantly.

- Users discussed strategies such as iteratively refining smaller models before scaling and emphasized the importance of dataset size and quality for effective training.

- Python 3.11 Boosts Performance Across OS: Upgrading to Python 3.11 can yield performance improvements, offering ~1.25x speed on Linux, 1.2x on Mac, and 1.12x on Windows systems, as highlighted in a tweet.

- Concerns were raised about package compatibility during Python upgrades, highlighting the complexities involved in maintaining software stability.

- Efficient Model Inference via Quantization: Discussion centered on the feasibility of fine-tuning quantized models such as Qwen 2.5 72b, aiming for reduced memory usage and satisfactory inference speeds on CPUs.

- It was noted that while model quantization facilitates lightweight deployment, initial training still requires significant computational resources.

- Optimal Web Frameworks for LLM Integration: Recommendations for integrating language models into web applications included frameworks like React and Svelte for frontend development.

- Flask was suggested for developers preferring Python-based solutions to build model interfaces.

- Enhancing Git Practices in Unsloth Repository: The absence of a .gitignore file in the Unsloth repository was highlighted, stressing the importance of managing files before pushing changes.

- Users shared insights on maintaining clean git histories through effective usage of git commands and local exclusion configurations.

OpenRouter (Alex Atallah) Discord

-

Claude 3.5 Haiku Launches in Trio: Anthropic has released Claude 3.5 Haiku in standard, self-moderated, and dated variants. Explore the versions at Claude 3.5 Overview.

- Users can access the different variants through standard, dated, and beta releases, facilitating seamless updates and testing.

- Free Llama 3.2 Models Now Accessible: Llama 3.2 models are available for free via OpenRouter, featuring 11B and 90B variants with improved speeds. Access them at 11B variant and 90B variant.

- The 11B variant offers a performance of 900 tps, while the 90B variant delivers 280 tps, catering to diverse application needs.

- Hermes 405b Encounters Latency Issues: The free version of Hermes 405b is experiencing significant latency and access errors for many users.

- Speculations suggest that the issues might be due to rate limiting or temporary outages, although some users still receive intermittent responses.

- API Rate Limits Cause User Confusion: Users are hitting rate limits on models such as ChatGPT-4o-latest, leading to confusion between GPT-4o and ChatGPT-4o versions on OpenRouter.

- The unclear differentiation between model titles has resulted in mixed user experiences and uncertainties regarding rate limit policies.

- Haiku Pricing Increases Spark Concerns: The pricing for Claude 3.5 Haiku has risen significantly, raising concerns about its affordability among the user base.

- Users are frustrated by the increased costs, especially when comparing Haiku to alternatives like Gemini Flash, questioning its future viability.

LM Studio Discord

-

LM Studio's Mixed GPU Support: Users confirmed that LM Studio supports mixed use of AMD and Nvidia GPUs, though performance may be constrained due to reliance on Vulkan.

- For optimal results, utilizing identical Nvidia cards is recommended over mixed GPU setups.

- Embedding Model Limitations in LM Studio: It was noted that not all models are suitable for embeddings; specifically, Gemma 2 9B is incompatible with LM Studio for this purpose.

- Users are advised to select appropriate embedding models to prevent runtime errors.

- Structured Output Challenges in LLMs: Users are encountering difficulties in enforcing structured output formats, resulting in extraneous text.

- Suggestions include enhancing prompt engineering and utilizing Pydantic classes to improve output precision.

- Using Python for LLM Integration: Discussions focused on implementing code snippets to build custom UIs and functionalities with various language models from Hugging Face.

- Participants highlighted the flexibility of employing multiple models interchangeably to handle diverse tasks.

- LM Studio Performance on Various Hardware: Users reported varying performance metrics when running LLMs via LM Studio on different hardware setups, with some experiencing delays in token generation.

- Concerns were raised about context management and hardware limitations contributing to these performance issues.

Nous Research AI Discord

-

Hermes 405b Model Performance: Users reported slow response times and intermittent errors when using the Hermes 405b model via Lambda and OpenRouter, with some suggesting it felt 'glacial' in performance.

- The free API on Lambda especially showed inconsistent availability, causing frustration among users trying to access the model.

- Jailbreaking LLMs: A member questioned whether jailbreaking LLMs is more about creating constraints to free them, sparking a lively discussion about the motivations and implications of such practices.

- One participant highlighted that many adept at jailbreaking might not fully understand LLM mechanics beneath the surface.

- MDAgents: Google introduced MDAgents, showcasing an Adaptive Collaboration of LLMs aimed at enhancing medical decision-making.

- This week's podcast summarizing the paper emphasizes the importance of collaboration among models in tackling complex healthcare challenges.

- Future of Nous Research Models: Teknium stated that Nous Research will not create any closed-source models, but some other offerings may remain private or contract-based for certain use cases.

- The Hermes series will always remain open source, ensuring transparency in its development.

- AI Search Engine Performance: Users lamented that OpenAI's new search isn't delivering real-time results, especially in comparison to platforms like Bing and Google.

- One noted that Perplexity excels in search result quality and could be seen as superior to both Bing and OpenAI's offerings.

Perplexity AI Discord

-

Claude's 3.5 Haiku Enhances AI Palette: The rollout of Claude 3.5 Haiku across platforms like Amazon Bedrock and Google's Vertex AI has raised concerns regarding its new pricing structure among users.

- Despite cost increases, Claude 3.5 Haiku retains robust conversational capabilities, though its value proposition remains debated against existing tools.

- SearchGPT: OpenAI's Answer to Traditional Search: The introduction of SearchGPT offers a suite of new AI-powered search functionalities aimed at competing with established search engines.

- This launch has sparked discussions regarding the evolving role of AI in information retrieval and the future dynamics of search technology.

- China's Llama AI Model Advances Military AI: Reports indicate that the Chinese military is developing a Llama-based AI model, signifying a strategic move in defense AI applications.

- This development intensifies global conversations about AI's role in military advancements and international tech competition in defense sectors.

Eleuther Discord

-

Gradient Dualization Influences Model Training Dynamics: Discussions highlighted the role of gradient dualization in training deep learning models, focusing on how norms affect model performance as architectures scale.

- Participants explored the impact of alternating between parallel and sequential attention mechanisms, referencing the paper Preconditioned Spectral Descent for Deep Learning.

- Optimizer Parameter Tuning Mirrors Adafactor Schedules: Members analyzed changes to the Adafactor schedule, noting its similarity to adding another hyperparameter and questioning the innovation behind these adjustments.

- The optimal beta2 schedule was found to resemble Adafactor's existing configuration, as discussed in Quasi-hyperbolic momentum and Adam for deep learning.

- Best Practices for Configuring GPT-NeoX: Engineers are utilizing Hypster for configurations and integrating MLFlow for experiment tracking in their GPT-NeoX setups.

- A member inquired about the prevalence of using DagsHub, MLFlow, and Hydra together, referencing the lm-evaluation-harness.

- DINOv2 Enhances ImageNet Pretraining with Expanded Data: DINOv2 leverages 22k ImageNet data, resulting in improved evaluation metrics compared to the previous 1k dataset.

- The DINOv2 paper demonstrated enhanced performance metrics, emphasizing effective distillation techniques.

- Trade-offs Between Scaling and Depth in Neural Networks: The community discussed the balance between depth and width in neural network architectures, analyzing how each dimension impacts overall performance.

- Empirical validations were suggested to confirm the theory that as models scale, the dynamics between network depth and width become increasingly aligned.

aider (Paul Gauthier) Discord

-

Claude 3.5 Haiku Gains on Leaderboard: Claude 3.5 Haiku achieved a 75% score on Aider's Code Editing Leaderboard, positioning just behind the previous Sonnet 06/20 model.

- This marks Haiku as a cost-efficient alternative, closely matching Sonnet's capabilities in code editing tasks.

- Aider v0.62.0 Integrates Claude 3.5 Haiku: The latest Aider v0.62.0 release now fully supports Claude 3.5 Haiku, enabling activation via

--haikuflag.

- Additionally, it introduces features to apply edits from web apps like ChatGPT, enhancing developer workflow.

- Comprehensive AI Model Comparisons: Discussions highlighted Sonnet 3.5 for its superior quality, while Haiku 3.5 is acknowledged as a robust but slower contender.

- Community members compared coding capabilities across models and shared real-world application experiences.

- Benchmark Performance Insights: Sonnet 3.5 outperforms other models in benchmark results shared by Paul G, whereas Haiku 3.5 lags in efficiency.

- There's significant interest in mixed model combinations, such as Sonnet and Haiku, for diverse task performance.

- OpenAI's Strategic o1 Leak: Users observed that OpenAI's leak of o1 seems deliberate to build anticipation for future releases.

- Referencing Sam Altman's past tactics, such as imagery like strawberries and Orion starry sky, to generate interest.

OpenAI Discord

-

GPT-4o Enhances Reasoning with Canvas Features: The next version of GPT-4o is rolling out, introducing advanced reasoning capabilities similar to O1. This update includes features like placing large text blocks in a canvas-style box, enhancing usability for reasoning tasks.

- Users expressed excitement upon gaining full access to O1, pointing out the significant improvements in reasoning efficiency. This upgrade aims to streamline complex task executions for AI Engineers.

- OpenAI's Orion Project Sparks Anticipation: OpenAI's Orion project has members excited for forthcoming AI innovations expected by 2025. Discussions highlight that while Orion is underway, current models like O1 are not yet classified as true AGI.

- Conversations emphasize the Orion project's potential to bridge the gap between current capabilities and AGI, with members eagerly anticipating major breakthroughs in the near future.

- Adoption of Middleware in OpenAI Integrations: Members are exploring the use of middleware products that route requests to different endpoints instead of directly connecting to OpenAI. This approach is being debated to assess its normalcy and effectiveness.

- One member questioned if using middleware is a standard pattern, seeking insights from the community on best practices for integrating API endpoints with OpenAI's services.

- Enhancing Prompt Measurement with Analytics Tools: Discussions are focused on prompt measurement tools for tracking user interactions, including frustration levels and task completion rates. Members suggested utilizing sentiment analysis to gain deeper insights.

- Implementers are considering directing LLMs to process conversational data, aiming to refine prompt effectiveness and improve overall user satisfaction through data-driven adjustments.

- Automating Task Planning Using LLMs: Participants are seeking resources for automating complex task planning with LLMs, specifically for generating SQL queries and conducting data analysis. Clarity in requirements is emphasized for effective automation.

- Contributors highlighted the potential of models to assist in brainstorming sessions and streamline the planning process, underlining the importance of precise query formulation.

Notebook LM Discord Discord

-

NotebookLM Language Configuration Challenges: Users reported difficulties configuring NotebookLM to respond in their preferred languages, especially when uploading documents in different languages. Instructions emphasized that NotebookLM defaults to the language set in the user's Google account.

- This has sparked discussions on enhancing language support to accommodate a more diverse user base.

- Podcast Generation Quality Issues: Multiple users flagged concerns about the quality of NotebookLM podcasts, noting unexpected breaks and random sounds during playback. While some found the interruptions entertaining, others expressed frustration over the negative impact on the listening experience.

- These quality issues are leading to calls for more robust audio processing and stability improvements.

- API Development Speculations: Discussions arose around the potential development of an API for NotebookLM, influenced by a tweet from Vicente Silveira hinting at upcoming API features.

- Despite the community's interest, no official announcements have been made, leaving room for speculation based on industry trends.

- Audio Overview Feature Requests: A user inquired about generating multiple audio overviews from a dense 200-page PDF using NotebookLM, contemplating the labor-intensive method of splitting the document into smaller parts. Suggestions included submitting feature requests for generating audio overviews from single sources.

- This reflects a demand for more efficient summarization tools within NotebookLM to handle large documents seamlessly.

- Special Needs Use Cases Expansion: Members shared experiences using NotebookLM for special needs students and sought use cases or success stories to support a pitch to Google's accessibility team. Plans to build a collective in the UK were also discussed.

- These efforts highlight the community's initiative to leverage NotebookLM for enhancing educational accessibility.

GPU MODE Discord

-

Triton Kernel Optimization: Members discussed the complexities of optimizing Triton kernels, particularly the GPU's waiting time for CPU operations, and suggested that stacking multiple Triton matmul operations could reduce overhead for larger matrices.

- The conversation emphasized strategies to improve performance by minimizing CPU-GPU synchronization delays, with members considering various approaches to enhance kernel efficiency.

- FP8 Quantization Techniques: A user shared their experience with FP8 quantization methods, noting surprising speed improvements compared to pure PyTorch implementations, and provided a link to their GitHub repository.

- The discussion highlighted challenges in dynamically quantizing activations efficiently, referencing the flux-fp8-api as a resource for implementing these techniques.

- LLM Inference on ARM CPUs: LLM inference on ARM CPUs like NVIDIA's Grace and AWS's Graviton was explored, with discussions on handling larger models up to 70B and referencing the torchchat repository.

- Participants noted that clustering multiple ARM SBCs equipped with Ampere Altra processors can yield effective performance, especially when utilizing tensor parallelism to bridge CPU and GPU capabilities.

- PyTorch H100 Optimizations: There was an in-depth discussion on PyTorch's optimizations for H100 hardware, confirming support for cudnn attention but noting that issues led to its default disablement in version 2.5.

- Members shared mixed experiences regarding the stability of H100 features, mentioning that Flash Attention 3 remains under development and affects performance consistency.

- vLLM Demos and Llama 70B Performance: Plans to develop streams and blogs showcasing vLLM implementations with custom kernels were announced, with considerations for creating a forked repository to enhance the forward pass.

- Discussions on improving Llama 70B's performance under high decode workloads included potential collaborations with vLLM maintainers and integrating Flash Attention 3 for better efficiency.

Latent Space Discord

-

Claude Introduces Visual PDF Support: Claude has launched visual PDF support across Claude AI and the Anthropic API, enabling users to analyze diverse document formats.

- This update allows extraction of data from financial reports and legal documents, enhancing user interactions significantly.

- AI Search Engine Competition Intensifies: The revival of search engine rivalry is evident with new entrants like SearchGPT and Gemini challenging Google's dominance.

- The AI Search Wars article details various innovative search solutions and their implications.

- O1 Model Briefly Accessible, Then Secured: The O1 model was temporarily accessible via a URL modification, allowing image uploads and swift inference capabilities.

- Amidst excitement and speculation, ChatGPT's O1 emerged, but access has since been restricted.

- Entropix Achieves 7% Boost in Benchmarks: Entropix demonstrated a 7 percentage point increase in benchmarks for small models, indicating its scalability.

- Members are anticipating how these results will influence future model developments and implementations.

- Open Interpreter Gains Traction Among Users: Users have expressed enthusiasm for Open Interpreter, considering its integration for AI tasks.

- One member suggested setting it up for future use, noting they feel out of the loop.

Stability.ai (Stable Diffusion) Discord

-

Stable Diffusion 3.5 support with A1111: Users discussed whether Stable Diffusion 3.5 works with AUTOMATIC1111, mentioning that while it is new, it may have limited compatibility.

- It was suggested to find guides on utilizing SD3.5, which might be available on YouTube due to its recent release.

- Concerns over Scam Bots in Server: Users raised concerns about a scam bot sending links and the lack of direct moderation presence within the server.

- Moderation methods such as right-click reporting were discussed, with mixed feelings about their effectiveness in preventing spam.

- Techniques for Prompting 'Waving Hair': A user sought advice on how to prompt for 'waving hair' without indicating that the character should be waving.

- Suggestions included using simpler terms like 'wavey' to achieve the intended effect without misinterpretation.

- Resources for Model Training: Users shared insights on training models using images and tags, expressing uncertainty about the relevance of older tutorials.

- Resources mentioned included KohyaSS and discussions about training with efficient methods and tools.

- Issues with Dynamic Prompts Extension: One user reported frequent crashes when using the Dynamic Prompts extension in AUTOMATIC1111, leading to frustration.

- Conversations centered around installation errors and the need for troubleshooting assistance, with one user sharing their experiences.

Modular (Mojo 🔥) Discord

-

Mojo Hardware Lowering Support: Members discussed the limitations of Mojo's current hardware lowering capabilities, specifically the inability to pass intermediate representations to external compilers as outlined in the Mojo🔥 FAQ | Modular Docs.

- A suggestion was made to contact Modular for potential upstreaming options if enhanced hardware support is desired.

- Managing References in Mojo: A member inquired about storing safe references from one container to another and encountered problems with lifetimes in structs, highlighting the need for tailored designs to avoid invalidation issues.

- Discussions emphasized ensuring pointer stability while manipulating elements in custom data structures.

- Slab List Implementation: Members reviewed the implementation of a slab list and considered memory management aspects, noting the potential for merging functionality with standard collections.

- The concept of using inline arrays and how it affects performance and memory consistency was crucial to their design.

- Mojo Stability and Nightly Releases: Concerns were raised about the stability of switching fully to nightly Mojo releases, highlighting that nightly versions can change significantly before merging to main.

- Despite including the latest developments, members emphasized the need for stability and PSA regarding significant changes.

- Custom Tensor Structures in Neural Networks: The need for custom tensor implementations in neural networks was explored, revealing various use cases such as memory efficiency and device distribution.

- Members noted parallels with data structure choices, maintaining that the need for specialized data handling remains relevant.

Interconnects (Nathan Lambert) Discord

-

AMD Reintroduces OLMo Language Model: AMD has reintroduced the OLMo language model, generating significant interest within the AI community.

- Members reacted with disbelief and humor, expressing surprise over AMD's developments in language modeling.

- Grok Model API Launches with 128k Context: The Grok model API has been released, providing developers access to models with a context length of 128,000 tokens.

- The beta program includes free trial credits, humorously referred to as '25 freedom bucks', encouraging experimentation.

- Claude 3.5 Haiku Pricing Quadruples: Claude 3.5 Haiku has been launched, delivering enhanced benchmarks but at 4x the cost of its predecessor.

- There is speculation regarding the unexpected price increase amidst a market pressure driving AI inference costs downward.

- AnthropicAI Token Counting API Explored: Members experimented with the AnthropicAI Token Counting API focusing on Claude's chat template and digit tokenization of images/PDFs.

- The accompanying image provided a TL;DR of the API's capabilities, highlighting its handling of different data formats.

- Chinese Military Utilizes Llama Model for Warfare Insights: The Chinese military has employed Meta’s Llama model to analyze and develop warfare tactics and structures, fine-tuning it with publicly available military data.

- This adaptation allows the model to effectively respond to queries related to military affairs, showcasing Llama's versatility.

LlamaIndex Discord

-

OilyRAGs Champions Hackathon with AI: The AI-powered catalog OilyRAGs secured 3rd place at the LlamaIndex hackathon, demonstrating the capabilities of Retrieval-Augmented Generation in enhancing mechanical workflows. Explore its implementation here and its claimed 6000% efficiency improvement.

- This project aims to streamline tasks in the mechanics sector, showcasing practical RAG applications.

- Optimizing Custom Agent Workflows: A discussion on Custom Agent Creation led to recommendations to bypass the agent worker/runner and utilize workflows instead, as detailed in the documentation.

- This method simplifies the agent development process, enabling more efficient integration of specialized reasoning loops.

- Cost Estimation Strategies for RAG Pipelines: Members debated RAG pipeline cost estimation using OpenAIAgentRunner, clarifying that tool calls are billed separately from completion calls.

- They emphasized using the LlamaIndex token counting utility to accurately calculate the average token usage per message for better budgeting.

- Introducing bb7: A Local RAG Voice Chatbot: bb7, a local RAG-augmented voice chatbot, was introduced, allowing document uploads and context-aware conversations without external dependencies. It incorporates Text-to-Speech for smooth interactions here.

- This innovation highlights advancements in creating user-friendly, offline-capable chatbot solutions.

- Lightweight API for Data Analysis Tested: A new API for Data Analysis was presented, offering a faster and lightweight alternative to OpenAI Assistant and Code Interpreter, specifically designed for data analysis and visualizations here.

- It generates either CSV files or HTML charts, emphasizing conciseness and efficiency without superfluous details.

OpenInterpreter Discord

-

Anthropic Anomalies in Claude Command: Users reported a series of Anthropic errors and API issues with the latest Claude model, specifically related to the command

interpreter --model claude-3-5-sonnet-20240620.- One user identified a recurring error, hinting at a trend affecting multiple users.

- Even Realities Enhance Open Interpreter: A member promoted the Even Realities G1 glasses as a potential tool for Open Interpreter integration, highlighting their open-source commitment.

- Others discussed the hardware capabilities and anticipated future plugin support.

- Oasis AI: Pioneering Fully AI-Generated Games: The team announced Oasis, the first playable, realtime, open-world AI model, marking a step towards complex interactive environments.

- Players can engage with the environment via keyboard inputs, showcasing real-time gameplay without a traditional game engine.

- Claude 3.5 Haiku Hits High Performance: Claude 3.5 Haiku is now available on multiple platforms including the Anthropic API and Google Cloud's Vertex AI, offering the fastest and most intelligent experience yet.

- The model surpassed Claude 3 Opus on various benchmarks while maintaining cost efficiencies, as shared in this tweet.

- OpenInterpreter Optimizes for Claude 3.5: A new pull request introduced a profile for Claude Haiku 3.5, submitted by MikeBirdTech.

- The updates aim to enhance integration within the OpenInterpreter project, reflecting ongoing repository development.

DSPy Discord

-

Full Vision Support in DSPy: A member celebrated the successful merge of Pull Request #1495 that adds Full Vision Support to DSPy, marking a significant milestone.

- It's been a long time coming, expressing appreciation for the teamwork involved.

- Docling Document Processing Tool: A member introduced Docling, a document processing library that can convert various formats into structured JSON/Markdown outputs for DSPy workflows.

- They highlighted key features including OCR support for scanned PDFs and integration capabilities with LlamaIndex and LangChain.

- STORM Module Modifications: A member suggested improvements to the STORM module, specifically enhancing the utilization of the table of contents.

- They proposed generating articles section by section based on the TOC and incorporating private information to enhance outputs.

- Forcing Output Fields in Signatures: A member inquired about enforcing an output field in a signature to return existing features instead of generating new ones.

- Another member provided a solution by outlining a function that correctly returns the features as part of the output.

- Optimizing Few-shot Examples: Members discussed optimizing few-shot examples without modifying prompts, focusing on enhancing example quality.

- Recommendations were made to use BootstrapFewShot or BootstrapFewShotWithRandomSearch optimizers to achieve this.

tinygrad (George Hotz) Discord

-

WebGPU's future integration in tinygrad: Members discussed the readiness of WebGPU and suggested implementing it once it's ready.

- One member mentioned, when WebGPU is ready, we can consider that.

- Apache TVM Features: The Apache TVM project tvm.apache.org focuses on optimizing machine learning models across diverse hardware platforms, offering features like model compilation and backend optimizations.

- Members highlighted its support for platforms such as ONNX, Hailo, and OpenVINO.

- Challenges implementing MobileNetV2 in tinygrad: A user encountered issues with implementing MobileNetV2, specifically with the optimizer failing to calculate gradients properly.

- The community engaged in troubleshooting efforts and discussed various experimental results to resolve the problem.

- Fake PyTorch Backend Development: A member shared their 'Fake PyTorch' wrapper that utilizes tinygrad as the backend, currently supporting basic features but missing advanced functionalities.

- They invited feedback and expressed curiosity about their development approach.

- Release of Oasis AI model: The Oasis project announced the release of an open-source real-time AI model capable of generating playable gameplay and interactions using keyboard inputs. They released code and weights for the 500M parameter model, highlighting its capability for real-time video generation.

- Future plans aim to enhance performance, as discussed by the team members.

OpenAccess AI Collective (axolotl) Discord

-

Fine-Tuning Llama 3.1 for Domain-Specific Q&A: A member sought high-quality instruct datasets in English to fine-tune Meta Llama 3.1 8B, aiming to integrate with domain-specific Q&A, favoring fine-tuning over LoRA methods.

- They emphasized the goal of maximizing performance through fine-tuning, highlighting the challenges associated with alternative methods.

- Addressing Catastrophic Forgetting in Fine-Tuning: A member reported experiencing catastrophic forgetting while fine-tuning their model, raising concerns about the stability of the process.

- In response, another member suggested that RAG (Retrieval-Augmented Generation) might offer a more effective solution for certain applications.

- Granite 3.0 Benchmarks Outperform Llama 3.1: Granite 3.0 was proposed as an alternative, boasting higher benchmarks compared to Llama 3.1, and includes a fine-tuning methodology designed to prevent forgetting.

- Additionally, Granite 3.0's Apache license was highlighted, offering greater flexibility for developers.

- Enhancing Inference Scripts and Resolving Mismatches: Members identified that the current inference script lacks support for chat formats, only handling plain text and adding a

begin_of_texttoken during generation.

- This design flaw leads to a mismatch with training, sparking discussions on potential improvements and updates to the README documentation.

- Launch of Aloe Beta: Advanced Healthcare LLMs: Aloe Beta, a suite of fine-tuned open healthcare LLMs, was released, marking a significant advancement in AI-driven healthcare solutions. Details are available here.

- The development involved a meticulous SFT phase using axolotl, ensuring the models are tailored for various healthcare-related tasks, with team members expressing enthusiasm for its potential impact.

LAION Discord

-

32 CLS Tokens Enhance Stability: Introducing 32 CLS tokens significantly stabilizes the training process, improving reliability and enhancing outcomes.

- This adjustment demonstrates a clear impact on training consistency, making it a noteworthy development for model performance.

- Blurry Latent Downsampling Concerns: A member questioned the suitability of bilinear interpolation for downsampling latents, noting that the results appear blurry.

- This concern highlights potential limitations in current downsampling methods, prompting a reevaluation of technique effectiveness.

- Standardizing Inference APIs: Developers of Aphrodite, AI Horde, and Koboldcpp have agreed on a standard to assist inference integrators in recognizing APIs for seamless integration.

- The new standard is live on platforms like AI Horde, with ongoing efforts to onboard more APIs and encourage collaboration among developers.

- RAR Image Generator Sets New FID Record: The RAR image generator achieved a FID score of 1.48 on the ImageNet-256 benchmark, showcasing exceptional performance.

- Utilizing a randomness annealing strategy, RAR outperforms previous autoregressive image generators without incurring additional costs.

- Position Embedding Shuffle Complexities: A proposal to shuffle the position embeddings at the start of training, gradually reducing this effect to 0% by the end, has emerged.

- Despite its potential, a member noted that implementing this shuffle is more complex than initially anticipated, reflecting the challenges in optimizing embedding strategies.

LLM Agents (Berkeley MOOC) Discord

-

LLM Agents Hackathon Setup Deadline: Teams are reminded to set up their OpenAI API and Lambda Labs Access by End of Day Monday, 11/4, to avoid impacting resource access and can submit via this form.

- Early API setup enables credits from OpenAI next week, ensuring smooth participation and access to the Lambda inference endpoint throughout the hackathon.

- Project GR00T Presentation by Jim Fan: Jim Fan will present Project GR00T, NVIDIA's initiative for generalist robotics AI brains, during the livestream here at 3:00pm PST.

- As the Research Lead of GEAR, he emphasizes developing generally capable AI agents across various settings.

- Team Formation for Hackathon: Participants seeking collaboration opportunities for the LLM Agents Hackathon can apply via this team signup form, outlining goals for innovative LLM-based agents.

- This form facilitates team applications, encouraging the formation of groups focused on creating advanced LLM agents.

- Jim Fan's Expertise and Achievements: Dr. Jim Fan, with a Ph.D. from Stanford Vision Lab, received the Outstanding Paper Award at NeurIPS 2022 for his research on multimodal models for robotics and AI agents excelling at Minecraft.

- His impactful work has been featured in major media outlets like New York Times and MIT Technology Review.

Cohere Discord

-

Simulating ChatGPT's Browsing Mechanics: A member in R&D is exploring the manual simulation of ChatGPT's browsing by tracking search terms and results, aiming to analyze SEO impact and the model's ability to filter and rank up to 100+ results efficiently.

- This initiative seeks to compare ChatGPT's search behavior with human search methods to enhance understanding of AI-driven information retrieval.

- Chatbots Challenging Traditional Browsers: The discussion highlighted the potential for chatbots like ChatGPT to replace traditional web browsers, acknowledging the difficulty in predicting such technological shifts.

- One participant referenced past technology forecasts, such as the evolution of video calling, to illustrate the unpredictability of future advancements.

- Augmented Reality Transforming Info Access: A member proposed that augmented reality could revolutionize information access by providing constant updates, moving beyond the current expectations of a browserless environment.

- This viewpoint expands the conversation to include potential transformative technologies, emphasizing new methods of interacting with information.

- Cohere API Query Redirection: A member advised against posting general questions in the Cohere API channel, directing them instead to the appropriate discussion space for API-related inquiries.

- Another member acknowledged the mistake humorously, noting the widespread AI interest among users and committing to properly channel future questions.

Torchtune Discord

-

Torchtune Enables Distributed Checkpointing: A member inquired about an equivalent to DeepSpeed's

stage3_gather_16bit_weights_on_model_savein Torchtune, addressing issues during multi-node finetuning.- It was clarified that setting this flag to false facilitates distributed/sharded checkpointing, allowing each rank to save its own shard.

- Llama 90B Integrated into Torchtune: A pull request was shared to integrate Llama 90B into Torchtune, aiming to resolve checkpointing bugs.

- The PR #1880 details enhancements related to checkpointing in the integration process.

- Clarifying Gradient Norm Clipping: Members discussed the correct computation of gradient norms in Torchtune, emphasizing the need for a reduction across L2 norms of all per-parameter gradients.

- There is potential to clip gradient norms in the next iteration, though this would alter the original gradient clipping logic.

- Duplicate 'compile' Key in Config Causing Failures: A duplicate

compilekey was found in llama3_1/8B_full.yaml, leading to run failures.

- Uncertainty remains whether other configuration files have similar duplicate key issues.

- ForwardKLLoss Actually Computes Cross-Entropy: ForwardKLLoss in Torchtune computes cross-entropy instead of KL-divergence as expected, requiring adjustments to expectations.

- The distinction is crucial since optimizing KL-divergence effectively means optimizing cross-entropy due to constant terms.

Alignment Lab AI Discord

-

Aphrodite Adds Experimental Windows Support: Aphrodite now supports Windows in an experimental phase, enabling high-throughput local AI optimized for NVIDIA GPUs. Refer to the installation guide for setup details.

- Additionally, support for AMD and Intel compute has been confirmed, although Windows support remains untested. Kudos to AlpinDale for spearheading the Windows implementation efforts.

- LLMs Drive Auto-Patching Innovations: A new blog post on self-healing code discusses how LLMs are instrumental in automatically fixing vulnerable software, marking 2024 as a pivotal year for this advancement.

- The author elaborates on six approaches to address auto-patching, with two already available as products and four remaining in research, highlighting a blend of practical solutions and ongoing innovation.

- LLMs Podcast Explores Auto-Healing Software: The LLMs Podcast features discussions on the self-healing code blog post, providing conversational insights into auto-healing software technologies.

- Listeners can access the podcast on both Spotify and Apple Podcast, enabling engagement with the content in an auditory format.

MLOps @Chipro Discord

-

LoG Conference Lands in Delhi: The Learning on Graphs (LoG) Conference will take place in New Delhi from 26 - 29 November 2024, focusing on advancements in machine learning on graphs. This inaugural South Asian chapter is hosted by IIT Delhi and Mastercard, with more details available here.

- The conference aims to connect innovative thinkers and industry professionals in graph learning, encouraging participation from diverse fields such as computer science, biology, and social science.

- Graph Machine Learning at LoG 2024: LoG 2024 places a strong emphasis on machine learning on graphs, showcasing the latest advancements in the field. Attendees can find more information about the conference on the official website.

- The event seeks to foster discussions that bridge various disciplines, promoting collaboration among experts in machine learning, biology, and social science.

- Local Meetups for LoG 2024: The LoG community is organizing a network of local mini-conferences to facilitate discussions and collaborations in different geographic regions. A call for local meetups for LoG 2024 is currently open, aiming to enhance social experiences during the main event.

- These local meetups are designed to bring together participants from similar areas, promoting community engagement and shared learning opportunities.

- LoG Community Resources: Participants of LoG 2024 can join the conference community on Slack and follow updates on Twitter. Additionally, recordings and materials from past conferences are available on YouTube.

- These resources ensure that attendees have continuous access to valuable materials and can engage with the community beyond the conference dates.

Gorilla LLM (Berkeley Function Calling) Discord

-

Function Definitions Needed for Benchmarking: A member is benchmarking a retrieval-based approach to function calling and is seeking a collection of available functions and their definitions for indexing.

- They specifically mentioned that having this information organized per test category would be extremely helpful.

- Structured Collections Enhance Benchmarks: The discussion highlights the demand for a structured collection of function definitions to aid in function calling benchmarks.

- Organizing information by test category would improve accessibility and usability for engineers conducting similar work.

The LLM Finetuning (Hamel + Dan) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Mozilla AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email: !

If you enjoyed AInews, please share with a friend! Thanks in advance!