[AINews] Llama 3.2: On-device 1B/3B, and Multimodal 11B/90B (with AI2 Molmo kicker)

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

9000:1 token:param ratios are all you need.

AI News for 9/24/2024-9/25/2024. We checked 7 subreddits, 433 Twitters and 31 Discords (223 channels, and 3218 messages) for you. Estimated reading time saved (at 200wpm): 316 minutes. You can now tag @smol_ai for AINews discussions!

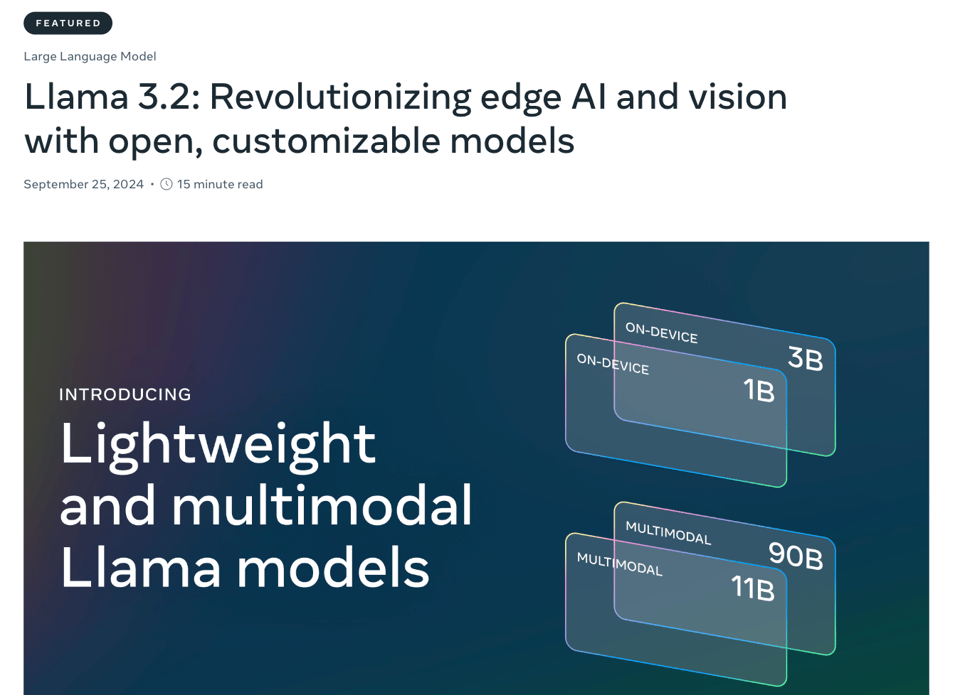

Big news from Mira Murati and FB Reality Labs today, but the actual technical news you can use today is Llama 3.2:

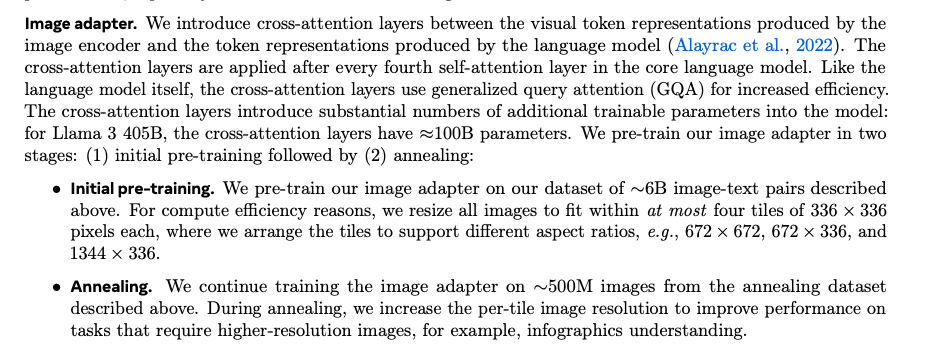

As teased by Zuck and previewed in the Llama 3 paper (our coverage here), the Multimodal versions of Llama 3.2 released as anticipated, adding a 3B and a 20B vision adapter on a frozen Llama 3.1:

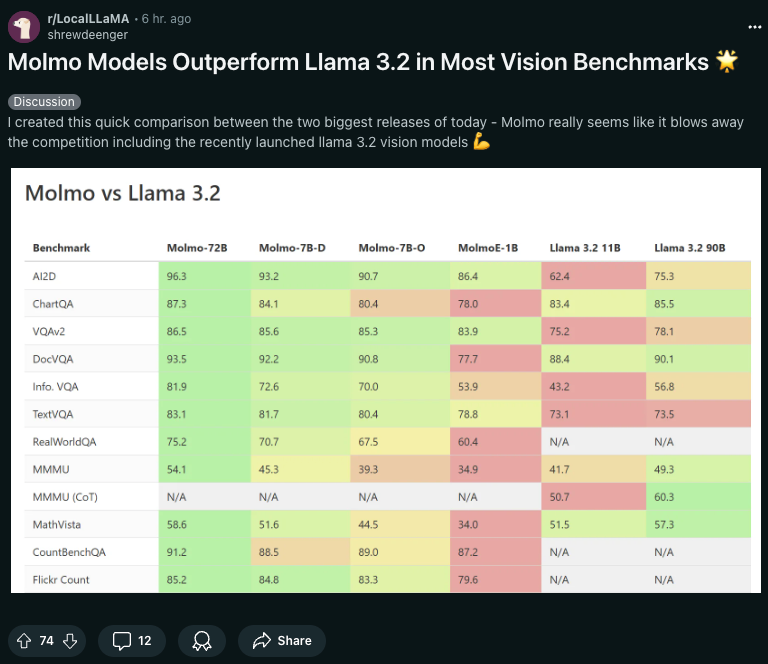

The 11B is comparable/slightly better than Claude Haiku, and the 90B is comparable/slightly better than GPT-4o-mini, though you will have to dig a lot harder to find out how far it trails behind 4o, 3.5 Sonnet, 1.5 Pro, and Qwen2-VL with a 60.3 on MMMU.

Meta is being praised for their open source here, but don't miss the multimodal Molmo 72B and 7B models from AI2 also releasing today. It has not escaped /r/localLlama's attention that Molmo is outperforming 3.2 in vision:

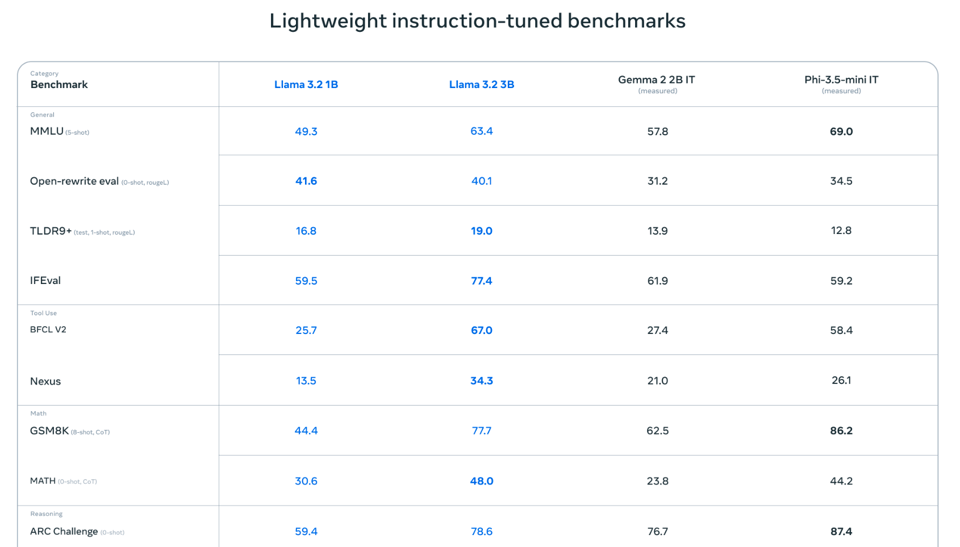

The bigger/pleasant/impressive surprise from Meta are the new 128k-context 1B and 3B models, which noew compete with Gemma 2 and Phi 3.5:

The release notes hint at some very tight on device collaborations with Qualcomm, Mediatek, and Arm:

The weights being released today are based on BFloat16 numerics. Our teams are actively exploring quantized variants that will run even faster, and we hope to share more on that soon.

Don't miss:

- launch blogpost

- Followup technical detail from @AIatMeta disclosing a 9 trillion token count for Llama 1B and 3B, and quick arch breakdown from Daniel Han

- updated HuggingFace collection including Evals

- the Llama Stack launch (see RFC here)

Partner launches:

- Ollama

- Together AI (offering FREE 11B model access rate limited to 5 rpm until end of year)

- Fireworks AI

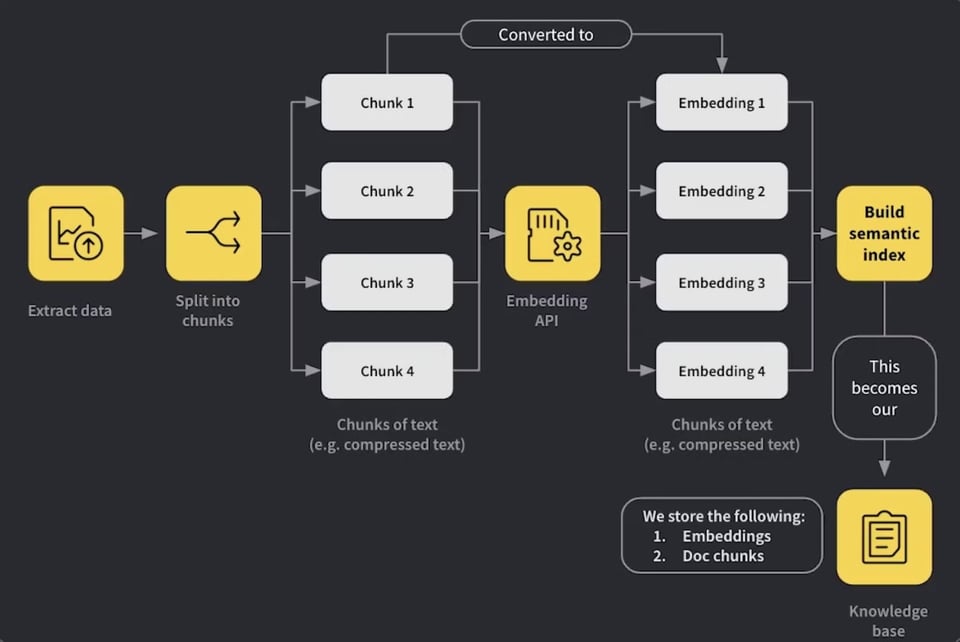

This issue sponsored by RAG++: a new course from Weights & Biases. Go beyond RAG POCs and learn how to evaluate systematically, use hybrid search correctly and give your RAG system access to tool calling. Based on 18 months of running a customer support bot in production, industry experts at Weights & Biases, Cohere, and Weaviate show how to get to a deployment-grade RAG app. Includes free credits from Cohere to get you started!

Swyx commentary: Whoa, 74 lessons in 2 hours. I've worked on this kind of very tightly edited course content before and it's amazing that this is free! Chapters 1-2 cover some necessary RAG table stakes, but then it was delightful to see Chapter 3 teach important ETL and IR concepts, and learn some new things on cross encoding, rank fusion, and query translation in 4 and 5. We shall have to cover this on livestream soon!

The Table of Contents and Channel Summaries have been moved to the web version of this email: !

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

Advanced Voice Model Release

- OpenAI is rolling out an advanced voice model for ChatGPT Plus and Team users over the course of a week.

- @sama announced: "advanced voice mode rollout starts today! (will be completed over the course of the week)hope you think it was worth the wait 🥺🫶"

- @miramurati confirmed: "All Plus and Team users in ChatGPT"

- @gdb noted: "Advanced Voice rolling out broadly, enabling fluid voice conversation with ChatGPT. Makes you realize how unnatural typing things into a computer really is:"

The new voice model features lower latency, the ability to interrupt long responses, and support for memory to personalize responses. It also includes new voices and improved accents.

Google's Gemini 1.5 Pro and Flash Updates

Google announced significant updates to their Gemini models:

- @GoogleDeepMind tweeted: "Today, we're excited to release two new, production-ready versions of Gemini 1.5 Pro and Flash. 🚢They build on our latest experimental releases and include significant improvements in long context understanding, vision and math."

- @rohanpaul_ai summarized key improvements: "7% increase in MMLU-Pro benchmark, 20% improvement in MATH and HiddenMath, 2-7% better in vision and code tasks"

- Price reductions of over 50% for Gemini 1.5 Pro

- 2x faster output and 3x lower latency

- Increased rate limits: 2,000 RPM for Flash, 1,000 RPM for Pro

The models can now process 1000-page PDFs, 10K+ lines of code, and hour-long videos. Outputs are 5-20% shorter for efficiency, and safety filters are customizable by developers.

AI Model Performance and Benchmarks

- OpenAI's models are leading in various benchmarks:

- @alexandr_wang reported: "OpenAI's o1 is dominating SEAL rankings!🥇 o1-preview is dominating across key categories:- #1 in Agentic Tool Use (Enterprise)- #1 in Instruction Following- #1 in Spanish👑 o1-mini leads the charge in Coding"

- Comparisons between different models:

- @bindureddy noted: "Gemini's Real Superpower - It's 10x Cheaper Than o1!The new Gemini is live on ChatLLM teams if you want to play with it."

AI Development and Research

- @alexandr_wang discussed the phases of LLM development: "We are entering the 3rd phase of LLM Development.1st phase was early tinkering, Transformer to GPT-32nd phase was scaling3rd phase is an innovation phase: what breakthroughs beyond o1 get us to a new proto-AGI paradigm"

- @JayAlammar shared insights on LLM concepts: "Chapter 1 paves the way for understanding LLMs by providing a history and overview of the concepts involved. A central concept the general public should know is that language models are not merely text generators, but that they can form other systems (embedding, classification) that are useful for problem solving."

AI Tools and Applications

- @svpino discussed AI-powered code reviews: "Unpopular opinion: Code reviews are dumb, and I can't wait for AI to take over completely."

- @nerdai shared an ARC Task Solver that allows humans to collaborate with LLMs: "Using the handy-dandy @llama_index Workflows, we've built an ARC Task Solver that allows humans to collaborate with an LLM to solve these ARC Tasks."

Memes and Humor

- @AravSrinivas joked: "Should I drop a wallpaper app ?"

- @swyx humorously commented on the situation: "guys stop it, mkbhd just uploaded the wrong .IPA file to the app store. be patient, he is recompiling the code from scratch. meanwhile he privately dm'ed me a test flight for the real mkbhd app. i will investigate and get to the bottom of this as a self appointed auror for the wallpaper community"

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. High-Speed Inference Platforms: Cerebras and MLX

- Just got access to Cerebras. 2,000 token per second. (Score: 99, Comments: 39): The Cerebras platform has demonstrated impressive inference speeds, achieving 2,010 tokens per second with the Llama3.1-8B model and 560 tokens per second with the Llama3.1-70B model. The user expresses amazement at this performance, indicating they are still exploring potential applications for such high-speed inference capabilities.

- JSON outputs are supported by the Cerebras platform, as confirmed by the original poster. Access to the platform is granted through a sign-up and invite system, with users directed to inference.cerebras.ai.

- Potential applications discussed include Chain of Thought (CoT) + RAG with Voice, potentially creating a Siri/Google Voice competitor capable of providing expert-level answers in real-time. A voice demo on Cerebras is available at cerebras.vercel.app.

- The platform is compared to Groq, with Cerebras reportedly being even faster. SambaNova APIs are mentioned as an alternative, offering similar speeds (1500 tokens/second) without a waitlist, while users note the potential for real-time applications and security implications of such high-speed inference.

- MLX batch generation is pretty cool! (Score: 42, Comments: 15): The MLX paraLLM library enabled a 5.8x speed improvement for Mistral-22b generation, increasing from 17.3 tokens per second to 101.4 tps at a batch size of 31. Peak memory usage increased from 12.66GB to 17.01GB, with approximately 150MB required for each additional concurrent generation, while the author managed to run 100 concurrent batches of the 22b-4bit model on a 64GB M1 Max machine without exceeding 41GB of wired memory.

- Energy efficiency tests showed 10 tokens per watt for Mistral-7b and 3.5 tokens per watt for 22b at batch size 100 in low power mode. This efficiency is comparable to human brain performance in terms of words per watt.

- The library is Apple-only, but similar batching capabilities exist for NVIDIA/CUDA through tools like vLLM, Aphrodite, and MLC, though with potentially more complex setup processes.

- While not applicable for improving speed in normal chat scenarios, the technology is valuable for synthetic data generation and dataset distillation.

Theme 2. Qwen 2.5: Breakthrough Performance on Consumer Hardware

- Qwen2-VL-72B-Instruct-GPTQ-Int4 on 4x P100 @ 24 tok/s (Score: 37, Comments: 52): Qwen2-VL-72B-Instruct-GPTQ-Int4, a large multimodal model, is reported to run on 4x P100 GPUs at a speed of 24 tokens per second. This implementation utilizes GPTQ quantization and Int4 precision, enabling the deployment of a 72 billion parameter model on older GPU hardware with limited VRAM.

- DeltaSqueezer provided a GitHub repository and Docker command for running Qwen2-VL-72B-Instruct-GPTQ-Int4 on Pascal GPUs. The setup includes support for P40 GPUs, but may experience slow loading times due to FP16 processing.

- The model demonstrated reasonable vision and reasoning capabilities when tested with a political image. A comparison with Pixtral model's output on the same image was provided, showing similar interpretation abilities.

- Discussion on video processing revealed that the 7B VL version consumes significant VRAM. The model's performance on P100 GPUs was noted to be faster than 3x3090s, with the P100's HBM being comparable to the 3090's memory bandwidth.

- Qwen 2.5 is a game-changer. (Score: 524, Comments: 121): Qwen 2.5 72B model is running efficiently on dual RTX 3090s, with the Q4_K_S (44GB) version achieving approximately 16.7 T/s and the Q4_0 (41GB) version reaching about 18 T/s. The post includes Docker compose configurations for setting up Tailscale, Ollama, and Open WebUI, along with bash scripts for updating and downloading multiple AI models, including variants of Llama 3.1, Qwen 2.5, Gemma 2, and Mistral.

- Tailscale integration in the setup allows for remote access to OpenWebUI via mobile devices and iPads, enabling on-the-go usage of the AI models through a browser.

- Users discussed model performance, with suggestions to try AWQ (4-bit quantization) served by lmdeploy for potentially faster performance on 70B models. Comparisons between 32B and 7B models showed better performance from larger models on complex tasks.

- Interest in hardware requirements was expressed, with the original poster noting that dual RTX 3090s were chosen for running 70B models efficiently, expecting a 6-month ROI. Questions about running models on Apple M1/M3 hardware were also raised.

Theme 3. Gemini 1.5 Pro 002: Google's Latest Model Impresses

- Gemini 1.5 Pro 002 putting up some impressive benchmark numbers (Score: 102, Comments: 42): Gemini 1.5 Pro 002 is demonstrating impressive performance across various benchmarks. The model achieves 97.8% on MMLU, 90.0% on HumanEval, and 82.6% on MATH, surpassing previous state-of-the-art results and showing significant improvements over its predecessor, Gemini 1.0 Pro.

- Google's Gemini 1.5 Pro 002 shows significant improvements, including >50% reduced price, 2-3x higher rate limits, and 2-3x faster output and lower latency. The model's performance across benchmarks like MMLU (97.8%) and HumanEval (90.0%) is impressive.

- Users praised Google's recent progress, noting their publication of research papers and the AI Studio playground. Some compared Google favorably to other AI companies, with Meta being highlighted for its open-weight models and detailed papers.

- Discussion arose about the consumer version of Gemini, with some users finding it less capable than competitors. Speculation on when the updated model would be available to consumers ranged from a few days to October 8th at the latest.

- Updated gemini models are claimed to be the most intelligent per dollar* (Score: 291, Comments: 184): Google has released Gemini 1.5 Pro 002, claiming it to be the most intelligent AI model per dollar. The model demonstrates significant improvements in various benchmarks, including a 90% score on MMLU and 93.2% on HumanEval, while offering competitive pricing at $0.0025 per 1k input tokens and $0.00875 per 1k output tokens. These performance gains and cost-effective pricing position Gemini 1.5 Pro 002 as a strong contender in the AI model market.

- Mistral offers 1 billion tokens of Large v2 per month for free, with users noting its strong performance. This contrasts with Google's pricing strategy for Gemini 1.5 Pro 002.

- Users criticized Google's naming scheme for Gemini models, suggesting alternatives like date-based versioning. The announcement also revealed 2-3x higher rate limits and faster performance for API users.

- Discussions highlighted the trade-offs between cost, performance, and data privacy. Some users prefer self-hosting for data control, while others appreciate Google's free tier and AI Studio for unlimited free usage.

Theme 4. Apple Silicon vs NVIDIA GPUs for LLM Inference

- HF releases Hugging Chat Mac App - Run Qwen 2.5 72B, Command R+ and more for free! (Score: 54, Comments: 19): Hugging Face has released the Hugging Chat Mac App, allowing users to run state-of-the-art open-source language models like Qwen 2.5 72B, Command R+, Phi 3.5, and Mistral 12B locally on their Macs for free. The app includes features such as web search and code highlighting, with additional features planned, and contains hidden easter eggs like Macintosh, 404, and Pixel pals themes; users can download it from GitHub and provide feedback for future improvements.

- Low Context Speed Comparison: Macbook, Mac Studios, and RTX 4090 (Score: 33, Comments: 29): The post compares the performance of RTX 4090, M2 Max Macbook Pro, M1 Ultra Mac Studio, and M2 Ultra Mac Studio for running Llama 3.1 8b q8, Nemo 12b q8, and Mistral Small 22b q6_K models. Across all tests, the RTX 4090 consistently outperformed the Mac devices, with the M2 Ultra Mac Studio generally coming in second, followed by the M1 Ultra Mac Studio and M2 Max Macbook Pro. The author notes that these tests were run with freshly loaded models without flash attention enabled, and apologizes for not making the tests deterministic.

- Users recommend using exllamav2 for better performance on RTX 4090, with one user reporting 104.81 T/s generation speed for Llama 3.1 8b on an RTX 3090. Some noted past quality issues with exl2 compared to gguf models.

- Discussion on prompt processing speed for Apple Silicon, with users highlighting the significant difference between initial and subsequent prompts due to caching. The M2 Ultra processes 4000 tokens in 16.7 seconds compared to 5.6 seconds for the RTX 4090.

- Users explored options for improving Mac performance, including enabling flash attention and the theoretical possibility of adding a GPU for prompt processing on Macs running Linux, though driver support remains limited.

Other AI Subreddit Recap

r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity

AI Model Releases and Improvements

- OpenAI releases advanced voice mode for ChatGPT: OpenAI has rolled out an advanced voice mode for ChatGPT that allows for more natural conversations, including the ability to interrupt and continue thoughts. Users report it as a significant improvement, though some limitations remain around letting users finish thoughts.

- Google updates Gemini models: Google announced updated production-ready Gemini models including Gemini-1.5-Pro-002 and Gemini-1.5-Flash-002. The update includes reduced pricing, increased rate limits, and performance improvements across benchmarks.

- New Flux model released: The creator of Realistic Vision released a Flux model called RealFlux, available on Civitai. Users note it produces good results but some limitations remain around facial features.

AI Capabilities and Benchmarks

- Gemini 1.5 002 performance: Reports indicate Gemini 1.5 002 outperforms OpenAI's o1-preview on the MATH benchmark at 1/10th the cost and with no thinking time.

- o1 capabilities: An OpenAI employee suggests o1 is capable of performing at the level of top PhD students, outperforming humans more than 50% of the time in certain tasks. However, some users dispute this claim, noting limitations in o1's ability to learn and adapt compared to humans.

AI Development Tools and Interfaces

- Invoke 5.0 update: The Invoke AI tool received a major update introducing a new Canvas with layers, Flux support, and prompt templates. This update aims to provide a more powerful interface for combining various AI image generation techniques.

AI Impact on Society and Work

- Job displacement predictions: Vinod Khosla predicts AI will take over 80% of work in 80% of jobs, sparking discussions about potential economic impacts and the need for universal basic income.

- AI in law enforcement: A new AI tool for police work claims to perform "81 years of detective work in 30 hours," raising both excitement about increased efficiency and concerns about potential misuse.

Emerging AI Research and Applications

- MIT vaccine technology: Researchers at MIT have developed a new vaccine technology that could potentially eliminate HIV with just two shots, showcasing the potential for AI to accelerate medical breakthroughs.

AI Discord Recap

A summary of Summaries of Summaries by O1-mini

Theme 1. New AI Model Releases and Multimodal Enhancements

- Llama 3.2 Launches with Multimodal and Edge Capabilities: Llama 3.2 introduces various model sizes including 1B, 3B, 11B, and 90B with multimodal support and a 128K context length, optimized for deployment on mobile and edge devices.

- Molmo 72B Surpasses Competitors in Benchmarks: The Molmo 72B model from Allen Institute for AI outperforms models like Llama 3.2 V 90B in benchmarks such as AI2D and ChatQA, offering state-of-the-art performance with an Apache license.

- Hermes 3 Enhances Instruction Following on HuggingChat: Hermes 3, available on HuggingChat, showcases improved instruction adherence, providing more accurate and contextually relevant responses compared to previous versions.

Theme 2. Model Performance, Quantization, and Optimization

- Innovations in Image Generation with MaskBit and MonoFormer: The MaskBit model achieves a FID of 1.52 on ImageNet 256 × 256 without embeddings, while MonoFormer unifies autoregressive text and diffusion-based image generation, matching state-of-the-art performance by leveraging similar training methodologies.

- Quantization Techniques Enhance Model Efficiency: Discussions on quantization vs distillation reveal the complementary benefits of each method, with implementations in Setfit and TorchAO addressing memory and computational optimizations for models like Llama 3.2.

- GPU Optimization Strategies for Enhanced Performance: Members explore TF32 and float8 representations to accelerate matrix operations, alongside tools like Torch Profiler and Compute Sanitizer to identify and resolve performance bottlenecks.

Theme 3. API Pricing, Integration, and Deployment Challenges

- Cohere API Pricing Clarified for Developers: Developers learn that while rate-limited Trial-Keys are free, transitioning to Production-Keys incurs costs for commercial applications, emphasizing the need to align API usage with project budgets.

- OpenAI's API and Data Access Scrutiny: OpenAI announces limited access to training data for review purposes, hosted on a secured server, raising concerns about transparency and licensing compliance among the engineering community.

- Integrating Multiple Tools and Platforms: Challenges in integrating SillyTavern, Forge, Langtrace, and Zapier with various APIs are discussed, highlighting the complexities of maintaining seamless deployment pipelines and compatibility across tools.

Theme 4. AI Safety, Censorship, and Licensing Issues

- Debates on Model Censorship and Uncensoring Techniques: Community members discuss the over-censorship of models like Phi-3.5, with efforts to uncensor models through tools and sharing of uncensored versions on platforms like Hugging Face.

- MetaAI's Licensing Restrictions in the EU: MetaAI faces licensing challenges in the EU, restricting access to multimodal models like Llama 3.2 and prompting discussions on compliance with regional laws.

- OpenAI's Corporate Shifts and Team Exodus: The resignation of Mira Murati and other key team members from OpenAI sparks speculation about organizational stability, corporate culture changes, and the potential impact on AI model development and safety protocols.

Theme 5. Hardware Infrastructure and GPU Optimization for AI

- Cost-Effective GPU Access with Lambda Labs: Members discuss utilizing Lambda Labs for GPU access at around $2/hour, highlighting its flexibility for running benchmarks and fine-tuning models without significant upfront costs.

- Troubleshooting CUDA Errors on Run Pod: Users encounter illegal CUDA memory access errors on platforms like Run Pod, with solutions including switching machines, updating drivers, and modifying CUDA code to prevent memory overflows.

- Deploying Multimodal Models on Edge Devices: Discussions on integrating Llama 3.2 models into edge platforms like GroqCloud, emphasizing the importance of optimized inference kernels and minimal latency for real-time AI applications.

PART 1: High level Discord summaries

Unsloth AI (Daniel Han) Discord

- Llama 3.2 Launches with New Features: Llama 3.2 has been launched, introducing new text models (1B and 3B) and vision models (11B and 90B), which support 128K context length and handle 9 trillion tokens.

- The release brings quantization format support including GGUF and BNB, enhancing its application in various scenarios.

- Cost Effectiveness of Model Usage Compared: Discussion emerged on whether smaller models save costs versus quality, with one member noting they created a dataset worth $15-20k despite expenses.

- Contradicting views spark debate on whether GPU costs might ultimately be more economical than subscribing to APIs, especially with heavy token consumption.

- Inquiry into Fine-tuning Llama Models: Members are interested in fine-tuning Llama 3.1 locally, suggesting Unsloth tools and scripts tailored for this process.

- There are rising expectations for support regarding Llama Vision models, indicating a roadmap for future enhancements.

- OpenAI's Feedback Process Under Scrutiny: Participants discussed OpenAI's approach to improvement through Reinforcement Learning from Human Feedback (RLHF), seeking clarity on implementation methods.

- Conversations highlighted the ambiguity around their feedback mechanism, pointing out the necessity for transparency in their processes.

- High Token Usage Raises Concerns: Intensive AI pipelines reportedly average 10-15M tokens per generation, stressing the complexities understood by seasoned developers.

- A member expressed frustration over misconceptions related to their hardware setup among peers.

HuggingFace Discord

- Hugging Face model releases better support: The recent announcements from Hugging Face include Mistral Small (22B) and updates on Qwen models, available for exploration, along with new Gradio 5 features for ML app development.

- Release of FinePersonas-v0.1 introduces 21 million personas for synthetic data generation, while Hugging Face's deeper integration with Google Cloud's Vertex AI enhances AI developer accessibility.

- Llama 3.2 offers multimodal capabilities: The newly released Llama 3.2 boasts multimodal support with models capable of handling text and image data and includes a substantial 128k token context length.

- Designed for mobile and edge device deployment, the models facilitate diverse application scenarios, potentially revolutionizing local inferencing performance.

- Challenges in training topic clusters: Members faced issues aggregating a sensible number of topics for training without excessive manual merging, leading to a focus on zero-shot systems as a solution.

- Discussions revolved around using flexible topic management techniques to streamline production processes.

- Insights into Diffusion Models: The effectiveness of Google Colab for running diffusion models sparked discussions, especially regarding model performance criteria when utilizing its free tier.

- Members discussed Flux as a robust open-source diffusion model, with alternatives like SDXL Lightning proposed for faster image generation without sacrificing too much quality.

- Exploring fine-tuning and optimization techniques: Techniques for fine-tuning token embeddings and other optimizations were core topics, with focus on maintaining pre-existing token functions while integrating newly added embeddings.

- Challenges with Setfit’s serialization due to memory constraints were also addressed, emphasizing strategies for better checkpoint management during training phases.

Nous Research AI Discord

- Hermes 3 Launches on HuggingChat: The latest release of Hermes 3 in 8B size is now available on HuggingChat, showcasing improved instruction adherence.

- Hermes 3 has significantly enhanced its ability to follow instructions, promising more accurate and contextually relevant responses than prior versions.

- Llama 3.2 Performance Insights: The release of Llama 3.2 with multiple sizes generated discussions about its performance, particularly when compared to smaller models like Llama 1B and 3B.

- Users noted specific capabilities and limitations, including improved code generation abilities, sparking much curiosity.

- Sample Packing Techniques Discussed: A discussion focused on sample packing for training small GPT-2 models raised concerns about potential performance degradation if not executed correctly.

- A participant emphasized that naive implementation could lead to suboptimal results, despite its theoretical benefits.

- MIMO Framework Revolutionizes Video Synthesis: The MIMO framework proposes a method for synthesizing realistic character videos with controllable attributes based on simple user inputs.

- MIMO aims to overcome limitations of existing 3D methods and enhances scalability and interactivity in video synthesis tasks.

- Seeking Research on Job Recommendation Systems: A member detailed their challenges in finding quality research related to building a resume ATS builder and job recommendation systems.

- Advice was sought to navigate the extensive landscape of existing literature effectively.

aider (Paul Gauthier) Discord

- Llama 3.2 Launch: Meta has announced the release of Llama 3.2, featuring small and medium-sized vision LLMs and lightweight models for edge and mobile devices, during Meta Connect.

- These models aim to enhance accessibility for developers with limited resources, as discussed in the context of their latest model advancements.

- Aider Faces Functionality Challenges: Users reported limitations in Aider, particularly the lack of built-in translation and insufficient documentation indexing, pushing discussions for potential enhancements.

- Ideas include incorporating voice feedback and automatic documentation searches to improve user experience.

- Switching LLMs for Better Performance: Reports indicate that users are switching from Claude Sonnet 3.5 to models like Gemini Pro 1.5 to improve code comprehension and performance.

- Engaging Aider's benchmark suite for model performance tracking is seen as essential to ensure accurate outcomes.

- Local Vector Databases Explored: A discussion centered on local vector databases revealed interest in Chroma, Qdrant, and PostgreSQL vector extensions for handling complex data efficiently.

- While SQLite can manage vector DB tasks, specialized databases are deemed more adept for heavy workloads.

- Introducing the par_scrape Tool: A member showcased the par_scrape tool on GitHub as an efficient web scraping solution, praised for its capabilities compared to alternatives.

- Its utilization could streamline scraping tasks significantly for the community.

OpenRouter (Alex Atallah) Discord

- OpenRouter's Database Upgrade Scheduled: A database upgrade is slated for Friday at 10am ET, leading to a brief downtime of 5-10 minutes. Users should prepare for potential service interruptions.

- The upgrade aims to enhance overall system performance, aligning with recent API changes.

- API Output Enhancements Announced: OpenRouter now includes the provider in the completion response for improved clarity in data retrieval.

- This change is designed to streamline information processing and enhance user experience.

- Gemini Models Routing Gets an Upgrade: Gemini-1.5-flash and Gemini-1.5-pro have been rerouted to utilize the latest 002 version for better performance.

- The community is encouraged to test these updated models to gauge their efficiency in various applications.

- Llama 3.2 Release Builds Anticipation: The upcoming Llama 3.2 release includes smaller models for easier integration in mobile and edge deployments.

- Inquiries on whether OpenRouter will host the new models sparked excitement among the developers.

- Local Server Support Faces Limits: Support for local servers remains a challenge as restricted external access hampers assistance capabilities.

- Future API support may expand if endpoints meet specific OpenAI-style schema requirements, opening doors for collaboration.

OpenAI Discord

- Advanced Voice Mode Distribution Frustrations: Members are frustrated over the limited rollout of the Advanced Voice mode, particularly in the EU, where access remains restricted despite announcements of full availability.

- They pointed out a trend of delayed features for EU users, referencing prior instances such as the memory functionality.

- Meta AI Faces Licensing Restrictions in the EU: It was clarified that Meta AI is not available for users in the EU and UK due to strict licensing rules on multimodal models, linked directly to Llama 3.2's licensing issues.

- Members noted that Llama 3.2 features improved multimodal capabilities but are still hindered by these licensing complications.

- Essay Grading Needs Tougher Feedback: Discussion focused on the challenge of providing honest feedback on essays, highlighting a tendency for models to offer lenient critiques.

- Members suggested using detailed rubrics and examples but noted the model's inherent inclination towards positive reinforcement complicates matters.

- Optimizing Minecraft API Prompts: Members proposed strategies to enhance prompts for the Minecraft API, aiming to reduce repetitive queries by varying topics and complexity levels.

- Concerns were raised about how to prompt the AI to enforce a structured response format and avoid duplicating questions.

- Struggles with Complex Task Handling: Users expressed frustration that GPT struggles with complex tasks, citing experiences of long waits for meager outputs, especially on book-writing requests.

- Some suggested alternative models like Claude and o1-preview, which they find more capable thanks to extended memory windows.

LM Studio Discord

- Llama 3.2 Launch Sparks Excitement: The recent release of Llama 3.2, including models like the 1B and 3B, has generated significant interest due to its performance across various hardware setups.

- Users are particularly eager for support for the 11B multimodal model, yet complications regarding its vision integration may delay availability.

- Integration Issues with SillyTavern: Users are encountering integration issues with SillyTavern while using LM Studio, largely related to server communication and response generation.

- Troubleshooting suggests that task inputs may need to be more specific rather than relying on freeform text prompts.

- Concerns Over Multimodal Model Capabilities: While Llama 3.2 includes a vision model, users demand true multimodal capabilities similar to GPT-4 for broader utility.

- It has been clarified that the 11B model is limited to vision tasks and currently lacks voice or video functionalities.

- Price Discrepancies Cause Frustration: Users shared their frustration over higher tech prices in the EU, which can be twice as much as those in the US.

- Many highlighted that VAT is a significant factor contributing to these discrepancies.

- Expectations for RTX 3090 TPS: Discussions on the RTX 3090 highlighted expected transactions per second (TPS) of around 60-70 TPS on a Q4 8B model.

- Clarified that this metric is primarily useful for inference training, rather than for simple query processing.

Eleuther Discord

- yt-dlp Emerges as a Must-Have Tool: A member highlighted yt-dlp, showcasing it as a robust audio/video downloader, raising concerns over malware but confirming the source's safety.

- This tool could simplify content downloading for developers, but review of its usage among engineers is essential due to potential security risks.

- PyTorch Training Attribute Bug Causes Frustration: A known bug in PyTorch was discussed where executing

.eval()or.train()fails to update the.trainingattribute oftorch.compile()modules, outlined in this GitHub issue.- Members expressed disappointment over the lack of transparency on this issue while brainstorming workarounds, such as altering

mod.compile().

- Members expressed disappointment over the lack of transparency on this issue while brainstorming workarounds, such as altering

- Local LLM Benchmarking Tools Needed: Request for recommendations on open-source benchmark suites for local LLM testing pointed towards established metrics like MMLU and GSM8K, with mentions of the lm-evaluation-harness for evaluating models.

- This search underscores the need for comprehensive evaluation frameworks in the AI community to validate local model performance.

- NAIV3 Technical Report on Crowd-Sourced Data: The NAIV3 Technical Report released contains a dataset of 6 million crowd-sourced images, focusing on tagging practices and image management.

- Discussions revolved around including humor in documentation, indicating a divergence in stylistic preferences for technical reports.

- BERT Masking Rates Show Performance Impact: Inquiring into high masking rates for BERT models revealed that rates exceeding 15%, notably up to 40%, can boost performance, suggesting a significant advantage in larger models.

- This suggests that training methodologies may need reevaluation to integrate findings from recent studies addressing masking strategies.

Perplexity AI Discord

- Perplexity AI struggles with context retention: Users have expressed frustration with Perplexity AI not retaining context for follow-up questions, a trend that has worsened recently. Some members noted a decline in the platform's performance, impacting its utility.

- Concerns were raised regarding potential financial issues affecting Perplexity's capabilities, prompting discussions about viable alternatives.

- Merlin.ai offers O1 with web access: Merlin.ai has been recommended as an alternative to Perplexity since it provides O1 capabilities with web access, allowing users to bypass daily message limits. Participants showed interest in exploring Merlin for its expanded functionalities.

- The discussion highlighted how users perceive Merlin to be more functional than Perplexity, potentially reshaping their tool choices.

- Wolfram Alpha integration with Perplexity API: A user inquired about the potential use of Wolfram Alpha with the Perplexity API similar to how it works on the web app, to which it was confirmed that integration is currently not possible. The independence of the API from the web interface was stressed.

- Further inquiries were made about whether the API could perform as efficiently as the web interface for math and science problem-solving, with no conclusive answers provided.

- Users weigh in on AI tools for education: Numerous users shared their perspectives on using various AI tools for academic tasks, with preferences varying between GPT-4o and Claude as alternatives. Feedback indicated that different AI tools offer varying levels of assistance for school-related needs.

- This exchange highlighted the significant role AI plays in educational settings, with an emphasis on user experience shaping these preferences.

- Evaluating Air Fryers: Worth It?: A user shared a link discussing whether air fryers are worth it, focusing on health benefits versus traditional frying methods and cooking efficiency. The dialogue included consumers' varied viewpoints about the gadget's practicality.

- Key takeaways from the discussion centered around both the positive cooking attributes of air fryers and the skepticism on their actual health benefits compared to conventional methods.

Interconnects (Nathan Lambert) Discord

- Anthropic Sets Sights on $1B Revenue: As reported by CNBC, Anthropic is projected to surpass $1B in revenue this year, achieving a jaw-dropping 1000% year-over-year growth.

- Revenue sources include 60-75% from third-party APIs and 15% from Claude subscriptions, marking a significant shift for the company.

- OpenAI Provides Training Data Access: In a notable shift, OpenAI announced it will allow access to its training data for review regarding copyrighted works used.

- This access is limited to a secured computer at OpenAI's San Francisco office, stirring varying reactions among the community.

- Molmo Model Surpasses Expectations: The Molmo model has generated excitement with claims that its pointing feature may be more significant than a higher AIME score, garnering positive comparisons to Llama 3.2 V 90B benchmarks.

- Comments noted Molmo outperformed on metrics like AI2D and ChatQA, demonstrating its strong performance relative to its competitors.

- Curriculum Learning Enhances RL Efficiency: Research shows that implementing curriculum learning can significantly boost Reinforcement Learning (RL) efficiency by using previous demonstration data for better exploration.

- This method includes a creative reverse and forward curriculum strategy, compared to DeepMind's similar Demostart, highlighting both gains and challenges in robotics.

- Llama 3.2 Launch Triggers Community Buzz: Llama 3.2 has officially launched with various model sizes including 1B, 3B, 11B, and 90B, aimed at enhancing text and multimodal capabilities.

- Initial reactions show a mix of excitement and skepticism about its readiness, with discussions fueled by hints of future improvements and updates.

GPU MODE Discord

- Exploring SAM2-fast with Diffusion Transformers: Members discussed using SAM2-fast within a Diffusion Transformer Policy to map camera sensor data to robotic arm positions, suggesting image/video segmentation for this use case.

- The conversation highlighted the potential of combining rapid sensor data processing with robotic control through advanced ML techniques.

- Torch Profiler's Size Issue: Torch profiler generated excessive file sizes (up to 7GB), leading to suggestions on profiling only essential items and exporting as .json.gz for compression.

- Members emphasized efficient profiling strategies to maintain manageable file sizes and usability for tracing performance.

- RoPE Cache Should Always Be FP32: A discussion arose on the RoPE cache in the Torchao Llama model, advocating for it to be consistently in FP32 format for accuracy.

- Members pointed to specific lines in the model repository for further clarity.

- Lambda Labs Cost-Effective GPU Access: Using Lambda Labs for GPU access at approximately $2/hour was highlighted as a flexible option for running benchmarks and fine-tuning.

- The user shared experiences regarding seamless SSH access and pay-per-use structure, making it attractive for many ML applications.

- Metal Atomics Require Atomic Load/Stores: To achieve message passing between workgroups, a member suggested employing an array of atomic bytes for operations in metal atomics.

- Efficient flag usage with atomic operations and non-atomic loads was emphasized for improved data handling.

OpenAccess AI Collective (axolotl) Discord

- Run Pod Issues Dismay Users: Users reported experiencing illegal CUDA errors on Run Pod, with some suggesting switching machines to resolve the issue.

- One user humorously advised against using Run Pod due to ongoing issues, emphasizing the frustration involved.

- Molmo 72B Takes Center Stage: The Molmo 72B, developed by the Allen Institute for AI, boasts state-of-the-art benchmarks and is built on the PixMo dataset of image-text pairs.

- Apache licensed, this model aims to compete with leading multimodal models, including GPT-4o.

- OpenAI's Leadership Shakeup Rocks Community: A standout moment occurred with the resignation of OpenAI's CTO, sparking speculation about the organization's future direction.

- Members discussed the potential implications for OpenAI's strategy, hinting at intriguing internal dynamics.

- Llama 3.2 Rollout Excites All: The introduction of Llama 3.2 features lightweight models for edge devices, generating buzz about sizes ranging from 1B to 90B.

- Multiple sources confirmed the phased rollout, with excitement about performance validation from the new models.

- Meta's EU Compliance Quagmire: Conversations revealed Meta's struggles with EU regulations, leading to restricted access for European users.

- Discussions alluded to a possible license change affecting model availability, igniting debate over company motivations.

Cohere Discord

- Cohere API Key Pricing Clarified: Members highlighted the rate-limited Trial-Key for free usage but stated that commercial applications require a Production-Key that incurs costs.

- This emphasizes careful consideration of intended usage when planning API key resources.

- Testing Recursive Iterative Models Hypotheses: A user posed the question if obtaining similar results across multiple LLMs implies their Recursive Iterative model is functioning correctly.

- Suggestions included further evaluations against benchmarks to ensure reliable outcomes.

- Launch of New RAG Course: An announcement was made about the new RAG course in production with Weights&Biases, covering evaluation and pipelines in under 2 hours.

- Participants earn Cohere credits and can ask questions to a Cohere team member during the course.

- Exciting Smart Telescope Project: A member shared their enthusiasm about a smart telescope mount project aiming to automatically locate 110 objects from the Messier catalog.

- Community support flowed in, encouraging collaboration and resource sharing for the project.

- Cohere Cookbook Now Available: The Cohere Cookbook has been highlighted as a resource containing guides for using Cohere’s generative AI platform effectively.

- Members were directed to explore sections specific to their AI project needs, including embedding and semantic search.

LlamaIndex Discord

- LlamaParse Fraud Alert: A warning was issued about a fraudulent site, llamaparse dot cloud, attempting to impersonate LlamaIndex products; the official LlamaParse can be accessed at cloud.llamaindex.ai.

- Stay vigilant against scams that pose risks to users by masquerading as legitimate services.

- Varied Exciting Presentations at AWS Gen AI Loft: LlamaIndex's developers will share insights on RAG and Agents at the AWS Gen AI Loft, coinciding with the ElasticON conference on March 21, 2024 (source).

- Attendees will understand how Fiber AI integrates Elasticsearch into high-performance B2B prospecting.

- Launch of Pixtral 12B Model: The Pixtral 12B model from @MistralAI is now integrated with LlamaIndex, excelling in multi-modal tasks involving chart and image comprehension (source).

- This model showcases impressive performance against similarly sized counterparts.

- Join LlamaIndex's Team!: LlamaIndex is actively hiring engineers for their San Francisco team; openings range from full-stack to specialized roles (link).

- The team seeks enthusiastic individuals eager to work with ML/AI technologies.

- Clarifications on VectorStoreIndex Usage: Users discussed how to properly access the underlying vector store using the

VectorStoreIndex, specifically throughindex.vector_store. Clarifications surfaced around the limitations of SimpleVectorStore, prompting discussions about alternative storage options.- The conversation highlighted technical aspects of callable methods and properties, contributing to a better grasp of Python decorators.

Latent Space Discord

- Gemini pricing solidifies competitive stance: The recent cut in Gemini Pro pricing aligns with a loglinear pricing curve based on its Elo score, refining its approach against other models.

- As prices adjust, OpenAI continues to dominate the high end, while Gemini Pro and Flash capture lower tiers in a vivid framework reminiscent of 'iPhone vs Android'.

- Anthropic hits a revenue milestone: Anthropic is on track to achieve $1B in revenue this year, reflecting a staggering 1000% year-over-year increase, based on a report from CNBC.

- The revenue breakdown indicates a heavy reliance on Third Party API sales, contributing 60-75% of their income, with API and chatbot subscriptions also playing key roles.

- Llama 3.2 models enhance edge capabilities: The launch of Llama 3.2 introduces lightweight models optimized for edge devices, with configurations like 1B, 3B, 11B, and 90B vision models.

- These new offerings emphasize multimodal capabilities, encouraging developers to explore enhanced functionalities through open-source access.

- Mira Murati bids farewell to OpenAI: In a community-shared farewell, Mira Murati's departure from OpenAI led to discussions reflecting on her significant contributions during her tenure.

- Sam Altman acknowledged the emotional journey she navigated, highlighting the support she provided to the team amid challenges.

- Meta's Orion glasses prototype stages debut: After nearly a decade of development, Meta has revealed their Orion AR glasses prototype, marking a significant advancement despite initial skepticism.

- Aimed for internal use to refine user experiences, these glasses are designed for a broad field of view and lightweight characteristics to prepare for eventual consumer release.

Stability.ai (Stable Diffusion) Discord

- Basicsr Installation Troubles Made Simple: To resolve issues with ComfyUI in Forge, users should navigate to the Forge folder and run

pip install basicsrafter activating their virtual environment.- There's a growing confusion about the installation, with several users hoping the extension appears as a tab post-install.

- Battle of the Interfaces: ComfyUI vs Forge: Members shared their preferences, with one stating that they find Invoke much easier to use compared to ComfyUI.

- Many prefer staying loyal to ComfyUI due to the outdated version and its compatibility issues within Forge.

- 3D Model Generators: What Works?: Inquiry about 3D model generators revealed issues with TripoSR, suggesting many open-source tools appear broken.

- Interest was shown in Luma Genie and Hyperhuman, though skepticism about their functionality remains high.

- Running Stable Diffusion Without a GPU: For those looking to run Stable Diffusion without a GPU, using Google Colab or Kaggle provides free access to GPU resources.

- There's a shared consensus that these platforms serve as great starting points for beginners engaging with Stable Diffusion.

- Getting Creative with ControlNet OpenPose: Members learned how to use the ControlNet OpenPose preprocessor for generating and editing preview images within the platform.

- There’s evident excitement about exploring this feature, allowing for detailed adjustments in generated outputs.

Torchtune Discord

- Llama 3.2 Launches with Multimodal Features: The release of Llama 3.2 introduces 1B and 3B text models with long-context support, allowing users to try with

enable_activation_offloading=Trueon long-context datasets.- Additionally, the 11B multimodal model supports The Cauldron datasets and custom multimodal datasets for enhanced generation.

- Desire for Green Card: A member humorously expressed desperation for a green card, suggesting they might make life harder for Europe due to their situation.

- 'I won't tell anyone in exchange for a green card' highlights their frustration and willingness to negotiate.

- Consider TF32 for FP32 users: Discussion arose around enabling TF32 as an option for users still utilizing FP32, as it accelerates matrix multiplication (matmul).

- The sentiment echoed that if one is already using FP16/BF16, TF32 may not offer added benefits, with one member humorously noting, ‘I wonder who would prefer it over FP16/BF16 directly’.

- RFC Proposal on KV-cache toggling: An RFC on KV-cache toggling has been proposed, aiming to improve how caches are handled during model forward passes.

- The proposal addresses the current limitation where caches are always updated unnecessarily, prompting further discussion on necessity and usability.

- Advice on handling Tensor sizing: A query was made regarding improving the handling of sizing for Tensors beyond using the Tensor item() method.

- One member acknowledged the need for better solutions and promised to think about it further.

Modular (Mojo 🔥) Discord

- MOToMGP Error Investigation: A user inquired about the error 'failed to run the MOToMGP pass manager', seeking reproduction cases to improve messaging around it.

- Community members were encouraged to share insights or experiences related to this specific issue.

- Sizing Linux Box for MAX Optimization: A member asked how to size a Linux box when running MAX with ollama3.1, opening discussions about optimal configurations.

- Members contributed tips on resource allocation to enhance performance.

- GitHub Discussions Shift: On September 26th, Mojo GitHub Discussions will disable new comments to focus community engagement on Discord due to low participation.

- The change aims to streamline discussions and reflect on the inefficacy of converting past discussions into issues.

- Mojo's Communication Speed with C: Participants wondered if Mojo's communication with C is faster than with Python, noting it likely depends on the specific implementation.

- There was agreement that Python's interaction with C can vary based on context.

- Evan Implements Associated Aliases: Evan is rolling out associated aliases in Mojo, allowing for traits and type aliases similar to provided examples which excites the community.

- Members see potential improvements in code organization and clarity with this feature.

OpenInterpreter Discord

- Excitement around o1 Preview and Mini API: Members express excitement over accessing the o1 Preview and Mini API, pondering its capabilities in Lite LLM and responses received through Open Interpreter.

- One member humorously mentioned their eagerness to test it despite lacking tier 5 access.

- Llama 3.2 Launches with Lightweight Edge Models: Meta's Llama 3.2 has launched, introducing 1B & 3B models for edge use, optimized for Arm, MediaTek, and Qualcomm.

- Developers can access the models through Meta and Hugging Face, with 11B & 90B vision models poised to compete against closed counterparts.

- Tool Use Episode Covers Open Source AI: The latest Tool Use episode features discussions on open source coding tools and the infrastructure around AI.

- The episode spotlights community-driven innovations, resonating with previously shared ideas within the channel.

- Llama 3.2 Now Available on GroqCloud: Groq announces a preview of Llama 3.2 in GroqCloud, enhancing accessibility for developers through their infrastructure.

- Members noted the positive response to Groq's speed with the remark that anything associated with Groq operates exceptionally fast.

- Logo Design Choices Spark Discussion: A member shares their logo design journey, noting that while they considered the GitHub logo, they feel their current choice is superior.

- Another member lightheartedly added commentary about the power of their design choice, infusing humor into the discussion.

LLM Agents (Berkeley MOOC) Discord

- Gemini 1.5 demonstrates strong benchmarks: Gemini 1.5 Flash achieved a score of 67.3% in September 2024, while Gemini 1.5 Pro scored even better at 85.4%, marking a significant performance upgrade.

- This improvement highlights ongoing enhancements in model capabilities across various datasets.

- Launch of MMLU-Pro dataset: The new MMLU-Pro dataset boasts questions from 57 subjects with increased difficulty, aiming to challenge model evaluation effectively.

- This updated dataset is critical for assessing models in complex areas such as STEM and humanities.

- Questioning Chain of Thought utility: A recent study with 300+ experiments indicates that Chain of Thought (CoT) is only beneficial for math and symbolic reasoning, performing similarly to direct answering for most tasks.

- The analysis suggests CoT is unnecessary for 95% of MMLU tasks, redirecting focus on its strengths in symbolic computation.

- AutoGen proves valuable in research: Research highlights the growing use of AutoGen, reflecting its relevance in the current AI landscape.

- This trend points to significant developments in automating model generation, impacting both performance and research progression.

- Quiz 3 details available: Inquiries about Quiz 3 led members to confirm it's available on the course website under the syllabus section.

- Regular checks for syllabus updates are emphasized for staying informed about assessment schedules.

DSPy Discord

- DSPy Launches Cool New Features!: New features specific to DSPy on Langtrace are rolling out this week, including a new project type and automatic experiment tracking inspired by MLFlow.

- These include automatic checkpoint state tracking, eval score trendlines, and support for litellm.

- Text Classification Targets Fraud Detection: Users are employing DSPy for classifying text into three types of fraud, seeking advice on the optimal Claude model.

- Highlighted was that Sonnet 3.5 is the leading model, with Haiku offering a cost-effective alternative.

- DSPy as an Orchestrator for User Queries: A member is exploring DSPy as a tool for routing user queries to sub-agents and assessing its direct interaction capabilities.

- The conversation covered the potential for integrating tools, questioning the effectiveness of memory versus standalone conversation history.

- Clarifying Complex Classes in Text Classification: Members discussed the need for precise definitions while classifying text into complex classes, specifically including US politics and International Politics.

- One member noted that these definitions significantly depend on the business context, highlighting the nuanced approach needed.

- Collaborative Tutorial on Classification Tasks: The ongoing discussions have coincided with a member writing a tutorial on classification tasks, aiming to enhance clarity.

- This signals an effort to improve understanding in the classification domain.

tinygrad (George Hotz) Discord

- Essential Resources for Tinygrad Contributions: A member shared a series of tutorials on tinygrad covering the internals to help new contributors grasp the framework.

- They highlighted the quickstart guide and abstraction guide as prime resources for onboarding.

- Training Loop Too Slow in Tinygrad: A user lamented the slow training in tinygrad version 0.9.2 while working on a character model, describing it as slow as balls.

- They rented a 4090 GPU to enhance performance but reported minimal improvements.

- Bug in Sampling Code Affects Output Quality: The user discovered a bug in their sampling code after initially attributing slow training to general performance issues.

- They clarified the problem stemmed specifically from the sampling implementation, not the training code, which impacted the quality of model inference.

- Efficient Learning Through Code: Members suggested reading code and allowing arising questions to guide learning within tinygrad.

- Using tools like ChatGPT can assist in troubleshooting and foster a productive feedback loop.

- Using DEBUG to Understand Tinygrad's Flow: A member recommended utilizing

DEBUG=4for simple operations to see generated code and understand the flow in tinygrad.- This technique provides practical insights into the internal workings of the framework.

LangChain AI Discord

- Open Source Chat UI Seeking: A member is on the hunt for an open source UI tailored for chat interfaces focusing on programming tasks, seeking insights from the community on available options.

- The discussion welcomes experiences in deploying similar systems to help refine choices.

- Thumbs Up/Down Feedback Mechanisms: Members are exploring the implementation of thumbs up/thumbs down review options for chatbots, with one sharing their custom front end approach ruling out Streamlit.

- This reveals a collective interest in enhancing user engagement through feedback systems.

- Azure Chat OpenAI Integration Details: A developer disclosed their integration of Azure Chat OpenAI for chatbot functionalities, highlighting it as a viable platform for similar projects.

- They encouraged others to exchange ideas and challenges around this integration.

- Experience Building an Agentic RAG Application: A user detailed their development of an agentic RAG application using LangGraph, Ollama, and Streamlit, aimed at retrieving pertinent research data.

- They successfully deployed their solution via Lightning Studios and shared insights on their process in a LinkedIn post.

- Experimentation with Lightning Studios: The developer utilized Lightning Studios for efficient application deployment and experimentation with their Streamlit app, optimizing the tech stack.

- This emphasizes the platform's capabilities in enhancing application performance across different tools.

LAION Discord

- GANs, CNNs, and ViTs as top image algorithms: Members noted that GANs, CNNs, and ViTs frequently trade off as the top algorithm for image tasks and requested a visual timeline to showcase this evolution.

- The interest in a timeline highlights a need for historical context in the algorithm landscape of image processing.

- MaskBit revolutionizes image generation: The paper on MaskBit presents an embedding-free model generating images from bit tokens with a state-of-the-art FID of 1.52 on ImageNet 256 × 256.

- This work also enhances the understanding of VQGANs, creating a model that improves accessibility and reveals new details.

- MonoFormer merges autoregression and diffusion: The MonoFormer paper proposes a unified transformer for autoregressive text and diffusion-based image generation, matching state-of-the-art performance.

- This is achieved by leveraging training similarities, differing mainly in the attention masks utilized.

- Sliding window attention still relies on positional encoding: Members discussed that while sliding window attention brings advantages, it still depends on a positional encoding mechanism.

- This discussion emphasizes the ongoing balance between model efficiency and retaining positional awareness.

The Alignment Lab AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The LLM Finetuning (Hamel + Dan) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Mozilla AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The DiscoResearch Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Gorilla LLM (Berkeley Function Calling) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email: !

If you enjoyed AInews, please share with a friend! Thanks in advance!