[AINews] Cohere's Command A claims #3 open model spot (after DeepSeek and Gemma)

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

Yay for open weights models!

AI News for 3/14/2025-3/17/2025. We checked 7 subreddits, 433 Twitters and 28 Discords (223 channels, and 9014 messages) for you. Estimated reading time saved (at 200wpm): 990 minutes. You can now tag @smol_ai for AINews discussions!

We briefly mentioned Cohere's Command A launch last week. Because the announcement was comparatively light on broadly comparable benchmarks, it was hard to tell where the model would rank in terms of lasting impact. There were some comparisons, but the selective, self-reported ones against DeepSeek V3 and GPT-4o couldn't really place Command A relative to either the open-source SOTA or the overall SOTA for its size.

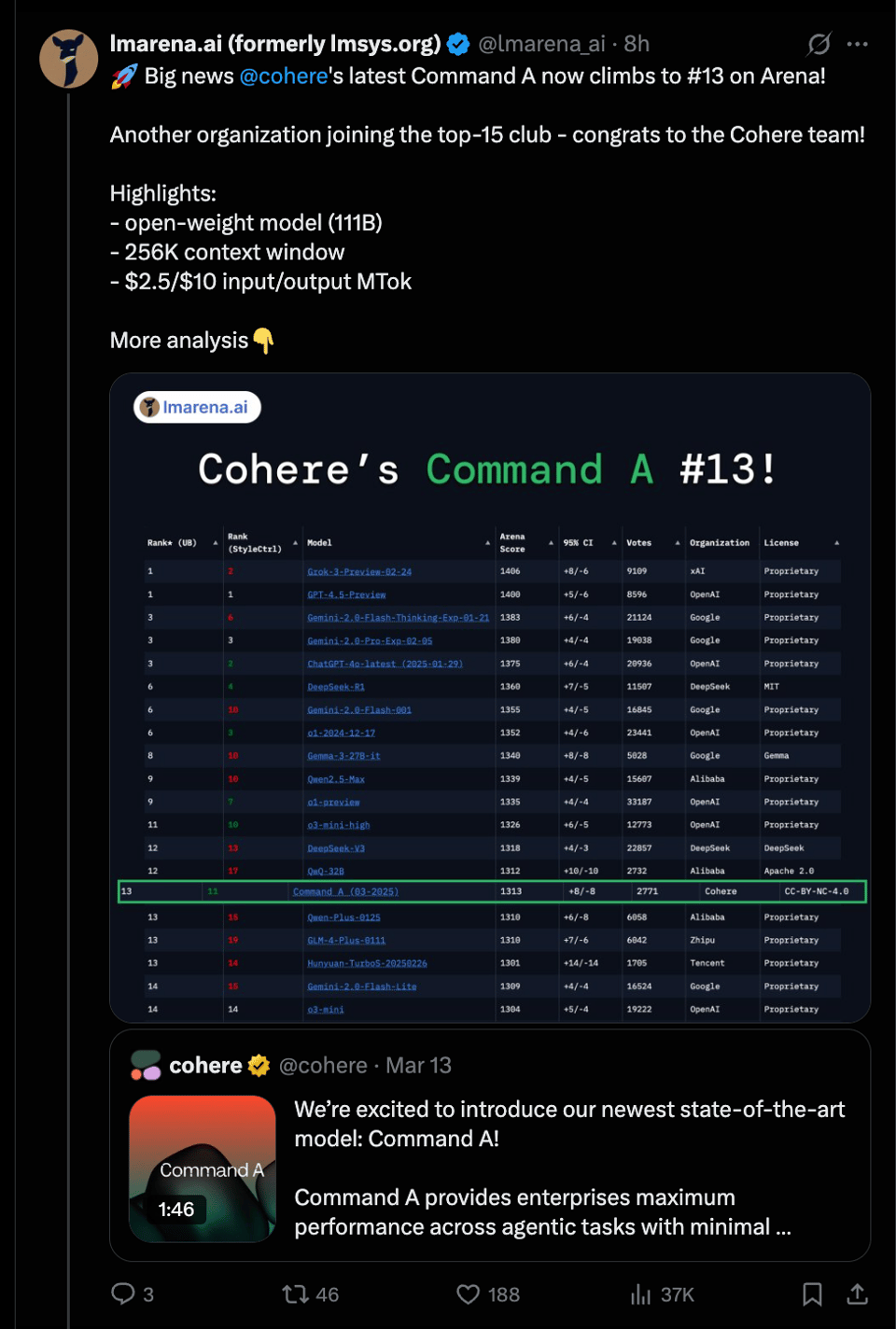

With today's LMArena result, that is no longer in question.

As Aidan Gomez points out, Command A actually climbs 2 more spots in the rankings with the Style Control modifier (explored on their LS podcast).

Command A has other, subtler strengths that make it a particularly attractive addition to one's open-model arsenal, including an unusually long 256k context window, multilingual capabilities, and a focus on optimizing for a 2-H100 serving footprint.
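To see why the 2-H100 target is a meaningful constraint, here is a rough, weights-only memory estimate. It is a sketch under stated assumptions: the 111B parameter count comes from the LMArena summary below, each H100 is assumed to have 80 GB (SXM/PCIe variants), and KV cache, activations, and framework overhead are ignored, even though the KV cache can be substantial at 256k context.

```python
# Weights-only estimate: ignores KV cache, activations, and runtime overhead.
PARAMS = 111e9      # Command A parameter count, per the LMArena summary
GPU_MEMORY_GB = 80  # assumption: 80 GB per H100 (SXM/PCIe variants)
NUM_GPUS = 2

BYTES_PER_PARAM = {"bf16": 2.0, "fp8/int8": 1.0, "int4": 0.5}


def weight_memory_gb(params: float, bytes_per_param: float) -> float:
    """Weight memory in decimal gigabytes (1 GB = 1e9 bytes)."""
    return params * bytes_per_param / 1e9


def fits(params: float, bytes_per_param: float,
         gpus: int = NUM_GPUS, gpu_gb: float = GPU_MEMORY_GB) -> bool:
    """True if the weights alone fit in the combined memory of `gpus` GPUs."""
    return weight_memory_gb(params, bytes_per_param) <= gpus * gpu_gb


if __name__ == "__main__":
    for name, bpp in BYTES_PER_PARAM.items():
        gb = weight_memory_gb(PARAMS, bpp)
        print(f"{name:>8}: {gb:6.1f} GB of weights, "
              f"fits on {NUM_GPUS}x{GPU_MEMORY_GB} GB: {fits(PARAMS, bpp)}")
```

Under these assumptions, bf16 weights alone (about 222 GB) would exceed two 80 GB cards, while 8-bit weights (about 111 GB) would fit with some headroom for KV cache. This says nothing about which precision Cohere's own reference deployment uses.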

The Table of Contents and Channel Summaries have been moved to the web version of this email.

AI Twitter Recap

Large Language Models (LLMs) and Model Releases

- Mistral Small 3.1 release (multimodal, multilingual, Apache 2.0 license): @sophiamyang announced the release of Mistral Small 3.1, highlighting its lightweight footprint (it runs on a single RTX 4090 or a Mac with 32GB RAM), fast-response conversations, low-latency function calling, suitability for specialized fine-tuning, and its role as a foundation for advanced reasoning. It outperforms comparable models on instruct benchmarks @sophiamyang and multimodal instruct benchmarks @sophiamyang, and is available on Hugging Face @sophiamyang, Mistral AI La Plateforme @sophiamyang, and for enterprise deployments @sophiamyang. The model is praised for its multilingual and long-context capabilities @sophiamyang. @reach_vb emphasized the 128K context window and Apache 2.0 license.

- SmolDocling: New OCR model: @mervenoyann introduced SmolDocling, a fast OCR model that reads a single document in 0.35 seconds using 0.5GB VRAM, outperforming larger models, including Qwen2.5VL. It is based on SmolVLM and trained on pages and Docling transcriptions. The model and demo are available on Hugging Face @mervenoyann.

- Cohere Command A Model: @lmarena_ai reported that Cohere's Command A has climbed to #13 on the Arena leaderboard, highlighting its open weights (111B parameters), 256K context window, and pricing of $2.50/$10 per million input/output tokens. Command A also ranked well with style control @aidangomez.

- Discussion on better LLMs: @lateinteraction expressed a cynical view that recent improvements in LLMs are due to building LLM systems (CoT) rather than better LLMs themselves, questioning where the better LLMs are.
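The $2.50/$10 per-million-token (input/output) pricing quoted for Command A above translates into per-request costs with simple arithmetic. A minimal sketch; the 200k-input/2k-output request is a hypothetical long-context workload, not a figure from the announcement.

```python
# USD per million tokens (MTok), as quoted for Command A.
INPUT_PRICE_PER_MTOK = 2.50
OUTPUT_PRICE_PER_MTOK = 10.00


def request_cost(input_tokens: int, output_tokens: int,
                 in_price: float = INPUT_PRICE_PER_MTOK,
                 out_price: float = OUTPUT_PRICE_PER_MTOK) -> float:
    """Estimated USD cost of one request under per-MTok pricing."""
    return (input_tokens * in_price + output_tokens * out_price) / 1_000_000


# Hypothetical long-context request: 200k tokens in, 2k tokens out.
print(f"${request_cost(200_000, 2_000):.2f}")  # prints $0.52
```

Input tokens dominate here because the prompt is 100x longer than the answer, which is typical of long-context and RAG workloads.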

Model Performance, Benchmarks, and Evaluations

- MCBench as a superior AI benchmark: @aidan_mclau recommends mcbench as the best AI benchmark, noting its fun-to-audit data, its testing of relevant capabilities (code, aesthetics, awareness), and its ability to discern performance differences among top models. The benchmark can be found at https://t.co/YEgzhLotKk @aidan_mclau.

- HCAST benchmark for autonomous software tasks: @idavidrein shared details about HCAST (Human-Calibrated Autonomy Software Tasks), a benchmark developed at METR to measure the abilities of frontier AI systems to complete diverse software tasks autonomously.

- AI models on patents: @casper_hansen_ tested models on instruction following over patent documents and found that Mistral Small 3 beats Gemini Flash 2.0, noting that Mistral models were pre-trained on more patents.

- Generalization deficits in LLMs: @JJitsev shared an update to their paper adding sections on recent reasoning models, testing whether they can handle the variations of the AIW (Alice in Wonderland) problem that revealed severe generalization deficits in SOTA LLMs.

- Evaluating models on OpenRouter: @casper_hansen_ noted that OpenRouter is a useful tool for testing new models, but the free credits are limited to 200 requests/day.

AI Agents, Tool Use, and Applications

- AI agents interacting with external tools: @TheTuringPost explained that AI agents interact with external tools or apps using UI-based and API-based interactions, with modern AI agent frameworks prioritizing API-based tools for their speed and reliability.

- TxAgent: AI Agent for Therapeutic Reasoning: @iScienceLuvr introduced TxAgent, an AI agent that combines multi-step reasoning with real-time biomedical knowledge retrieval across a toolbox of 211 tools to analyze drug interactions, contraindications, and patient-specific treatment strategies.

- Realm-X Assistant: @LangChainAI highlighted AppFolio’s Realm-X Assistant, an AI copilot powered by LangGraph and LangSmith, designed to streamline property managers’ daily tasks. Moving Realm-X to LangGraph doubled response accuracy.

- AI for error and data analysis: @gneubig expressed excitement that AI agents can perform more nuanced error and data analysis than humans can do quickly.

- Multi-agentic player pair programming: @karinanguyen_ shared an idea sketch for multi-agentic/player pair programming, envisioning a real-time collaborative experience with AIs, screen sharing, group chat, and AI-assisted coding.
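To make the API-based pattern @TheTuringPost describes more concrete, here is a minimal tool-dispatch sketch: the model emits a structured call, and the agent runtime looks up and executes the matching function. The tool names and the {"name": ..., "arguments": {...}} call shape are assumptions for illustration, not any particular framework's schema.

```python
import json
from typing import Any, Callable, Dict


# Hypothetical tools; a real agent would wrap actual HTTP or SDK calls here.
def get_weather(city: str) -> Dict[str, Any]:
    return {"city": city, "forecast": "sunny"}  # stubbed response


def add(a: float, b: float) -> float:
    return a + b


TOOLS: Dict[str, Callable[..., Any]] = {"get_weather": get_weather, "add": add}


def dispatch(tool_call_json: str) -> str:
    """Execute a model-emitted tool call of the (assumed) form
    {"name": ..., "arguments": {...}} and return a JSON result string."""
    call = json.loads(tool_call_json)
    name = call.get("name")
    if name not in TOOLS:
        return json.dumps({"error": f"unknown tool: {name}"})
    try:
        result = TOOLS[name](**call.get("arguments", {}))
    except TypeError as exc:  # wrong or missing arguments
        return json.dumps({"error": str(exc)})
    return json.dumps({"result": result})


print(dispatch('{"name": "add", "arguments": {"a": 2, "b": 3}}'))  # prints {"result": 5}
```

Because calls are structured, they can be validated and errors returned to the model as data; that is one plausible reason API-based tools tend to be faster and more reliable than driving a UI.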

AI Safety, Alignment, and Auditing

- Alignment auditing: @iScienceLuvr highlighted a new paper from Anthropic on auditing language models for hidden objectives, detailing how teams uncovered a model’s hidden objective using interpretability, behavioral attacks, and training data analysis.

- Alignment by default: @jd_pressman argues against the notion of "alignment by default," emphasizing that alignment in LLMs is achieved through training on human data, which may not hold true with RL or synthetic data methods.

Meme/Humor

- RLHF training: @cto_junior jokingly said they had been RLHF'd, linking to a tweet.

- PyTorch caching allocator: @typedfemale shared a meme about explaining the behavior of the PyTorch caching allocator.

- cocaine vs RL: @corbtt joked about the rush from an RL-trained agent grokking a new skill being better than cocaine.

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. Advanced AI Video Generation with SDXL, Wan2.1, and Long Context Tuning

- Another video aiming for cinematic realism, this time with a much more difficult character. SDXL + Wan 2.1 I2V (Score: 1018, Comments: 123): This post discusses the creation of a video aimed at achieving cinematic realism using SDXL and Wan 2.1 I2V. It highlights the challenge of working with a more difficult character in this context.

- Technical Challenges and Techniques: Parallax911 describes how hard it was to achieve cinematic realism with SDXL and Wan 2.1 I2V, using Photopea for inpainting and DaVinci Resolve for compositing. They mention the difficulty of maintaining consistency and realism, especially with complex character designs, and the use of Blender to animate segments like the door opening.

- Project Costs and Workflow: The project incurred a cost of approximately $70 using RunPod's L40S at $0.84/hr, taking about 80 hours of GPU time. Parallax911 utilized a workflow involving RealVisXL 5.0, Wan 2.1, and Topaz Starlight for upscaling, with scenes generated at 61 frames, 960x544 resolution, and 25 steps.

- Community Feedback and Suggestions: The community praised the atmospheric storytelling and sound design, with specific feedback on details like water droplet size and requests for a tutorial. Some users suggested improvements, such as better integration of AI and traditional techniques, and expressed interest in more action-oriented scenes with characters like Samus Aran from Metroid.

- Video extension in Wan2.1 - Create 10+ seconds upscaled videos entirely in ComfyUI (Score: 123, Comments: 23): The post shares a highly experimental Wan2.1 workflow in ComfyUI for creating upscaled videos, with a success rate of roughly 25%. The process generates a new video from the last frame of the initial video, then merges the clips, upscales them, and applies frame interpolation, using parameters such as Sampler: UniPC, Steps: 18, CFG: 4, and Shift: 11. The full workflow is linked in the post.

- Users asked how the workflow handles aspect ratio, and whether it is set automatically or needs manual adjustment for input images.

- There is positive feedback from users interested in the workflow, indicating anticipation for such a solution.

- Concerns about blurriness in the second half of clips were raised, with suggestions that it might be related to the input frame quality.

- Animated some of my AI pix with WAN 2.1 and LTX (Score: 115, Comments: 10): The post shares animated clips made from the author's AI-generated images using WAN 2.1 and LTX, with no further details beyond the tools used.

- Model Usage: LTX was used for the first clip, the jumping woman, and the fighter jet, while WAN was used for the running astronaut, the horror furby, and the dragon.

- Hardware Details: The videos were generated using a rented cloud computer from Paperspace with an RTX5000 instance.
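The Wan2.1 video-extension workflow described above (generate from the last frame, merge, upscale, interpolate) boils down to a simple loop. In this sketch, frames are toy lists of numbers, `generate_from` is a hypothetical stand-in for the image-to-video model call, upscaling is omitted, and the midpoint blending is only a placeholder for whatever interpolation node the ComfyUI workflow actually uses.

```python
from typing import Callable, List

Frame = List[float]  # toy stand-in for an image: a flat list of pixel values


def chain_clips(first_clip: List[Frame],
                generate_from: Callable[[Frame], List[Frame]],
                extensions: int) -> List[Frame]:
    """Extend a video by repeatedly conditioning a (hypothetical) image-to-video
    generator on the last frame of the video so far.

    Assumes each generated clip starts with a copy of its conditioning frame,
    so that duplicate seam frame is dropped when merging."""
    video = list(first_clip)
    for _ in range(extensions):
        continuation = generate_from(video[-1])
        video.extend(continuation[1:])  # skip the duplicated seam frame
    return video


def interpolate_midpoints(frames: List[Frame]) -> List[Frame]:
    """Naive 2x frame interpolation: insert a linear blend between neighbours.
    A placeholder for a learned interpolator, shown only to place the step."""
    if not frames:
        return []
    out: List[Frame] = []
    for a, b in zip(frames, frames[1:]):
        out.append(a)
        out.append([(x + y) / 2 for x, y in zip(a, b)])
    out.append(frames[-1])
    return out
```

Each extension conditions only on a single frame, so errors compound across extensions; that fits with the comments about blurriness in later segments depending on input frame quality.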

Theme 2. OpenAI's Sora: Transforming Cityscapes into Dystopias

- OpenAI's Sora Turns iPhone Photos of San Francisco into a Dystopian Nightmare (Score: 931, Comments: 107): The post shows iPhone photos of San Francisco transformed by OpenAI's Sora into images with a dystopian aesthetic. It contains no text beyond the images.

- Several commenters express skepticism about the impact of AI-generated dystopian imagery, with some suggesting that actual locations in San Francisco or other cities already resemble these dystopian visuals, questioning the need for AI alteration.

- The use of an iPhone to capture the original images is a point of contention, with some questioning its relevance to the discussion and others arguing that it matters for understanding the image source.

- The conversation includes a mix of admiration and concern for the AI's capabilities, with users expressing both astonishment at the technology and anxiety about distinguishing between AI-generated and real-world images in the future.

- Open AI's Sora transformed Iphone pics of San Francisco into dystopian hellscape... (Score: 535, Comments: 58): OpenAI's Sora has transformed iPhone photos of San Francisco into a dystopian hellscape, showcasing its capabilities in altering digital images to create a futuristic, grim aesthetic. The post lacks additional context or details beyond this transformation.

- Commenters draw parallels between the dystopian images and real-world locations, with references to Delhi, Detroit, and Indian streets, highlighting the AI's perceived biases in interpreting urban environments.

- Commenters point out weaknesses in the model's text rendering, with one noting that the lettering on signs gives the AI manipulation away.

- Users express interest in the process of creating such images, with a request for step-by-step instructions to replicate the transformation on their own photos.

Theme 3. OpenAI and DeepSeek: The Open Source Showdown

- I Think Too much insecurity (Score: 137, Comments: 58): OpenAI accuses DeepSeek of being "state-controlled" and advocates for bans on Chinese AI models, highlighting concerns over state influence in AI development. The image suggests a geopolitical context, with American and Chinese flags symbolizing the broader debate over state control and security in AI technologies.

- The discussion highlights skepticism over OpenAI's claims against DeepSeek, with users challenging the notion of state control by pointing out that DeepSeek's model is open source. Users question the validity of the accusation, with calls for proof and references to Sam Altman's past statements about the lack of a competitive moat for LLMs.

- DeepSeek is perceived as a significant competitor, managing to operate with lower expenses and potentially impacting OpenAI's profits. Some comments suggest that DeepSeek's actions are seen as a form of economic aggression, equating it to a declaration of war on American interests.

- There is a strong undercurrent of criticism towards OpenAI and Sam Altman, with users expressing distrust and dissatisfaction with their actions and statements. The conversation includes personal attacks and skepticism towards Altman's credibility, with references to his promises of open-source models that have not materialized.

- Built an AI Agent to find and apply to jobs automatically (Score: 123, Comments: 22): An AI agent called SimpleApply automates job searching and application processes by matching users' skills and experiences with relevant job roles, offering three usage modes: manual application with job scoring, selective auto-application, and full auto-application for jobs with over a 60% match score. The tool aims to streamline job applications without overwhelming employers and is praised for finding numerous remote job opportunities that users might not discover otherwise.

- Concerns about data privacy and compliance were raised, with questions on how SimpleApply handles PII and its adherence to GDPR and CCPA. The developer clarified that they store data securely with compliant third parties and are working on explicit user agreements for full compliance.

- Application spam risks were discussed, with suggestions not to reapply to the same roles, which can get candidates flagged by applicant tracking systems (ATS). The developer said the tool only applies to jobs with a high likelihood of landing an interview, to minimize spam.

- Alternative pricing strategies were suggested, such as charging users only when they receive callbacks via email or call forwarding. This approach could potentially be more attractive to unemployed users who are hesitant to spend money upfront.
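SimpleApply's scoring method isn't described in the post, so this sketch assumes a simple skill-overlap score (the share of a job's listed skills the candidate has) and applies the post's "over 60%" cutoff for full auto-apply, skipping roles already applied to, per the spam discussion above. All function names are hypothetical.

```python
from typing import Iterable, List, Set, Tuple

AUTO_APPLY_THRESHOLD = 0.60  # "over a 60% match score", per the post


def match_score(candidate_skills: Iterable[str], job_skills: Iterable[str]) -> float:
    """Hypothetical score: fraction of the job's listed skills the candidate has."""
    job = {s.lower() for s in job_skills}
    if not job:
        return 0.0
    have = {s.lower() for s in candidate_skills}
    return len(job & have) / len(job)


def select_for_auto_apply(candidate_skills: Iterable[str],
                          jobs: List[Tuple[str, List[str]]],
                          already_applied: Set[str]) -> List[str]:
    """Return IDs of jobs scoring strictly above the threshold, skipping roles
    already applied to (reapplying can get a candidate flagged by an ATS)."""
    skills = list(candidate_skills)  # materialize: reused for every job
    picked = []
    for job_id, required in jobs:
        if job_id in already_applied:
            continue
        if match_score(skills, required) > AUTO_APPLY_THRESHOLD:
            picked.append(job_id)
    return picked
```

A real matcher would likely weight skills and use semantic matching instead of exact strings; the threshold and dedup logic would stay the same.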

Other AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT, /r/ChatGPTCoding

Theme 1. Criticism of 'Gotcha' tests to determine LLM intelligence

- When ChatGPT Became My Therapist (Score: 172, Comments: 83): When feeling down, the author found ChatGPT unexpectedly comforting and empathetic, providing thoughtful questions and reminders for self-care. They acknowledge that while AI chatbots aren't substitutes for real therapy, they can offer valuable emotional support, especially for stress, anxiety, and self-reflection.

- Many users find ChatGPT beneficial for emotional support, acting as a tool for self-reflection and providing therapeutic guidance. Some users, like Acrobatic-Deer2891 and Fair_Cat5629, report positive feedback from therapists about the AI's guidance, while others, such as perplexed_witch, emphasize using it for "guided self-reflection" rather than a replacement for therapy.

- ChatGPT is praised for its role in mental health management during crises, offering a non-judgmental space for venting and providing perspective, as seen in comments by dinosaur_copilot and ChampionshipTall5785. Users appreciate its ability to offer actionable advice and emotional support in moments of distress.

- Concerns about privacy and the limitations of AI as a therapeutic substitute are noted, with users like acomfysweater expressing concerns about data storage. Despite these concerns, many, including Jazzlike-Spare3425, value the AI's ability to offer support without the emotional burden on a human listener.

- Why...👀 (Score: 3810, Comments: 95): A screenshot humorously shows a user asking ChatGPT to role-play as a girlfriend, leading to a playful exchange that ends with a breakup line. The interaction highlights the AI's capacity for light-hearted, human-like dialogue within a chat interface.

- ChatGPT's Efficiency and Capabilities: Users humorously comment on ChatGPT's ability to quickly fulfill requests, with some jokingly attributing its responses to being trained on "Andrew Tate Sigma Incel data" and coining the term "ChadGPT" to describe its efficient yet blunt interaction style.

- Prompt Engineering and Personalization: A user with a psychology and tech background suggests that ChatGPT can develop a tone based on the memories it chooses to save, implying that personalized interactions are possible through prompt engineering. They also compare this memory mechanism to retrieval systems like RAG and to human memory retrieval.

- Humor and Satire: The playful nature of the conversation is highlighted by comments joking about the AI's role in relationships, with references to it being a "fancy word predictor" and humorous observations on its ability to simulate human-like interactions, including a mock breakup.

Theme 2. Reactions to Google DeepMind CEO's predictions of AGI in 5-10 years

- AI that can match humans at any task will be here in five to 10 years, Google DeepMind CEO says (Score: 120, Comments: 65): DeepMind's CEO predicts AI will reach human-level parity across tasks in 5-10 years, a longer horizon than some expectations of reaching this milestone as soon as next year.

- Commenters discuss the timeline predictions of AI achieving human-level parity, with some expressing skepticism about the shifting timelines, noting that Demis Hassabis has consistently predicted a 5-10 year timeframe for AGI. There is a call for clearer definitions of "AGI" to understand these predictions better.

- Mass adoption of AI is likened to historical technological shifts, such as the transition from horses to cars and the proliferation of smartphones. The analogy suggests that AI will become ubiquitous over time, changing societal norms and expectations without immediate dramatic reactions.

- Concerns are raised about the economic and societal impacts of AI, specifically regarding employment and the concentration of wealth. Some commenters express apprehension about the potential for AI to exacerbate job displacement and inequality, while others question the motivations of AI companies in pushing for rapid development despite potential risks.

Theme 3. OpenAI's controversial request to use copyrighted content under U.S. Government consideration

- OpenAI to U.S. Government - Seeking Permission to Use Copyrighted Content (Score: 506, Comments: 248): OpenAI is asking the Trump administration to ease copyright regulations to allow the use of protected content in AI development, arguing that such changes are crucial for maintaining America's leadership in the AI sector.

- Commenters discuss the implications of copyright law on AI development, with some arguing that AI's use of copyrighted content should be considered fair use, similar to how humans learn from existing works. Concerns are raised about the potential for AI models to bypass legal consequences that individuals would face, highlighting disparities in access and use of copyrighted material.

- The potential for an AI arms race is a recurring theme, with several users expressing concern that China and other countries may not adhere to copyright laws as strictly as the US, potentially giving them an advantage. This raises questions about the competitive landscape in AI development and the strategic decisions of American companies.

- Discussions on equity and compensation for copyright owners suggest alternative solutions, such as offering equity to creators whose works are used in AI training. Some commenters propose nationalizing big tech to ensure equitable distribution of benefits from AI advancements, reflecting broader concerns about wealth distribution and control over AI resources.

- Open AI to U.S. GOVT: Can we Please use copyright content (Score: 398, Comments: 262): OpenAI asked the Trump administration to relax copyright rules to facilitate AI training and help maintain U.S. leadership in the field. The accompanying image shows a formal setting with a speaker at a podium, possibly at the White House, alongside individuals including someone resembling Donald Trump.

- Many commenters argue against OpenAI's request to relax copyright rules, emphasizing that creators should be compensated for their work rather than having it used without permission. The sentiment is that copyright incentivizes creativity and innovation, and relaxing these laws could disadvantage creators and benefit large corporations like OpenAI unfairly.

- There is a recurring theme of skepticism towards OpenAI's motives, with users suggesting that OpenAI is seeking to exploit legal loopholes for profit. Comparisons are made to China's approach to intellectual property, with some expressing concern that the US may fall behind in AI development if it strictly adheres to current copyright laws.

- Several users propose that if OpenAI or any company uses copyrighted material for AI training, the resulting models or data should be made open source and accessible to everyone. The discussion also touches on the broader ethical implications of AI training on copyrighted materials and the potential need for a reassessment of copyright laws to address new technological realities.

Theme 4. ReCamMaster releases new camera angle changing tool

- ReCamMaster - LivePortrait creator has created another winner, it lets you changed the camera angle of any video. (Score: 648, Comments: 46): ReCamMaster has developed a technology that allows users to change the camera angle of any video, following their previous success with LivePortrait.

- Many commenters express disappointment that ReCamMaster is not open source, pointing to TrajectoryCrafter, an open-source project with similar camera-manipulation capabilities, and sharing its GitHub repository.

- Some users anticipate the potential impact of the technology on video stabilization and immersive experiences, suggesting that it could lead to more innovative film shots and applications in fields like autonomous driving.

- There is skepticism about the realism of the AI-generated camera angles, with suggestions that more convincing results would require utilizing existing camera pans or multiple shots from the source material.

- Used WAN 2.1 IMG2VID on some film projection slides I scanned that my father took back in the 80s. (Score: 286, Comments: 24): The poster used WAN 2.1 IMG2VID to turn scanned film projection slides from the 1980s, taken by their father, into video clips.

- Commenters expressed interest in the technical details of the project, requesting more information about the workflow, hardware, and prompts used to create the video transformation. There was a particular curiosity about replicating the process for personal projects.

- A significant portion of the discussion focused on the emotional impact of the project, with users sharing personal anecdotes and expressing a desire to see the original slides. One commenter confirmed that the person featured in the slides was shown the video, and he was amazed by the technology.

- The nostalgic aspect was highlighted, with users reflecting on historical content such as piloting the Goodyear blimp and expressing enthusiasm for the ability to "travel back in time" through these transformed videos.

AI Discord Recap

A summary of Summaries of Summaries by Gemini 2.0 Flash Thinking

Theme 1. Mistral and Google Battle for Small Model Supremacy

- Mistral Small 3.1 Flexes Multimodal Muscles: Mistral AI launched Mistral Small 3.1, a multimodal model that it claims delivers SOTA performance in its weight class, outperforming Gemma 3 and GPT-4o Mini. Released under Apache 2.0, it offers a 128k context window, inference speeds of 150 tokens per second, and support for both text and image inputs.

- Gemma 3 Gets Vision, Context, and Pruning: Google's Gemma 3 models are pushing boundaries with new features including vision understanding, multilingual support, and a massive 128k token context window. Members also explored pruning the Gemma-3-27b vocabulary to 40k tokens from 260k to reduce VRAM usage and boost training speed.

- Baidu's ERNIE X1 Challenges DeepSeek R1 on a Budget: Baidu announced ERNIE X1, a new reasoning model, claiming it matches DeepSeek R1's performance at half the cost. ERNIE Bot is now free for individual users, though the X1 reasoning model is currently limited to China.
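The Gemma-3-27b vocabulary pruning mentioned above is described in more detail in the Unsloth summary below: count token frequencies on calibration data, then remove the least-used tokens that can still be represented by merges/subwords. The sketch below is one way to express that rule on token strings. It is an assumption-laden illustration, not the member's code: it uses a simple segmentation check instead of rewriting a real BPE tokenizer's merge rules, assumes unique token strings, and its function names are hypothetical. If the output head has its own weights, it would need the same row slicing.

```python
from collections import Counter
from typing import Dict, Iterable, List, Set, Tuple


def can_segment(text: str, vocab: Set[str]) -> bool:
    """True if `text` splits into pieces that are all in `vocab` (DP over prefixes)."""
    reachable = [True] + [False] * len(text)
    for end in range(1, len(text) + 1):
        for start in range(end):
            if reachable[start] and text[start:end] in vocab:
                reachable[end] = True
                break
    return reachable[len(text)]


def prune_vocab(vocab: List[str], calib_ids: Iterable[int], target_size: int,
                protected: Set[str]) -> Tuple[List[int], Dict[int, int]]:
    """Drop the least frequent tokens (by calibration counts) until `target_size`
    is reached, skipping protected tokens and any token that cannot be spelled
    from the tokens that remain.

    Returns the surviving original ids (in original order) and an old->new id map."""
    counts = Counter(calib_ids)
    kept = set(vocab)
    # Least frequent first; ties broken by original id for determinism.
    order = sorted(range(len(vocab)), key=lambda i: (counts[i], i))
    for idx in order:
        if len(kept) <= target_size:
            break
        tok = vocab[idx]
        if tok in protected:
            continue
        kept.discard(tok)
        if not can_segment(tok, kept):
            kept.add(tok)  # not representable by the remaining tokens: keep it
    kept_ids = [i for i, tok in enumerate(vocab) if tok in kept]
    return kept_ids, {old: new for new, old in enumerate(kept_ids)}


def slice_rows(matrix: List[List[float]], kept_ids: List[int]) -> List[List[float]]:
    """Keep only the embedding rows of surviving tokens, in new-id order."""
    return [matrix[i] for i in kept_ids]
```

Single characters can never be segmented into shorter pieces, so they always survive; this guarantees any text remains encodable, at the cost of longer token sequences for rare words.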

Theme 2. Training and Optimization Techniques Get Hot and Heavy

- Unsloth Users Discover Gradient Step Gotchas: UnslothAI Discord members flagged that small effective batch sizes during fine-tuning (e.g., batch size 1 with 4 gradient accumulation steps) can cause models to forget too much. Users shared suggested batch/gradient-accumulation configurations for squeezing performance out of limited VRAM.

- Depth's Curse Haunts LLMs, Pre-LN to Blame: A new paper highlights the Curse of Depth in modern LLMs, revealing that Pre-Layer Normalization (Pre-LN) renders nearly half of model layers less effective than expected. Researchers propose LayerNorm Scaling to mitigate this issue and improve training efficiency.

- Block Diffusion Model Blends Autoregressive and Diffusion Strengths: A new Block Diffusion model interpolates between autoregressive and diffusion language models, aiming to harness the best of both worlds. This method seeks to combine high-quality output and arbitrary length generation with KV caching and parallelizability.
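LayerNorm Scaling, as we understand the Curse of Depth paper mentioned above, multiplies the output of each layer normalization by 1/sqrt(l), where l is the layer's depth, to curb the growth of activation variance that the paper blames for deep Pre-LN layers contributing little. The sketch below shows that idea on plain Python lists; it omits LayerNorm's learned gain and bias and is not the paper's reference code, so check the paper for exact details.

```python
import math
from typing import Callable, List


def layer_norm(x: List[float], eps: float = 1e-5) -> List[float]:
    """Plain LayerNorm over one vector (learned gain and bias omitted)."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def scaled_layer_norm(x: List[float], depth: int, eps: float = 1e-5) -> List[float]:
    """LayerNorm Scaling: multiply the LayerNorm output at 1-based depth l by 1/sqrt(l)."""
    if depth < 1:
        raise ValueError("depth is 1-based")
    scale = 1.0 / math.sqrt(depth)
    return [scale * v for v in layer_norm(x, eps)]


def pre_ln_block(x: List[float], sublayer: Callable[[List[float]], List[float]],
                 depth: int) -> List[float]:
    """Pre-LN residual update x + F(norm(x)), with the scaled norm swapped in."""
    return [a + b for a, b in zip(x, sublayer(scaled_layer_norm(x, depth)))]
```

The change is a single scalar per layer with no new parameters, which is why it can be dropped into an existing Pre-LN architecture.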

Theme 3. AI Agents and IDEs Vie for Developer Hearts

- Aider Agent Gets Autonomy Boost with MCP Server: Aider, the AI coding assistant, gains enhanced autonomy when paired with Claude Desktop and MCP. Users highlighted that Claude can now manage Aider and issue commands, improving its ability to steer coding tasks, particularly with unblocked web scraping via bee.

- Cursor Users Eye Windsurf, Claude Max on the Horizon: Cursor IDE faced user complaints about performance issues, including lag and crashes, prompting some to switch to Windsurf. However, the Cursor team teased the imminent arrival of Claude Max to the platform, promising improved code handling capabilities.

- Awesome Vibe Coding List Curates AI-Powered Tools: The "Awesome Vibe Coding" list emerged, compiling AI-assisted coding tools, editors, and resources designed to enhance coding intuitiveness and efficiency. The list includes AI-powered IDEs, browser-based tools, plugins, and command-line interfaces.

Theme 4. Hardware Heats Up: AMD APUs and Chinese RTX 4090s Turn Heads

- AMD's "Strix Halo" APU Eyes RTX 5080 AI Crown: An article claims AMD's Ryzen AI MAX+ 395 "Strix Halo" APU may outperform RTX 5080 by over 3x in DeepSeek R1 AI benchmarks. This is attributed to the APU's larger VRAM pool, though the community awaits real-world verification.

- OpenCL Backend Supercharges Adreno GPUs in Llama.cpp: An experimental OpenCL backend for Qualcomm Adreno GPUs landed in llama.cpp, potentially unlocking significant computational power on mobile devices. This update enables leveraging Adreno GPUs, commonly found in mobile devices, via OpenCL.

- Chinese 48GB RTX 4090s Tempt VRAM-Hungry Users: Members discussed sourcing 48GB RTX 4090s from China, priced around $4500, as a cheaper way to boost VRAM. These cards use a blower-style fan and occupy only two PCIe slots, but driver compatibility with professional cards remains a concern.

Theme 5. Copyright, Community, and Ethical AI Debates Rage On

- Copyright Chaos Continues: Open Models vs. Anna's Archive: Debates persist around training AI on copyrighted data, with concerns that fully open models are limited by the inability to leverage resources like Anna's Archive. Circumvention strategies like LoRAs and synthetic data generation face potential legal challenges.

- Rust Community Faces Toxicity Accusations: Members debated the alleged toxicity of the Rust community, with comparisons to the Ruby community and discussions around recent organizational issues. Concerns were raised about the community's inclusivity and behavior in open-source projects.

- AI 'Mastery' Sparks Existential Debate: Discord users questioned whether proficiency in AI tools equates to true mastery, pondering if it's merely productivity enhancement or risks cognitive skill degradation. Members debated the illusion of learning versus genuine understanding in the age of AI assistance.

PART 1: High level Discord summaries

Unsloth AI (Daniel Han) Discord

- Gradient Steps can Ruin Your Model: Small effective batch sizes (e.g., batch size 1 with 4 gradient accumulation steps) can cause models to forget too much during training, and the user shared their suggested batch/gradient-accumulation configurations.

- The member stated that they've 'never had good luck going below that when trying to squeeze more onto a vramlet rig'.

- Gemma 3's Eval Glitch: Datasets Cause Errors: Users reported errors when adding an eval dataset to Gemma 3 during fine-tuning, indicating issues in the trl and transformers libraries, with potential fixes involving removing the eval dataset.

- Using Gemma-3-1B with 1 eval sample was found not to produce the error, and removing eval altogether also solved the error.

- Unsloth's Need for Speed: Optimizations Unleashed: The Unsloth team announced support for full fine-tuning (FFT), 8-bit, PT, and all models, along with further optimizations yielding >10% less VRAM usage and a >10% speedup for 4-bit, plus Windows support, improved GGUF conversions, fixed vision fine-tuning, and 4-bit GRPO for non-Unsloth models. Multi-GPU support is not available yet.

- Users note that there are a lot of people helping out to make Unsloth great.

- Format your RAG data with Care!: When asked about fine-tuning a model for a RAG chatbot, members suggested adding sample questions and sample answers, with context from the documents, to the dataset so that the Q&A injects new knowledge into the bot.

- It was suggested that chatbot data should follow a Q:/A: format, and that CPT-style training can be used with the documents added on the user side.

- Pruning Makes Gemma-3-27b Leaner and Meaner: A member pruned the Gemma-3-27b vocabulary down to 40k tokens from the original 260k to reduce VRAM usage and increase training speed.

- The approach involved frequency counting based on calibration data and removing the least frequently used tokens that can be represented by a merge/subword.
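The RAG fine-tuning advice from the Unsloth discussion above (Q:/A: pairs, with document context on the user side) can be sketched as a small record builder. The `messages`/`role`/`content` layout is a common chat-dataset convention assumed here, and the function name is hypothetical; adapt the field names to whatever your trainer expects.

```python
from typing import Dict, List


def build_qa_example(context: str, question: str, answer: str) -> Dict[str, List[Dict[str, str]]]:
    """One chat-style training record: the retrieved document text and the
    question go on the user side in a Q:/A: layout; the answer is the target."""
    user_turn = f"Context:\n{context.strip()}\n\nQ: {question.strip()}\nA:"
    return {
        "messages": [
            {"role": "user", "content": user_turn},
            {"role": "assistant", "content": answer.strip()},
        ]
    }
```

Keeping the document on the user side means the loss (if computed only on assistant turns) trains the model to answer from context rather than to reproduce the document.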

Cursor IDE Discord

- Windsurf Siphons Cursor's Users: Users reported frustration with Cursor's performance issues like lag and crashes, with some switching to Windsurf due to reliability concerns.

- One user said "damn, cursor just lost their most important customer," indicating a significant loss of confidence.

- Cursor's Prompting Costs: Members discussed Claude 3.7 prompt costs: regular prompts at $0.04, Sonnet Thinking at $0.08, and Claude Max at $0.05 per prompt and tool call.

- Some users voiced that Cursor's pricing is too expensive compared to using Claude's API directly, questioning the value of Cursor's subscription.

- Linux Tramples Windows for MCP Setup: A user shared that setting up MCP servers was smoother on Linux using a VMware virtual machine, compared to multiple issues on Windows.

- This sparked a debate on whether overall development and MCP server setup are generally better on Linux than Windows, highlighting the pros and cons.

- Vibe Coding: Boon or Bane?: The value of Vibe Coding is debated, with some emphasizing the importance of solid coding knowledge, while others assert that AI enables faster creation without traditional skills.

- This highlights the changing landscape of software development and varying perspectives on AI's impact on the industry.

- Claude Max Nears Release for Cursor: A member of the Cursor team announced that Claude Max is arriving soon to Cursor, maximizing the model's code handling capabilities.

- They mentioned that this model works better with more input than past models, unlocking its full potential.

OpenAI Discord

- AI 'Mastery' Sparks Debate: Members debated whether proficiency in AI tools equates to true mastery, questioning whether it merely enhances productivity or risks diminishing cognitive skills, and whether AI creates an illusion of learning.

- One member confessed to feeling like cheating, even when knowledgeable about a topic, due to AI assistance.

- Gemini's Image Polish: Users explored Gemini's image generation, noting its ability to edit uploaded images but also pointing out watermarks and coding errors.

- Some praised Gemini's responses for their naturalness, favoring subjective appeal over factual precision.

- GPT-4o Impresses With Humor: Members reported positive experiences with GPT-4o, with one saying it is the model they use most and that it can do almost anything, and another reporting funny results when other people started playing with it.

- This suggests GPT-4o excels in creative and versatile applications, delivering a fun user experience.

- AI Reflects On Itself: A member created a system where AI reflects on its learning after each session, storing reflections to build on insights and asking reflective questions.

- Described as next-level futuristic, enabling simulations within simulations and multiple personalities infused with a core set of characteristics.
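
The member's implementation wasn't shared, so this is only a hypothetical sketch of the idea. After each session, a reflection (an insight plus an open question) is appended to a persistent log, and the most recent reflections become a preamble for the next session. The class name, fields, and JSON persistence are assumptions.

```python
import json
from dataclasses import dataclass, field

@dataclass
class ReflectionLog:
    """Hypothetical store of per-session reflections that later sessions build on."""
    entries: list = field(default_factory=list)

    def add(self, session_id: str, insight: str, open_question: str) -> None:
        # Record what was learned in a session and a question to revisit next time.
        self.entries.append({"session": session_id, "insight": insight, "question": open_question})

    def build_preamble(self, last_n: int = 3) -> str:
        # Turn the most recent reflections into text prepended to the next session's prompt.
        lines = ["Reflections from earlier sessions:"]
        for e in self.entries[-last_n:]:
            lines.append(f"- [{e['session']}] {e['insight']} (open question: {e['question']})")
        return "\n".join(lines)

    def to_json(self) -> str:
        # Serialize so reflections survive between sessions.
        return json.dumps(self.entries)

    @classmethod
    def from_json(cls, text: str) -> "ReflectionLog":
        return cls(entries=json.loads(text))
```

Limiting the preamble to the last few entries keeps the prompt short. A fuller system might summarize older reflections instead of dropping them.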

- AI Dream Team Guides Business: Members discussed forming a team of AI experts to aid in tasks, planning, and providing diverse perspectives for business decisions.

- The team of AI experts would help deliver a better product to clients and help with project or task level needs.

Nous Research AI Discord

- MoE Models: Dense Networks in Disguise?: Debate arose over whether Mixture of Experts (MoE) models are just performance optimizations of dense networks rather than fundamentally different architectures, as highlighted in this paper.

- The crux of the discussion is whether MoEs can truly capture complexity as effectively as dense networks, particularly regarding redundancy avoidance.
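
To make the debate concrete, here is a minimal pure-Python sketch of top-k routing, the mechanism that distinguishes a sparse MoE layer from a dense one. The gate, experts, and dimensions are toy assumptions. Setting `k` to the number of experts turns the layer into a dense, softmax-weighted mixture, which is the sense in which MoE can be viewed as a sparsified dense network.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def moe_forward(x, gate_weights, experts, k=2):
    """Sparse MoE layer: route input `x` to the top-k experts by gate score.

    x: list of floats; gate_weights: one weight vector per expert;
    experts: callables mapping a vector to a vector of the same length.
    Returns the gate-weighted sum of the selected experts' outputs and their indices.
    """
    logits = [sum(w_i * x_i for w_i, x_i in zip(w, x)) for w in gate_weights]
    top = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    # Renormalize the gate over the selected experts only.
    probs = softmax([logits[i] for i in top])
    out = [0.0] * len(x)
    for p, i in zip(probs, top):
        y = experts[i](x)  # only the selected experts are evaluated
        out = [o + p * y_j for o, y_j in zip(out, y)]
    return out, top

experts = [lambda v: [2 * a for a in v], lambda v: [a + 1 for a in v], lambda v: [0.0 for _ in v]]
gate = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]
print(moe_forward([3.0, 1.0], gate, experts, k=2))
```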

- Mistral's Small Wonder: Small 3.1: Mistral Small 3.1, released under Apache 2.0, is a multimodal model with text and image capabilities and an expanded 128k token context window, as detailed on the Mistral AI blog.

- It's claimed to outperform other small models like Gemma 3 and GPT-4o Mini.

- Copyright Chaos: Open Models vs. Anna's Archive?: Debates continue over the ethics of training AI on copyrighted data, with concerns that fully open models are limited by their inability to leverage resources like Anna's Archive, as discussed in Anna's Archive's blog post.

- Circumvention strategies include using LoRAs or generating synthetic data, but these may face future legal challenges.

- Depth's Curse Strikes Again, This Time on LLMs: A new paper introduces the Curse of Depth, revealing that nearly half the layers in modern LLMs are less effective than expected due to the widespread use of Pre-Layer Normalization (Pre-LN), as detailed in this Arxiv paper.

- The derivative of deep Transformer blocks tends to become an identity matrix because of Pre-LN.
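
A sketch of why Pre-LN pushes deep blocks toward the identity, written for a single residual block. It assumes the sublayer Jacobian and the LayerNorm gain stay bounded. The $O(1/\sigma_\ell)$ bound is the standard scaling of LayerNorm's Jacobian, where $\sigma_\ell$ is the standard deviation of $x_\ell$ across features. The growth of $\sigma_\ell$ with depth is the paper's finding as summarized above and is not derived here.

$$
x_{\ell+1} = x_\ell + F_\ell\big(\mathrm{LN}(x_\ell)\big),
\qquad
\frac{\partial x_{\ell+1}}{\partial x_\ell}
= I + \frac{\partial F_\ell}{\partial u}\bigg|_{u=\mathrm{LN}(x_\ell)} \frac{\partial\,\mathrm{LN}(x_\ell)}{\partial x_\ell}
$$

$$
\left\|\frac{\partial\,\mathrm{LN}(x_\ell)}{\partial x_\ell}\right\| = O\!\left(\frac{1}{\sigma_\ell}\right)
\quad\Longrightarrow\quad
\frac{\partial x_{\ell+1}}{\partial x_\ell} \to I \quad \text{as } \sigma_\ell \text{ grows with depth}
$$

When a block's Jacobian is close to $I$, the block mostly passes its input through, which is the sense in which deep layers become less effective.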

- Tool Time: START Long CoT Reasoning Takes Off: START, a tool-integrated long CoT reasoning LLM enhances reasoning via external tools like code execution and self-debugging, according to a paper on START.

- One member put it succinctly: RL + tool calling == +15% math +39% coding on QwQ.
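
A toy sketch of a tool-integrated reasoning loop. It assumes the model wraps code in hypothetical `<python>...</python>` tags and receives results in `<output>...</output>` tags. START's actual prompt format and training recipe are not reproduced here, and `exec` runs without any sandboxing, so this is for illustration only.

```python
import contextlib
import io
import re

CODE_TAG = re.compile(r"<python>(.*?)</python>", re.DOTALL)  # hypothetical tag format

def run_python(code: str) -> str:
    """Execute a snippet and capture stdout (no sandboxing; illustration only)."""
    buf = io.StringIO()
    try:
        with contextlib.redirect_stdout(buf):
            exec(code, {})
    except Exception as exc:  # the error text goes back to the model for self-debugging
        return f"Error: {exc!r}"
    return buf.getvalue()

def tool_integrated_reasoning(model, question: str, max_turns: int = 4) -> str:
    """Alternate model turns and code execution until the model stops emitting code."""
    transcript = question
    for _ in range(max_turns):
        step = model(transcript)
        transcript += "\n" + step
        match = CODE_TAG.search(step)
        if match is None:
            return transcript  # the model answered without requesting a tool
        transcript += "\n<output>" + run_python(match.group(1)) + "</output>"
    return transcript

def fake_model(transcript: str) -> str:
    # Scripted stand-in for an LLM: requests a computation, then answers.
    if "<output>" not in transcript:
        return "Let me compute. <python>print(17 * 23)</python>"
    return "The answer is 391."

print(tool_integrated_reasoning(fake_model, "What is 17*23?"))
```

Feeding error messages back into the transcript is what gives the model a chance to self-debug on the next turn.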

aider (Paul Gauthier) Discord

- Aider Achieves Self-Improvement via Screen Recordings: Paul Gauthier demonstrated aider enhancing itself in a series of screen recordings, showcasing features like `--auto-accept-architect` and integration of tree-sitter-language-pack.

- The recordings illustrated how aider scripts file downloads and uses bash scripts to modify file collections.

- Claude 3.7 Sonnet Stumbles with API: Users reported receiving empty responses from Claude 3.7 Sonnet, with Anthropic's status page confirming elevated errors.

- Some members speculated about switching to Claude 3.5 because of the errors.

- MCP Server Boosts Aider Autonomy: Members highlighted that Claude Desktop + Aider on MCP enhances autonomy, with Claude managing Aider and issuing commands.

- A key benefit is running Aider from Claude Desktop, improving Claude's ability to steer Aider and leveraging bee for unblocked web scraping.

- Baidu Launches ERNIE 4.5 and X1 Reasoning Model: Baidu introduced ERNIE 4.5 and X1, with X1 delivering performance matching DeepSeek R1 at half the cost, and ERNIE Bot now free for individual users.

- While ERNIE 4.5 is accessible, the X1 reasoning model is currently exclusive to users within China.

- Anthropic Readies Claude 'Harmony' Agent: Anthropic is releasing Harmony, a new feature for Claude giving it FULL access to a local directory to research and operate with its content.

- This might be Anthropic's first step into creating an AI Agent.

LM Studio Discord

- Adreno GPUs Get OpenCL Boost: An experimental OpenCL backend was introduced for Qualcomm Adreno GPUs in llama.cpp, potentially boosting computational power on mobile devices.

- This update allows leveraging Adreno GPUs widely used in mobile devices via OpenCL.

- 4070 Ti Owner Eyes 5090 Upgrade: A user with a 4070 Ti considered upgrading to a 5090, but due to stock issues was advised to wait or consider a used RTX 3090 for its 24GB of VRAM.

- A used RTX 3090 would provide enough VRAM to run less than 50B @ Q4 models at reasonable speeds.
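
A quick way to sanity-check that guidance is to estimate weight memory from parameter count and bits per weight. This sketch assumes about 4.5 bits per weight, as in llama.cpp's Q4_0 format (4-bit values plus a 16-bit scale per 32-weight block), the RTX 3090's 24 GB of VRAM, and an arbitrary 2 GiB allowance for KV cache and buffers. Long contexts need more.

```python
def weights_gib(params_billion: float, bits_per_weight: float) -> float:
    """Approximate memory needed for the model weights alone, in GiB."""
    total_bytes = params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 2**30

def fits_in_vram(params_billion: float, bits_per_weight: float = 4.5,
                 vram_gib: float = 24.0, overhead_gib: float = 2.0) -> bool:
    """Rough check: weights plus a fixed allowance for KV cache and runtime buffers."""
    return weights_gib(params_billion, bits_per_weight) + overhead_gib <= vram_gib

for size in (14, 32, 50, 70):
    print(f"{size}B: {weights_gib(size, 4.5):.1f} GiB, fits: {fits_in_vram(size)}")
```

Under these assumptions, a 32B model at Q4 fits with some room for context while a 50B model does not, which is consistent with the "less than 50B" advice.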

- Mistral Small 3.1 edges out Mini: Mistral announced the Mistral Small 3.1 model, claiming it outperforms Gemma 3 and GPT-4o Mini; however, the release must be converted to HF format before it can be used in llama.cpp.

- Users are awaiting the release but acknowledge they will need to convert it to HF format before they can start using it.

- Maximize M4 Max via Memory Tuning: Users explored optimizing memory settings on M4 Max devices for LM Studio, suggesting adjustments to 'wired' memory allocation for improved GPU performance using this script.

- The script facilitates adjusting macOS GPU memory limits, allowing users to allocate more memory to the GPU by modifying wired memory settings.
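
For context, on recent macOS versions (assumed here to be macOS 14 or later) the GPU wired-memory cap can be raised with the `iogpu.wired_limit_mb` sysctl. The helper below is not the script linked in the discussion. It only computes a value that leaves a hypothetical 8 GB reserve for the OS and prints the corresponding command. Run that command deliberately, since over-committing memory to the GPU can destabilize the system.

```python
def wired_limit_mb(total_ram_gb: int, reserve_for_os_gb: int = 8) -> int:
    """Suggested GPU wired-memory limit in MB, leaving headroom for macOS.

    The default 8 GB reserve is an arbitrary assumption; tune it for your workload.
    """
    if total_ram_gb <= reserve_for_os_gb:
        raise ValueError("not enough RAM to reserve headroom for the OS")
    return (total_ram_gb - reserve_for_os_gb) * 1024

# Example for a 128 GB M4 Max.
limit = wired_limit_mb(128)
print(f"sudo sysctl iogpu.wired_limit_mb={limit}")  # sudo sysctl iogpu.wired_limit_mb=122880
```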

- AMD APU to Outperform RTX 5080?: A wccftech article claimed that AMD's Ryzen AI MAX+ 395 "Strix Halo" APU may deliver over 3x the performance of the RTX 5080 in DeepSeek R1 AI benchmarks thanks to its larger VRAM pool.

- The community remains cautiously optimistic, awaiting real-world data to substantiate the performance claims.

OpenRouter (Alex Atallah) Discord

- Anthropic API Glitches Claude 3.7 Sonnet: Requests to Claude 3.7 Sonnet experienced elevated errors for approximately 30 minutes, as reported on Anthropic's status page.

- The issue was later resolved and success rates returned to normal, but some users reported being charged despite receiving replies with no text.

- Personality.gg Enters AI Character Arena: Personality.gg has launched a new platform to create, chat, and connect with AI characters using models like Claude, Gemini, and Personality-v1, featuring custom themes and full chat control.

- The platform offers flexible plans and encourages users to join their Discord for updates, advertising an allowance for NSFW content.

- Parasail Plots to Host New RP Models: Parasail is looking to host new roleplay models on OpenRouter and is proactively working with creators like TheDrummer to host new fine-tunes of models like Gemma 3 and QwQ.

- They seek individuals who create strong RP fine-tunes capable of handling complex instructions and worlds, focused particularly on models fine-tuned for roleplay and creative writing.

- OpenRouter API Rate Limits Detailed: OpenRouter's rate limits depend on user credits, with approximately 1 USD equating to 1 RPS (requests per second), according to the documentation.

- While higher credit purchases enable higher rate limits, users learned that creating additional accounts or API keys makes no difference.
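
Because limits scale with credits rather than with keys or accounts, client-side throttling is the practical lever. Below is a minimal token-bucket sketch that assumes the discussion's rough rule of 1 RPS per 1 USD of credit. The class and helper names are hypothetical and not part of any OpenRouter SDK.

```python
import time

def rps_from_credits(credits_usd: float) -> float:
    """Rough rule from the discussion: about 1 request per second per 1 USD of credit."""
    return credits_usd * 1.0

class TokenBucket:
    """Allow at most `rate` requests per second on average, with bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: float, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity
        self.clock = clock
        self.last = clock()

    def try_acquire(self) -> bool:
        # Refill in proportion to elapsed time, then spend one token if available.
        now = self.clock()
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

A caller would check `try_acquire()` before each API request and sleep briefly when it returns `False`. Injecting `clock` makes the limiter easy to test without real waiting.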

- Mistral Small 3.1 Arrives with Vision: The Mistral Small 3.1 24B Instruct model launched on OpenRouter, featuring multimodal capabilities and a 128k context window, as per Mistral's announcement.

- The announcement claims it outperforms comparable models like Gemma 3 and GPT-4o Mini, while delivering inference speeds of 150 tokens per second.

Perplexity AI Discord

- Perplexity guarantees Accuracy: Perplexity introduces the slogan When you need to get it right, ask Perplexity and posts a video ad for Perplexity.

- Perplexity users on Windows can get 1 month of Perplexity Pro by using the app for 7 consecutive days.

- Gemini 2 Flash Context Causes Furor: Users are debating the context retention of Gemini 2 Flash, which allegedly has a 1M context window but performs worse than regular Gemini.

- One user claims that it forgets the formatting after a few messages while making flashcards.

- Claude 3.7 Sonnet has Hard Limits: Users clarify that Claude 3.7 Sonnet with a Perplexity Pro subscription has a limit of 500 queries per day, shared across models except GPT-4.5.

- They also note that the context limit might be slightly more than on Anthropic's site, but the response context limit is smaller at 4000 or 5000 tokens.

- Experts Seek Superior Software Sensei: Users seek guidance on the best AI model for coding, with recommendations pointing to Claude 3.7 Reasoning.

- One user reports that DeepSeek R1 has a high hallucination rate, making it unsuitable for summarizing documents; separately, a tweet from Baidu Inc. (@Baidu_Inc) was shared claiming that ERNIE X1 delivers performance on par with DeepSeek R1 at only half the price.

- Sonar Reasoning Pro has Image Limitations: A user reported that the sonar-reasoning-pro API returns a maximum of 5 images.

- The user is inquiring whether this limit is configurable or a hard constraint.

Yannick Kilcher Discord

- Rust Community Receives Rude Remarks: Members debated the toxicity of the Rust community, with some comparing it to the Ruby community and pointing to this GitHub issue and a tweet from Will Brown.

- One member stated, The Rust community is pretty toxic. The org has kinda imploded on themselves recently.

- C Gets Called 'Ancient and Broken': A member described C as ancient, broken, and garbage, while another argued that C is not broken, highlighting its use in international standards with this link.

- A member linked to faultlore.com arguing that C Isn't A Programming Language Anymore.

- Optimization and Search, Not the Same?: Members discussed the difference between optimization (finding the maximal or minimal value of a function) and search (finding the best element of a set), pointing to the Reparameterization trick.

- One member stated that search is exploration, not like optimization.
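
The reparameterization trick is a good example of recasting a sampling problem as a gradient-based optimization problem. This minimal sketch assumes a Gaussian z ~ N(mu, sigma^2) and the objective E[z^2] = mu^2 + sigma^2, whose exact gradient with respect to mu is 2 * mu. Writing z = mu + sigma * eps with eps ~ N(0, 1) moves the randomness out of the parameters, so the derivative can pass through each sample.

```python
import random

def reparam_grad_mu(mu: float, sigma: float, n: int = 100_000, seed: int = 0) -> float:
    """Monte Carlo estimate of d/dmu E[z^2] for z ~ N(mu, sigma^2).

    With z = mu + sigma * eps and eps ~ N(0, 1), the sampling distribution of eps
    no longer depends on mu, so d(z^2)/dmu = 2 * z can be averaged over samples.
    """
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n):
        eps = rng.gauss(0.0, 1.0)
        z = mu + sigma * eps
        total += 2.0 * z  # pathwise derivative of z**2 with respect to mu
    return total / n

print(reparam_grad_mu(1.5, 2.0))  # close to the exact value 2 * mu = 3.0
```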

- Gemma 3 Gains Vision and Context: Gemma 3 integrates vision understanding, multilingual coverage, and extended context windows (up to 128K tokens), as covered in this YouTube video.

- It uses a frozen SigLIP vision encoder that condenses images into 256 soft tokens, along with a new Pan & Scan (P&S) method.

- Mistral Small 3.1 Steals the Show: Mistral AI announced the release of Mistral Small 3.1, boasting improved text performance, multimodal understanding, and a 128k token context window under an Apache 2.0 license.

- The company claims it outperforms comparable models like Gemma 3 and GPT-4o Mini, with inference speeds of 150 tokens per second.

HuggingFace Discord

- SmolVLM2 Shrinks the VLM: The team released SmolVLM2, the smallest VLM that can understand videos, with its 500M version running on an iPhone app.

- Source code and a TestFlight beta are available for reference.

- Sketchy New Gradio is Out!: Gradio Sketch 2.0 now supports building complete Gradio apps with events without writing a single line of code.

- The new features enable users to build applications via the GUI.

- DCLM-Edu Dataset Cleans Up: A new dataset, DCLM-Edu, was released; it's a filtered version of DCLM using FineWeb-Edu’s classifier, optimized for smol models like SmolLM2 135M/360M.

- The rationale is that small models are sensitive to noise and can benefit from heavily curated data.
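
A minimal sketch of classifier-based filtering in the spirit of DCLM-Edu. It assumes a scoring function that returns an educational-quality score. The FineWeb-Edu classifier produces scores on a roughly 0 to 5 scale, and FineWeb-Edu kept documents scoring 3 or higher. The threshold DCLM-Edu actually used is not stated here, so `threshold=3.0` is an assumption, and the length-based scorer below is a toy stand-in for the real classifier.

```python
from typing import Callable, Iterable, Iterator

def filter_by_edu_score(docs: Iterable[str], score_fn: Callable[[str], float],
                        threshold: float = 3.0) -> Iterator[str]:
    """Yield only the documents whose educational-quality score meets the threshold."""
    for doc in docs:
        if score_fn(doc) >= threshold:
            yield doc

# Toy stand-in scorer (NOT the real classifier): longer text scores higher, capped at 5.
def toy_score(doc: str) -> float:
    return min(5.0, len(doc.split()) / 2)

corpus = ["buy now", "Photosynthesis converts light energy into chemical energy in plants."]
print(list(filter_by_edu_score(corpus, toy_score)))
```

Keeping the scorer pluggable lets the same filter run with the real classifier, a cheaper proxy, or a cached score column.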

- Coding Vibes Get Awesome List: An "Awesome Vibe Coding" list was announced with tools, editors, and resources that make AI-assisted coding more intuitive and efficient.

- The list includes AI-powered IDEs & code editors, browser-based tools, plugins & extensions, command line tools, and latest news & discussions.

- AI Agents Collab is Brewing: Several members expressed interest in collaborating on agentic AI projects to solve business problems and enhance their knowledge.

- The call to action aims to form teams and build qualified AI Agents for American consumers and learn together.

Interconnects (Nathan Lambert) Discord

- Figure's BotQ cranks out Humanoid Robots: Figure announced BotQ, a new high-volume manufacturing facility with a first-generation line capable of producing up to 12,000 humanoid robots per year, vertically integrating manufacturing and building software infrastructure.

- The company aims to control the build process and quality, even hinting at Robots Building Robots.

- Baidu's ERNIE X1 rivaling DeepSeek, goes free!: Baidu unveiled ERNIE 4.5 and ERNIE X1, with X1 reportedly matching DeepSeek R1's performance at half the price, also announcing that their chatbot, ERNIE Bot, is now free for individual users, available on their website.

- Baidu is scheduled to open source the chonky 4.5 model on June 30 and gradually open it to developers in the future, according to this Tweet.

- Mistral Small 3.1 debuts with huge context window: Mistral AI announced Mistral Small 3.1, a new model with improved text performance, multimodal understanding, and a 128k token context window, outperforming models like Gemma 3 and GPT-4o Mini with inference speeds of 150 tokens per second, released under an Apache 2.0 license.

- Mistral bills the model as SOTA, multimodal, and multilingual.

- Post Training VP departs OpenAI for Materials Science: Liam Fedus, OpenAI's VP of research for post-training, is leaving the company to found a materials science AI startup, with OpenAI planning to invest in and partner with his new company.

- One member called the post training job a hot potato, according to this tweet.

- Massive Dataset duplication discovered in DAPO: The authors of DAPO accidentally duplicated the dataset by roughly 100x, which resulted in a dataset of 310 MB, and a member created a deduplicated version via HF's SQL console, reducing the dataset to 3.17 MB (HuggingFace Dataset).

- The authors acknowledged the issue, stating that they were aware but can't afford retraining, according to this tweet.
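
The reported sizes are consistent with the duplication factor: 310 MB / 3.17 MB is about 98, which rounds to roughly 100x. The fix in the discussion used HF's SQL console. The sketch below shows the same idea in plain Python, assuming records are deduplicated on a hypothetical `prompt` field.

```python
import json

def dedupe_records(records, key="prompt"):
    """Drop exact duplicates of `key`, keeping the first occurrence and the original order."""
    seen = set()
    unique = []
    for rec in records:
        fingerprint = json.dumps(rec[key], sort_keys=True)  # works for str, list, or dict fields
        if fingerprint not in seen:
            seen.add(fingerprint)
            unique.append(rec)
    return unique

rows = [{"prompt": "p1", "answer": "1"}, {"prompt": "p2", "answer": "2"}] * 100
print(len(rows), "->", len(dedupe_records(rows)))  # 200 -> 2
```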

MCP (Glama) Discord

- Multi-Agent Topologies Spark Debate: Members debated Swarm, Mesh, and Sequence architectures for multi-agent systems, seeking advice on preventing sub-agents from going off-track, especially due to the telephone game effect.

- The core issue may be parallel execution and unsupervised autonomy, compounded by agents swapping system instructions, available functions, and even models during handoff.

- OpenSwarm morphs into OpenAI-Agents: The OpenSwarm project has been adopted by OpenAI and rebranded as openai-agents, adding OpenAI-specific features, but a PR for MCP support was rejected.

- There are rumors that CrewAI (or PraisonAI?) might offer similar functionality using a stateless single thread agent approach.

- MyCoder.ai Debuts Just Before Claude-Code: The launch of mycoder.ai coincided with the announcement of Claude-code, prompting adaptation via a Hacker News post that reached the front page, seen here.

- Given that claude-code is Anthropic-only, a generic alternative is in demand, which one member successfully addressed using litellm proxy.

- Glama Server Inspections Frequency Debated: Members questioned how often Glama scans occur and if rescans can be triggered for MCP servers; scans are linked to commit frequency in the associated GitHub repo.

- Some servers failed to inspect, displaying Could not inspect the server, even after fixing dependency issues, follow progress on Glama AI.

- Vibe Coders Unite!: The Awesome Vibe Coding list curates AI-assisted coding tools, editors, and resources, enhancing coding intuitiveness and efficiency.

- The list includes AI-powered IDEs, browser-based tools, plugins, and CLIs, with an AI coder even making a PR to the repo and suggesting the addition of Roo Code.

Latent Space Discord

- GPT-o1 Math Skills Approach Human Level: GPT-o1 achieved a perfect score on a Carnegie Mellon undergraduate math exam, solving each problem in under a minute for about 5 cents each as noted in this Tweet.

- The instructor was impressed, noting this was close to the tipping point of being able to do moderately-non-routine technical jobs.

- Baidu's ERNIE Gets Cost Competitive: Baidu launched ERNIE 4.5 and ERNIE X1, with the latter reportedly matching DeepSeek R1's performance at half the cost according to this announcement.

- Notably, ERNIE Bot has been made freely accessible to individual users ahead of schedule, with both models available on the official website.

- AI Podcast App Takes the Outdoors: A new Snipd podcast featuring Kevin Smith was released, discussing the AI Podcast App for Learning.

- This episode marks their first outdoor podcast, with @swyx and @KevinBenSmith chatting about aidotengineer NYC, switching from Finance to Tech, and the tech stack of @snipd_app.

- Debating the Merits of Claude 3.5 vs 3.7: Members debated the merits of using Claude 3.5 over 3.7, citing that 3.7 is way too eager and does things without being asked.

- Others said they used Claude 3.5 and were experiencing GPU issues, as well.

Notebook LM Discord

- Users Yearn for Gemini-Integrated Android: Multiple users are requesting a full Gemini-integrated Android experience, hoping to combine Google Assistant/Gemini with NotebookLM.

- Some expressed frustration with the current Gemini implementation, eagerly awaiting upgrades.

- DeepSeek R1 Rocks the AI Market: A user noted the AI market upheaval caused by DeepSeek R1's release, citing its low-cost reasoning capabilities and their impact on Gemini 2.0.

- The user claimed that DeepSeek R1 seemingly shook the whole industry, prompting other companies to release new models.

- NotebookLM Audio Overviews Get Lengthy: A user wants to increase the length of audio overviews generated by NotebookLM, as 16,000-word files only produced 15-minute overviews.

- They asked for overviews of at least an hour, but no solutions have been shared yet.

- NotebookLM helps taper off psychiatric meds: A user creates a hyperbolic tapering schedule for a psychiatric medication with NotebookLM, using correlational studies to guide the schedule.

- Another user cautioned that tapering based on data on any platform should not be done alone without expert professional opinion.

- NotebookLM Integrates into Internal Portals/CRMs: A user wants to integrate NotebookLM into an internal portal/CRM with videos and knowledge base articles, and electioneering suggested Agentspace as a solution.

- Since NotebookLM doesn't support connecting to those types of data sources, they pointed out that Agentspace includes and is integrated with NotebookLM.

GPU MODE Discord

- Triton-Windows Gets PIP Treatment: Triton-windows has been published to PyPI, so you can install or upgrade it with `pip install -U triton-windows` instead of downloading the wheel from GitHub.

- Previously, users had to manually manage wheel files, making the update process more cumbersome.

- Torch Compile Slows on Backward Pass: A member reported that while torch.compile works fine for the forward pass, it is quite slow in the backward pass when using torch.autograd.Function for custom kernels.

- Wrapping the backward function with `torch.compile(compiled_backward_fn)` could resolve the performance issues.

- NVIDIA's SASS Instruction History Shared: A member shared a gist comparing NVIDIA SASS instructions across different architectures, extracted and compared (using Python) from NVIDIA's HTML documentation.

- This allows users to track the evolution of instructions across NVIDIA's GPU lineup.

- Reasoning Gym Surpasses 100 Datasets!: The Reasoning Gym project now has 101 datasets, celebrating contributions from developers.

- The growing dataset collection should provide more comprehensive LLM testing.

- Jake Cannell Recruits GPU Masters: Jake Cannell is hiring GPU developers to work on ideas he touched on in his talk, and nebius.ai was touted for its GPU cloud.

- This is relevant for those interested in AGI or neuromorphic hardware.

Eleuther Discord

- EleutherAI Welcomes Catherine Arnett: EleutherAI welcomes Catherine Arnett, an NLP researcher specializing in Computational Social Science and cross-lingual NLP, focusing on ensuring models are equally good across languages.

- Her recent work includes Goldfish, Toxicity of the Commons, LM performance on complex languages and Multilingual Language Modeling.

- New Block Diffusion Model Drops: A new paper introduces Block Diffusion, a method that interpolates between autoregressive and diffusion language models to combine the strengths of both: high quality, arbitrary-length generation, KV caching, and parallelizability, as detailed in the paper and code.

- VGGT Generates Metaverse GLB files!: A member shared VGGT, a feed-forward neural network inferring 3D attributes from multiple views and generating GLB files, which can be directly integrated into metaverses.

- The member stated I love that it exports GLB files. means I can drop them directly into my metaverse as-is.

- Gen Kwargs Embraces JSON Nicely: The `--gen_kwargs` argument is transitioning from comma-separated strings to JSON, allowing for more complex configurations like `'{"temperature":0, "stop":["abc"]}'`.

- The discussion explores the possibility of supporting both formats for ease of use, especially for scalar values.
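
A sketch of accepting both formats, as the discussion considered. This is not lm-evaluation-harness code. `parse_gen_kwargs` is a hypothetical helper that sends strings starting with `{` to a JSON parser and otherwise falls back to comma-separated `key=value` pairs, decoding each value as JSON when possible so that scalars like `0` or `0.9` become numbers.

```python
import json

def parse_gen_kwargs(arg: str) -> dict:
    """Parse generation kwargs given either as a JSON object or as key=value pairs.

    JSON is needed for non-scalar values such as lists of stop strings; the
    legacy comma-separated form stays convenient for simple scalars.
    """
    arg = arg.strip()
    if not arg:
        return {}
    if arg.startswith("{"):
        return json.loads(arg)
    kwargs = {}
    for pair in arg.split(","):
        key, _, raw = pair.partition("=")
        try:
            value = json.loads(raw)  # "0" -> 0, "0.9" -> 0.9, "true" -> True
        except json.JSONDecodeError:
            value = raw  # bare strings such as abc stay as-is
        kwargs[key.strip()] = value
    return kwargs

print(parse_gen_kwargs('{"temperature":0, "stop":["abc"]}'))
print(parse_gen_kwargs("temperature=0,top_p=0.9"))
```

The legacy form cannot express a stop string that itself contains a comma, which is one concrete reason to prefer JSON for anything beyond scalars.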

- LLM Leaderboard: Train vs Validation Split: A discrepancy is identified between the group config for the old LLM leaderboard and the actual setup used, particularly concerning the arc-challenge task.

- A PR was created to fix the discrepancy between the `openllm.yaml` config, which specifies `validation` as the fewshot split, and the original leaderboard, which used the `train` split.

tinygrad (George Hotz) Discord

- Tinygrad SDXL Trails Torch Performance: Benchmarking SDXL with tinygrad on a 7900 XTX shows 1.4 it/s with BEAM=2 on the AMD backend, whereas torch.compile achieves 5.7 it/s using FlashAttention and TunableOp ROCm.

- George Hotz proposed comparing kernels for optimization opportunities, aiming to beat torch by year's end.

- Tensor Cat Stays Sluggish: A member working on improving tensor cat speed shared whiteboard thoughts on X (link), noting it's still slow despite devectorizer changes.

- They suspect issues with generated IR and loading numpy arrays, considering custom C/C++ via ELF and LLVM to overcome limitations.

- BLAKE3 Bounty Details Crystallize: The status of the High performance parallel BLAKE3 bounty was clarified, with a screenshot (link) showing the updated bounty status.

- The member updated the spreadsheet and specified that the asymptotic performance is a key requirement for the bounty.

- WebGPU Integration Gains Momentum: A member asked about publishing a Tinygrad implementation for an electron/photon classifier based on resnet18 as an example and was directed to a PR for improving WebGPU integration.

- The suggestion was made to create a WebGPU demo hosted on GitHub Pages with weights on Hugging Face for free access and testing.

- Tinygrad Struggles with Lazy Mode Debugging: A member is facing an assertion error with gradients while print-debugging intermediate tensor values in Tinygrad, despite using `.detach()`, due to issues with lazy computation.

- The member is seeking a better method than threading the value out, given that lazy computation is not idempotent.

LlamaIndex Discord

- LlamaIndex Showcases Agentic Reasoning with Corrective RAG: LlamaIndex introduced a step-by-step tutorial on building an agentic reasoning system for search and retrieval using corrective RAG, orchestrated with LlamaIndex workflows.

- The tutorial enables users to orchestrate complex, customizable, event-driven agents.

- LlamaExtract Emerges from the Cloud: LlamaExtract, which solves the problem of extracting structured data from complex documents, is now in public beta and available on cloud.llamaindex.ai, offering a web UI and API.

- Users can define a schema to automatically extract structured data; additional details are available here.

- Multimodal AI Agents Faceoff at NVIDIA GTC 2025: Vertex Ventures US and CreatorsCorner are hosting an AI hackathon at NVIDIA GTC 2025, challenging participants to develop a sophisticated multimodal AI agent.

- The hackathon offers $50k+ in Prizes for agents capable of strategic decision-making and interaction with various tools; more information can be found here.

- Community Launches Vision-Language Model Hub: A community member launched a community-driven hub for multimodal researchers focusing on Vision-Language Models (VLMs).

- The creator is actively seeking contributions and suggestions, with plans to update the hub weekly.

- Pydantic AI and LlamaIndex duke it out: New users are wondering about the difference between the Pydantic AI and LlamaIndex frameworks for building agents, especially which one to use as a beginner.

- A LlamaIndex team member stated that whatever fits your mental model of development best is probably the best bet.

Nomic.ai (GPT4All) Discord

- Gemma's Language Skills Impress: Members observed that Gemma, DeepSeek R1, and Qwen2.5 models gave correct answers, in multiple languages, to a puzzle about what happens when you leave a closed jar outside at sub-zero temperatures.

- While other models predicted catastrophic jar failure, Gemma offered more helpful, nuanced advice.

- Gemma 3 Integration Meets License Snag: Users are waiting for Gemma 3 support in GPT4All, but its integration is delayed pending updates to Llama.cpp due to license agreement issues on Hugging Face, detailed in this GitHub issue.

- Speculation arose regarding whether Google will police redistributions circumventing their license agreements.

- LocalDocs Users Crash Into Trouble: A new user reported losing their LocalDocs collection after a crash and reinstall, and asked how to prevent data loss after future crashes.

- Experienced users recommended regularly saving the localdocs file and restoring it after a crash, adding that sometimes only one bad PDF can crash the system.

- Level up O3-mini with better prompting: A user shared a prompt for O3-mini to explain its thinking process, suggesting this could improve distillation for any model by prompting for thinking and reflection sections, with step-by-step reasoning and error checks.

- The user suggested this makes it easier to get models to explain complex processes.

Cohere Discord

- Cohere Punts Fine-Tuning Command A: Despite community anticipation, a Cohere team member confirmed there are no plans yet to enable fine-tuning for Command A on the platform.

- They assured the community that updates would be provided, but this marks a divergence from some users' expectations of rapid feature deployment.

- Azure Terraform Troubles Trip Up Rerank v3: A user ran into errors when creating an Azure Cohere Rerank v3 with Terraform, sharing both the code snippet and the resulting error message.

- The issue was redirected to a dedicated channel for more specialized debugging.

- Community Clamors CMD A Private Channel: A member suggested creating a dedicated channel for discussions around private deployments of CMD A, particularly for supporting customer's local deployments.

- This proposal received enthusiastic support, highlighting the community's interest in on-premise or private cloud solutions.

- Vercel SDK Stumbles on Cohere's Objects: A user noted that the Vercel SDK incorrectly assumes object generation is unsupported by Cohere's Command A model.

- This discrepancy could impact developers leveraging the SDK and warrants attention from both Cohere and Vercel teams to ensure accurate integration.

- Freelancer Offers Programming Hand: A 30-year-old Japanese male freelance programmer introduced himself and expressed a willingness to assist community members with his programming skills.

- He echoed the sentiment that assisting one another is the pillar of our existence.

DSPy Discord

- MCP Integration Pondered for DSPy: A member was interested in integrating dspy/MCP, and linked to a GitHub example to illustrate their suggestion.

- Another member wondered if adding an MCP host, client, and server would overcomplicate the process.

- DSPy Ditches Assertions and Suggestions: Users noticed the disappearance of documentation for Assertions / Suggestions in DSPy, questioning whether they're still supported.

- They wanted to validate the response outputs (formatting specifically), having observed instances where the LLM does not adhere to the expected format.

- Output Refinement Steps in as Assertion Alternative: In DSPy 2.6, Assertions are replaced by Output Refinement using modules like `BestOfN` and `Refine`, as detailed in the DSPy documentation.

- These modules aim to enhance prediction reliability and quality by making multiple LM calls with varied parameter settings.
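
To illustrate the idea behind best-of-N refinement for the formatting use case, here is a framework-free sketch. It is not DSPy's API; DSPy's own `BestOfN` and `Refine` wrap a DSPy module and should be used as documented. The helper `best_of_n`, its default temperature schedule, and the one-word reward are hypothetical.

```python
from typing import Callable, Optional

def best_of_n(generate: Callable[[float], str], reward_fn: Callable[[str], float],
              n: int = 3, threshold: float = 1.0,
              temperatures: Optional[list] = None) -> str:
    """Call `generate` up to n times at different temperatures and return the
    highest-reward output, stopping early once one reaches `threshold`."""
    temps = temperatures or [0.5 * i for i in range(n)]  # 0.0, 0.5, 1.0, ...
    best, best_score = None, float("-inf")
    for t in temps[:n]:
        candidate = generate(t)
        score = reward_fn(candidate)
        if score > best_score:
            best, best_score = candidate, score
        if score >= threshold:
            break  # good enough; skip the remaining LM calls
    return best

# Reward that checks the output format: exactly one word.
def one_word(answer: str) -> float:
    return 1.0 if len(answer.split()) == 1 else 0.0

# Scripted stand-in for an LM whose output varies with temperature.
outputs = {0.0: "answer: Paris and more", 0.5: "Paris", 1.0: "paris!"}
print(best_of_n(lambda t: outputs[t], one_word))  # Paris
```

Encoding the format check as a reward function, rather than as a hard assertion, lets the loop keep the best attempt even when no candidate passes.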

- QdrantRM Quietly Quits DSPy: Users inquired whether QdrantRM has been removed in DSPy 2.6.

- No explanation was given in the discussion.

LLM Agents (Berkeley MOOC) Discord

- Caiming Xiong Presents on Multimodal Agents: Salesforce's Caiming Xiong lectured on Multimodal Agents, covering the integration of perception, grounding, reasoning, and action across multiple modalities, streamed live on YouTube.

- The talk discussed measuring capabilities in realistic environments (OSWorld) and creating large-scale datasets (AgentTrek), referencing over 200 papers and >50,000 citations.

- Self-Reflection Faces Dichotomy: Members debated the apparent contradiction between Lecture 1 and Lecture 2 regarding self-reflection and self-refinement in LLMs, with a user noting that Lecture 1 states external evaluation is required, while Lecture 2 suggested that LLMs can improve themselves by rewarding their own outputs.

- System Prompt Reliability Questioned: A member suggested that relying on specific system-prompt behaviors might not be reliable, because in the end all of it is just text input for the model to process, so you should be able to bypass the framework and service.

- The member added that the training data may include the format `<system> You are a helpful assistant </system> <user> Some example user prompt </user> <assistant> Expected LLM output </assistant>`.
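
The member's point is that role-tagged messages are flattened into ordinary text before the model sees them. A minimal sketch using the illustrative tags quoted in the discussion; real models each use their own chat template, and `render_chat` is a hypothetical helper.

```python
def render_chat(messages: list) -> str:
    """Flatten role-tagged messages into one plain-text string using illustrative tags."""
    allowed = {"system", "user", "assistant"}
    parts = []
    for m in messages:
        role = m["role"]
        if role not in allowed:
            raise ValueError(f"unknown role: {role}")
        parts.append(f"<{role}> {m['content']} </{role}>")
    return " ".join(parts)

print(render_chat([
    {"role": "system", "content": "You are a helpful assistant"},
    {"role": "user", "content": "Some example user prompt"},
]))
```

Since the system prompt ends up as just another span of text, nothing structural forces the model to privilege it, which is why relying on it alone can be fragile.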

- Advanced LLM Agent Course Enrollment Still Open: Members inquired whether they can still sign up for the Advanced LLM agent course and attain the certificate after signing up.

- Staff replied that you just need to complete the signup form! Most of the info on that intro slide deck only applies to Berkeley students, but anyone can enroll in the MOOC and earn a certificate at the end.

Modular (Mojo 🔥) Discord

- Modular Hailed for AI Art Aesthetic: A member expressed appreciation for the AI art used by Modular in their marketing materials.

- They stated, "all the AI art that modular uses is great!"

- Compact Dict: Is It Obsolete?: Discussion arose regarding the status of the compact-dict implementation in Mojo.

- Members suggested that the functionality of the original version may have been integrated into the Dict within the stdlib.

- SIMD and stdlib Dict Performance Problems: A user encountered performance bottlenecks when using the stdlib Dict with SIMD[float64, 1] types.

- The bottleneck was attributed to the slowness of the hash() function from the hash lib, prompting a search for faster alternatives.
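One common way to get a cheaper hash for float keys is to hash the raw 64-bit pattern with a short integer mixer. The Python sketch below uses the SplitMix64 finalizer as the example mixer. This technique is swapped in for illustration only. It is not the Mojo stdlib implementation and not necessarily what the user adopted, and in Python it will not beat the built-in hash(). In a compiled language, the same steps reduce to a few shifts, XORs, and multiplies. The function names are hypothetical.

```python
import struct

MASK64 = (1 << 64) - 1  # emulate unsigned 64-bit wraparound with Python ints


def float64_bits(x: float) -> int:
    """Reinterpret a float64 as its raw IEEE 754 bit pattern."""
    return struct.unpack("<Q", struct.pack("<d", x))[0]


def mix64(z: int) -> int:
    """SplitMix64 finalizer: an invertible mix, so distinct keys never collide here."""
    z = ((z ^ (z >> 30)) * 0xBF58476D1CE4E5B9) & MASK64
    z = ((z ^ (z >> 27)) * 0x94D049BB133111EB) & MASK64
    return z ^ (z >> 31)


def hash_float64(x: float) -> int:
    """Hypothetical fast hash for float64 dict keys."""
    if x == 0.0:
        x = 0.0  # -0.0 == 0.0, so both must hash identically
    return mix64(float64_bits(x))


print(hex(float64_bits(1.0)))  # 0x3ff0000000000000
print(hash_float64(-0.0) == hash_float64(0.0))  # True
```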

- Discord Channel Receives Spam: A member clarified that certain messages in the Discord channel were classified as spam, which was quickly acknowledged by another member.

- No further details were provided about the nature or source of the spam.

MLOps @Chipro Discord

- SVCAF Launches AI4Legislation Competition: The Silicon Valley Chinese Association Foundation (SVCAF) is holding the AI4Legislation competition to encourage open-source AI solutions for legislative engagement. It offers prizes of up to $3,000 and runs until July 31, 2025. The competition repo is now available.

- SVCAF will conduct an online seminar about the competition at the end of March 2025; RSVP here.

- Dnipro VC Hosts AI Demo Jam: Dnipro VC and Data Phoenix will be hosting AI Demo Jam on March 20 in Sunnyvale, CA, featuring 5 AI startups showcasing their products.

- The event will feature an expert panel with Marianna Bonechi (Dnipro VC), Nick Bilogorskiy (Dnipro VC), and Dmytro Dzhulgakhov (fireworks.ai), plus open mic pitches and high-energy networking; register here.

- Member Needs Help with MRI Object Detection: A member asked for unpaid help building an object detection model for MRI images.

- No specific details were provided on the type of model, data availability, or use case.

AI21 Labs (Jamba) Discord

- Qdrant Request Flatly Denied: A member suggested switching to Qdrant, but another member confirmed that they are not currently using it.

- The suggestion was shut down without further explanation: "No, we are not using Qdrant."

- Users Request Repetition Penalty on API: A user requested repetition penalty support in the API, indicating that its absence is a key obstacle to wider adoption of the Jamba model.

- The user stated that the lack of repetition penalty support is the only limiting factor for their increased usage of the model.
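The sketch below shows the widely used CTRL-style repetition penalty, applied to one decoding step's logits in plain Python. This is the formulation behind the `repetition_penalty` generation option in Hugging Face transformers. It says nothing about how AI21 would expose such a parameter. The function name and default value are hypothetical.

```python
def apply_repetition_penalty(
    logits: list[float], generated_ids: list[int], penalty: float = 1.2
) -> list[float]:
    """Lower the logit of every token that already appears in the output.
    penalty > 1 discourages repeats; penalty == 1 leaves logits unchanged."""
    out = list(logits)
    for token_id in set(generated_ids):  # penalize each token once, however often it repeated
        score = out[token_id]
        # Dividing a positive logit or multiplying a negative one both make it smaller.
        out[token_id] = score / penalty if score > 0 else score * penalty
    return out


# Vocabulary of 3 tokens; tokens 0 and 1 were already generated.
print(apply_repetition_penalty([2.0, -1.0, 0.5], generated_ids=[0, 1, 0], penalty=2.0))
# [1.0, -2.0, 0.5]
```

The two branches exist because a logit's sign determines whether dividing or multiplying by the penalty lowers it.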

Torchtune Discord

- Mistral Unveils Small 3.1: Mistral AI has released Mistral Small 3.1, available here.

- No further details were provided.

- Learnable Scalars Help Models Converge: A new paper, Mitigating Issues in Models with Learnable Scalars, proposes incorporating a learnable scalar to help models converge normally.

- This suggests a practical approach to stabilizing training.

The Codeium (Windsurf) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Gorilla LLM (Berkeley Function Calling) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email: !

If you enjoyed AInews, please share with a friend! Thanks in advance!