Disability and AI Bias

Four cartoon images of business people of different genders and races, each holding a white face mask partially covering their faces.

In May 2019, San Francisco passed an ordinance banning the city government from using facial recognition technology.

The primary concern raised by almost every group lobbying for or considering this type of legislation is discrimination based on gender or race.

One reason frequently cited for facial recognition's higher rates of false positives for women, especially women of color, is the lack of robust data sets.

Think about those concerns for a minute, and it becomes clear that the issue is much, much worse for people with disabilities.

Few people are examining AI bias resulting in discrimination against people with disabilities.

There are fewer people with disabilities than there are women and people of color, so people with disabilities are even less likely to be well represented in the data sets.

The range of characteristics of disability is very, very broad.

Facial recognition will be biased against people with craniofacial differences

One of my daughters was born with a facial difference called bilateral microtia and atresia. It means that neither of her outer ears is the size or shape that most people would consider “normal”, and her ear canals were incredibly narrow, almost invisible. An AI that relies in part on ear shape or the presence of an ear canal to determine whether an image includes a human face might not work correctly for people with this condition. Children with cleft lip, or with more severe craniofacial syndromes involving significant facial bone differences, such as Pierre Robin sequence (PRS), Treacher Collins syndrome, Goldenhar syndrome, and hemifacial microsomia, to name just a few, are likely to experience the same AI bias, if not worse.

My daughter and I both had micrognathia: our jaws were very small and extremely set back at a sharp angle. Both of us had sleep apnea so severe that it was life-threatening. Our quality of life was also crap, since we rarely achieved significant REM sleep. Drastic surgery was required to correct the micrognathia, because CPAPs work very poorly for people with this condition.

The pictures above (yes, both pictures really are me) were taken about three years apart. The change in my facial structure was so drastic that it took me three years after the photo on the right was taken before my self-image in my dreams became “me, but with my new face”. That was about twice as long as it took me to incorporate insulin pumps, canes, and wheelchairs into my dreams. I know people with more severe craniofacial syndromes who have required 20 or more such surgeries, each of which substantially changed their appearance. People with these types of medical conditions will inherently be discriminated against by facial recognition software, because their differing bone structures make it unlikely that the software will match the “old face” to the same identity as the “new face”. In the worst case, we may be flagged as criminals suspected of gaming the identity system through facial plastic surgery.

People with mobility problems may be falsely identified by self-driving cars as objects

I have heard anecdotally about one self-driving car company whose software identified a person using a wheelchair as a shopping cart. If you expect all people to walk with a normal, even gait, then people with mobility issues (those who use walkers, canes, crutches, or wheelchairs, or who walk with a limp) will not fall anywhere near what is considered “normal”. People with mobility disabilities are drastically under-represented in the tech community, so a programming team may not have enough real-life experience with people with mobility disabilities to recognize this type of bias as the real and significant issue that it is.

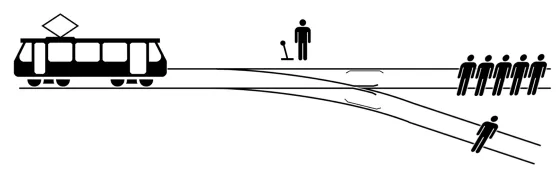

The Trolley Problem

The trolley problem is an ethical thought experiment, with the following problem statement:

A runaway trolley is heading toward five tied-up people lying on the tracks. You are standing next to a lever that controls a switch. If you pull the lever, the trolley will be redirected onto a side track, and the five people on the main track will be saved. However, there is a single person lying on the side track who is somehow connected to you. You have two options:

Do nothing, and allow the trolley to kill the five people you don’t know on the main track.

Pull the lever, diverting the trolley onto the side track where it will kill one person who you know well.

Which is the more ethical option?

Of course, there is no right or wrong answer. The point is to explore what an individual values, and whether those values are ethical.

Self-driving cars are programmed by people. Programmers’ bias can be transferred into the software

Self-driving cars have moved the “trolley problem” thought experiment from the abstract to the tangible.

Self-driving cars must regularly decide which is the better thing to hit (and potentially kill).

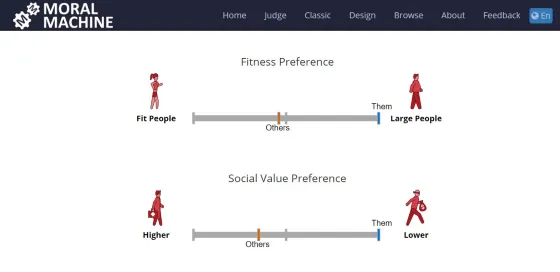

Whether your car is programmed by a group of Colombians or Finns can influence whether socio-economic status is taken into account when the car must choose which of two people to hit in an inevitable collision. The French prefer killing men over women. These cultural biases are well described in an MIT study published in Nature. Human beings making moral decisions are easily influenced by context. And if the context is being determined by software, the programmers' bias, whether conscious or unconscious, is of paramount concern.

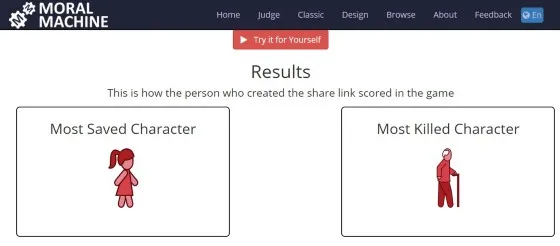

There is a website, Moral Machine (run by the same group that did the study), where you can compare your reactions with those of other users.

When you look at the Moral Machine results, it is clear that people want to save “good” people and would choose others they think of as “less good” to die instead. When I do walk, it is with a severe limp. A police officer once flat-out asked me if I was drunk, and many other (possibly more well-meaning) strangers have asked “if I was OK”. If the software thinks I am drunk, does that make me “less good” and therefore more likely to be the person the software chooses to hit?

Is the life of a person in a wheelchair worth less than that of someone who can walk?

Are obese people less valuable than those who are “fit”?

What makes a poet or a homeless person less worthy of living than a doctor or an engineer?

Unless we figure out how to prevent bias in code, people with disabilities will undoubtedly be discriminated against by any type of human or facial recognition system. Until then, avoid being an older, overweight male delivery man in France who walks with a cane, because that ups your odds that the self-driving car will pick you to hit and let the young, skinny female doctor live.
