Accessibility best practices for screen reader testing

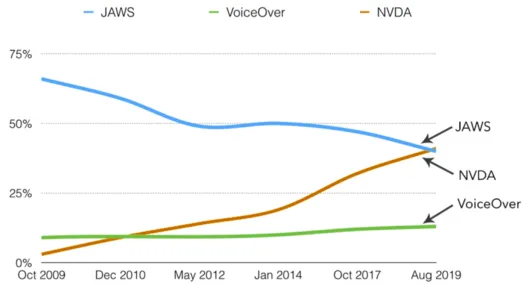

Chart from WebAIM Screen Reader User Survey #8: https://webaim.org/projects/screenreadersurvey8/

Chart description: Results from WebAIM’s 2019 survey show the trend lines for VoiceOver, NVDA, and JAWS as the primary screen reader from 2009 to 2019.

JAWS started at 64% in 2009 and has dropped steadily to just above 40% today.

NVDA started in the low single digits in 2009 and, at 40.6%, had just passed JAWS when this survey was performed last fall.

VoiceOver's market share has very slightly increased over the past five years.

Screen readers are a form of assistive technology that takes the visual content of software, websites, mobile applications, or documents and converts it into audio output that people with vision loss can perceive, operate, and understand.

There are many different screen reader providers. I am deliberately avoiding the word “vendor” here, since most screen readers are free. Each screen reader has an entirely different set of gestures and keyboard commands that users rely on to interact with it. And there are a lot of screen readers on the market, roughly 40 altogether, though three of them hold 90% of the market. Since there will NEVER be enough time to test them all, what are the best practices for deciding which one(s) to use?

Best Practice #1: Test at least one screen reader per OS supported by your product

If you officially support Linux, you have to test with Orca, even though it has only 0.6% of the overall market, because it is the most common screen reader on the Linux platform.

If you officially support Apple platforms, you must test with VoiceOver.

If you officially support Windows, that’s where it gets messy. There are multiple screen readers to choose from, and you may not have time to test them all. See Best Practice #5 below for more analysis.

Best Practice #2: If you are testing with multiple screen readers, you will get more complete results if you mix in other factors

Some bugs are browser-specific. Something that works with NVDA on Firefox might not work with NVDA on Chrome (or vice versa). There are also numerous browser-specific screen reader bugs on IE/Edge that Microsoft has not fixed.

By building a testing matrix, you can sort out which browser/screen reader combinations to test. Mixing up those combinations when you retest functional bug fixes also gives you better coverage. Testing on Firefox with NVDA in the first round is always a good idea, since Firefox is the preferred browser for NVDA. When it is time for the next round of testing:

Test Firefox-specific bug fixes on Firefox (there may be only a few).

Consider doing the rest of your bug validation with a different screen reader/browser combination, such as JAWS on Chrome or Narrator on Edge.

By the same reasoning, you can also mix up your hardware (test on an iPhone 7 Plus, for example, rather than an iPhone X) or your operating systems (iOS 12 for one run, iOS 13 for another).
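A testing matrix like the one described above can be kept as plain data so that each retest round deliberately lands on a different combination. The sketch below is a minimal illustration, not a prescribed tool: the `Combo` type, the `COMBINATIONS` list, the `plan_round` helper, and the round-robin policy are all hypothetical. Round 1 uses NVDA on Firefox, Firefox-specific fixes always go back to Firefox, and other fixes rotate through the remaining pairings.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Combo:
    """One screen reader/browser pairing in the testing matrix."""
    screen_reader: str
    browser: str


# Hypothetical matrix; replace with the combinations your product supports.
# The first entry is the baseline used for the first round of testing.
COMBINATIONS = [
    Combo("NVDA", "Firefox"),
    Combo("JAWS", "Chrome"),
    Combo("Narrator", "Edge"),
]


def plan_round(round_number: int, fix_is_firefox_specific: bool) -> Combo:
    """Pick the combination used to validate a bug fix in a given round (1-based).

    Hypothetical policy: round 1 uses the NVDA/Firefox baseline, Firefox-specific
    fixes are always retested on the baseline, and all other fixes rotate through
    the remaining combinations so each retest covers a different pairing.
    """
    baseline = COMBINATIONS[0]
    if round_number == 1 or fix_is_firefox_specific:
        return baseline
    others = COMBINATIONS[1:]
    # Round 2 -> others[0], round 3 -> others[1], round 4 -> others[0], ...
    return others[(round_number - 2) % len(others)]


if __name__ == "__main__":
    for rnd in range(1, 5):
        print(rnd, plan_round(rnd, fix_is_firefox_specific=False))
```

Keeping the matrix as data rather than hard-coding it in a test plan makes it easy to add hardware or OS versions as extra fields when you want to mix those up too.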

Best Practice #3: You should test on multiple hardware platforms

Unless you support only a single hardware environment, you need to test on each one you support, because some people with disabilities stick with ONE platform.

People without disabilities can usually shift between platforms or browsers without any significant discomfort.

People with disabilities, by contrast, have often highly customized their assistive technology. That makes it difficult for them to move from one set of hardware to another (even when the device is otherwise identical), and they may not have a second environment to move to.

So, let’s say your organization supports both PC and Mac, but you only test screen readers on PCs. Not all users with disabilities who prefer the Apple environment will be able to move to a PC quickly.

Under these circumstances, testing only with PC screen readers does not provide equal access. If the platform is supported for non-disabled individuals, it must be tested with appropriate assistive technology for people with disabilities.

Best Practice #4: You need to test on older hardware platforms and screen reader versions

People with disabilities don’t upgrade hardware or software quickly.

People with disabilities (PwDs) tend to have lower rates of employment and, as a result, lower socioeconomic status. PwDs typically have less money to spend on new tech, so they often replace it only when the old tech is out of warranty and no longer working.

I don’t know a blind user who hasn’t been bitten in the behind at least once by upgrading one piece of their environment (OS, browser, Java, etc.) and having the whole assistive tech stack crater as a result. That makes PwDs hesitant to upgrade, even when the upgrade is free.

Because of this reluctance to upgrade, it is best not to test on the shiniest, newest versions of hardware, software, and assistive technologies, since those are the least likely to be in actual use by people with disabilities.

Best Practice #5: On PCs, if you can only test on one screen reader, test using NVDA

There are MANY screen readers available for Windows environments. The two leaders are JAWS and NVDA.

JAWS has long been the preferred screen reader of many blind users. However, it is incredibly expensive. The most significant difference between NVDA and JAWS is that JAWS provides a lot of bells and whistles (including scripting) that allow blind users to customize their configuration. With a Braille notetaker and JAWS, a former co-worker of mine could run a Google search and interpret the results faster than I could, and I type almost 100 WPM.

However:

Those bells and whistles that come with JAWS don’t add any value to sighted testers.

It’s rare (but not impossible) to see a situation where something works on NVDA and fails on JAWS.

Not every blind user can afford JAWS, especially those outside of the US who reside in countries that don’t have vocational rehabilitation departments willing to buy it for them.

If you are only going to be able to test one PC-based screen reader, make it NVDA. The chart (and its description) at the top of this article says it all: NVDA has been on a steady upswing over the past ten years, and JAWS has done the complete opposite. NVDA is free, and JAWS is not. If your product doesn’t work on NVDA, it doesn’t provide equal access to the broadest audience.

So, why not Narrator? In short, Narrator has too small an audience to be considered a serious contender yet.

Best Practice #6: Test what your users use

This best practice is where analytics (especially analytics about assistive technology use), plus what you know about your customers, can play a significant role in your decision-making.

Accessible surveys of your keyboard-using population are a good way to identify your screen reader users. Then look at the browsers and operating systems they are using.
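Once survey responses are in, a simple tally shows which screen reader/browser/OS combinations your users actually rely on, which in turn tells you what to put in your testing matrix. This is a minimal sketch, assuming each response has already been reduced to a (screen reader, browser, OS) tuple; the sample `responses` and the `rank_combinations` helper are hypothetical, not real survey data.

```python
from collections import Counter

# Hypothetical survey responses: (screen reader, browser, operating system).
# In practice these would come from your own accessible survey or analytics.
responses = [
    ("NVDA", "Firefox", "Windows"),
    ("NVDA", "Chrome", "Windows"),
    ("JAWS", "Chrome", "Windows"),
    ("VoiceOver", "Safari", "iOS"),
    ("NVDA", "Firefox", "Windows"),
]


def rank_combinations(rows):
    """Return (combination, share) pairs, most common first.

    Share is the fraction of respondents who reported that exact combination.
    An empty input yields an empty list.
    """
    counts = Counter(rows)
    total = len(rows)
    return [(combo, count / total) for combo, count in counts.most_common()]


if __name__ == "__main__":
    for combo, share in rank_combinations(responses):
        print(f"{' / '.join(combo)}: {share:.0%}")
```

Counting exact combinations, rather than screen readers alone, matters because, as noted above, some bugs only appear with a particular screen reader on a particular browser.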

Best Practice #7: Simulating screen readers is not sufficient

A couple of screen readers have “simulation” modes that let you visually read what the screen reader's auditory output would have been. Sorry, not good enough.

People who can see have biases built into their behavior. When sighted testers see the word “clickable” in the screen reader’s visual output mode, we know it is useless information. Frequently, we keep scanning visually to get to the next useful word, and we might not fully process the impact on a blind user. This is especially a problem when “clickable” is repeated several times, which frequently happens with sloppy HTML coding on NVDA. Hearing “clickable” announced eight times is vastly more annoying than seeing it in print eight times, where your brain might register it once and then scan for the next word that is not “clickable.” There is no substitute for using the actual tool in the real mode that an authentic user will experience.

The “law of diminishing returns” describes the point beyond which each additional unit of money or energy invested yields a smaller benefit than the one before, until the gains no longer justify the investment. In the accessibility field, there is no better example of the law of diminishing returns than screen readers.

There are diminishing returns to each additional screen reader you test:

Each new screen reader takes as much time as any of the previous screen readers being tested. There are no significant, measurable economies of scale when talking about manual assistive technology testing.

You may not find any new bugs.

Any new bugs you do find are likely to be specific to that screen reader.

Adding an extra round of screen reader reviews for a screen reader that has 2.4% of the market might not make sense UNLESS it is the screen reader used by your most important customer. And that’s where analytics can help you make the right decision.
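The diminishing-returns argument can be made concrete with a little arithmetic: if every screen reader costs roughly the same testing effort, the coverage each additional one buys shrinks as you move down the list. The sketch below assumes hypothetical market shares (NVDA, JAWS, and Orca loosely rounded from the figures quoted in this article; the others are placeholders, not survey results) and a hypothetical `must_test` set for the screen readers your key customers use. The `choose_screen_readers` helper and its greedy policy are illustrations, not an established method.

```python
# Hypothetical market shares (fractions of users). Substitute your own
# analytics or the latest survey data before relying on the result.
SHARES = {
    "NVDA": 0.40,
    "JAWS": 0.40,
    "VoiceOver": 0.13,
    "Narrator": 0.03,
    "Orca": 0.006,
}


def choose_screen_readers(shares, target, must_test=frozenset()):
    """Greedily pick screen readers until cumulative share reaches `target`.

    Each pick is assumed to cost the same testing effort, so the marginal
    gain of a round is simply that screen reader's share. Names in
    `must_test` (for example, the one your most important customer uses)
    are added even after the target is met; names not present in `shares`
    are ignored. Returns (name, marginal_share, cumulative_share) tuples.
    """
    plan = []
    covered = 0.0
    # Highest share first; ties keep their original dictionary order.
    for name, share in sorted(shares.items(), key=lambda kv: kv[1], reverse=True):
        if covered >= target and name not in must_test:
            continue
        covered += share
        plan.append((name, share, covered))
    return plan


if __name__ == "__main__":
    for name, gain, total in choose_screen_readers(SHARES, 0.9, must_test={"Orca"}):
        print(f"{name}: +{gain:.1%} (cumulative {total:.1%})")
```

Printing the marginal gain next to the cumulative total makes the trade-off visible: the first two rounds cover most users, while a must-test screen reader with a tiny share is included only because of who uses it.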

With thanks to Jared Smith for reviewing. Any mistakes are mine.
