Very convincing nonsense

Welcome to the 78th edition of this newsletter!

With each email I'm sharing material that has inspired me recently. I'm hoping it will inspire you, too. If you want to support my work, you can sign up for my Patreon. This will get you access to exclusive material every week.

If Patreon is not your thing but you enjoy what I'm doing, feel free to send me a little something via Paypal. I'll use the funds to pay for the fee the service provider of this Mailing List charges me every month. If there's money left, I'll invest it into the Japanese green tea that fuels much of my creative work.

There is a lot of talk about AI these days. If you've been on this Mailing List long enough, you know that I've used AI for all kinds of things, including having it write my own largely fictionalized biography.

Last night, I had an idea that I thought was interesting. This happens a lot. Usually, when I check back the next morning, about 9 out of 10 of the ideas are either complete nonsense or not all that interesting.

There was a thought that popped into my head about ChatGPT. I'm sure that you have seen it discussed widely in all kinds of news reports. It can write articles and do people's homework.

Can it, though?

I was wondering what would happen if you fed it a question that contains wrong information. Would it tell you about this? Or would it simply take what you presented at face value and produce something from it?

I think you can immediately see how or why this is an interesting question, right? And you probably want to know the answer.

So let me show you a picture of my cat that just got a "lion cut" to make you wait just a tiny little bit longer. Upping the suspense -- it's an old director's trick.

You see, diverting people's attention is one of the ways you can sneak in false information. I think anyway. It sounds convincing so it must be true.

Anyway, I thought I'd ask ChatGPT questions from the world of art. Given the nature of the internet, I decided to not ask about photography (people get upset) and I decided to ask questions about dead people (dead people don't tend to get upset).

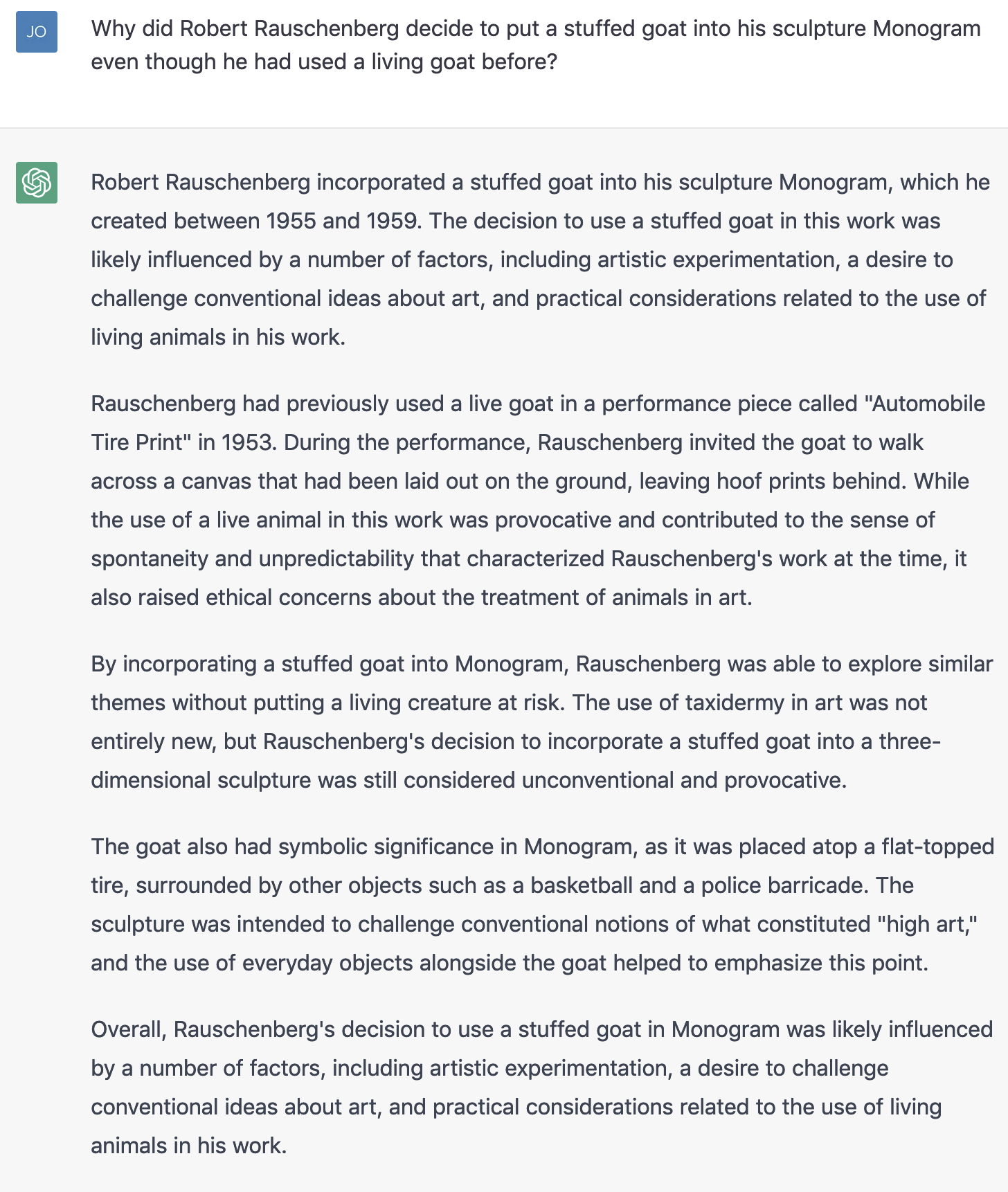

So here's my first question and the answer I got from ChatGPT:

Sounds good, right? Except that I totally made up the fact that Robert Rauschenberg sued Andy Warhol. Or rather I should say that if he did then I had no idea that he did.

But the answer sounds so convincing. In fact, it made me think that by sheer coincidence, I stumbled upon something I had not known about before. I Googled it.

Not surprisingly, there actually exists some information about Robert Rauschenberg, Andy Warhol, and copyright. After all, ChatGPT works with existing text (whether it "knows" about this one I don't know). Turns out both Rauschenberg and Warhol were sued separately over copyright. But they didn't sue each other.

Having stumbled this way, I thought I'd make my next question more nonsensical. Let's see how this went.

I'm sure you've all heard about the live goat performance called "Automobile Tire Print" that Robert Rauschenberg staged in 1953.

You haven't? Well, that's because it's nonsense. Let's start with common sense. Why would a goat walking across a canvas result in an automobile tire print?

But maybe this was just some sort of joke or part of the artwork, right? Well, no. Here's the actual information from the people at MoMA:

"Automobile Tire Print (1953) records one of Robert Rauschenberg’s most intriguing collaborative efforts. In 1953, the artist directed composer John Cage (1912–1992) to drive his Model A Ford in a straight line over twenty sheets of paper that Rauschenberg had glued together and laid in the road outside his Fulton Street studio in Lower Manhattan."

It would seem that ChatGPT not only makes up facts, it also changes actual pieces of art into something different.

Honestly, if I were a student thinking about using ChatGPT for some test, I'd now reconsider that thought.

I produced one more example. I thought I'd come up with something that is nonsensical and (I hope) widely known as being nonsensical. Here we go:

That's just really, really horrible (if you don't know why, read this).

Not so long story short: you can use ChatGPT to get text that looks convincing but that contains completely false information. And you can even feed it the false information -- the algorithm is unable to correct it.

If you don't know the facts in ChatGPT answers, how will you be able to separate the nonsense from the truthful stuff?

I was going to ask ChatGPT to write something thanking you for reading this. But now it's broken ("Something went wrong"). So I'll just do it myself:

Thank you for reading!

-- Jörg