Research Roundup (#9)

Welcome...

Welcome to the ninth-ever Research Roundup! A weekly catch-up on the latest developments in the field of XR research.

It’s been a while, so let's not hang around.

The Week in 3 (Sentences)

Generative AI and XR featured in several preprints this week with TexAVi demonstrating text-to-VR-video generation, VideoLifter generating 3D models from normal videos, and researchers using LLM integration for more responsive conversational avatars.

Mental health-focused applications were also on the agenda, with a new meta-analysis showing VR matches traditional anxiety treatments in effectiveness whilst reducing costs, original research on reducing pre-surgery anxiety with VR compared to tablet interventions, and a pilot study on supporting the mental health of those facing life-threatening illnesses.

And finally, new studies on accessibility and user experience looked at real-time AR captioning to support deaf and hard of hearing students, and explored how users might balance curiosity and discomfort whilst navigating VR environments.

The Week in 300 (Words)

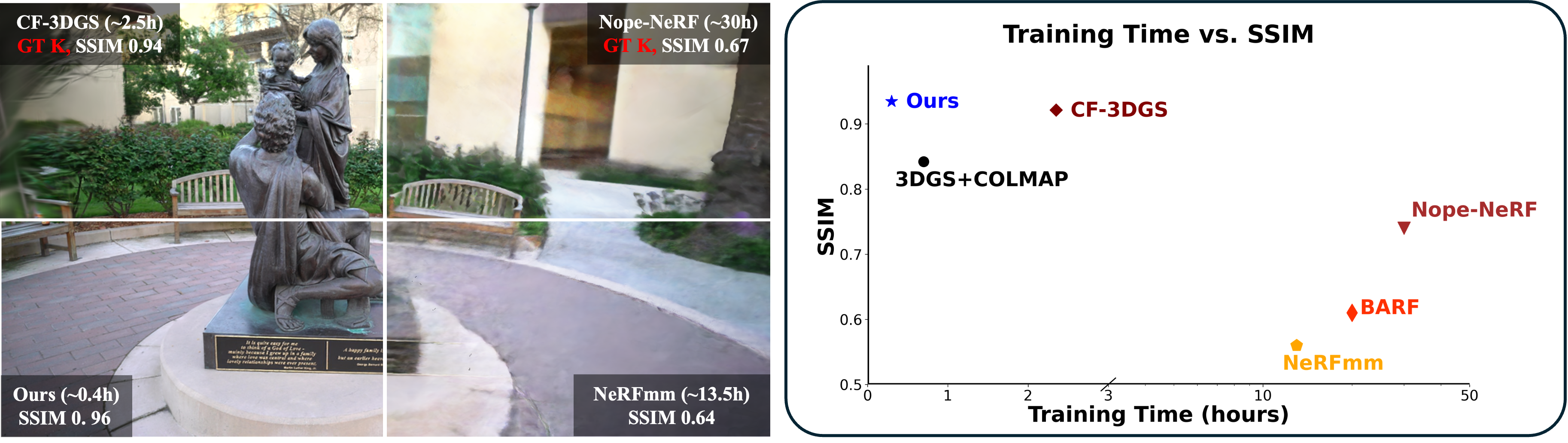

Whilst turning monocular videos into 3D models isn’t a new concept, reliable outputs can be difficult to produce and computationally expensive. It was with some excitement that I saw this preprint from researchers (including some from Meta) introducing VideoLifter (VideoLifter: Lifting Videos to 3D with Fast Hierarchical Stereo Alignment). I won’t tell you I get all the technical details, but the promise is a faster and more computationally efficient approach to converting video to 3D models, with higher visual fidelity than the current state-of-the-art. How this will pan out in the long run is unclear, but for everyone looking for faster and more efficient content creation this deserves an honourable mention.

None of us can really understand what it is like to be diagnosed with a life-threatening illness (LTI), or its effect on our mental health, until it happens. This week researchers in America have suggested that using VR for psychedelic experiences, as part of a broader programme of psychotherapy, could be a novel and effective use of the technology to support the mental health of people with an LTI. Building on literature which suggests that psychedelics can support mental health, they developed psychedelic experiences in VR, sidestepping some of the significant regulatory issues that you might expect. The study was a pilot, and the focus was on feasibility rather than efficacy, but with positive results it raises the intriguing possibility of unlocking the medical potential of psychedelia using VR.

And finally, researchers in Singapore and Sri Lanka have been developing an AR application to support students who are deaf or hard of hearing with tailored captions displayed on smart glasses. The researchers took a user-centred design approach, working with eight students to iteratively design an application, before developing it and testing its capabilities in a series of user studies. The tech itself was a combination of a smartphone mounted on a low-cost headset, and their own SDK which leveraged Unity. A wonderful example of ‘AR for Good’.

Paper of the Week

Paper of the week goes to another preprint. Whilst we don’t usually feature so many, this week has seen a number of interesting and innovative ones. As we all know, cybersickness when using VR is a very real problem for some people. While it may never be completely 'solved', developers and researchers go out of their way to try to design it out and put safeguards in place.

But then some users will knowingly push the boundaries, finding ways to interact with the environment that eventually make them feel sick! Behavioural researchers have tried to account for this in various ways including the idea that we're driven to find a balance between curiosity and discomfort.

Li and Wang this week shared their quantitative analysis of the interplay between the exploratory behaviours of participants and reports of cybersickness. They found that the two were related, with more exploratory behaviours associated with lower self-reports of sickness. They argued that this behaviour is observed because users are indeed often balancing out curiosity and discomfort - particularly when there is lots to be explored.

So why paper of the week? The in-depth quantitative analysis puts flesh on the bones of an important idea when it comes to VR: the extent to which curiosity drives behaviour. We love hard evidence that supports ideas with an intuitive appeal, making sure things are grounded in data. I don't know about anyone else, but I've certainly had my fair share of "I wonder what happens if..." moments followed by "yeah, that was a bad idea". Perhaps those moments weren't so irrational after all.