Research Roundup (#8)


Welcome...

Welcome to the eighth-ever Research Roundup! A weekly catch-up on the latest developments in the field of XR research.

It’s been a busy week, so let's not hang around.

The Week in 3 (Sentences)

New healthcare applications continue their steady march this week, with studies demonstrating benefits for neurosurgical informed consent, a review of the evidence for chemotherapy support, and pilot data on supporting the wellbeing of caregivers in hospice settings.

Novel insights into user experience were reported, exploring how mobility shapes creative design, demonstrating machine learning approaches to detecting cybersickness, and revealing concerning vulnerabilities in sensor data privacy.

And finally, educational XR research continues to innovate, with virtual chemistry labs proving more useful than lectures, tactile interfaces enhancing learning, and AI-powered language learning suggesting promising future directions for virtual training.

The Week in 300 (Words)

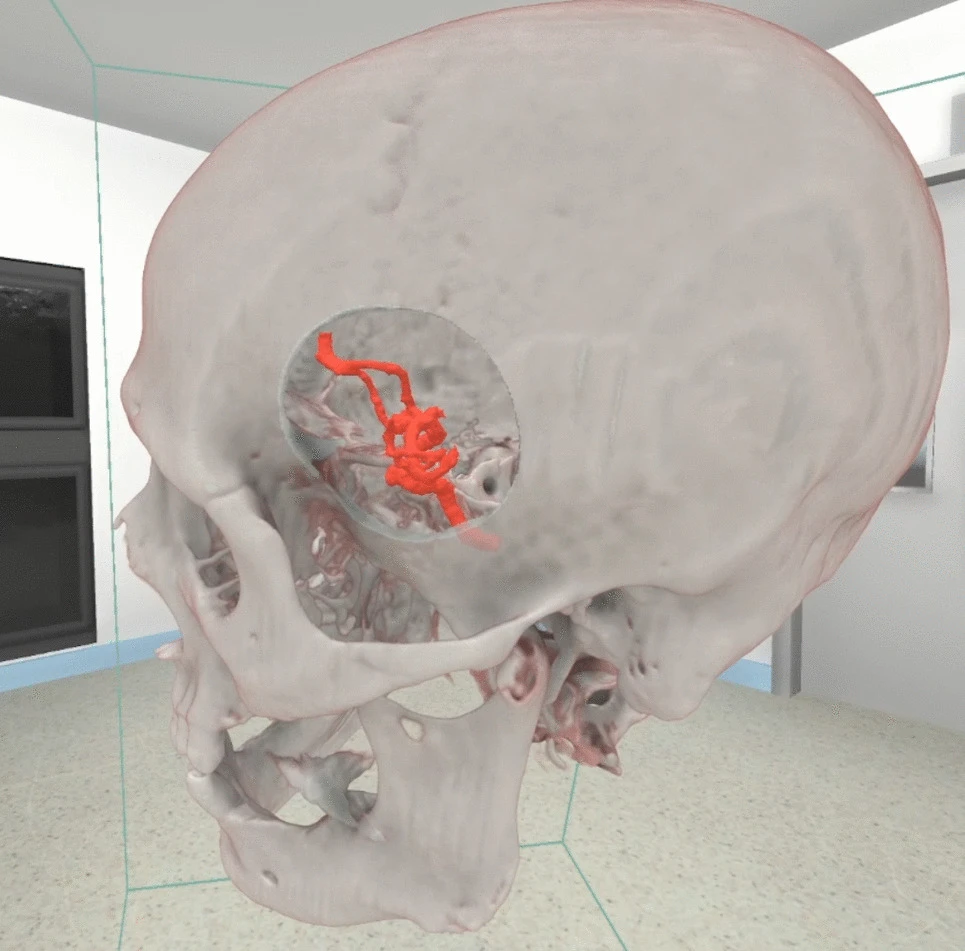

This week researchers looked at informed consent in healthcare for the second time in a month, this time focusing on patients' understanding of impending neurosurgery. Pilot data was collected from 10 patients soon to go under the knife. Each patient looked around a 3D model of their skull, with the site of the surgery highlighted and a commentary from the neurosurgeon. The general aim was to improve patients' understanding, and a questionnaire after the experience confirmed that it did, although the pilot nature of the study meant there were no pre-/post- measures and no control group. Unlike the study earlier this month, the focus was less on saving clinicians time and more on ensuring the patients understood what was about to happen. With a full RCT to come, watch this space.

Predicting and mitigating cybersickness in VR remains a hot topic, with new research this week exploring the use of physiological signals to predict ratings of cybersickness. A whole host of markers were collected whilst participants rode a virtual rollercoaster. These included EEG, electrodermal activity, blood volume pulse, and skin temperature signals, all collected via an EEG device and wristband. Subsequent ratings on the Simulator Sickness Questionnaire (SSQ) were predicted with 86.66% accuracy using machine learning methods, higher than other existing techniques. Whilst 100% may seem tantalisingly out of reach, this is a particularly impressive number.

And finally, BSc chemistry students at Dilla University in Ethiopia learnt about a range of chemical tests in either physical labs, virtual labs, or a lecture format. Pre- and post-test measures were taken, with students in the physical labs demonstrating better understanding than those who had been lectured. Those in the virtual labs also understood more than those in the lectures, confirming the utility of virtual spaces. Performance in the physical and virtual labs was not statistically different, although there was a slight numerical advantage to having learnt in a physical lab. Virtual labs could therefore offer a viable alternative where the financial resources to host physical labs are unavailable, opening up chemistry education to a wider global audience.

Paper of the Week

Privacy concerns continue to rank highly among the barriers to implementing XR. Our paper of the week therefore goes to researchers at Texas State University, who investigated whether eye tracking and motion data could be used to circumvent privacy protections and identify users.

Privacy concerns are not new, and the literature suggests a number of solutions, such as smoothing eye gaze data to remove telltale signals before it is sent back to the developer.

What makes this paper our paper of the week is that the researchers took a more holistic view and considered several data streams at once.

They specifically looked at what could be achieved if there were no privacy protections, protections on some data streams, or protection on all data streams. What they found was that motion and eye gaze data could be exploited if only some data streams were protected, making user identity as vulnerable as if there were none at all.

The threat model in the paper assumed an adversary who had relatively privileged access to motion and gaze data from a user: a scenario that would not be uncommon amongst developers who could collect such data routinely without it seeming particularly untoward.

All in all, this is a nice reminder of why privacy concerns are at the top of many people’s lists. Data like this will inevitably be the target of malicious actors in years to come, but for now, even the more basic access to data we might expect a developer to have seemingly needs a full range of privacy measures to prevent users from being identified.

Want more?

If you're still itching for more, then check out the links below to our social media sites. Every day we archive the most interesting XR studies within days of them being published.

And if you have feedback about the Research Roundup, we'd love to hear it. DM us on social media.