Research Roundup (#15)


Welcome...

Welcome to the fifteenth-ever Research Roundup! A weekly catch-up on the latest developments in the field of XR research.

It’s been a busy week, so let's not hang around.

The Week in 3 (Sentences)

New studies on professional training suggested that VR simulation could improve emergency physicians' accident response, while meta-analyses of MR vocational training reported positive effects across measures of behaviour, cognition, and affect despite unclear long-term benefits.

Research on the implementation of XR in education led to the development of guidelines for VR in healthcare education, while proposals for the DigCompEdu framework further defined XR competencies for teachers.

And finally, accessibility and inclusion were high up the list with suggestions that peripheral teleportation might reduce cybersickness, while new design guidelines for inclusive avatar representation were shared.

The Week in 300 (Words)

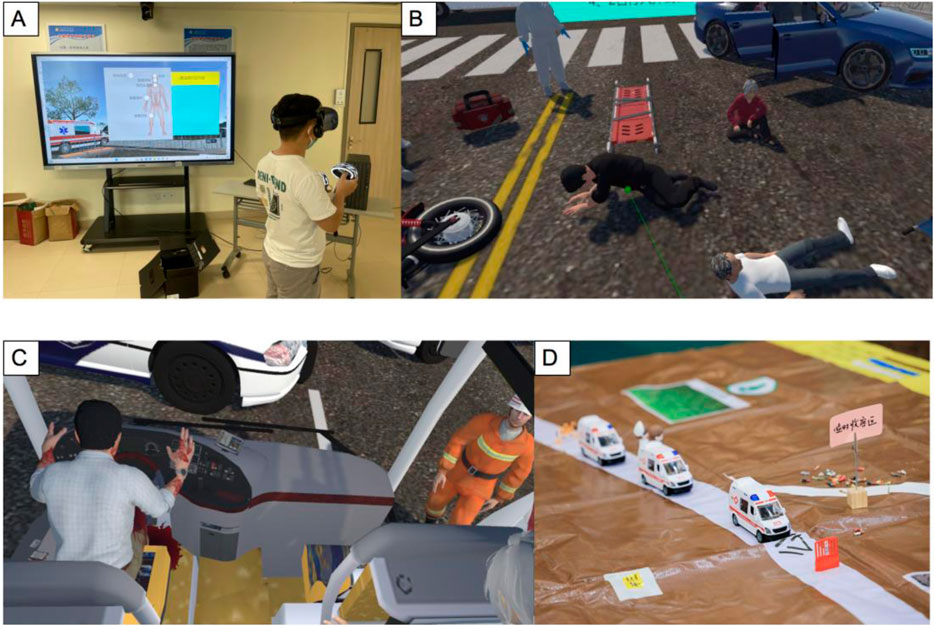

Once again we start the roundup with research from China, focusing on training for emergency responders to multi-vehicle collisions. Researchers compared VR-based training to traditional training methods, finding seemingly small but statistically significant increases in all three outcomes (on-site assessment, triage, and transportation decisions). Researchers always have to choose how to frame their control groups, and it’s important to contextualise the results in these terms. ‘Business as usual’ here consisted of lectures and tabletop exercises, and the VR training outperformed this. Whether the VR training would outperform the same training delivered on a desktop is unclear, and the possibility remains that it is the training and not the ‘VR’ that is crucial here.

XR in education had two useful sets of guidelines published this week. The first was a set of recommendations on developing the DigCompEdu framework to include XR-related teaching competencies. The second focused on the implementation of XR in healthcare education, using an adapted Delphi process to develop the recommendations through three rounds of expert consultation. In the first round, open-ended questions were asked of the expert panel (n=75). The responses were then turned into statements for rating in round two (n=31), followed by the final recommendations for agreement in round three (n=27). The main recommendations were to consider cognitive load, the agency of the learners and participatory design, as well as integration into existing systems. All very reasonable, and a useful resource for anyone involved in implementing XR in education (not just healthcare).
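For a sense of how much the Delphi panel shrank between rounds, here is a quick back-of-the-envelope sketch in Python. It assumes the three reported counts (75, 31, 27) refer to respondents from the same panel. The `retention` helper is purely illustrative and not something from the paper.

```python
# Panel sizes reported for the three Delphi rounds.
panel_sizes = {"round 1": 75, "round 2": 31, "round 3": 27}


def retention(sizes):
    """Return each round's panel size as a percentage of the first round."""
    baseline = next(iter(sizes.values()))
    return {name: round(100 * n / baseline, 1) for name, n in sizes.items()}


for name, pct in retention(panel_sizes).items():
    print(f"{name}: {pct}% of the original panel")
```

On those assumptions, a little over a third of the original panel was still involved by the final round, which is worth keeping in mind when reading the level of agreement reported.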

And finally, to keep the theme of guidelines going, researchers in the USA have shared a preprint of guidelines for inclusive avatar design. The guidelines were initially developed through a review of the literature and interviews with 60 individuals with disabilities. The 20 design principles were then run through a heuristic evaluation study with 10 VR practitioners, whittling them down to 17. The 17 sat within five broad categories: avatar appearance; body dynamics; assistive technology design; peripherals around avatars; and customisation control. Shared prior to potential publication, the guidelines provide plenty of food for thought when developing avatar choices in both social VR and other applications.

Paper of the Week

The paper of the week this week is one that just shouldn’t be the paper of the week, but it is. I can’t quite make my mind up if this is the breakthrough of the century or something to roll your eyes at, so I’ll let you decide.

Researchers in Ohio have developed a sensor-actuator-coupled gustatory interface for bridging virtual and real environments. Yes, researchers in Ohio have developed a way of digitally transmitting taste.

The actuator itself makes use of a mini-pump to pump chemicals (aka concentration-controlled tastant solutions) into the mouth to trigger different taste sensations.

At the other end is a sensing platform or “electronic tongue” that analyses the taste chemicals in the real target. This information is then sent wirelessly to the actuator to reproduce the experience. The researchers successfully reproduced both lemonade and chicken soup in digital form.
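For anyone who finds it easier to picture as code, the sense, transmit, reproduce loop described above might look roughly like the sketch below. Everything in it is an assumption made for illustration: the five taste channels, the JSON message format, the 0 to 1 pump scale, and the sample values are hypothetical and not taken from the paper.

```python
import json
from dataclasses import dataclass

# Hypothetical taste channels; the paper's actual tastants may differ.
CHANNELS = ("sweet", "sour", "salty", "bitter", "umami")


@dataclass
class TasteReading:
    """Concentrations (arbitrary units) from a hypothetical electronic tongue."""
    concentrations: dict


def encode(reading):
    """Serialise a reading for wireless transmission (JSON is an assumption)."""
    return json.dumps(reading.concentrations).encode("utf-8")


def decode(payload):
    """Rebuild the reading on the actuator side."""
    return TasteReading(json.loads(payload.decode("utf-8")))


def pump_commands(reading, max_level=1.0):
    """Map each channel's concentration to a pump level clamped to [0, max_level]."""
    return {
        ch: min(max(reading.concentrations.get(ch, 0.0), 0.0), max_level)
        for ch in CHANNELS
    }


# Made-up values standing in for a lemonade-like sample.
sample = TasteReading({"sour": 0.7, "sweet": 0.4})
commands = pump_commands(decode(encode(sample)))
print(commands)
```

The point of the sketch is only the shape of the pipeline: the sensing end reduces a real sample to a handful of numbers, and the actuator end turns those numbers back into pump settings.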

The authors argue that the technology heralds a new dawn in not only being able to see and hear in VR, but also being able to taste. Something I’ve never really needed to do, but clearly needed for the complete sensory experience.

I wonder if you could taste in the Sensorama?