Research Roundup (#13)

Welcome to the thirteenth-ever Research Roundup! A weekly catch-up on the latest developments in the field of XR research.

It’s been a busy week, so let's not hang around.

The Week in 3 (Sentences)

- New studies in healthcare have produced mixed results this week, with VR training increasing medical students' confidence in navigating difficult conversations and VR brain death training reducing training time, though a multicentre trial found ICU orientation only improved understanding without reducing relative distress.

- Researchers have been testing new approaches to XR interaction, evaluating an AI-driven adaptive learning system against traditional guidance methods, comparing SkillAR motor skills feedback to conventional training, and proposing a conceptual design for vehicle passenger AR infotainment.

- And finally, small-scale therapeutic studies provided preliminary evidence in support of VR interventions for eating disorders (n=40) and cancer patient anxiety (n=20), while combining physiological and acoustic data achieved 85% accuracy in predicting anxiety during VR therapy sessions.

The Week in 300 (words)

Regular readers will know that VR and education get a bit of a hard time around here, with mixed results often reported. However, something that regularly emerges unscathed is soft-skills training. So this week it was no surprise to see more evidence supporting the integration of soft-skills education into the curriculum, as medical students learnt to navigate angry conversations in healthcare settings. This isn’t the first time the software (Bodyswaps) has featured in the literature in the last couple of months, with similarly positive results reported back in December for anti-racism training. Is it because of its experiential nature? Who knows? But it looks like soft-skills training is becoming one of those good news stories that VR in education regularly needs.

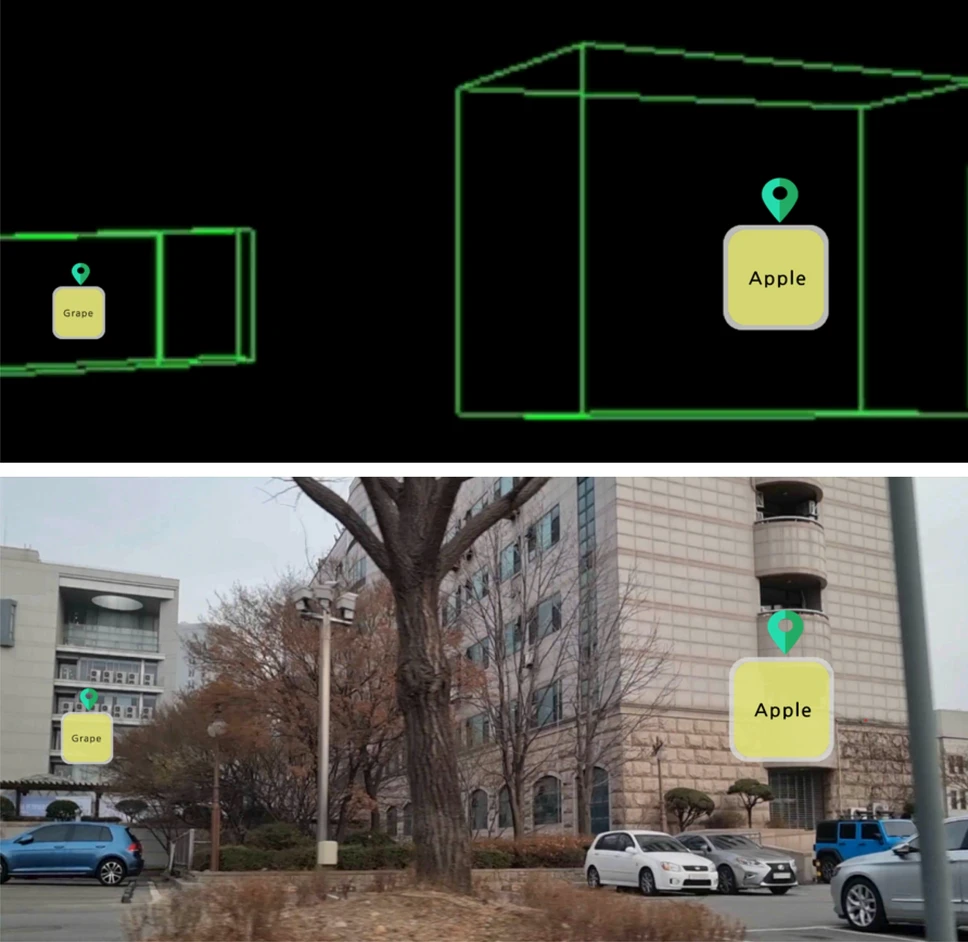

Ever sat in the back of a car and wished you knew more about the buildings around you? I haven't, to be honest, but some people clearly have, as a research team in South Korea has designed an AR display that fixes to the rear passenger-side window. Using an AR film on the window and a projector, the system identifies local landmarks and provides appropriate labels. The user test reported in the paper indicated that, compared to a display in the back of the seat, users remembered more about the labels for the buildings they passed and had better general spatial knowledge. Whilst this is only an initial prototype, it nevertheless provides a curious use case: as a tourist in the back of a taxi, I imagine this would be particularly useful.

And finally, one of the potential benefits of VR systems is their ability to induce awe. Immersive scenes of nature in particular can induce a complex set of emotions that make us feel more connected to the scene and part of something greater than ourselves. Researchers have investigated whether inducing this feeling may help reduce anxiety in cancer patients as they go through treatment. After 5 minutes of watching awe-inducing 360-degree videos of New Zealand, the patients in their sample not only felt less symptom distress and anxiety but also felt an increased connectedness to nature and spirituality. Another important contribution to the canon of VR for Good.

Paper of the Week

The paper of the week this week is a curious one. I was super excited to read it, and super keen to share. On the social media feeds the posts got... no reactions at all. So as Valentine's Day approaches, this is my love letter to Botesga et al.'s most recent paper on task-blind adaptive virtual reality.

So we've seen a lot of talk about adaptive VR in the literature lately, which is completely warranted. Drawing on multimodal data and machine learning, researchers are developing training that responds to what the user is doing and adapts its guidance accordingly. Although the evidence base is looking a little mixed (that's for another day), the concept is an exciting one to explore.

But this research tends to be highly idiosyncratic. Pizza making and origami folding spring to mind. This is because the system has to be trained on the specific task first. Not too big a deal for a proof of principle, but not exactly ground-breaking at the moment.

Researchers in France have been working on a system that can support the user even if it is blind to the specific task they are completing. It does this by focusing on the cognitive processes rather than the specifics of the task, seeking to enhance visual attention and recall. Simple sensors are used to track head, hand and eye movements, and prompts are driven by inferences about the cognitive state of the user.

Compared to a help system based on task-specific knowledge, the blind system was found to be no worse. But also no better.

Why is this so exciting? It is only a proof of concept, just one use case, etc. etc., but it opens up a whole host of possibilities. Adaptive VR already has its limits because everything is so incredibly task-specific. To be able to open that box up, if only a little, feels like a big deal. But it may just be me.