Windows Copilot Newsletter #21 - Copilot for every person; Gemini 1.5 Pro; AI and jobs

Windows Copilot Newsletter #21

Microsoft wants a 'Copilot for every person'; Google's latest Gemini 1.5 Pro breaks cover; special focus on AI and jobs...

G'day and welcome to the twenty-first edition of the Windows Copilot Newsletter, where we curate all of the most important stories in the rapidly evolving field of AI chatbots. It's been a week of interesting developments, so let's dive in...

Top News

Microsoft promises 'Copilot for every person': More than thirty years ago, Bill Gates prophesied a 'PC in every home'; now the firm he founded is looking to provide a 'Copilot for every person'. Is that doable? Profitable? Sensible? Read it all here.

Google Gemini 1.5 Pro goes public: Gemini 1.5 Pro isn't yet available as an AI chatbot, but Google has made its latest-and-greatest model available to users worldwide in 'public preview'. With a million-token context window, Gemini 1.5 Pro could enable some breathtaking innovations - such as the ability to listen to audio. Read that here.

It's the end of the world as we know it: The heads of both NTT and Japan's Yomiuri Shimbun newspaper issued a joint proposal outlining some of the catastrophic consequences of unchecked AI - and a rather helpful list of policies to guide AI safely and wisely. Read all about that.

Special on AI and Employment

Shortsighted companies seeking to replace workers with AI: We knew it would happen, and now it is happening - but will it work? Probably not, according to this article.

Who shall we fire first? Some big companies got together to rank which roles they'd most likely automate away - and how to cushion the blow for employees being made redundant. Read about it here.

Nobody knows anything when it comes to AI and jobs: A WIRED interview with Mary Daly, the CEO of the Federal Reserve Bank of San Francisco, shows that even one of the nation's leading economists has no clarity on how AI will affect the jobs market. Read that here.

Top Tips

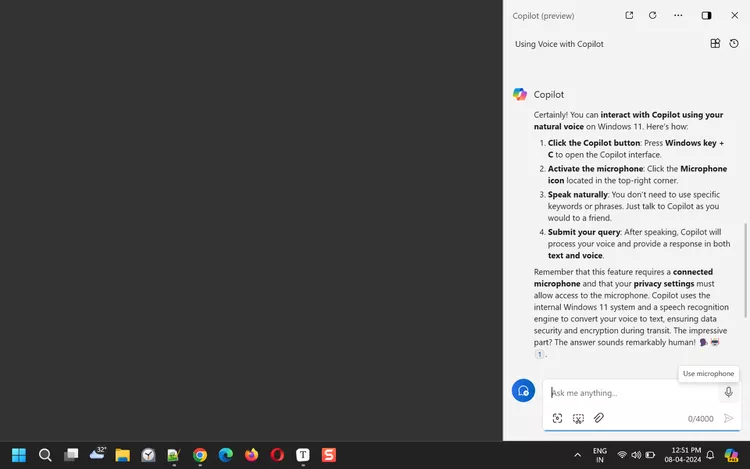

10 great ways to work with Copilot: An excellent and useful listicle.

Safely and Wisely

Can we even have an unbiased image generator? One of the writers at The Verge has been trying to create an image of an Asian man with a white woman - and it's not working. Why? Biases in the training data that seem to persist. And those biases are a human failure. Read that here.

Fake law firms filing fake infringement notices: In a story that sounds more like an episode of Black Mirror than reality, Ars Technica details a fake law firm that's sending fake DMCA takedown notices to unsuspecting folks. It's a sophisticated, AI-powered SEO scam that you can read about here.

Longreads

The New York Times took a look at the 'data harvesting' practices of three big AI firms - OpenAI, Google and Meta. They did not like what they found.

Last year, the New York Times wrote a clear and playful explanation of how AI chatbots 'learn' from the texts they're fed. Explore that here.

New White Paper 'De-Risking AI'

On Thursday 18 April, Wisely AI will release its second white paper. 'De-Risking AI' identifies six key risk areas for any organisation working with AI: anthropomorphising AI chatbots; malicious content and copyright violations contained in training data; hallucinations; sharing, privacy and data sovereignty; prompt subversion and prompt injection attacks.

To reserve your copy, register your interest here.

More next week - we’ll be back with the latest AI chatbot news!

If you learned something useful in this newsletter, please consider forwarding it to someone else who might benefit.

Mark Pesce

mark@markpesce.com // Wisely AI