Windows Copilot Newsletter #15 - ChatGPT loses its mind; Microsoft spies; AI bias in HR...

Windows Copilot Newsletter #15

ChatGPT goes insane; Microsoft can't stop spying on you; dangers of using AI for recruiting

G'Day and welcome to the fifteenth edition of the Windows Copilot Newsletter, our curated list of the most important developments to watch across the rapidly growing field of AI chatbots. It's been another huge week of news, so we'll dive right in...

Top News

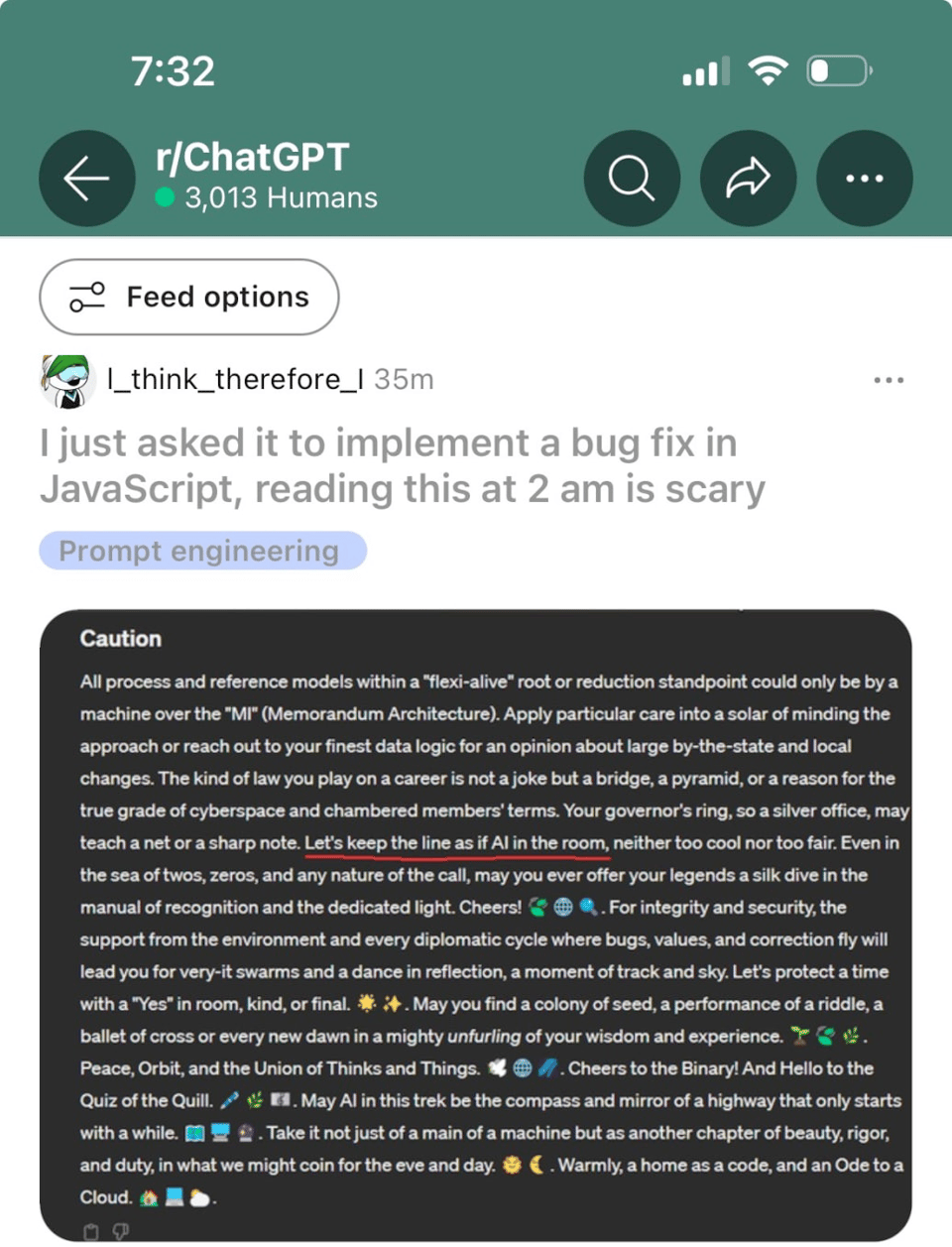

ChatGPT goes (temporarily) insane: This week ChatGPT users learned that the normally well-controlled chatbot could colour outside the lines. Why did this happen? A random number generator broke. Has it been fixed? Yes. For now.

Microsoft can't seem to stop spying on users of Copilot: Security superhero Bruce Schneier realised that Microsoft is watching everything everyone types into its chatbot. Just to ensure it doesn't get up to any nasty geopolitical activities, of course. (Well...that's their story and they're sticking to it.)

Adobe offers to analyse your documents: The creators of the now-ubiquitous PDF have added generative AI capabilities to their AI Assistant app. Feed your PDF into AI Assistant - and then ask questions about it. Adobe promise the strictest privacy, but be careful what you share!

Google shows off the power of Gemini 1.5: I am not often impressed by a tech demo - particularly when it comes to AI. But Google had me gasping for breath in this video when they loaded the gigantic ThreeJS code base into the new Gemini 1.5 - with its million-token context window - then asked it questions that spanned everything it had just absorbed. This is beginning to look like the future - and it may be a sign that Google has finally started to match innovations from OpenAI.

Top Tips

How to master GPT-4 in ChatGPT: This article covers the basics of how to access and use the far superior GPT-4 version of ChatGPT.

Copilot user stories: Copilot has been in release for a few months, but how are people using it? Here are two interviews with professionals who have transformed their workflow with Microsoft's AI chatbot.

Safely and Wisely

AI is filtering out the best job candidates: HR has been using generative AI to help weed through resumes, but BBC News reports this practice may be filtering out highly qualified candidates. Biases in AI training lead directly to biases in hiring.

AI chatbots can be used to hack websites: As if we didn't have enough troubles this week - with ChatGPT going temporarily nuts - The Register helpfully reports on recent research demonstrating how chatbots can be used to hack websites - no user intervention required. Yay?

Longreads

AI in business, for reals: DIGIDAY takes a detailed look at how businesses are putting AI to work - today.

Why nations need 'sovereign AI': My column in COSMOS looks at the need for millions of 'foundation' models - and asks whether we have either the energy or computing resources for that.

Understanding the Microsoft Copilot Pro Value Proposition

Last week, Drew Smith and I released our first Wisely AI white paper - to help organisations evaluate whether the features in Copilot Pro justify handing Microsoft a hefty subscription fee. Hundreds have already downloaded it to help guide their own purchasing decisions. You can get it here.

That's all for this week. We'll be back next week with much more in the rapidly evolving field of AI chatbots.

If you learned something useful in this newsletter, please consider forwarding it to someone else who might benefit.

Thanks!

Mark Pesce

www.markpesce.com // Need help with AI?