Am I a Luddite??

On AI, propaganda, big brother and technofascist oligarchy...

“We live in a nightmare of falsehoods, and our first duty is to clear away illusions and recover a sense of reality.”

Nikolay Berdyaev

Lud·dite /ˈləˌdīt/

Originating in the early 19th century: possibly named after Ned Ludd, a participant in the destruction of machinery.

noun

a person opposed to new technology or ways of working.

"a small-minded Luddite resisting progress"

a member of any of the bands of English workers who destroyed machinery, especially in cotton and woolen mills, that they believed was threatening their jobs (1811–16).

I'm not a Luddite. I don't think I've ever been one. Rather, for most of my life I practically looked down on Luddites as redundant relics of a long-gone past that will never come back... Over the last quarter century, with technology rapidly advancing and evolving, I have consistently been an early adopter of new tech. For example, as silly as it may sound, browsing through the Google Graveyard, I can easily remember using over 50 of the now-defunct products.

Yet lately something has shifted. Maybe it's my age: I am about to turn fifty, and even though age really is just a number, it is a pretty big number. Besides, in the midst of my midlife crisis, my mental state has not been where it needs to be, especially in light of the tragic state of the entire world…

Thus, I'm feeling myself becoming a Luddite.

It is an open question in my mind whether my ire should be directed at the Nazi techbro oligarchs or at the folks enabling the inevitable takeover by the so-called “artificial intelligence,” or “AI.” Though these are two sides of the same coin.

The apocalyptic development of pseudo-AI quietly and quickly became one of the greatest environmental threats and the most outrageous case of intellectual theft in human history, and it is driving an exponential increase in outright lies, misinformation and basic garbage...

The technology is becoming the instrument of propaganda and authoritarian control that we all should have seen coming for a very long time...

Just earlier today, newly “elected” Speaker Mike Johnson recited a long prayer attributed to Thomas Jefferson. As many have pointed out, Jefferson never said any of this. That’s the point. People are inundated with so much propaganda, misinformation, and disinformation that the distinction between facts and factoids starts to blur, and sooner rather than later the distinction between truth and lie follows, providing the perfect ground to grow an entirely new “reality” convenient for controlling the masses.

You may have seen recent news that Meta has announced the upcoming release of AI-enabled users on Facebook and Instagram (why Threads was ignored is beyond me). People quickly started to point out AI users already on the platforms.

These AI users have the potential, if not the likelihood, to be content moderation nightmares. These fakes are functionally the same thing as the spam bots social media platforms have chased out for years. Why would someone actively worsen their platform in such a way? What’s the point even? The obvious answer should be to increase engagement, with AI users posting to keep people using the platform. Meta, like most if not all of Big Tech, is very predatory with content creators. While established creators would not fall for the gimmick, the beginner ones and the casual ones (who I think are the largest contingent of creators) will. In the end, it’s more ad revenue. Of course, Meta being Meta, you can’t block the account.

What’s interesting is that these accounts, like the one above, were created in September 2023. Unless Meta has altered the timestamps of the posts, which isn’t that unrealistic, the announced AI users have yet to be released, and what people found are older versions that Meta clearly, and clearly unsuccessfully, tried to test out a year ago.

Genuinely amazing to watch them turn their product into a sanctioned scam-n-spam machine.

Meanwhile, Elon Musk and the right are waging a war on Wikipedia. The world's richest man has joined a growing chorus of right-wing voices attacking Wikipedia as part of an intensifying campaign against free and open access information. Wikipedia is remarkable because it has not only avoided enshittification but has also somewhat reversed it.

It’s not an overstatement to say that Wikipedia is the greatest achievement in information ever. I feel funny writing this, as in my college days, decades ago, Wikipedia was considered an unreliable source that was verboten in academia. Yet now it is basically the backbone of the internet. Google doesn't work without it, for instance, and I am talking about the good old days of Google Search. It's kind of the last place where factual, fact-checked and thoroughly edited truth still exists.

Why is the self-proclaimed free speech absolutist upset at Wikipedia? Well, because he does not like not having control over the narrative of his life and accomplishments. He even attempted to hire a team of people to edit his Wikipedia entry, of course without success.

Elad Nehorai writes: “Unlike Trump, Musk wants to turn the US into a privatized technocracy under his control rather than outright fascism. He actually wants the government to be weaker, not stronger. What that means: capital-driven authoritarianism that actually goes further than classic fascism in scope.”

Imagine a country where even oligarchs don't exist because the richest man in the world is in effect its dictator. This would go beyond the very few checks on power that a classic dictator would have. That's what Musk wants: complete control.

The other day I saw the chart below used to explain what the real purpose of DOGE is, and I think it is very much applicable to information and content across the internet. Outside of Wikipedia, can you name a significant information hub online that is not owned by one of the members of the global transnational crime syndicate masquerading as the government (and business)?

***

Anyway…

AI has already stolen the work of myriad people and is going to put myriad other people out of work. The underlying purpose of AI is to allow wealth to access skill while removing from the skilled the ability to access wealth. They are pushing so ferociously hard for acceptance of AI because its acceptance would mean the fundamental defeat of countless classes of different laborers, in perpetuity.

"What trillion-dollar problem is AI trying to solve?"

Wages.

They're trying to use it to solve having to pay wages.

SwiftOnSecurity recently noted: “Digital sovereigns: Amazon, Google, Apple, and Microsoft each ~independently~ own mostly or entirely the entire product and security stack for their functional business and customer deliverables, from silicon up (depending on specifics).”

People are often surprised when I tell them this: both Twitter and Meta (Facebook/Insta/Threads) are now specifically engineered to downgrade, hide, suppress real journalism, real facts, real truth.

The Age of Nation States is about to be replaced by the Age of Nations Incorporated (preferably not in the state of Delaware).

It’s important to remember that CEOs having contempt for workers isn’t limited to Elon Musk and that the invocation of racialist narratives about workers by the owners of capital isn’t limited to the situation with H-1B visas. H-1B visas can serve positive functions, but they are also used to depress wages and exploit workers. Similar dynamics exist with the hiring of “unskilled” immigrant workers. To justify hiring immigrant workers in both cases, capital owners invoke racialist narratives but wield them in the inverse. Musk’s assertions about domestic workers being lesser than H-1B workers are crude versions of the same justifications used by capital owners to import skilled workers generally. For “unskilled” workers, the narrative is domestic workers are better than immigrants. Racism is leveraged accordingly. Under unregulated capitalism, the owners of capital—which includes CEOs and is why forms of equity are part of CEO compensation—maintain their position of power over labor by keeping workers divided and leverage racism toward that end. Workers are seen as units of labor. Labor is seen as a cost.

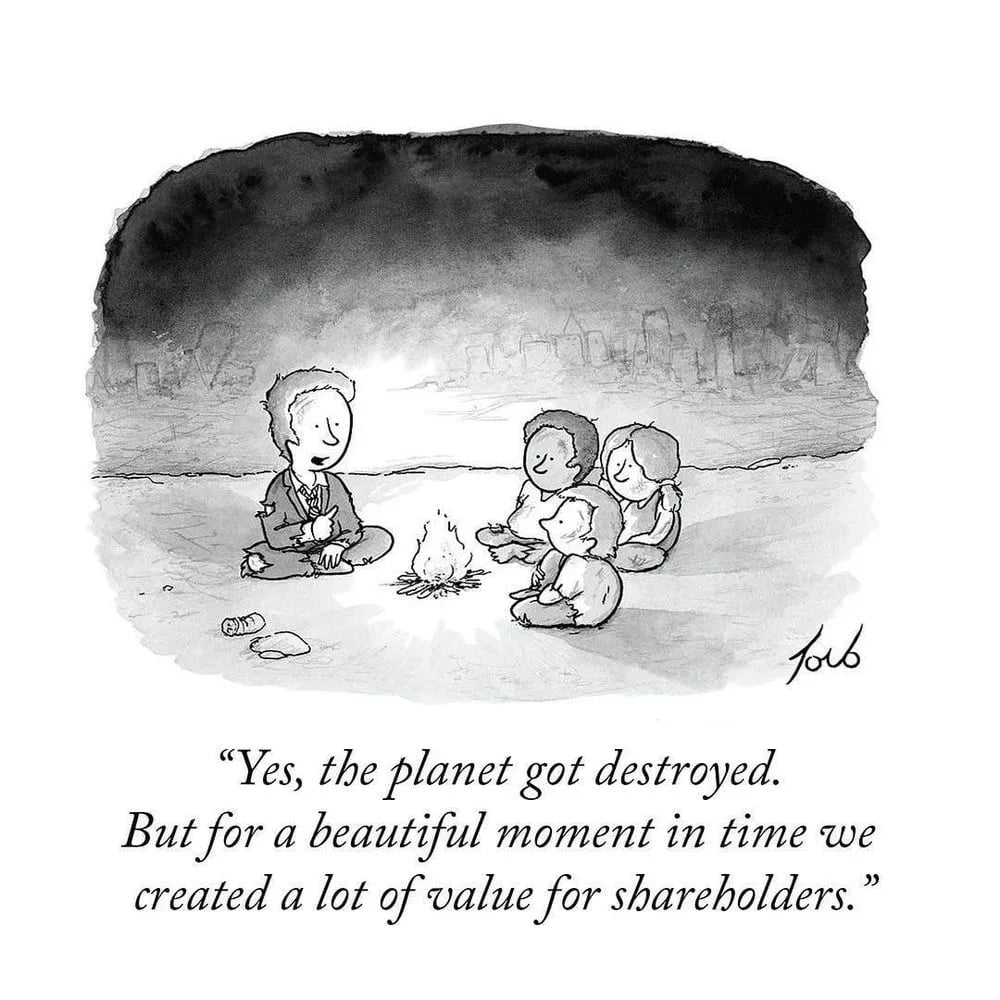

The owners of capital view all costs as obstacles to profit. And profit is their ultimate goal. Not creativity. Not production. Profit. Owners thereby come to view labor—which means all workers, domestic and immigrant—as obstacles to their goal. Accordingly, they often feel accompanying contempt.

Without understanding how capitalism works as an economic system, it can be easy to write off “no war but class war” as an edgy slogan. But the reality is class war and its accompanying power differentials and resentments are built into capitalism, and the system uses racism as one of its tools. People use different terms to describe these inherent sources of conflict, including internal contradictions and systemic risk, and have come up with different ways to try to address them, including communism, socialism, and regulated capitalism. But most people agree the sources of conflict exist.

If you think this is strictly a Trump/Musk incoming fascist junta, you would be wrong.

Kevin M. Kruse is bloody right when he writes that “To be clear, this is the *Biden* White House we’re talking about and Jesus Hula-Hooping Christ this is the worst fucking thing they could do on the way out.” The WaPo report cited in the TruthOut piece.

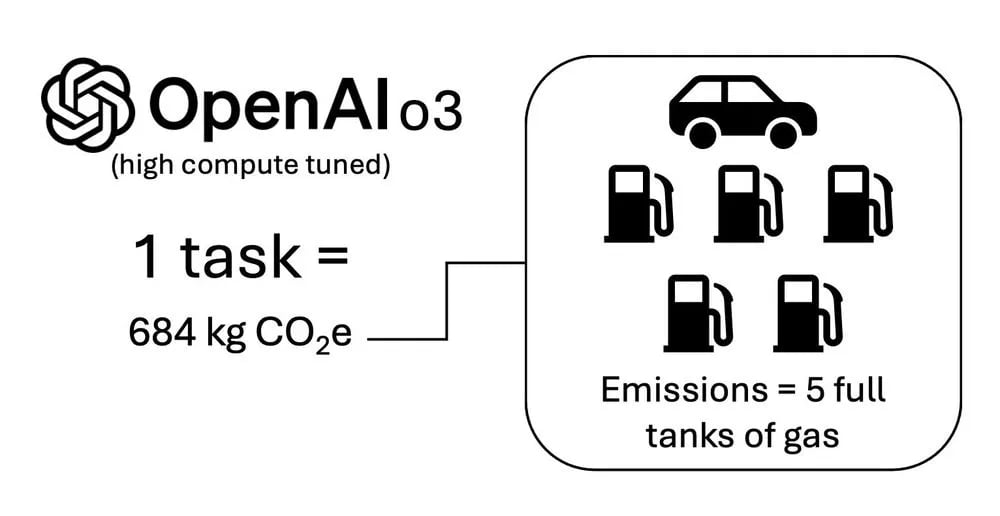

OpenAI doesn't report a single number for its climate impacts - not anywhere, in any way, shape or form. It sounds kind of obvious, but the fact that a company with such incredible demands for electricity and water isn't disclosing basic information about its energy consumption and associated emissions is very bad. It also demonstrates the intense hypocrisy and shallow cynicism of companies like Microsoft that do deals with OpenAI: they flaunt their sustainability credentials at every opportunity, but don't consider it a dealbreaker that OpenAI feels no need to share basic information about its impacts. This is one per-task estimate from Salesforce's head of sustainability: “Each task consumed approximately 1,785 kWh of energy - about the same amount of electricity an average U.S. household uses in two months…”

Here’s another article of many documenting the cost of using ChatGPT.

***

Encyclopedia Britannica could have been Wikipedia. I am literally tearing up typing this as I glance at my copy of the 1954 edition of the encyclopedia.

Instead it's going to become gray goo.

Britannica, which happens to be the parent company of Merriam-Webster Dictionary, hasn’t published the actual encyclopedia in over 15 years.

Excuse me while I run in circles screaming in horror.

Generative "AI" is a threat to the processes of making and certifying knowledge and consensus reality, thus leading us to the verge of an epistemic and heuristic collapse of just catastrophic proportions but y'all keep slathering it on yourselves like it's butter and you're corncobs.

Someone on the internets recently wrote: “Do you think AI can be boycotted out of existence? What benefits are there to not using it? There are benefits to being thoughtful to how you are using it, yes. But rejecting it is like...I don't know...rejecting a life saving vaccine. Accept it or die a slow death.”

This is nonsense.

Here’s how ChatGPT built itself on thousands of stolen books from authors including John Grisham, Jodi Picoult, George RR Martin… No wonder OpenAI admitted that ChatGPT can't exist without pinching copyrighted work.

GenAI is NOT artificial intelligence, it's a theft machine, built on theft, sipping the lifeblood of our planet, vomiting out a simulacrum of creativity and talent that is most striking in its uncanny-valley hollowness. It is the corporate murder of creativity.

It is a plague.

***

Over the last year I’ve toyed with the idea of trying to revolutionize the entire system of education. I know, windmills and all.

Yet, the critical need to uproot and evolve our education has never been more dire.

College students are supposedly caught between professors' AI bans and employers' growing demand for AI skills: research shows 75% of workers now use artificial intelligence on the job, yet many universities still classify its use as cheating.

No, students are not caught between anything.

The fact that AI may be used or useful in future jobs does not create a single dilemma at all for college students in how to actually complete their coursework, acquire knowledge and skills. This is all nonsense and excuses for not doing the reading and learning how to think and write. No one wrote articles, in generations past, about how word processors or PCs or the internet "caught" college students in some angst-inducing dilemma between completing their coursework and the demands of notional future employers. A decade from now "AI skills" will be a commodity, while the scarce resource in the labor market will (once again) be individuals who can think, write, analyze, and communicate, as it has been forever and always. And we'll still be fighting tired, age-old battles defending the liberal arts and humanities.

"Using an AI to generate text" isn't an "AI skill" in demand by employers. Employers know that LLMs are designed to be easily used and that using them isn't a marketable skill. "AI skills" means "the ability to program using AI algorithms" not "the ability to use AI products".

The reason “humanities types” like me believe AI is an existential threat is because it’s jeopardizing our societal ability to think, analyze and write. This is an actual existential threat to democracy and society.

Even the mildest pushback against AI these days seems to generate “you’re Luddites who lack critical thinking” responses from STEM/business folks and of course techbros. There are tradeoffs in everything. We need to talk seriously about them. This isn’t it. I may feel that AI is an existential threat to humanity, but am I a Luddite??

Back when I was a student in college, you used to know that a student cheated because their work was better than it should be. I’ve experienced this multiple times when grading student papers and exams. Nowadays, you know that a student cheated because it’s worse than it should be.

The mistake people who don’t understand humanities make is thinking it’s just about words when it’s about thinking.

Writing is thinking. It’s not a part of the process that can be skipped; it’s the entire point. I suppose this is why so many artists and writers are incensed about AI “writing” — it’s clearly just something tech dullards created to compensate for their own weaknesses and now they’re projecting that on all of us. Look, I’m sorry you suck at writing. But don’t make the rest of us suffer your failures.

Meanwhile, UCLA is creating an entirely AI-generated course. Reading this made me so angry. I read the entire article and still do not understand how AI helped at all; everyone seems to be doing more work. It's the paradox of how AI is sold to us: first, we're told it's an amazing timesaver, freeing us to do the "really important stuff." But when we talk about its inaccuracies and "hallucinations," they say, "well of course it's important to check the output's accuracy, yes."

Which...takes extra time!

Damien Williams in Historical Studies in the Natural Sciences writes: "Scholars are Failing the GPT Review Process." His point is that LLMs and other "AI" have shaped the expectations, norms, and disciplinary practices of contemporary academia. We should approach all LLMs with the assumption that they reflect cis white male bias in every subject until proven otherwise by rigorous, documented and peer-reviewed research.

***

We are sold something that we never asked for, which is quickly destroying what’s left of ecology and yet we are pushed to use more of it in order to continue training it:

They use all of the dirty tricks, like Anthropic saying that Claude AI can match your unique writing style. Please do NOT TEST THIS. It wants you to do that. It is literally challenging you to "please give me text on which to train."

Speaking of dirty tricks: AI cloning of celebrity voices is outpacing the law, Elon made sure that revenge (and AI) porn is still legal, and if you don’t know what to buy your loved ones for Christmas, just ask ChatGPT.

This week I heard two people who really should know better say they asked ChatGPT for factual info. ChatGPT is not a search engine. It is predictive text based on other people’s stolen writing; its whole job is to make stuff up.

Please do not ask it for facts.

Oh and please tell a friend.

***

I digress. Let’s get back to how using LLMs can teach one to become:

a good, rich close reader,

a thoughtful, articulate writer,

a careful, systematic critical thinker.

You learn these things specifically by doing those activities YOURSELF. Using AI means exactly *not* doing them. Let me give an example. As a test I recently popped some Russian text into a translation AI. It was pretty good! It absolutely cannot understand cultural allusions or literary references. But if I didn’t already know Russian and wasn’t already able to translate, I’d have no way to judge any of this.

While some may think it’s a great idea to leverage LLMs to teach med students, future doctors, who should not be wrong or lack knowledge or information, Max Kennerly writes: “For all the puffery about whether "AI" truly "understands," the extra bones / fingers / etc. pics are more evidence that they don't.

A thinking machine with a baseline understanding of reality won't make this mistake. Current "AI" doesn't have that; it has only statistical analyses of training data.”

I am a Luddite, or at least anti-generative-AI, because it literally wouldn’t exist without the massive theft of millions of people’s works and the exploitation of workers to train it, and because its electricity demand is feeding the biggest climate crisis yet.

Did this happen when the first calculators or the first personal computers came out? Of course not.

Saying "AI is just a tool" does not mean you get to absolve yourself of thinking critically about the labor implications, or how LLMs are trained on stolen work, or the water and power needs of the sector. People don’t understand what the mechanical turk is actually doing because the marketing has convinced them it’s intelligent. A large language model does not think or know anything. It frequently makes up confident-sounding answers and citations. If it gives you any accurate facts, that’s a lucky accident.

Within academia, this reminds me of professors who essentially had their TAs teach… without any care for students or their learning and knowledge. At least TAs are human beings with their own varying degrees of higher education, training, and subject matter knowledge. It's very telling that some profs see substituting an LLM as equivalent for their students.

If the AI could get basic facts right and do so without cannibalizing the work of human authors without compensation?

Maybe.

Let me know when ChatGPT or Grok or Gemini can write a book without leeching off the uncompensated work of actual writers.

***

I have a hypothesis - based on vibes, not evidence - that many people are turning to ChatGPT and the like just because Google search is so broken. It used to be that Google would provide a fairly clear answer to a basic question, but it no longer does. ChatGPT answers with confidence even when it’s wrong. Google search has become almost unusable. Small sites have seen their search traffic dive. Spam, AI slop and "parasite" articles are taking up the top spots. Some feel Google's execs are indifferent to their plight: Google Search Changes Are Killing Websites in an Age of AI Spam.

AI is exacerbating existing issues (for example, access to electricity in Arizona). This is only going to get worse, unless we stop pretending that AI has no downsides and also MUST be incorporated into every aspect of our lives. AZ approved an 8% rate hike to fund electric power for data centers but “rejected a plan to bring electricity to parts of the Navajo Nation land, concluding that electric consumers should not be asked to foot the nearly $4 million bill.”

***

For years, perhaps decades, I’ve fantasized about the future digital age, being digitized, existing in the Metaverse (the original Neal Stephenson version from “Snow Crash,” not Zuckerberg’s atrocity). The impending AI takeover made me reassess that idea.

Scientists find 57,000 cells and 150m neural connections in tiny sample of human brain.

So the entire brain would take ~ 1.6 zettabytes of storage, cost $50 billion, and require 140 acres of land.

It would be the largest data center on the planet.

Storing one human brain in perpetuity.

I am sure that Musk, Altman, Bezos, Thiel, et al. are already lining up to scan their pea brains and become immortal.

***

All of this amid the backdrop of more than a dozen members of Trump's cabinet being billionaires.

A reminder that this is what happened to income inequality in this country once the tax rate for top earners was slashed. Trickle down does not work and top earners' priority is themselves.

According to Barron’s, Elon Musk’s wealth increased by almost $200 billion between November 5th and December 20th of last year. That means he could give $250,000 to every homeless person in the US and still be as rich as he was on November 5th.
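For anyone who wants to check the math, here is a quick back-of-the-envelope sketch in Python. It uses only the two figures quoted above; the variable names are mine, and the head count it prints is simply what the claim implies, not an official statistic.

```python
# Figures as stated in the paragraph above (not independently verified).
wealth_gain_usd = 200_000_000_000   # "almost $200 billion"
payout_per_person_usd = 250_000     # the hypothetical payout per person

# How many people could receive the payout before the gain is used up?
implied_recipients = wealth_gain_usd // payout_per_person_usd

print(f"{implied_recipients:,} people")
```

In other words, the claim holds as long as the number of homeless people in the US is no more than the figure this prints.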

Meanwhile, according to ProPublica, Elon Musk's tax records for 2014-2018 show that he repeatedly paid relatively little (or nothing) in federal income tax:

2015: $68,000 in federal income tax paid

2017: $65,000

2018: $0

The 25 wealthiest Americans “saw their worth rise a collective $401 billion from 2014 to 2018. They paid a total of $13.6 billion in federal income taxes… it amounts to a true tax rate of only 3.4%.”

The median family paid a far, far higher rate on their incomes.
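The "true tax rate" in that quote is just taxes paid divided by wealth gained. A minimal sketch, assuming only the two totals quoted above (the variable names are mine):

```python
# Totals for the 25 wealthiest Americans, 2014 to 2018, as quoted above.
wealth_gain_bn = 401.0           # collective rise in worth, in billions of dollars
federal_income_tax_bn = 13.6     # federal income taxes paid, in billions of dollars

# "True tax rate" here means taxes paid as a share of wealth growth.
true_tax_rate = federal_income_tax_bn / wealth_gain_bn

print(f"{true_tax_rate:.1%}")
```

Note that this compares taxes to wealth growth rather than to taxable income, which is exactly why the number comes out so low.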

US’s inequalities in stark detail:

Wealth of Elon Musk

2012: $2,000,000,000

2024: $273,000,000,000

Wealth of Jeff Bezos

2012: $18,400,000,000

2024: $207,100,000,000

Wealth of Mark Zuckerberg

2012: $17,500,000,000

2024: $200,000,000,000

Federal Minimum Wage

2012: $7.25

2024: $7.25

I’ll leave you with seven words: tax the rich and don’t use AI.