Minor acts of AI resistance

A year on from first writing about AI, some thoughts – and quite a lot of feelings – on the evolving discourse around inflated AI expectations.

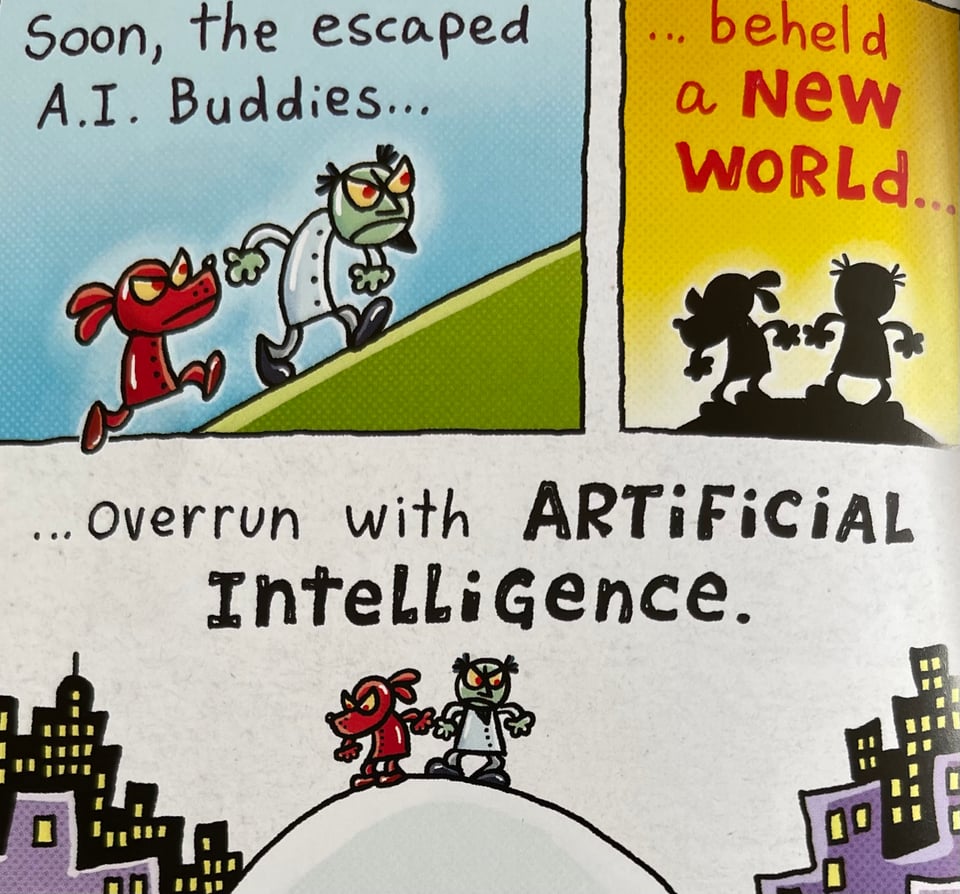

I suppose you could call this post a minor act of resistance.

It’s been a year since I first wrote about AI, and since then I’ve been on a curious little journey (haven’t we all?).

When I mused on FOMO, futurists and funding last August, I didn’t necessarily have AI solely in my sights.

Since then I’ve ended up covering AI in various guises: where organisations invest their energy and resources; how it collides with the creative process; its impact on art.

AI’s rise from sideshow – largely the domain of insiders and enthusiasts – to headliner means it now impinges on almost every conversation involving technology, and increasingly occupies a mainstream position.

More and more I hear AI references slipping into general chit-chat, jokes, and off-the-cuff comments.

As my own knowledge and understanding of the technology have grown, my perspective has definitely evolved.

I made my initial hesitation clear, but I’ve also opened my eyes to where and how applications of AI do go some way towards living up to the fanfare.

It’s still a big old mess though.

Tangible success stories, as opposed to breathless thought pieces about how AI will transform every aspect of our lives, are still a rarity.

Benedict Evans captured this in a post earlier in the year entitled Looking for AI use-cases, in which he makes plain the disconnect between the promise and the reality.

As I’ve stated before, my worry about the overall AI discourse is that the level of noise (and the eye-watering sums of money that fund the noise) creates a sense of the inevitable: these tools are here to stay.

These climate-wrecking, biased, unproven, and flaky fucking tools are around for the long haul.

They’ll be used to influence public policy, shape all manner of solutions, and suck up trillions of dollars of investment to a degree that far outstrips their worth.

Within my own bubble it’s been heartening to see a groundswell of people challenging the ‘inevitability’ version of events – questioning the intent, querying the ethics, probing for use cases that go beyond a litany of goddam AI personal assistants.

Outside my bubble, not so much. Try tuning into the odious All-In podcast to get a sense of the warped Silicon Valley self-belief that fuels the AI machine.

Cracks in the AI Mirror

Speaking of inevitability, it was pretty unavoidable that I’d find a lot to nod along to in Shannon Vallor’s latest book, The AI Mirror.

My appetite had been whetted when I’d seen her at a couple of conference appearances, and I was genuinely excited when Ezra Klein trailed the book on his podcast earlier this year.

In contrast to some academic works, I found The AI Mirror relatable and accessible, and it delivers a real emotional kick.

It’s also funny; the repetition of the word “bullshit” is both comical and highly effective at shining a light on the isn’t-AI-awesome rhetoric (although listening to the audiobook out loud in the house meant my 8-year-old demanded a lot of money for the swear jar).

The central ‘mirror’ metaphor – the premise that generative AI is effectively reflecting back all the material we’ve previously fed into the system (the web, social media, online forums) and then regurgitating it to present seemingly new content and thinking – is comprehensively explored.

I was lucky enough to catch Shannon in discussion with science fiction novelist Anton Hur at the Edinburgh International Book Festival last week.

Expertly chaired by Suzy Glass, the format of pairing fiction with non-fiction gave both authors the space to explore common AI tropes and go deep into some of the quandaries AI presents us with.

Leaning into the notion that many of the great hopes and expectations of AI are based entirely on historic data, and that might not quite cut it when we’re searching for tomorrow’s solutions, the conversation kicked off with the salient point that “it’s a strange kind of innovation when the past eats the future”.

Over to you, Sam Altman.

Pushing back to move forward

Before signing off with some topics that particularly resonated when I was reading The AI Mirror, let’s return to the subject of resistance.

In the closing comments of last week’s panel there was debate with the audience on what it meant to resist the AI juggernaut, and the power of rejecting “adapt or die” standpoints.

As someone responsible for implementing technology within an organisation, and with influence on wider public sector adoption, I think it’s imperative to approach AI with caution.

It’s OK to call bullshit, it’s OK to question prevailing narratives, it’s OK to push for transparency and insist on solid use cases. Start with needs, not tech, in case you need reminding.

This really shouldn’t be about resistance, but it’s striking just how uncomfortable it can feel to speak out against supposedly foregone conclusions.

So I’ll endeavour to keep chipping away with the posts I write and the articles I cite: highlighting any good examples that pass my way, being careful not to get swept up in the hype, and trying not to make snarkiness my default position.

Reflections, love, perseverance

I’ve picked three quotes from The AI Mirror from sections that I particularly enjoyed. There are many more. If any of this whets your appetite I’d urge you to get your hands on a copy and delve deeper.

Mirrors don’t tell the whole truth. I love this paragraph about actual mirrors as it perfectly segues into all the things AI doesn’t reflect: the data that’s never been collected, the biases inherent in systems, the information that presents what people want to be seen and how they want to be perceived.

“What a mirror shows us depends upon what its surface can receive and reflect. A glass mirror reflects to us only those aspects of the world that can be revealed by visible light, and only those exterior aspects of ourselves upon which light can fall. The slight asymmetry of my smile, the hunch in my posture from decades of late-night writing, the front tooth I can’t remember chipping, the age spots from a half-century spent in the California sun – the mirror can show me all these things. But my lifelong fear of drowning at sea, my oddly juxtaposed passion for snorkelling, my ambition to learn one day to read Chinese, my emotionally complicated memories of my childhood – none of these are things the glass mirror can reflect.”

Love in the time of AI. I thought the section on love, and on AI tools that offer partnership or companionship, was powerful. If an AI can’t understand the friction that goes along with any human relationship, and instead pitches up with platitudes and a perfect-world view of love and relationships, that’s kinda problematic, right?

“…the harmful illusions about love being projected by our newest AI tools mirror those we are already primed to believe: the illusion of love as a reward. Contrary to the distorted hopes and expectations that many of us were raised to expect, love is not always easy, restoring, satisfying, or pleasing.”

Unpalatable perseverance. I enjoyed the framing of perseverance as a tactic employed by techno-determinists that allows them to act with impunity.

Probably best exemplified by Zuckerberg’s now-abandoned ‘move fast and break things’ mantra, there’s still a mindset that believes tweaking the algorithm as you go, rather than working through the ethics and implications upfront, is the noble way of doing things.

“Where does that virtue leave us today? It leaves us in a world where those in positions of power and comfort are driven to press on with calamitous endeavours no matter what the cost – whether it be a futile war in Ukraine, a Tesla autopilot feature that years later still can’t avoid a parked fire truck, or a social network that degrades the pillars of democracy further every day.”

🤖 Thank you for reading.
