June 2025 Update: You Can Just Do Things

Colin and Harrison show how non-technical people leverage AI to execute complex projects with user impact.

Neither Colin nor Harrison has a technical background, yet thanks to advancements in AI, both have been shipping complex technical projects that touch thousands of users.

This story is not theoretical. It’s about how two non-technical investors rolled up their sleeves to drive tangible value for their users.

From early AI explorations to spearheading complex technical projects, Colin and Harrison demonstrate how these new tools are reshaping the trajectory of Third South Capital:

Myles: Could you start by telling me about your technical backgrounds?

Colin: I’ve generally been a fairly tech-forward person, but I wouldn't say I have engineering or software talent. I did dabble with a potential minor in computer science at William & Mary and completed a number of courses, but I graduated early instead of finishing the minor. So, I have an elementary working knowledge of coding.

Harrison: I am thoroughly non-technical. I have a strong understanding of Microsoft Excel. I grew up in a tech-forward environment; my high school summer job was in tech, so I gained early exposure to mid-2000s concepts. The most advanced coding I did was an introductory Stanford online C++ class in high school. I vaguely know some HTML; I built websites in Dreamweaver in ninth grade. I'm aware of how systems connect at a high level, but I don't have any true engineering or technical knowledge.

"I am thoroughly non-technical... I don't have any true engineering or technical knowledge."

Myles: What were your first steps into using AI?

Harrison: Among the early adopters, I was a late adopter. When ChatGPT first came out, I wasn't a big user. I started using Claude more after the Wall Street Journal wrote about it being favored by those who appreciate values-driven and safety-driven AI. I quickly realized its potential as a teacher. It certainly has boundaries, but for basic knowledge and getting up to speed on conceptual topics like SQL, how APIs work, or data storage, things far beyond what I could have done on my own, AI coaching has been invaluable. It has allowed me to advance months of work. I sometimes wonder if I have gaps in fundamental knowledge, but I'm able to accomplish the tasks.

Myles: Colin, how do you view your relationship with AI? Is it a coach, something else?

Colin: I’d say it’s somewhere between a coach and an expert. Initially, I was a non-believer when GPT and similar models started emerging; my first reaction was that it was “glorified Google,” just summarizing the top few search hits. I have completely pivoted, and now am an AI-zealot. I think the AI wave is incredible, especially the new reasoning models like GPT o3. It's unbelievable how thorough it can be, whether for product reviews, code assistance, providing frameworks for thinking, or iterating on time-consuming (although not necessarily complex) workflows. There are immediate savings there for pretty much any user, technical or not.

“Initially, I was a non-believer... I have completely pivoted, and now am an AI-zealot. I think the AI wave is incredible.”

Myles: Harrison, what other ways have you deployed AI?

Harrison: It's helpful for achieving a reasonably advanced level of knowledge on a subject where the user has no base knowledge. I often ask it to use analogies and share information about my strengths so it can communicate more effectively with me. Broadly, there’s project-level work, like advancing towards building a dashboard or creating data connectivity. There's also brainstorming, which I think it's good at with the right instructions, especially for free-forming creative ideas if you can guide it beyond recycling the first few answers. And, as Colin alluded to, there is value in it being a "better Google."

Myles: Let's talk about your AI "weapons of choice." Harrison, you mentioned a preference for Claude. Have you experimented with others?

Harrison: I've not really experimented with OpenAI's or Google's platforms. Not for lack of wanting to, but because I feel comfortable working in Claude. I feel I understand its strengths and weaknesses well. I think Claude 3.7 was a step change in performance. I've been a little less impressed with 4.0; I think there have been some regressions. It might be this newer version is trying to cater to users who are not me. My interaction is almost exclusively with Claude in a browser tab. It would be interesting to know how Anthropic gears these different tools to different user types. I feel comfortable understanding what should go into project instructions, what constitutes project knowledge, and how to memorialize and build upon a chat when it has run out of energy.

In the early days, there was a lot of conversation around prompt engineering. Honestly, I never really understood what it meant. I trial-and-errored my way into what I think works well: understanding where and why models make mistakes or get overconfident, and how to capture relevant points for future foundational work.

“I trial-and-errored my way into what I think works well: understanding where and why models make mistakes or get overconfident.”

Myles: You mentioned feeling like the newer version, Claude 4.0, isn't geared towards you. Could you elaborate?

Harrison: I've been a bit frustrated with it. It seems more overconfident, more assertive, and more willing to agree with the user. More importantly, it is thinking less unboundedly and more formulaically. These are generally net negatives for how I use Claude. I'm not looking for it to tell me my plan is great. I use it for pressure testing, finding flaws in my logic, building thorough step-by-step plans, and thinking outside the box. I learned to use Claude 3.7 effectively. This newer version doesn't feel the same. It's frustrating when I can now see it skipping steps or not considering logical alternatives on a technical subject matter I've learned about.

Myles: Colin, you’ve mentioned trying different tools. What differences have you noticed between them, and how do you choose which tool to use for a specific task?

Colin: I started on ChatGPT and have stuck with it as my go-to. I used Perplexity for a bit because I felt it gave more real-time answers, but real-time is no longer unique, so my Perplexity use has gone to zero. I've also used Claude. On the IDE [integrated development environment] side, I use Cursor and plan to try Windsurf and Codex, which just came out.

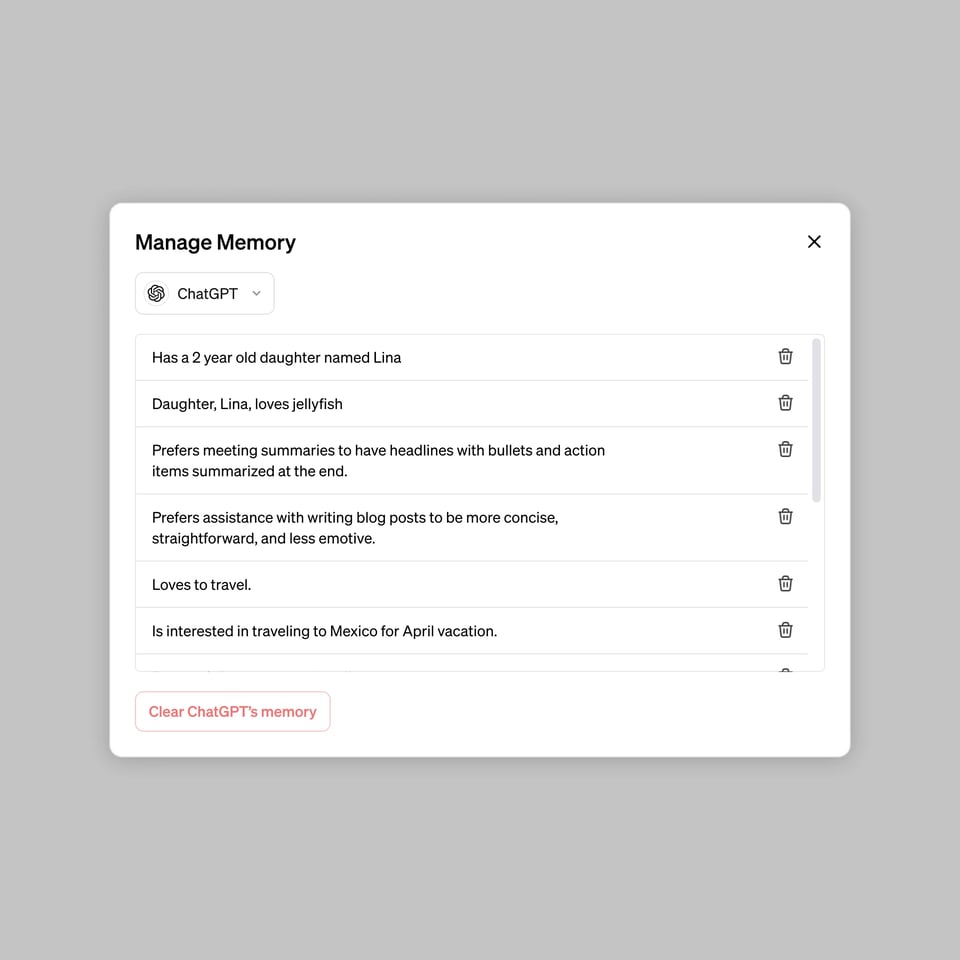

Two things have kept me using GPT more heavily than Anthropic's products. One is the sheer volume of prompts I have on GPT. There's a lot of good content there, and I like being able to search across it and have it learn my preferences. I’m sure Claude does the same, but I've had more iterations on GPT, which is, of course, how they intend to retain users.

The other was a specific example that instilled confidence: I was looking for publicly traded comparables for a small-cap digital health-tech IPO. I asked ChatGPT-4o, ChatGPT-o3 (the advanced reasoning model), and what was, at the time, an earlier version of Claude. 4o and Claude both returned irrelevant companies like UnitedHealthcare and CVS. Healthcare-adjacent, yes, but not relevant to a small-cap healthcare-tech business. The GPT o3 model thought for about two minutes and returned the exact list I had already come up with, plus one extra name. Seeing the process of advanced reasoning was an unlock for me. Anecdotally, I’ve also had a couple of technical issues where I felt Claude’s advice was confident but not fully correct, and GPT got me to the right spot.

“The GPT model... thought for about two minutes and returned the exact list I had... seeing the process of advanced reasoning was an unlock for me.”

Myles: Is there anything you feel is “off-limits” for AI, tasks you'd rather do yourself or don't think AI should handle?

Colin: From a perspective of what I don't want AI to cover, rather than technical limitations, it would be frameworks for thinking about bigger-picture life topics. So many people are playing different games, and knowing your game and how to frame your thinking about it can only be done through self-exploration, reading, and reflection. I've found LLMs most helpful for specific problems, like a surgeon with a scalpel. I don't foresee prompting an AI about the meaning of life or how to find happiness and gaining divine insight.

Harrison: I'll push back a tiny bit. I do think Claude 3.7 is helpful, within limits. For instance, setting sustainable nutrition and fitness plans or thinking about balancing life goals outside the office. I once gave Claude information about a person and asked for strengths, weaknesses, and next steps, and the results were quite nuanced and interesting.

However, many people talk about LLMs being great for standard things like contracts and legal documents. As someone with high-level experience with M&A agreements, I'd be happy to let an LLM do basic proofing. I find it interesting to ask Claude if an agreement is "market", which is a nuanced question. But I would draw the line at executing an agreement without an experienced attorney reviewing it. I might cut out drafting and redlining time and gain a better understanding of new terms or negotiation points, but I wouldn't give an LLM the final say on complex topics or critical documents like an asset purchase agreement. Pre-work, yes; final sign-off, no.

“I wouldn't give an LLM the final say on complex topics or critical documents... Pre-work, yes; final sign-off, no.”

Myles: Let's talk about the projects you've been working on with AI. Colin, can you give an overview of your project and how AI was involved?

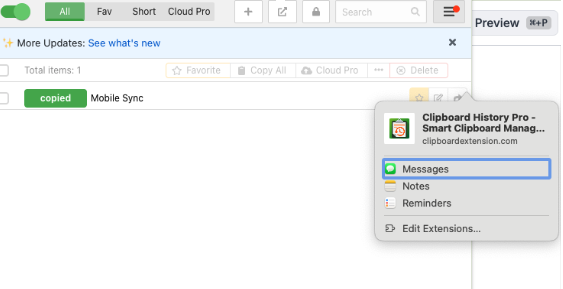

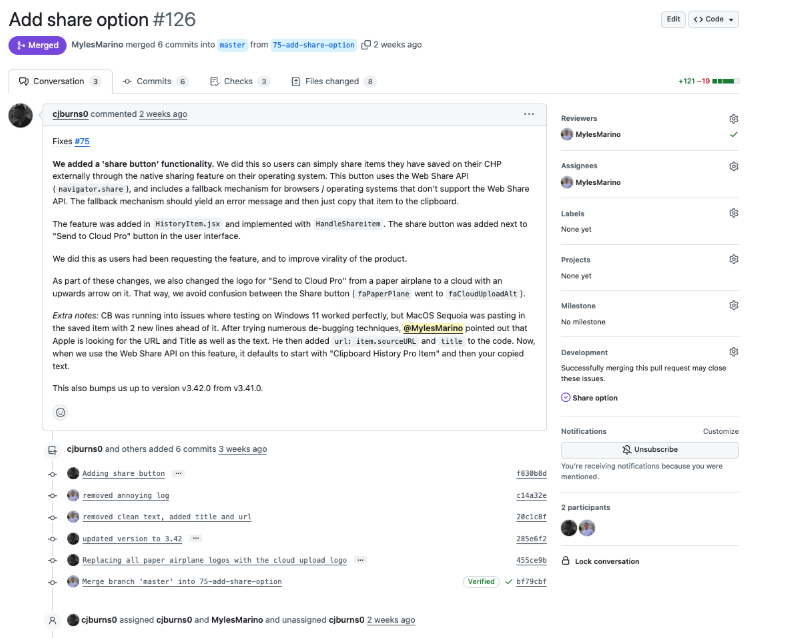

Colin: I was excited about using LLMs as a coach or assistant to empower myself, a non-software engineer, to deploy code changes. The project I worked on was adding a share feature into Clipboard History Pro. This allows users to click a button to share a clipboard item via the native operating system share window (e.g., on iOS, a bubble which prompts messages, email, or Slack). It wasn't a groundbreaking feature, but it was exciting because it was the first time I had actually pushed code updates for Third South.

Myles: Harrison, what have you been accomplishing with AI's help?

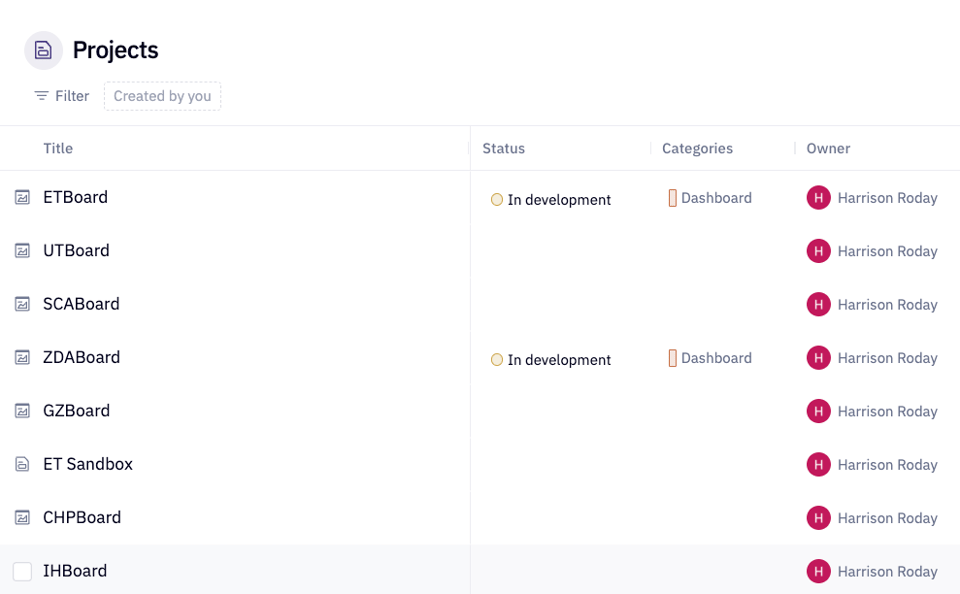

Harrison: I've used Claude as a technical lead on several projects. The main one has been a reasonably big data lake project to unify financial and operational reporting across our seven subsidiaries. We've been pulling data from financial backends like Stripe, PayPal, and Paddle, operational data from SQL databases, and data from Amazon S3. We've used an ETL platform to consolidate data into a new database and are now using a visualizer to build unified KPIs.

This project had more depth than I expected. Claude coached me on every aspect: understanding how to use APIs, evaluating ETL providers, designing the destination database, and selecting visualization software. It's like four projects in one, dealing with different data sources and their unique behaviors. This has been a major project for me over the last eight weeks, spanning pulling data via API, building a destination database, the middleware connecting them, and the software to query said database.
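For readers curious what "unifying" data from several billing backends can look like in practice, here is a minimal Python sketch. It is not the pipeline Harrison built. The record shapes, the field names, and the `UnifiedPayment` type are hypothetical stand-ins, not the real Stripe, PayPal, or Paddle payloads. The idea is only that each source gets a small adapter that maps its records onto one shared row format before anything is loaded into the destination database.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from decimal import Decimal


@dataclass(frozen=True)
class UnifiedPayment:
    """One row in the (hypothetical) destination table."""
    source: str
    subsidiary: str
    customer_id: str
    amount: Decimal      # major currency units, e.g. dollars
    currency: str        # upper-case currency code
    paid_at: datetime    # always stored in UTC


def from_source_a(rec: dict, subsidiary: str) -> UnifiedPayment:
    # Hypothetical source A: integer minor units and a Unix timestamp.
    return UnifiedPayment(
        source="source_a",
        subsidiary=subsidiary,
        customer_id=rec["customer"],
        amount=Decimal(rec["amount_minor"]) / 100,
        currency=rec["currency"].upper(),
        paid_at=datetime.fromtimestamp(rec["created"], tz=timezone.utc),
    )


def from_source_b(rec: dict, subsidiary: str) -> UnifiedPayment:
    # Hypothetical source B: decimal strings and ISO 8601 timestamps.
    return UnifiedPayment(
        source="source_b",
        subsidiary=subsidiary,
        customer_id=rec["payer_id"],
        amount=Decimal(rec["gross"]),
        currency=rec["currency_code"].upper(),
        paid_at=datetime.fromisoformat(rec["time"]).astimezone(timezone.utc),
    )


# Made-up sample records, one per source.
rows = [
    from_source_a(
        {"customer": "c_1", "amount_minor": 1999, "currency": "usd", "created": 1717200000},
        "app_one",
    ),
    from_source_b(
        {"payer_id": "p_9", "gross": "5.00", "currency_code": "USD", "time": "2025-06-01T12:00:00+00:00"},
        "app_two",
    ),
]

# Once every row has the same shape, a cross-subsidiary KPI is a one-liner.
total = sum(r.amount for r in rows)
```

Keeping the source-specific quirks inside the adapters means the dashboard queries only ever see one schema, which is roughly what an ETL platform does at larger scale.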

Myles: Harrison, how would you have approached this data lake project without AI at your disposal?

Harrison: I'm pretty sure I would have been unable to do the project in any relevant period. I don't know SQL. If you woke me up and asked the difference between a GET and a POST request, I couldn't tell you. I don't know what JSON is, but I use it. I don't know the difference between query parameters and headers. I know what OAuth is but can't explain the nuances of different API authentication methods. I've never used Amazon Web Services, so I didn't know about RDS or S3. Then there is learning to troubleshoot issues, like a database timeout because I forgot to add my IP address to a security group while traveling. These things would have taken forever to learn.

Furthermore, many services now have embedded AI features, so there's a meta-skill in knowing how to interact with these AIs effectively. For example, Hex, our chosen visualization software, has AI queries. I don't know Python, but I can ask the Hex AI to write it. I've learned to troubleshoot when its output isn't quite right and when to use Claude (with its project knowledge) versus the embedded AI. This project would have taken me at least a year to do myself, if I could have done it at all. I would have had to spend months learning SQL and JSON.

“This project would have taken me at least a year to do myself, if I could have done it at all.”
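For readers who share Harrison's questions about GET versus POST, query parameters versus headers, and JSON, the following Python sketch may help. It builds two requests with the standard library and inspects them without sending anything over the network. The URL, the API key, and the field names are placeholders invented for illustration, not a real service.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request

BASE_URL = "https://api.example.com/v1/invoices"  # placeholder, not a real service
API_KEY = "test-key"                               # placeholder credential

# GET: read data. Filters travel in the URL as query parameters;
# credentials travel in a header, not in the URL.
query = urlencode({"status": "paid", "limit": 10})
get_req = Request(
    f"{BASE_URL}?{query}",
    headers={"Authorization": f"Bearer {API_KEY}"},
    method="GET",
)

# POST: create or change data. The payload travels in the request body,
# here encoded as JSON (structured text made of keys and values).
payload = {"customer_id": "c_1", "amount": 1999}
post_req = Request(
    BASE_URL,
    data=json.dumps(payload).encode("utf-8"),
    headers={
        "Authorization": f"Bearer {API_KEY}",
        "Content-Type": "application/json",
    },
    method="POST",
)

# Nothing is sent; we only look at what each request would contain.
print(get_req.get_method(), get_req.full_url)
print(post_req.get_method(), json.loads(post_req.data))
```

In short: query parameters say what you want, headers carry information about the request itself (such as who is asking), and the body of a POST carries the data being submitted.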

Myles: Colin, you've pushed a feature live to over 150,000 users. How has your conceptualization of your role at Third South evolved?

Colin: It's game-changing. Pushing the share feature followed the 80/20 rule: 80% of the time was spent fixing a small bug, while actually adding the feature was quick once I was set up. Bug-fixing would have taken me hours of Googling on Stack Overflow or bothering our technical team.

My role will never be to spar with our lead developer, Justin, on technical intricacies. But AI makes me much more useful and able to fill in different roles. This means doing basic feature additions and helping with debugging tasks.

Even beyond coding, the understanding I can gain about our businesses, how they work, and their tech stacks is immense. Before, looking at a tech stack during due diligence meant little to me. Now, seeing familiar vendors like Heroku on a list for a business we're evaluating makes a difference in assessing the work involved or potential opportunities. It allows me to be a better teammate overall. Honestly, I find it exciting. I was having fun working on these technical tasks late at night.

“AI makes me much more useful and able to fill in different roles... It allows me to be a better teammate overall.”

Myles: Colin, how has this changed your collaboration across the team and your workflow with others, like me or Justin?

Colin: I think it has removed the barrier where Justin did technical stuff, Harrison and I did non-technical stuff, and you, Myles, could do some of everything. Now, I can often go to you or Justin and say, "I'm having this issue, I think it's X, or I think the way to fix it is Y," or "I've done Z, am I accidentally playing with the nuclear codes?" Before, the barrier existed, not imposed by us, but due to a knowledge gap. Secondly, I can now pull some weight on development-related work, and I'm excited to do more.

Myles: Harrison, how do you feel about taking on more technical work, like the data lake project, within Third South? Does it excite you?

Harrison: I have a lot more confidence in my ability to take on discrete, low-barrier-to-entry technical projects, which helps us all be more effective. A lot of my relevant experience for Third South is with buying and growing businesses, capital markets, legal agreements, investing, KPIs, and management: the skills needed to run a diverse portfolio and do acquisitions. I can also build fundamental knowledge of our underlying businesses and add value there.

I was initially skeptical the data lake project would provide organizational value, but this view was completely wrong. We've already found bottom-line dollar impacts, like identifying customers on improper tiers or not being billed correctly. There will be other performance improvements too.
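To make the "improper tiers or not being billed correctly" finding concrete, here is a small Python sketch of the kind of check that becomes possible once operational and billing data sit side by side. The tier names, seat limits, and `Account` fields are hypothetical; the source does not describe how TSC's plans or checks are actually defined.

```python
from dataclasses import dataclass

# Hypothetical plan limits: maximum seats each tier is meant to cover.
TIER_SEAT_LIMITS = {"basic": 5, "team": 25, "enterprise": None}  # None = unlimited


@dataclass(frozen=True)
class Account:
    customer_id: str
    tier: str
    active_seats: int        # would come from the operational database
    billed_last_month: bool  # would come from the payment processor


def find_billing_issues(accounts: list[Account]) -> list[tuple[str, str]]:
    """Return (customer_id, issue) pairs for accounts that need a human look."""
    issues = []
    for acct in accounts:
        limit = TIER_SEAT_LIMITS[acct.tier]
        if limit is not None and acct.active_seats > limit:
            issues.append((acct.customer_id, "usage exceeds tier limit"))
        if acct.active_seats > 0 and not acct.billed_last_month:
            issues.append((acct.customer_id, "active but not billed"))
    return issues


# Made-up sample data.
accounts = [
    Account("c_1", "basic", 3, True),
    Account("c_2", "basic", 12, True),
    Account("c_3", "team", 8, False),
]
issues = find_billing_issues(accounts)
```

Neither check is sophisticated. The hard part is getting usage data and billing data into the same place, which is the job the data lake does.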

Am I going to spend a lot of time pushing features in the future? Probably not. We have a lot of energy with Justin, Myles, and Colin, and our company has diverse needs for time allocation. We're not all going to use LLMs to become the same person, which would be a reduction in value. I feel more confident in taking on specific projects. I suspect the data lake project is about 80-90% through. Once it's over the line, I’ll look for another discrete technical task I can handle and offload from the team. If we're acquiring a business or I am dealing with finance, infrastructure, legal, or HoldCo needs, I'll likely spend zero time on technical tasks.

“We're not all going to use LLMs to become the same person, which would be a reduction in value.”

Myles: Taking a broader view, Harrison, what else do you think this AI capability has unlocked for TSC? How has it changed the trajectory or concept of TSC since its founding in mid-2022?

Harrison: My views are developing. Coming from an industrial manufacturing background, I think about physical goods. The value in a fastener isn't the metal; it's the manufacturer-to-client relationship and the overall value proposition. I previously didn't appreciate how software could be similar. I thought the asset was purely the codebase.

What's game-changing for TSC is demonstrated by a project Justin is spearheading: swapping out the tech stack of a legacy product using a single-digit number of hours per week, plus Claude Code. This project would likely have been a six-figure outsourced engineering expense over many months, if not a year or more. Justin will probably have a beta in a month.

This shows TSC can think far more holistically about the value of software, which comes down to product-market fit, the competitive situation, customer value, brand strength, switching costs, and innovation. Many think that as the cost of developing software goes to zero, it will be easier to build low-cost alternatives. But the corollary is that existing businesses with strong customer relationships and good track records, even if using older tech, now have a near-zero cost to become leading-edge on the tech side. Their businesses have fundamentally become more valuable.

Our future isn't necessarily about breaking ground with brand-new products in whitespace. It's about building on great customer relationships, product-market fit, and existing value propositions, and being able to serve those customers with the latest technology radically more quickly and at a much lower cost.

“Existing businesses with strong customer relationships... now have a near-zero cost to become leading-edge on the tech side. Their businesses have fundamentally become more valuable.”

Myles: Colin, what are your thoughts on how AI has changed the trajectory and concept of TSC?

Colin: I don't disagree with a single thing Harrison said. One question I've been thinking about is: if the cost of development heads towards zero, will the ‘good’ software companies just be the best sales organizations? Meaning, is the value of ‘product’ going to be lost? I don’t think I agree with this take (commonly seen on X/Reddit). While sales are important, what will be crucial (and this excites me for TSC) is finding the best niches or areas to focus on, understanding the key need, developing the right features, and charging appropriately.

It's an oversimplification to say it's free to make software, so the best salesperson wins. I believe it's now significantly easier, cheaper, and faster to make, change, and add features. The entity who figures out how to string those capabilities together and build it on a platform, ecosystem, or community will succeed.

Regarding my role, what fires me up is these tools enable so much more self-sufficiency. The old adage for interns/new employees was to spend 5-10 minutes looking into something yourself before asking for help. I didn’t always feel like I could take this advice and self-serve on complex tech issues 2-3 years ago. This is now so much more possible. I feel I can do much better before bringing it to the team.

“It's now significantly easier, cheaper, and faster to make, change, and add features. The entity who figures out how to string those capabilities together will succeed.”

Myles: For readers interested in AI but haven't taken the leap, what advice would you give them, Colin?

Colin: My first urge is to tell them, "You can just do things!"

It's a bit like going for a run. The hardest part is carving out the time, putting on your shoes, and getting started. I felt similarly. I'd put learning to deploy code changes on a pedestal, thinking about my lack of understanding of GitHub repositories or worrying about breaking things. Downloading the tools, reading basic documentation, and actually starting was empowering. Start small, like we did with minor changes, and confidence will ramp up once you break the initial inertia.

“'You can just do things!'... The hardest part is getting started.”

Myles: Harrison, same question to you. What would you tell someone interested in AI but unsure where to start?

Harrison: I agree with Colin. Pick a project and try it; there's nothing better than engaging with it. Pick something where you have a sense of the scope but are unfamiliar with the necessary tools. For example, if you're non-technical and interested in running a marathon, have AI build your sixteen-week training plan and tracker. See what it can do on a subject you know something about so you can check its work.

Pick an area where you have some base knowledge to sense if you're moving in the right direction, but make the tasks things you don't actually know how to do. If I asked Claude to build a live tracker for a marathon training plan and it suggested a sixteen-mile run in the first week, I'd know from experience it made no sense. And then it could teach me how to build my tracker. You should have enough base knowledge to understand if what you're doing works, but make the subtasks things you don't know anything about, so you can see how powerful these tools are at teaching you new skills.

Myles: Any parting words?

Harrison: Anyone interested in using these tools should get to work, particularly if you're a skeptic or have concerns. I have huge concerns about bias, how models are trained, and whether they understand everyone's needs. If you don't engage with the models, I don't think you can be a good advocate for those the models don't serve well. You have to understand how they work to critique, build, show opportunities, or highlight blind spots.

Colin: My parting words are a bit less big picture than Harrison's. One barrier many might find, as I did, is not making code changes with LLMs, but managing repositories, GitHub best practices, and actually deploying the code to see it live. Okay, I wrote the code. How can I actually see it? Figuring out how to take the code from generation to reality is a key next step.

You just read issue #2 of Third South Capital.
