Today no Datalog nor interop: let's talk data structures!

In ClojureDart we took pride in writing our own persistent collections. A design goal for our collections was to make them have a canonical layout so as to be able to leverage canonical layout and structural sharing to optimize boolean operations on them (diff, merge, mass dissoc etc.). It's yet untapped potential.

(And it's tangentially related with my ongoing obsession with "Datalog As The Engine Of Application State" 🤔.)

While ClojureDart hash maps are still HAMTs and their differences with Clojure ones are marginal, ClojureDart sorted maps/sets belong to a rather novel family of search trees because the usual suspects (red-black trees like in Clojure or b-trees like in Datascript) are heavily history dependent and thus not amenable to having a canonical representation.

It turns out that this family of search trees is simple (no rotations or balancing) and efficient (wide trees are supported).

Their secret? They use the hash function!

Don't forget: when we're not busy writing custom datastructures, we are available to help you on your Clojure projects or working on ClojureDart or on our app Paktol (The expense tracker focused on making both ends meet!).

How hashing relates to balancing

If you consider 32-bits number, half of them ends (when written in binary) by 0. One 4th of them ends by 00, one 8th by 000, one 16th by 0000, one 32th by 00000... We have a geometric progression (we multiply by a constant factor, 2 here, at each step).

In a complete binary tree, there's one node at depth 0, 2 at depth 1, 4 at depth 3, 8 at depth 4, 16 at depth 5. Another geometric progression.

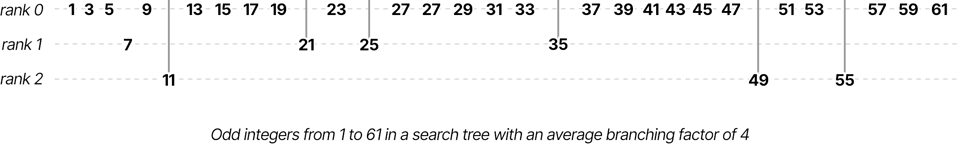

The key idea is to put these two geometric progressions together: if the 32-bit hash of key ends with N zeros then this key will appear at depth 32-N (N is called the rank) in the tree!

Let's see how the integers from 0 to 999 would be spread out using this idea:

user=> (->> (range 1000)

(map #(Integer/numberOfTrailingZeros (hash %)))

frequencies

(into (sorted-map)))

{0 500, 1 262, 2 121, 3 58, 4 26, 5 17, 6 7, 7 4, 8 3, 9 1, 32 1}

Very smooth progression with all ranks being at their expected capacity from 0 to 9... except for this outlier at 32. This one is because 0 is special-cased in Clojure there's a special case that (hash 0) is 0. Let's just flip all bits with a bit-xor -1 to drown zero amongst the first level crowd.

user=> (->> (range 1000)

(map #(Integer/numberOfTrailingZeros (hash (bit-xor % -1))))

frequencies

(into (sorted-map)))

{0 498, 1 254, 2 137, 3 60, 4 27, 5 15, 6 4, 7 2, 8 1, 9 2}

This time it's less perfect as we have two items at rank 9 but only one at rank 8 but c'est la vie and in practice it's a rare occurence.

It's a rare occurence because we want our tree to be shallow enough and we achieve that by having a higher average branching factor. (In the ClojureDart implementation it's 16: we consider hash bits 4 by 4 so the max dept is 8 (32/4).) The higher the branching factor is, the less likely it is to have a lone higher ranked node.

See if we simulate a 16 branching factor:

user=> (->> (range 1000)

(map #(quot (Integer/numberOfTrailingZeros (hash (bit-xor % -1))) 4)) ; 4 because we need 4 bits

frequencies

(into (sorted-map)))

{0 949, 1 48, 2 3}

And {0 9347, 1 617, 2 33, 3 3} for 10,000 items, {0 93814, 1 5817, 2 337, 3 30, 4 2} for 100,000.

Values are properly spread over ranks.

Why is it simpler?

Since a value is deterministically assigned to a rank, it's not going to move in the tree: it will always be at this rank. It's the power of statistics (aka the quality of the hash function) which guarantees the tree is balanced. If that worries you, it's the same statistics which make hash maps work.

Given its simplicity, nodes can be reduced to just an array:

- Inner nodes arrays have an odd length and are references to children nodes separated by values in order.

- Leaves arrays consist only of values in order since there are no children

The tree object only has to store a reference to the root node and the rank of this root node.

The empty tree is thus a pointer to an empty array and a rank field set to 0.

Nota bene: here I use interchangeably key and value as we are just concerned with the tree and not with the storage of the value part of a key-value pair. Conceptually we are explaining sorted sets and maps are left as an exercise to the reader. For the record in ClojureDart sorted maps we chose to never store the "actual value" in inner nodes: they are all stored in leaves and the associated key is also repeated in the leaves. It makes iteration simpler/faster.

Lookup

Lookup is as plain as the memory layout: start from the root node, test the lookup key against values to figure out which branch to follow. Repeat until the lookup key is found or rank 0 reached.

Insertion

First determine the rank for the inserted key. Then performs lookup but stops as soon as the target rank is reached.

When the key is present, you are done.

When the key is absent, a branch sits in its place. We call this branch the incumbent branch.

The incumbent branch has to be split in two: the half which contains values less than the key and the one with values greater than the key. Each half will be inserted on each side of the key.

Pseudo-code for this case is:

let i be the index of the incumbent branch

let new-node be a copy of the current node with two extra slots such that:

new-node[j] := node[j] for 0 <= j < i

new-node[i+1] := key

new-node[j+2] := node[j] for i < j

call split(node[i], key, rank-1, new-node, i, new-node, i+1)

where split is:

split(array, key, rank, left, l, right, r):

if rank > 0 then

let i be the index of the incumbent branch

left[l] := copy array from its start to i (excl.) with one extra slot at the end

right[r] := copy array from i+1 (incl.) to its end with one extra slot at the start

call split(array[i], key, rank-1, left[l], i, right[r], 0)

else

let i be the index such that array[i-1] < key < array[i] (with tests involving out of bounds indices omitted)

left[l] := copy array from its start to i (excl.)

right[r] := copy array from i (incl.) to its end

Special case: the rank is greater than the root's rank: the branches resulting from the split must be padded to match the desired rank.

Deletion

First determine the rank for the deleted key. Then performs lookup but stops as soon as the target rank is reached.

When the key is present, take the two branches on its sides and join (zip) them together. Replace these two branches and the deleted key by the joined branch. This is the opposite operation to splitting.

Pseudo-code for this case is:

let i be the index of the key in the node

let new-node be a copy of the current node two slots shorter, such that:

new-node[j] := node[j] for 0 <= j < i-1

new-node[j-2] := node[j] for i+1 < j

new-node[i-1] := zip(node[i-1], node[i+1], rank-1)

where join is:

zip(left, right, rank):

if rank > 0 then

let i = length(left)-1

let new-node be a new array such that:

new-node[j] := left[j] for 0 <= j < i

new-node[i] := zip(left[i], right[0], rank-1)

new-node[i+j] := right[j] for 0 < j < length(right)

return new-node

else

return array-concat(left, right)

Special case: when the resulting root node has no value (is a single-child node), its child becomes the new root and this rule is applied to the new root.

When the key is absent, well, you are done!

In practice it's even simpler

Splitting and joining branches are no big deal (except if you insist on writing split in pure FP where it becomes awkward — but since all mutations occur on fresh unshared arrays, it's safe).

As we have seen the overwhelming majority (in ClojureDart case, 93.75% (15/16)) of values live at rank 0 and will thus never trigger a split or a zip but only mundane array copies.

Conclusion

We hope we have piqued your interest for these sorted trees as an alternative to better known red-black trees and b-trees.

If you want to read more, a good starting may be the paper which introduced Zip Trees as the key insight of using a deterministic geometric distribution as ranks led to the tree explained above.

You just read issue #10 of Curiosities by Tensegritics. You can also browse the full archives of this newsletter.