New audio engine needs beta testing

Note: I'm sending this forum post out as a newsletter because a) I need beta testers for this new audio engine fast, and b) there's a whole lot of "backstage" content in here! Hope you enjoy it.

A few weeks ago, I slowly started receiving reports of audio-related issues in the Strum Machine iOS app:

- Silence where there should be sound

- Disrupted timing/rhythm

- Stuttering or distortion

- Error messages appearing when you try to play

I think only around 2% of users ran into these issues, but that's still way too many. So I've been working day in and day out toward a permanent fix to these problems, and today a brand new iOS audio engine enters beta testing.

I'll go into detail about what I've been doing behind the scenes below, including the history of Strum Machine audio on iOS and my usage of AI 🤖 in building this latest solution, because I think some of you may find it interesting. But first, let's cut to the chase...

If you're having problems, here's what to do:

- Restart your device for a quick fix. As far as I know, this always resolves the problem, for at least a day (and often much longer).

- Help test the new audio engine by installing the SM Beta app. (You will first be prompted to install Apple's TestFlight app, and then you'll be able to install the SM Beta app.) The new audio engine it uses will replace the existing, problematic one as soon as enough people have verified that it works OK.

- If you try the SM Beta app and the new audio engine, tell me how it goes! Your feedback is so helpful, and I don't have that many users so it's likely that you may be the first to report a particular issue, and the one responsible for me becoming aware of it and able to fix it!

A big shout-out and "thank you" to everyone who has written in about these bugs. I appreciate all feedback – the compliments, the feature suggestions, the constructive criticism – but these bug reports may be the most valuable emails I receive, because they help me keep Strum Machine running smoothly, which is my number one priority.

What's happening behind the scenes

(I tried to keep this short, but I think I failed! You've been warned. 😉 I did my best to strike a balance between including interesting technical details and writing for a non-technical audience. If you are reading and don't understand some bit of jargon, just keep going; it's probably not important.)

It's been a bit of a rough month for Strum Machine.

I think the problems started with the big "infrastructure updates" release I did at the beginning of July, based on the timing of it... I didn't start hearing from folks until about two weeks later, but at least one person said the trouble started "a couple of weeks ago".

The thing is, I did not touch the audio/playback code in that big update, and I've looked through the "diffs" (comparison between old and new code) and couldn't find any reason there would be new problems. But software can be mysterious like that... especially when part of your software is a black box you know nothing about. (More on that later.)

Thus began my game of Whack-A-Mole. I would first try to reproduce the problem. Failing that – most problems weren't reproducible on my devices – I would look for something in the code that could have conceivably caused the problem, write a fix, and deploy that update. At least twice, this seemed to work and the coast seemed clear... and then a few days later I would start getting reports of a different audio-related problem. Whack-A-Mole.

Eventually, it became clear: the audio engine that I've been using since 2022 was misbehaving in ways I could not figure out. To ensure Strum Machine's reliability and longevity going forward, I decided to build a new audio engine for iOS – Strum Machine's fourth audio engine!

A Tale of Four Audio Engines

The first audio engine was based on the Web Audio API. This is the technology that powered the very first version of Strum Machine (then only a web app) in 2016, and still powers the web version of Strum Machine, the desktop apps for Windows/Mac/Linux, and the Android app. I'm counting this as one "audio engine" even though I started out using the SoundJS library and eventually rebuilt everything from scratch.

Web Audio was all I needed... until late 2021 and the release of iOS 15. Apple "revamped" their implementation of the Web Audio API, and introduced a whole bunch of buggy behavior that affected users of the (then fairly new) iOS app. After evaluating a bunch of options, I went with a promising "best-of-breed" audio library called AudioKit, and hired a developer experienced with the library to build me an audio engine for Strum Machine. I also learned a decent amount of Swift, Apple's high-level programming language, along the way. (Here's where I announced the start and completion of that project.)

A month later, I had a "native" iOS audio engine that served us well... for about ten months. Then, in the fall of 2022, I released the new strumming patterns, which were simulated using more complex playback techniques that the new audio engine couldn't handle due to fundamental limitations in Apple's AVAudioEngine API. 🤦‍♂️ So I had to delay that release while I scrambled to come up with another audio solution for the iOS app.

Enter FMOD. It's primarily designed for games, and has many features (3D positional audio, Unity integration, tons of DSPs) that I had no need for... but it was highly optimized and could handle as many samples as Strum Machine needed to throw at it. I had to learn Objective-C++ (a mid-level programming language), and it took some trial and error and hired help, but I got it working. Strum Machine on iOS has been powered by FMOD since October 2022, and that has mostly been a positive thing. Besides working better than Apple's broken Web Audio implementation, it gave us the ability to have Strum Machine play in the background and ignore the status of the mute switch. Hooray!

Now, FMOD always had problems – random crashes, refusing to initialize, etc. – but they were generally quite rare and over time I was able to fix some of them. But with this latest batch of problems, I really felt hamstrung by how "opaque" FMOD is. FMOD is closed-source, proprietary software, and the errors being logged were coming from inside the "black box" of the FMOD Engine. Moreover, the error codes were either completely generic and unhelpful, or they suggested they were a result of limitations or quirks within FMOD itself. Maybe it's a skill issue, maybe I'm not interacting with the library correctly... but I don't think so. (Not trying to dunk on FMOD too much – they are a nice small company and their product is well designed and seems to work fine for a lot of developers.)

After a few weeks of struggling to understand what was going on with FMOD, a thought came to me: Strum Machine would be much better off if it depended not on a complex, opaque audio engine, but a simple, custom audio engine. And I thought that maybe, with a little help from my ~~friends~~ AI, I could indeed replace everything I'm depending on FMOD for with custom code that I can understand – and debug – myself.

How the new audio engine works

The web audio engine (the one that still powers Strum Machine in the browser, desktop and Android apps) is highly abstracted. You load an MP3 file into memory and then give it instructions like "play this sample 0.35 seconds from now, at 70% volume, panned 20% to the right." It's (mostly) that simple.
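For the technically curious, an instruction like that maps onto the Web Audio API roughly as follows. This is an illustrative sketch, not Strum Machine's actual code: the function name `playSample` and the small `...Like` interfaces are made up for the example (they describe only the handful of Web Audio members used, so the snippet also type-checks outside a browser). The Web Audio calls themselves (`createBufferSource`, `createGain`, `createStereoPanner`, `connect`, `start`) are the real ones.

```typescript
// Hypothetical structural types covering only the Web Audio members used here.
// In a browser, a real AudioContext and AudioBuffer should fit these shapes.
interface ParamLike { value: number }
interface NodeLike { connect(destination: unknown): unknown }
interface SourceLike extends NodeLike { buffer: unknown; start(when?: number): void }
interface GainLike extends NodeLike { gain: ParamLike }
interface PannerLike extends NodeLike { pan: ParamLike }
interface ContextLike {
  currentTime: number;
  destination: unknown;
  createBufferSource(): SourceLike;
  createGain(): GainLike;
  createStereoPanner(): PannerLike;
}

// Schedules one decoded sample: `delaySeconds` from now, at `volume`
// (0 to 1), panned from -1 (full left) to +1 (full right).
// Returns the absolute start time on the context's clock.
function playSample(
  ctx: ContextLike,
  buffer: unknown,
  delaySeconds: number,
  volume: number,
  pan: number
): number {
  const source = ctx.createBufferSource();
  source.buffer = buffer;

  const gain = ctx.createGain();
  gain.gain.value = volume;

  const panner = ctx.createStereoPanner();
  panner.pan.value = pan;

  // Signal chain: source -> gain -> panner -> speakers.
  source.connect(gain);
  gain.connect(panner);
  panner.connect(ctx.destination);

  const startTime = ctx.currentTime + delaySeconds;
  source.start(startTime);
  return startTime;
}
```

With this sketch, "play this sample 0.35 seconds from now, at 70% volume, panned 20% to the right" becomes `playSample(ctx, buffer, 0.35, 0.7, 0.2)`. Notice that the code never touches the audio data itself; the browser does all of that behind the scenes.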

FMOD is built around similar abstractions, albeit in a slightly less "friendly" way. I had to learn about allocating/deallocating memory, passing pointers around, etc. But at the end of the day, it's still a matter of sending the engine files and commands about what to do with them.

With this new audio engine, I'm directly manipulating audio samples as raw numbers. It's way "closer to the metal" than anything I've done before.

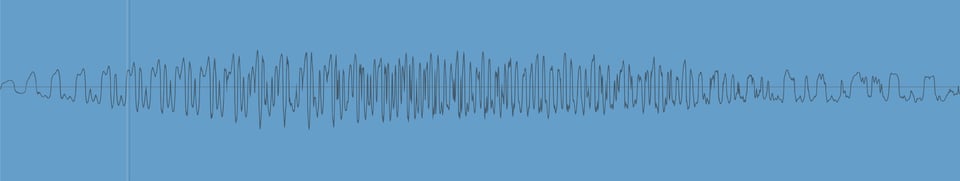

Have you ever seen an audio waveform, like in an audio-editing program or visualization of some kind? It's showing you how the cone of a speaker is supposed to move back and forth over time in order to recreate the sound in the file:

This audio is represented in memory as a series of numbers between -1 and +1 that represent the peaks and valleys of the waveform. Typically there are 44,100 or 48,000 of these numbers per second. That's a lot of numbers!
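To make that concrete, here is a small sketch (TypeScript used purely for illustration; the function names `sampleCount` and `sineWave` are made up, and none of this is Strum Machine's code). It counts how many numbers a clip needs and builds one second of a pure tone as a plain array of values between -1 and +1.

```typescript
// How many numbers does a clip need? One per sample, per channel.
function sampleCount(seconds: number, sampleRate: number, channels: number = 1): number {
  return Math.round(seconds * sampleRate) * channels;
}

// Builds a sine tone as raw samples: each entry is the speaker-cone
// position at one instant, always between -1 and +1.
function sineWave(frequencyHz: number, seconds: number, sampleRate: number): Float32Array {
  const samples = new Float32Array(sampleCount(seconds, sampleRate));
  for (let i = 0; i < samples.length; i++) {
    const timeInSeconds = i / sampleRate;
    samples[i] = Math.sin(2 * Math.PI * frequencyHz * timeInSeconds);
  }
  return samples;
}

const oneSecondMono = sampleCount(1, 44100);          // 44,100 numbers
const threeMinuteStereo = sampleCount(180, 48000, 2); // 17,280,000 numbers
const tone = sineWave(440, 1, 44100);                 // one second of a 440 Hz tone
```

A three-minute stereo recording at 48,000 samples per second works out to over 17 million numbers, which gives a sense of how much data an audio engine shuffles around.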

When building an audio engine from scratch, there are no abstractions between your code and these numbers. Want to play a sound? Loop through the numbers and copy them into the output buffer. Want to adjust the volume? Multiply each number by a value like 0.5. Sample rate of the audio file doesn't match the output device? You'd better do some linear interpolation to the output rate or everything will sound off-pitch!
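Those three operations can be sketched in a few lines each. Again, this is a simplified illustration in TypeScript and not the engine's real code: `mixInto` and `resampleLinear` are hypothetical names, the audio is assumed to be mono, and `offset` is assumed to be zero or greater.

```typescript
// "Play a sound" + "adjust the volume": add the sound's samples into the
// output buffer starting at `offset`, multiplying each one by `gain`.
// Adding (rather than overwriting) lets several sounds overlap.
function mixInto(output: Float32Array, source: Float32Array, offset: number, gain: number): void {
  const count = Math.min(source.length, output.length - offset);
  for (let i = 0; i < count; i++) {
    output[offset + i] += source[i] * gain;
  }
}

// Sample-rate conversion by linear interpolation: each output sample is a
// weighted blend of the two input samples nearest to its position in time.
function resampleLinear(input: Float32Array, inputRate: number, outputRate: number): Float32Array {
  const outputLength = Math.floor((input.length * outputRate) / inputRate);
  const output = new Float32Array(outputLength);
  const step = inputRate / outputRate; // input samples advanced per output sample
  const lastIndex = input.length - 1;
  for (let i = 0; i < outputLength; i++) {
    const position = i * step;
    const left = Math.min(Math.floor(position), lastIndex);
    const right = Math.min(left + 1, lastIndex); // clamp at the end of the file
    const fraction = position - left;
    output[i] = input[left] * (1 - fraction) + input[right] * fraction;
  }
  return output;
}

// Example: a 4-sample ramp at rate 2, converted to rate 4, then mixed at half volume.
const ramp = new Float32Array([0, 1, 2, 3]);
const upsampled = resampleLinear(ramp, 2, 4);
const mixBuffer = new Float32Array(4);
mixInto(mixBuffer, new Float32Array([1, -1]), 1, 0.5);
```

Linear interpolation is the simplest way to convert between rates. Higher-quality resamplers use more elaborate filtering, especially when converting to a lower rate, so treat this as the minimal version of the idea.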

It was all rather daunting at first... but, honestly, also a bit thrilling. After so long dealing with all these abstractions in other libraries – not to mention a coding career focused exclusively on high-level languages like JavaScript and Ruby – it's kind of nice to be working so "close to the machine". To a computer, everything is just numbers in the end, after all.

Also, it was less daunting than it would've otherwise been because I had some ~~superhuman~~ inhuman help.

Letting AI drive... then taking the wheel back

I've been experimenting with the use of AI to assist Strum Machine development for at least a couple of years. It's been occasionally useful as a sounding board for when I get stuck, and its "smart autocomplete" comes in handy sometimes... and up until recently, that's been about it.

Things changed a couple of months ago. Some new "agentic" AI models came out (Claude Sonnet 4 to be exact) that were just smart enough that they could do an impressive amount on their own. I've been experimenting with them on the side, but have generally not trusted them enough to use them heavily in Strum Machine itself.

But this new audio engine was a small, self-contained project, being built from scratch, in a problem domain that these LLMs would have a lot of training data on. Pretty much the ideal situation to try letting the AI build the thing instead of me. So I told it what I needed and let it run wild.

A few hours and some number of revisions and bug fixes later, I had something that worked! Well, sort of. It was enough of a proof of concept that I knew building this from scratch was the way forward. But the code was a mess, and the architecture of the engine was going to be very limiting.

It was then that I realized one of the big advantages of having AI write the first draft: neither of us would mind if I burned it down. So I had it analyze the code to come up with a better architecture for the engine (which I edited heavily to reflect what I learned in reviewing its code), then wiped the slate clean and had it build a new engine from scratch. If I had spent days/weeks building that first draft, I would have been much more hesitant to start over and choose a better path. 😅

The second draft quickly led to a third draft. From then on, I went back and forth between giving it tasks (which it worked on autonomously, including writing and running tests to verify behavior) and writing/editing code myself. It only took about a week to go from nothing to submitting a beta app to Apple, which I did on Friday evening. [It was approved for public download Sunday morning.]

The AI is simultaneously super impressive (writing a fade-processing function that would have taken me an hour to write in under two minutes) and super stupid (randomly that same fade-processing function later on, for example). It's like an overeager intern that knows the whole internet but also can't see why the thing it just said is flat-out wrong and directly contradicts what you just told it.

So I'm using it the way I think AI tools should always be used: with a high level of distrust. That's why I review every line of code that it writes. This is a mission-critical part of Strum Machine, and so it's important that I have not only read every line, but I understand every line. It takes time, but it means that as I hunt down little bugs and edge cases, I have a good idea of where to look, versus hoping the AI can stumble into a solution.

Putting aside concerns about broader societal and environmental impacts of this technology for a moment... it's been a very useful thing for Strum Machine these past few weeks. I'm an experienced programmer, but I lack the experience with low-level programming that is needed to build an audio engine like this. AI has really helped bridge that gap in my understanding, such that I can now mentally follow the path of audio production in Strum Machine, from the highest level of "we need to hear these sounds from these instruments at these times" in the web/JavaScript layer, all the way down to "copy these numbers into the output buffer that will be sent directly to the speaker". I think that's pretty cool.

I think I will leave it at that. Please try out the new SM Beta app on your iPhone/iPad and let me know how it goes. And thanks for your patience as we weather this storm of unreliability!