Where Do Photos Go In The Era Of Total Searchability?

When Facebook was in its messy adolescence, after the invention of endless scroll but before regulators started to ask awkward questions about where all the data was going, there was a sudden glut of personality tests. After taking a short test, users would receive a report which claimed to reveal their personality type or some other insight. In return, their test answers handed app developers valuable data in the form of personal information. There were mutterings about the true intent of such games, but it was not until the activities of Cambridge Analytica came under scrutiny in the aftermath of the UK's Brexit vote and Donald Trump's 2016 win that societies began to see how powerful those little shreds of personal information could become when gathered alongside millions of similar pieces and placed in the wrong hands.

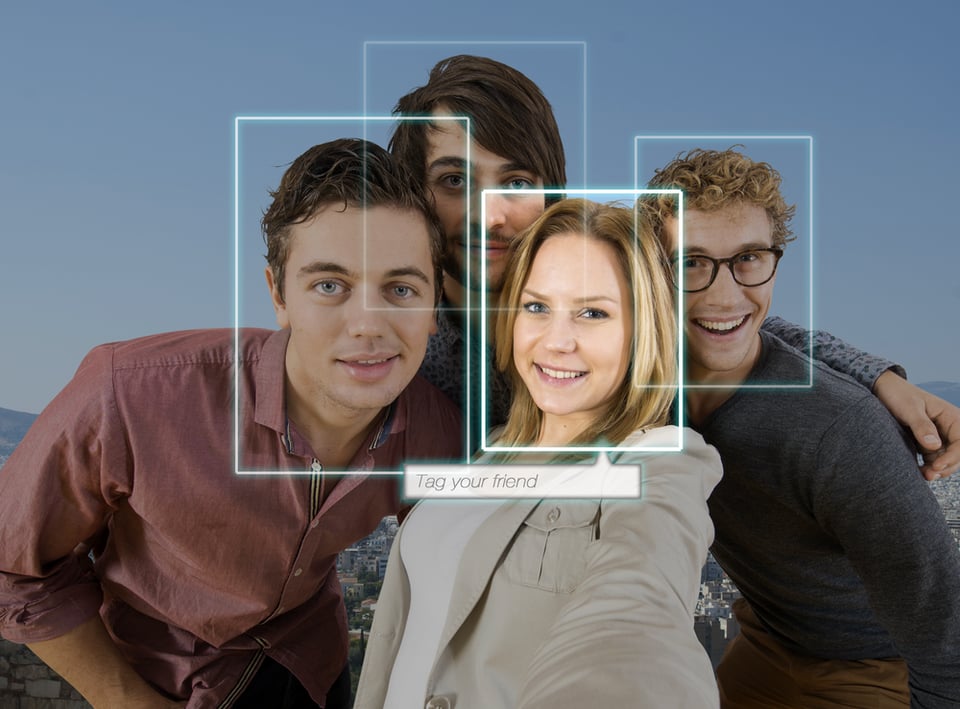

Personal data (including metadata) has been prized since the dawn of the internet, but web companies only really started to be able to access the visual information contained within images relatively recently. Sites such as Flickr and Myspace were once huge forums for the sharing and curation of photography but their archives followed a root and branch logic, linking images primarily to their creators. It took the explosive targeted advertising-driven growth of Facebook and other social media sites to underwrite the development of the technologies which removed friction and incentivised image storage, interpretation and distribution. The social sites were also the first to fully commercialise the informational potential of digital images, which could attract and retain visitor attention while encouraging users to impart information about the maker, their friends and their lifestyles via tagging, allowing for better advert targeting.

By the mid 2010s questions around online privacy were part of mainstream social discourse, thanks in part to Edward Snowden and Wikileaks revealing the extent to which states could compromise private devices. It became common to see laptop cameras obscured with sellotape or stickers, and a growing awareness of context collapse, possibly due in part to some pretty amazing digital pile-ons, led to job cuts at Facebook and elsewhere as users abruptly stopped sharing personal information. The illusion of privacy that had been a central part of the early internet was shredded, its purported communities unmasked as vast opencast data mines overseen by some of the world’s most awful people. Scrutiny of the provenance of training datasets increased, and many countries put in place regulations to protect the privacy of online citizens. But Pandora’s box was open; digital systems had infiltrated our work, social, romantic and political lives, and the choice was not whether to use them but rather how to limit the harm they could cause. A certain cynicism set in, with users accepting that the price of free websites would inevitably be a loss of privacy.

Since then, making has become quotidian, sharing easier and storage all but free, and images have assumed a central role in digital communication. From emojis to memes to selfies, it is the image which serves as the great connector, offering makers and viewers broad interpretability and the closest thing yet to speaking in tongues. Digital systems have raced to keep up and preserve their owners’ access to users’ thoughts. Systems like Google Images leverage colossal machine learning power to decode photographs into symbols, resulting in an internet in which the mechanical legibility of an image is an automated process, not the result of human interpretation. Meanwhile, no matter how cynical users become, their cynicism focuses on the personal and overlooks the networked dimension. I may not be putting myself in danger when I share images of myself, but my participation in photographic communication serves to train those systems and make them better. Of course, meanings and subtexts still escape the grasp of our mechanical overlords, but progress has been swift and these days even relatively small companies have the power to decipher at astonishing speed what - and who - is in pictures. The end result is that images, like text or numbers, are now indexed, tagged, cross-referenced and categorised at a monumental scale. A picture is no longer just a picture- it’s a data point.

Which brings us hurtling into 2019, when a viral trend called the Ten-Year Challenge hit the headlines. To complete the challenge users were invited to upload two profile pictures side by side, one from a decade ago and one from the present. People obliged in their millions, adding comments about how little or how much they and others had changed. It was all in good fun but, as various voices including Kate O'Niell pointed out, the response revealed how easy it still is to mine data. By participating, users were potentially providing a vital training data set, for free, to companies seeking to refine facial recognition algorithms. Facebook denied playing any role in the craze, but their denial may have been prompted by their own facial recognition snafu back in 2011, when they faced a barrage of criticism for implementing the technology without the consent of users.

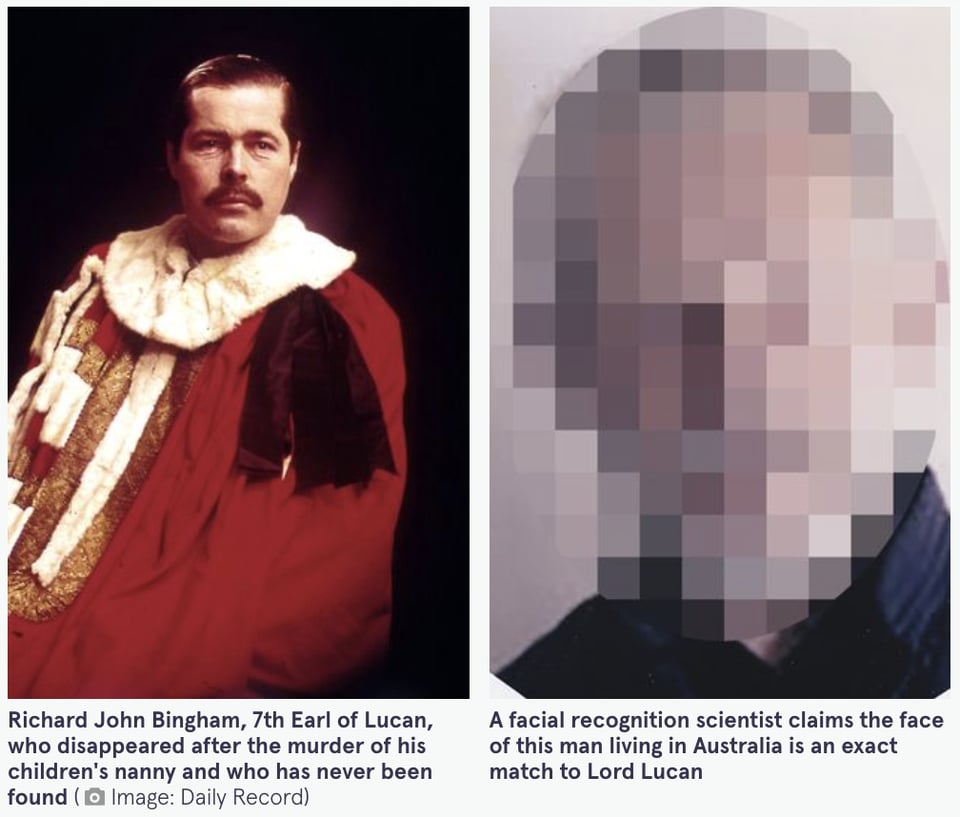

Personal data is the currency of the capitalist internet, which goes some way to explaining why sites have worked so hard to make images its language. Images are gossips; they spill their secrets to anybody who asks, and a careful interrogator can make them confess far more than their creators intend. We are also careless with where we make our images available, sometimes by choice (Instagram) and sometimes by necessity (CCTV, for example). Welcome to the era of total searchability, in which recent and networked images of almost all human endeavours can be combed through at unthinkable speed and scale. A searcher might look for names, faces, locations, text or any combination of these and more, and the result is a startlingly total form of user-generated surveillance.

Perhaps the most famous positive example of this is Bellingcat, whose remarkable OSINT (open source intelligence) tactics have made annoying Vladimir Putin a viable hobby for anybody with a stable internet connection. But it's not hard to see that if the good guys can use images to identify bad guys, the bad guys can also probably use images to identify good guys. A picture of a group of friends hanging out together at a protest is both a memory and a piece of evidence. Posting it online makes it available to be combed by security forces, its subjects identified, and a big bus full of Law and Order© sent to stamp on their toys. Even if datasets like LAION can be sanitised, ordered, their biases and blindspots beaten out of them, that fundamentally does not matter because the tools they have trained, the systems they have enabled, are only as benevolent as the people who use them.

Photography has always positioned itself as bearing witness, and has frequently made conditional offers of anonymity as it explored the lives and times of vulnerable populations. Topics such as gay rights, environmental activism and democratic protest movements served as the medium's bread and butter throughout the long 20th century, and while those photographed could never be sure to be free of the risks associated with identification, many agreed to be photographed*. They showed (and show) great bravery in allowing their stories to be told, but this bravery went hand in hand with a reasonable expectation (often going as far as a promise) that even in cases in which they were actually identifiable, the chances that they might suffer repercussions from their participation were slim. Publications were typically fleeting, the average citizen had no meaningful way to search for old news, and state surveillance required resources, expertise and time that made it non-viable in many cases.

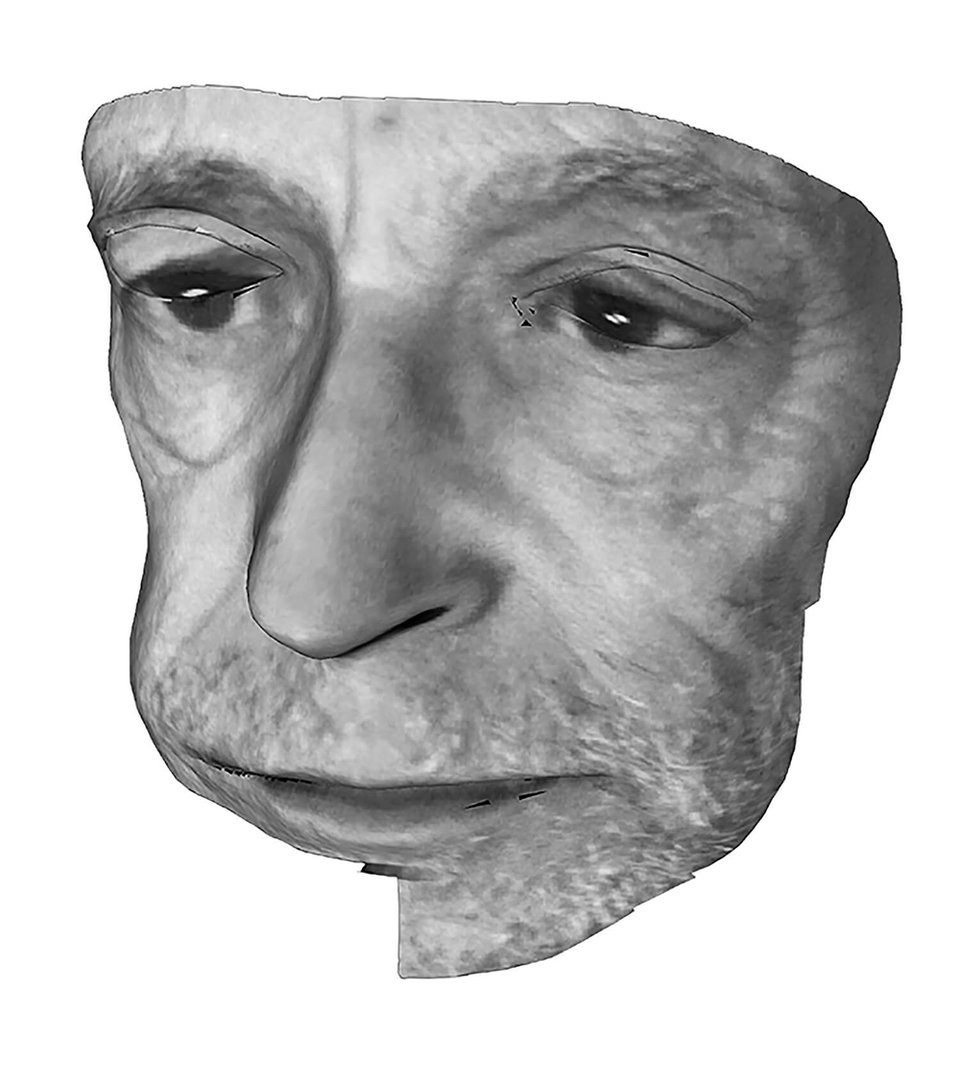

Not so today. The cataloguing of images, and in particular their potential to be linked via age-literate facial recognition systems, means that any image which is uploaded to the public internet is potentially vulnerable to examination, and thus to the identification of its subjects, whether actively or automatically. The globalisation of such systems and the ratcheting up of the speed and precision of search technologies poses huge challenges to civil liberties, of course- just being photographed at a protest is enough to end up in jail in some countries. But it also poses a huge and perhaps unanswerable challenge to photographers, about how to make compelling images in a world in which those same images are just as legible to our subjects' oppressors as their supporters. Where does photography go next?

*Perhaps even more common are the subjects whose participation in documentary images was coerced, poorly explained or involuntary. This is not besides the point- rather, it underscores the severity of the risks associated with this abrupt growth in interconnection.