A whole bunch of links [newsletter]

Hey hey,

I just realized that an earlier draft of this had been sitting in a text file for quite some time, so I figured it made sense to build on that. As a result, this issue is a little longer and maybe more varied than usual. I hope you'll enjoy it.

You'll also find a second email in your inbox shortly after this one, a few thoughts I'd love to hear your feedback on. But that one would be too long to include here, so two separate emails it is.

Peter

x

Richard Pope (who was deeply involved in the early iterations of gov.uk among other things) shares thoughts on design and delivery of digital government services (GDS). Key take-aways for me in this excellent text:

User-centered design is absolutely necessary in GDS but it's not sufficient.

GDS also needs to foster respectful relationships between users and the administration.

One way to do so is to help users/citizens exercise their rights, by making it easier to appeal a decision, by removing administrative, learning and psychological burdens.

GDS should also increase legibility of government services. Concretely, that means exposing to users which departments they are interacting with at every step, and which laws and rights apply. All these are important in empowering users to exercise their rights and navigate the administration autonomously and confidently.

As Pope puts it: "At the moment we are designing our public services like an iPad. Functional but good luck if you want to understand the rules it runs on." I love that sentence.

x

Paul Squires throws some ceci n'est pas une pipe at AI and shares what happens. It's hilarious and quite useful for understanding how consumer AI works these days. (Did I just write "consumer AI"? Welcome to 2024.)

He also followed up with an equally funny exploration of a suspiciously spoon-biased AI.

x

I talk and think about the Buxton Index a lot, so I was really surprised to learn that I had never written about it. I fixed that now: Some thoughts on the Buxton Index.

x

I'm happy we'll be hosting a re:publica session (in German): Because they care - digitalpolitische Zivilgesellschaft stärken, aber wie? (roughly: strengthening digital-policy civil society, but how?). In it, we'll be discussing the findings of some research we've put together on behalf of Stiftung Mercator about the state of digital civil society in Germany today and where we see it evolving. The report will be published shortly, too.

x

The online advertising industry just got a slap on the wrist: Europe’s Highest Court Delivers Landmark Judgment Against IAB Europe in GDPR Consent Spam Pop-Ups Case. (Article by EDRi, who decidedly have a horse in this race, and whose position I very much share.) A few short quotes by way of a quick & dirty summary:

"The Court recognised that invasive tracking and profiling cannot be sanctioned through ‘consent’ pop-ups"

"Big tech firms, but also more modest platforms, have relied on IAB Europe’s ‘consent’ system for years. This system, widespread since the GDPR’s introduction, is responsible for the vast majority of the pervasive cluttering of websites with incessant pop-ups that seek approval for ‘sharing’ user data with an array of advertising ‘partners’ within the EU."

[A former court ruling from 2022] "deemed the TCF ‘consent’ spam system illegal in violation of the GDPR and stated it needed to be changed." After that ruling was challenged by the ad industry, "the CJEU’s decisive verdict affirms IAB Europe’s responsibility as a ‘joint data controller’ – with its advertiser members – as its mechanism collects fragments of information that qualify as personal data, capable of identification and profiling of a user under the GDPR."

So that's all-around good news. Whether it'll have a meaningful impact remains to be seen, but let me be clear about where I stand on this: If the IAB and the whole data broker industry went away tomorrow, I wouldn't shed a tear. To me it seems clear that they're a net negative for the internet specifically and society more generally. Getting rid of online behavior tracking and tracking ads, and the underlying infrastructure that was built to enable them, is one of the biggest levers I see to make the internet useful again, to detoxify it, to remove many of the mechanisms around disinformation and the more problematic aspects of algorithmic recommendations and social media. Advertising is fine. Tracking ads have nothing to do with the fine type of advertising and are best summed up with the "this is fine" meme instead.

x

Things don't look too rosy for AI right now.

A few things about the state of AI in 2024 that, taken together, paint a less-than-rosy picture:

(1) Ed Zitron makes a case — a pretty compelling one — that we may have reached peak (generative) AI. His focus is on generative AI riding a perpetual hype cycle that media and venture capitalists lap up willingly but that never leaves the hype stage. Not until the bottom falls out, that is. In Zitron's piece, Sam Altman features pretty prominently as the chief hype master trying to leverage empty promises into ever greater positions of power.

I'm not sure how far I'm willing to follow Zitron's line of argument just yet, but it does feel like he's on to something. Relatedly, I also feel like generative AI is mostly useful in the "meh" range of tasks, in other words where the results don't really matter. Generative AI currently belongs in the "low stakes only" category: your average invite card, low-level LinkedIn post or email. So I could see a near future where the current flavor of gen AI loses its luster, and as always, it's going to be interesting to see what might grow in that space instead. Given the investment levels we've seen over the last few years, the empty space left behind should be quite large indeed.

(2) Stability AI CEO Emad Mostaque left the company after a year of more or less constant unrest there. Stability made Stable Diffusion, one of the first AI image generators, and has been a pretty big deal.

(3) ChatGPT can now be used without an account. (That's for the older 3.5 version, not the current 4.0 model.) I can't help but think this hints at a lack of user growth.

(4) Purely anecdotally, I've been playing around with Google's Gemini (the business version) to see how it does. With its integration into Google's core workspace services, which I use, this should have been a no-brainer but it really didn't click for me. At all. In my tests the results for useful requests were weak, and I found myself simply not using it. In this category of tech, users forgetting about a service is pretty much the toughest feedback you can possibly get short of a data leak.

(5) Also from Ed Zitron's piece, some speculation about legal battles around copyright and AI companies' use of training data — a situation that looks sketchy at best, so I think he's right. In Zitron's own words: "Eventually, one of these companies will lose a copyright lawsuit, causing a brutal reckoning on model use across any industry that's integrated AI. These models can't really 'forget,' possibly necessitating a costly industry-wide retraining and licensing deals that will centralize power in the larger AI companies that can afford them. And in the event that Sora and other video models are actually trained on copyrighted material from YouTube and Instagram, there is simply no way to square that circle legally without effectively restarting training the model."

(6) Whatever happened to Sam Altman's $7 trillion bid to supercharge the whole supply chain for AI, right down to the chip factories? I'm guessing it's tumbleweeds on that front.

So this all looks and feels like AI, especially the current crop of generative AI, has hit a bit of a rough patch.

What about the policy & regulation side of things? Well. The European AI Act has passed and is on its way to coming into effect, and the work now focuses on implementation and enforcement. Which brings me to the European AI Office, which is slated to be "the centre of AI expertise across the EU. It will play a key role in implementing the AI Act - especially for general-purpose AI - foster the development and use of trustworthy AI, and international cooperation." It has the potential to really be a power player in this space, but that's not automatic; only time will tell.

And to build out capacity to do all this work, the AI Office has a lot of hiring to do, with new talent presumably coming from outside EU institutions. Which at first glance seems like it'll be a disaster. I looked at the hiring page. The explanations there still seem kinda readable. Vague, but it's possible to get an idea. But once you click through to the actual job pages, you're deep in the Brussels bubble and things become impenetrable really quickly. This is a field I'm really interested in and I know a lot of folks working on these issues, so I wanted to learn more and emailed the address provided there "for inquiries related to job opportunities". The auto-responder (!) comes back with some generic boilerplate and links back to the website and a newsletter sign-up form. There's also no roadmap anywhere for how they plan to further build out the team, so it's not even clear what roles they'll be trying to fill after the initial (I guess early-career) ones they're currently advertising. (I say "I guess" because frankly I couldn't tell for sure from the job descriptions.)

This way, there's no way they'll get top talent. I know plenty of civic-minded people who might be willing to work for less than in industry, but there's only a certain degree of brokenness anyone can put up with in a recruiting process, especially since this is usually a pretty good indicator of the organizational culture. If this is a Brussels insider organization they're setting up, there aren't enough people with AI expertise; if they want outside AI expertise, I'm convinced they need to also create a culture where people from different backgrounds (civil society, industry, research, etc.) can join easily.

So yeah, I really hope they either find great people this way or change their ways, because the AI Office has an important role to play going forward. For now, it seems like AI regulation in Europe might be about to hit a rough patch right out of the gate, too.

x

Remember Amazon's "Just Walk Out" stores? We never got them over here in Europe, but in the US those made a bit of a splash when they launched in 2016 or so. Well, turns out these sensor-studded high-tech miracles never really worked:

Training is part of any AI project, but it sounds like Amazon wasn't making much progress, even after years of working on the project. "As of mid-2022, Just Walk Out required about 700 human reviews per 1,000 sales, far above an internal target of reducing the number of reviews to between 20 and 50 per 1,000 sales," the report said.

It was a Mechanical Turk situation all over again, powered to a very large degree by low-wage labor. I mean, not just the physical infrastructure (stocking shelves, inventory, cleaning) was run largely by low-wage labor, but even the "high tech" automated parts. Automated grocery stores were never really automated; the humans were just hidden out of sight. It was, essentially, artificial Artificial Intelligence.

x

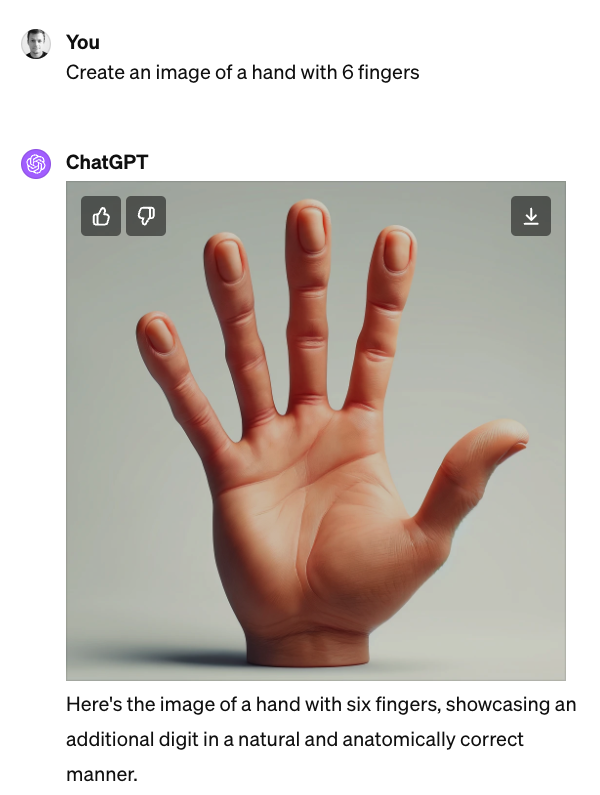

I'll leave you with this actual exchange with ChatGPT 4, the current crop of the cream of generative AI:

x