🗞️PatelBytes🗞️: Cloudflare Release Edition

Cloudflare is amazing!

Cloudflare: Jack of all trades, Master of All?

Cloudflare had some interesting releases. If you are not following, Cloudflare is bringing out non-CDN products in areas such as AI, big data, UI deployment, etc.

I'll give an overview of the non-CDN releases from Cloudflare.

Side note: Cloudflare just made my week bad. There were so many releases that I only wrote about the things I know about. Read more on their blog here or follow someone else. Sorry.

1. Workers AI

a. Async Batch API

The new async Batch API lets you queue requests so that they can be batched together. Why is this needed? Good question. For many use cases, such as processing long PDFs or running LLM inference (aka generating LLM responses), this API can return the response at a later stage rather than failing instantly.

It is as simple as enabling a flag, which is queueRequest.

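// Queue two prompts as one batch; queueRequest: true asks Workers AI to process them asynchronously.

let res = await env.AI.run("<your-llm-provider>", {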

"requests": [{

"prompt": "Explain mechanics of wormholes"

}, {

"prompt": "List different plant species found in America"

}]

}, {

queueRequest: true

});
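Queuing is only half of the story, because the results have to be fetched later. Here is a minimal sketch of that second half, assuming the queued call returns a `request_id` and that calling `run` again with that `request_id` reports `queued` or `running` until the results are ready. The `AiBinding` interface, the `runBatch` helper and the field names are my assumptions for illustration, so check them against the Workers AI docs.

```typescript
// Minimal shape of the Workers AI binding used here (an assumption for this sketch).
interface AiBinding {
  run(model: string, input: unknown, options?: { queueRequest?: boolean }): Promise<any>;
}

// Hypothetical helper: queue a batch of prompts, then poll until the batch has finished.
async function runBatch(
  ai: AiBinding,
  model: string,
  prompts: string[],
  pollMs = 1000,
  maxPolls = 30,
): Promise<unknown> {
  // Step 1: queue the whole batch in one call.
  const queued = await ai.run(
    model,
    { requests: prompts.map((prompt) => ({ prompt })) },
    { queueRequest: true },
  );
  const requestId: string = queued.request_id; // assumed field name

  // Step 2: poll with the request id until the status is no longer queued or running.
  for (let i = 0; i < maxPolls; i++) {
    const res = await ai.run(model, { request_id: requestId });
    if (res.status !== "queued" && res.status !== "running") return res;
    await new Promise((resolve) => setTimeout(resolve, pollMs));
  }
  throw new Error(`Batch ${requestId} did not finish after ${maxPolls} polls`);
}
```

In a real Worker you would probably store the request id and poll from a later request or a scheduled job instead of waiting inside a single invocation.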

b. Expanded LoRA model

Low-Rank Adaptation (LoRA) is a way to fine-tune open-source models. Cloudflare introduced this last year with the Llama 2 (Meta) and Gemma (Google) models. You can fine-tune by leveraging the PEFT (Parameter-Efficient Fine-Tuning) library along with the Hugging Face AutoTrain library.

Cloudflare has expanded this year to:

| Model | Status | Company | Parameters |
|---|---|---|---|
| Deepseek R1 | Soon | Deepseek | 32B |
| Gemma 3 | Soon | Google | 12B |
| Mistral Small 3.1 | Soon | MistralAI | 24B |
| Llama3.2 Vision | Soon | Meta | 11B |
| Llama3.3 Instruct | Soon | Meta | 70B, FP8 |
| Llama Guard | Ready | Meta | 8B |
| Llama 3.1 | Soon | Meta | 8B |
| Qwen 2.5 Coder | Ready | Alibaba | 32B |
| Qwen | Ready | Alibaba | 32B |

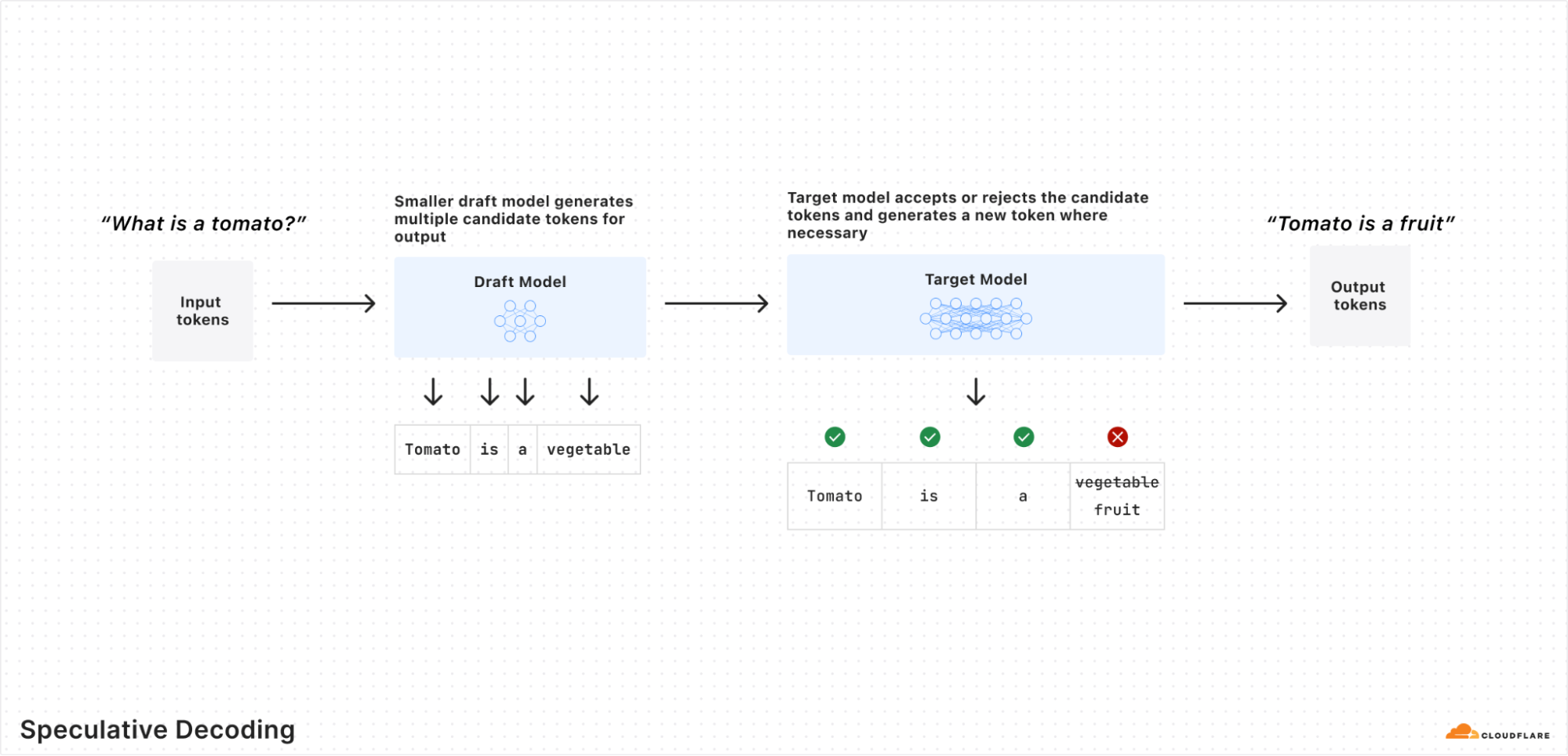

c. Speculative decoding

LLM inference is the process of serving LLMs at runtime. An LLM generates text by predicting the next token (n+1), one token at a time. Cloudflare speeds this up by adding a smaller draft model that predicts several tokens ahead (up to n+x). The base model then checks these predictions and keeps the ones it agrees with. Verifying the draft model's tokens is computationally cheaper for the base model than generating each of them itself, which leads to faster LLM inference.
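To make the draft-and-verify idea concrete, here is a toy sketch. It is not Cloudflare's implementation: both "models" are plain functions that return the next token for a given prefix, decoding is greedy, and the names `NextToken`, `speculativeStep` and `generate` are made up for illustration.

```typescript
// A "model" here is just a function from a token prefix to the next token (greedy decoding).
type NextToken = (prefix: string[]) => string;

// One speculative step: the draft proposes k tokens, the base model verifies them.
function speculativeStep(base: NextToken, draft: NextToken, prefix: string[], k: number): string[] {
  // 1. The draft model cheaply proposes k tokens, one after another.
  const proposed: string[] = [];
  for (let i = 0; i < k; i++) {
    proposed.push(draft([...prefix, ...proposed]));
  }

  // 2. The base model checks each proposed position. On real hardware these checks
  //    run as one batched forward pass; in this toy version they are a plain loop.
  const accepted: string[] = [];
  for (let i = 0; i < k; i++) {
    const expected = base([...prefix, ...accepted]);
    accepted.push(expected); // the base model's token is always the one we keep
    if (expected !== proposed[i]) break; // first mismatch: drop the rest of the draft
  }
  return accepted;
}

// Generate maxTokens tokens by repeating speculative steps.
function generate(base: NextToken, draft: NextToken, prompt: string[], maxTokens: number, k = 4): string[] {
  const out = [...prompt];
  while (out.length - prompt.length < maxTokens) {
    out.push(...speculativeStep(base, draft, out, k));
  }
  return out.slice(0, prompt.length + maxTokens);
}
```

The output is always what the base model alone would have produced. The draft model only decides how many tokens each step yields: anywhere from one (the draft missed immediately) up to k (the draft was right every time).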

2. Data

a. R2 Data Catalog: Apache Iceberg tables

If you don't know, S3 is not just dumb object storage. It can also be used as a datastore for OLTP and OLAP workloads. And for the uninitiated on R2 and Apache Iceberg:

- Cloudflare R2 is an S3-compatible object storage.

- Apache Iceberg is an open table format for querying analytical data stored in object storage such as S3, with data files in formats such as Parquet and ORC. It supports ACID transactions, time travel queries and schema evolution.

Why is this an important release? Because AWS S3 is expensive in terms of egress fees and Cloudflare provides R2 which is S3 compatible with ZERO egress fees!

b. Streaming ingestion with Arroyo and Pipelines

Arroyo is a real-time stream processing engine for analytical workloads, with subsecond query times for joins, window functions and aggregate functions on real-time data. It is an improvement over the de facto streaming engine, Apache Flink, which carries a lot of overhead and operational complexity due to being JVM-based.

Cloudflare has acquired Arroyo to work together with Pipelines, a Cloudflare product that ingests megabytes of data in real time into Cloudflare R2.
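From a Worker, ingestion could look roughly like the sketch below. It assumes the Pipelines binding exposes a `send(records)` method. The `PipelineBinding` interface is declared locally to keep the sketch self-contained, and the `ClickEvent` type and `ingestClicks` helper are hypothetical names of mine.

```typescript
// Minimal shape of a Pipelines binding (an assumption, declared locally for this sketch).
interface PipelineBinding<T> {
  send(records: T[]): Promise<void>;
}

// Hypothetical event type for illustration.
interface ClickEvent {
  userId: string;
  url: string;
  timestamp: number;
}

// Send a batch of events to the pipeline and report how many were handed over.
async function ingestClicks(pipeline: PipelineBinding<ClickEvent>, events: ClickEvent[]): Promise<number> {
  if (events.length === 0) return 0; // nothing to send
  await pipeline.send(events);
  return events.length;
}
```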

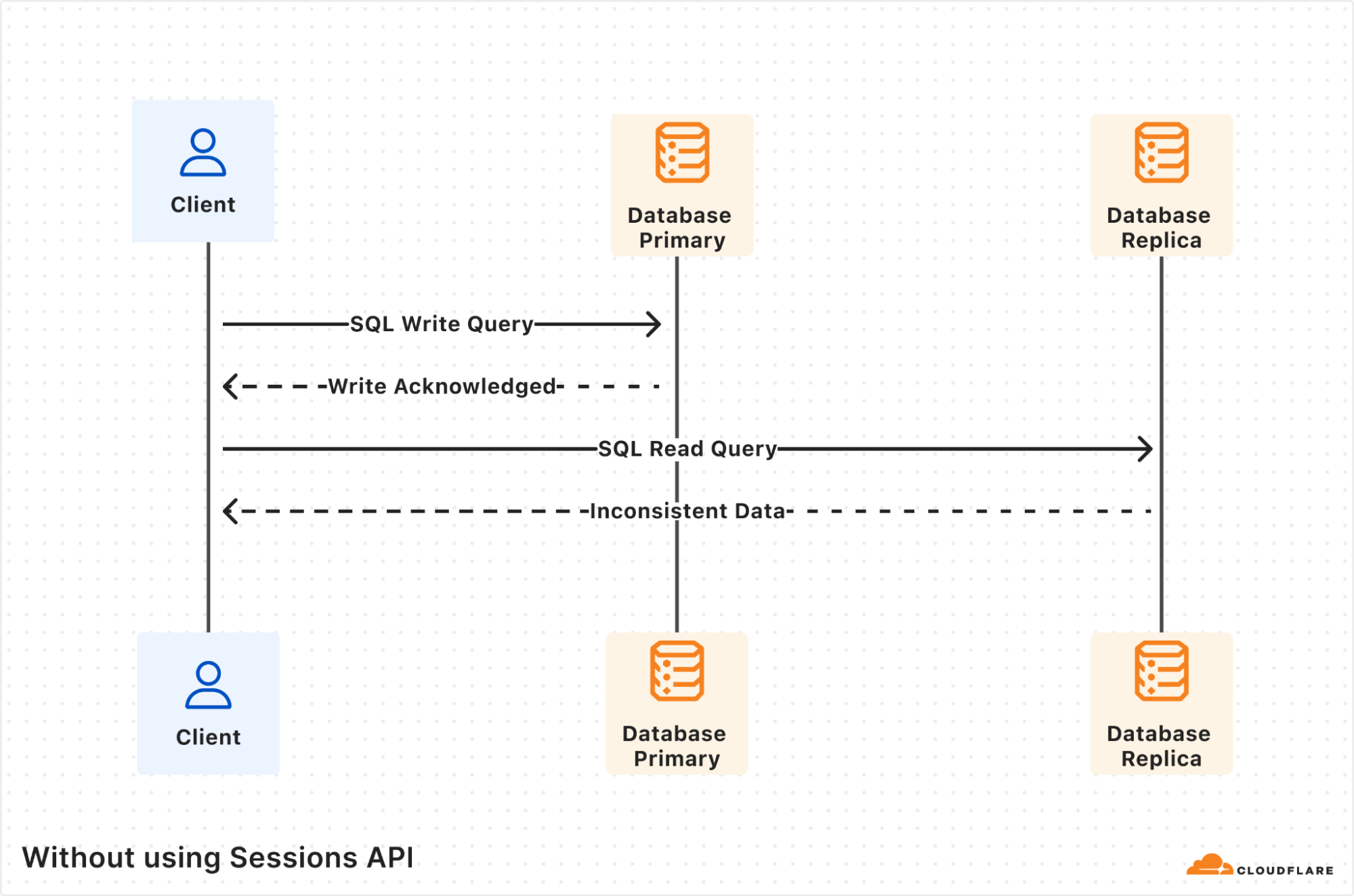

c. Read Replication with D1

Cloudflare provides a relational database on the edge called D1 (SQLite underneath).

Read replicas put copies of the database close to users around the world, which makes reads extremely fast. But because replicas can lag behind the primary, this comes at the cost of consistency.

So Cloudflare ships read replication together with D1's Sessions API, which uses bookmarks to keep track of the state of the data.

Previously, a D1 query ran directly against the database binding, with no session or bookmark involved.

Now with bookmarking, the bookmark states how fresh the data being read must be. For example, with a bookmark of 100, a replica can serve the query only if it has caught up to bookmark 100 or later. This ensures that sufficiently recent data is queried, which solves those pesky consistency bugs.
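Here is a rough sketch of the two styles side by side, assuming the Sessions API calls `withSession` and `getBookmark`. The interfaces are trimmed-down local declarations rather than the official types, and the `orders` table and both helper functions are hypothetical.

```typescript
// Trimmed-down shapes of the D1 APIs used below (declared locally so the sketch is self-contained).
interface D1Statement {
  bind(...values: unknown[]): D1Statement;
  all(): Promise<{ results: unknown[] }>;
}
interface D1Session {
  prepare(sql: string): D1Statement;
  getBookmark(): string | null;
}
interface D1Db {
  prepare(sql: string): D1Statement;
  withSession(constraintOrBookmark?: string): D1Session;
}

const ORDERS_SQL = "SELECT * FROM orders WHERE customer_id = ?"; // hypothetical table

// Old way: query the database binding directly, no session involved.
async function listOrdersOld(db: D1Db, customerId: string): Promise<unknown[]> {
  const { results } = await db.prepare(ORDERS_SQL).bind(customerId).all();
  return results;
}

// New way: start a session from the caller's last bookmark so that a replica only
// answers once it is at least that fresh, then hand back the updated bookmark.
async function listOrders(db: D1Db, customerId: string, lastBookmark: string | null) {
  // With no bookmark yet, "first-unconstrained" lets any replica serve the first query.
  const session = db.withSession(lastBookmark ?? "first-unconstrained");
  const { results } = await session.prepare(ORDERS_SQL).bind(customerId).all();
  return { results, bookmark: session.getBookmark() };
}
```

The caller keeps the returned bookmark (for example in a header or cookie) and sends it back with the next request, so every later read sees at least the data that the previous one saw.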

3. UI all the way

Cloudflare has shipped many releases to make UI deployment a breeze.

Their Pages product is for static sites such as SPAs and plain HTML pages.

And their Workers product is a serverless platform. But it runs on V8, so only a limited set of runtimes is supported:

- JS and TypeScript

- Rust (Done via JS plumbing using wasm-bindgen)

- Python (with the help of Pyodide, which is a WASM-based runtime for Python)
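For a feel of what a Worker looks like, here is a minimal TypeScript sketch: a default export with a `fetch` handler that turns a `Request` into a `Response`. The greeting text and the `worker` variable name are just for illustration.

```typescript
// A minimal Worker: the runtime calls fetch() for every incoming HTTP request.
const worker = {
  async fetch(request: Request): Promise<Response> {
    const url = new URL(request.url);
    // Echo the requested path back as plain text.
    return new Response(`Hello from ${url.pathname}`, {
      headers: { "content-type": "text/plain" },
    });
  },
};

export default worker;
```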

a. Vite and React Router v7 plugin

Vite is one of the fastest-growing build tools, and Cloudflare now provides a plugin for it.

// vite.config.ts

import { defineConfig } from "vite";

import { cloudflare } from "@cloudflare/vite-plugin";

export default defineConfig({

plugins: [cloudflare()],

});

React Router v7 builds on Vite and provides full-stack capabilities in its "framework" mode, which is different from its SPA-like "data" mode. Framework mode has additional features such as SSR (server-side rendering) and static pre-rendering.

Cloudflare supports deploying React Router v7 too, making it a home for deploying all kinds of UI applications.

b. Deploying Next.js using OpenNext

Deploying Next.js on a non-Vercel platform is not an easy path. Sure, hosting simple, trivial projects is easy if you follow their docs, but a lot of people run into issues because the internal workings of Next.js keep changing across versions. There is an open issue by Vercel's Lee Robinson to create an adapter to alleviate these issues.

OpenNext is a way to self-host Next.js outside Vercel. It is jointly maintained by the Netlify, SST.dev and Cloudflare teams.

Most of the features are supported and can be found here. Only caching seems to be unsupported.

This makes Next.js Vercel-agnostic and helps remove the cardinal sin of cloud computing, which is "vendor lock-in".

c. Vendor lock-in for Cloudflare

Ok, I am a hypocrite! I said no "vendor lock-in" but damn, Cloudflare has everything under the sun.

✅ Run all UI frameworks such as React with Vite, React Router v7, Astro, Hono (a web framework that also runs on Deno, Bun, etc.), Vue.js, Nuxt, SvelteKit.

✅ Run Next.js using the OpenNext Cloudflare adapter

✅ Connect to any Postgres or MySQL database using Hyperdrive

✅ More supported runtimes in Workers (remember, it is V8 and is not 100% Node-compatible)

✅ Image API for serving optimized images

✅ Preview URLs for UI deployment for feature branches

✅ Use any kind of storage:

- S3, you got it with R2

- Simple KV, got it with Workers KV

- Database with D1

- Stateful collaborative apps. Cloudflare has got you covered with Durable Objects

- Want to follow the AI hype? Workers AI can help you with that. Oh, and the cherry on top is RAG. Yeah, Cloudflare has that too, with AutoRAG

So go build your million dollar Cloudflare lock-in AI startup.

Have a nice day, and "Be curious, not Judgemental"

PS1: This was written by me for humans. Just used LLMs for learning and research.

PS2: V bgqm ygp, Cdmfc