⚡️How Software Engineers Make Their Software Run Faster?

I transformed a sluggish insertion script from 20 minutes to just 5-6 seconds using batch processing!

Last week, I was assigned a task to speed up an insertion script. The program was a straightforward DAG, running daily tasks to retrieve CSV files and process them into a database.

These files contain information about financial transactions, which can quickly become heavy due to the sheer number of rows—often reaching millions.

The script for inserting rows into the database followed a simple flow:

Read a row.

Perform checks to ensure essential data is present.

Attempt an insert.

Log errors for issues like duplication or invalid data.

Pretty simple, right? However, behind this simplicity was hiding a major issue.

The task was slow as HELL. 🤬

Processing a file with 4,000 records could take up to 20 minutes, which was far very very very from ideal.

This was where the hunt for the culprit began.🤺

The Suspected Causes 🔍

Latency? No, we had other tasks running on the database, and it wasn’t experiencing unusual delays. The database server was performing well, according to usage stats.

Disk usage? Yes, the machine's disk was under heavy usage due to large CSV files loading into memory. One solution could be processing the file in chunks rather than loading the entire file at once, which would help manage disk usage. However, this wouldn’t solve the core problem of the script’s speed.

So, what was wrong?

The Real Problem ⚡️

With my current knowledge, I knew that 4,000 individual inserts in the database would likely cause a bottleneck. We were looking at around 1.78 transactions per second.

After some research, I discovered that batching is a more efficient approach for inserting large numbers of rows. But why wasn’t it already in use?

Well, batching introduces a risk: if there’s an error in one record within a batch, it can invalidate the entire batch.

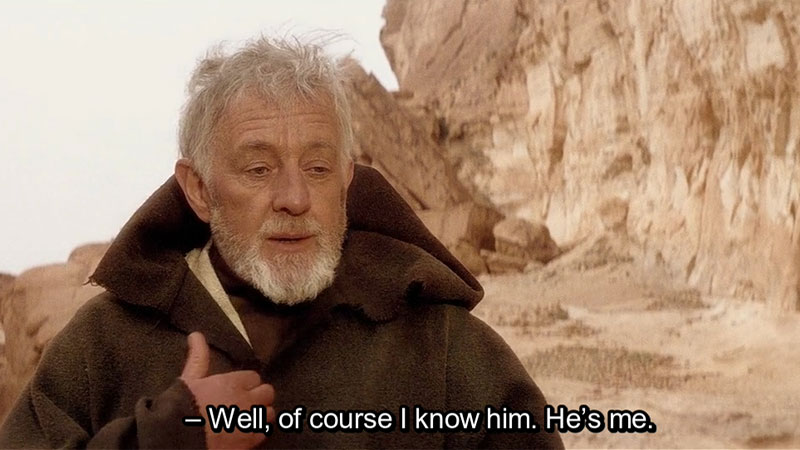

As it turns out, the developer that I know pretty well…

…who originally wrote this script opted to perform individual inserts to avoid this risk.

The problem with individual inserts is that they slow down significantly with large datasets because each insert requires:

Separate transactions

Frequent disk I/O

Repeated index updates

The Solution 🛠

To solve this, I decided to use batch inserts with a more refined error-handling approach. I was aware of the main types of errors that might occur, such as:

Missing consumer information

Duplicate entries

Invalid data

Duplicates were errors I could afford to ignore.

For missing consumer information and invalid data, I implemented a caching system. By retrieving all consumer IDs and storing them in a hash table cache, I could check each record's consumer information before insertion, removing an unnecessary transaction check.

For invalid data, I also included format validation to ensure only clean data was passed to the database, reducing the load of handling these errors.

To make batch inserts resilient to errors, I used INSERT ... ON CONFLICT DO NOTHING ... RETURNING ref. This way, the batch insert wouldn’t fail completely upon encountering an error, and I would still get the references of successfully inserted rows.

The Results 🚀

With these changes, I managed to reduce the processing time from 20 minutes for 4,000 records to an incredible 5-6 seconds. 🤯

Certainly! Here’s a refined version with minimal emojis, italics, and bold elements, staying true to your content:

We start by analyzing the problem thoroughly, identifying likely edge cases to understand performance challenges, and tailoring optimization strategies accordingly.

In my case, understanding that data from consumers could go missing and that there might be some invalid columns for certain rows meant I could perform checks without heavily involving the database.

So, next time, do your best to understand a problem correctly, and optimizing it will come naturally.✌️

Add a comment: