On truth and bullshit in images, part 2

Did you miss part 1? It’s here!

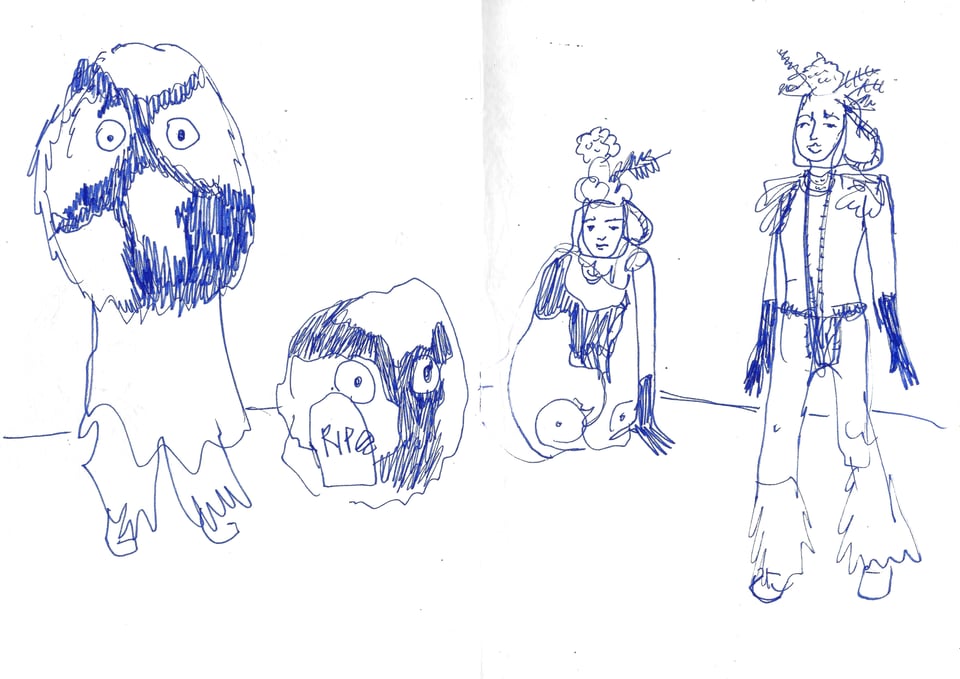

When I was still in high school, preparing for the design school admissions exam, I started keeping a sketchbook to draw people at school, on the subway, and then everywhere else, even at cabaret shows. I’ve only been told off for it once, which is impressive considering the number of sketchbooks I’ve filled.

People usually love seeing themselves in these images and sometimes even seem touched. I guess they appreciate the time and undivided attention I give them for the few minutes I draw, as well as the time (15 years, yikes) and energy I’ve spent becoming reliably skilled at it. While I draw, I see them, and I can show them what I see, often with much kinder eyes than the harsh accuracy of a photo.

I also do this at conferences (like Beyond Tellerrand), meetups, etc., so I can sit still for hours.

This can also go the other way: people can feel seen and hurt. I still remember how my drawing triggered a girl’s body image insecurities, even though we all modeled for each other, so she understood the process and our limited skills as teenagers. Sometimes, causing pain is the point, and drawings are considered more effective at it: satires are made with cartoons, not photos. “Do you know how much you gotta hate somebody to draw them?” (Josh Johnson).[1]

In theory, a generative model could produce all of this: pick a photo and transform it to look hand-drawn, and even add the bullet points of a talk. So far, I haven’t seen this reliably done, but the production of images isn’t unique to me or humans in general.

I also haven’t seen anybody ask for that.

What I can find are people unhappy with their generated reflections.

> Regardless of whether you think the AI modified photo is fine, it is not how I would have chosen to represent myself professionally… I did care, though, when I saw myself in a shirt I never wore, exposing more chest (or undergarments) than I ever would have chosen to do.

These images seem to be the equivalent of taking a selfie and adding a strong filter to overlay the values of the model’s creators, which is not the same as taking a great photo. It won’t be all false, but it’s also not true. It’s bullshit, like a fake smile.

> … these AI-generated images were beaming a secret message hidden in plain sight. A steganographic deception within the pixels, perfectly legible to your brain yet without the conscious awareness that it’s being conned.

I think generated images don’t make anyone feel seen because the results are just wrong enough to show that nobody was really looking. Instead, they show who the subject of the image should be according to the values of that generative model.[3]

If you’re still here, you might like the next posts.

I have talked, written, and drawn about how our surveillance-based, ad-funded digital media environment, AKA the attention economy, hinders our ability to experience the state of flow and to see, clarify, and strengthen our sense of self. I suspect all the generated content coming from large models will make it more difficult to truly see each other and, therefore, to strengthen our connections. Which, if we consider the incentives driving both, makes sense:

> With this in mind, the AI we’re talking about today needs to be seen as an extension of this surveillance business model–a way to expand the reach and profitability of the massive amounts of data and infrastructural monopolies that these large companies possess […] It’s incentivizing the expanded creation and collection of data about people, their communities, their habits, politics, locations, faiths, kinks, health conditions, financial health, family ties and ruptures… and so much more in service of training and informing these models. And let us not forget, once trained and deployed, these AI models create MORE data–forming another vector of mass surveillance that […] has power over us and creates narratives about us.

That’s it for now. And since you made it this far, here’s a treat against the cold, grey days.

Take care,

Julia

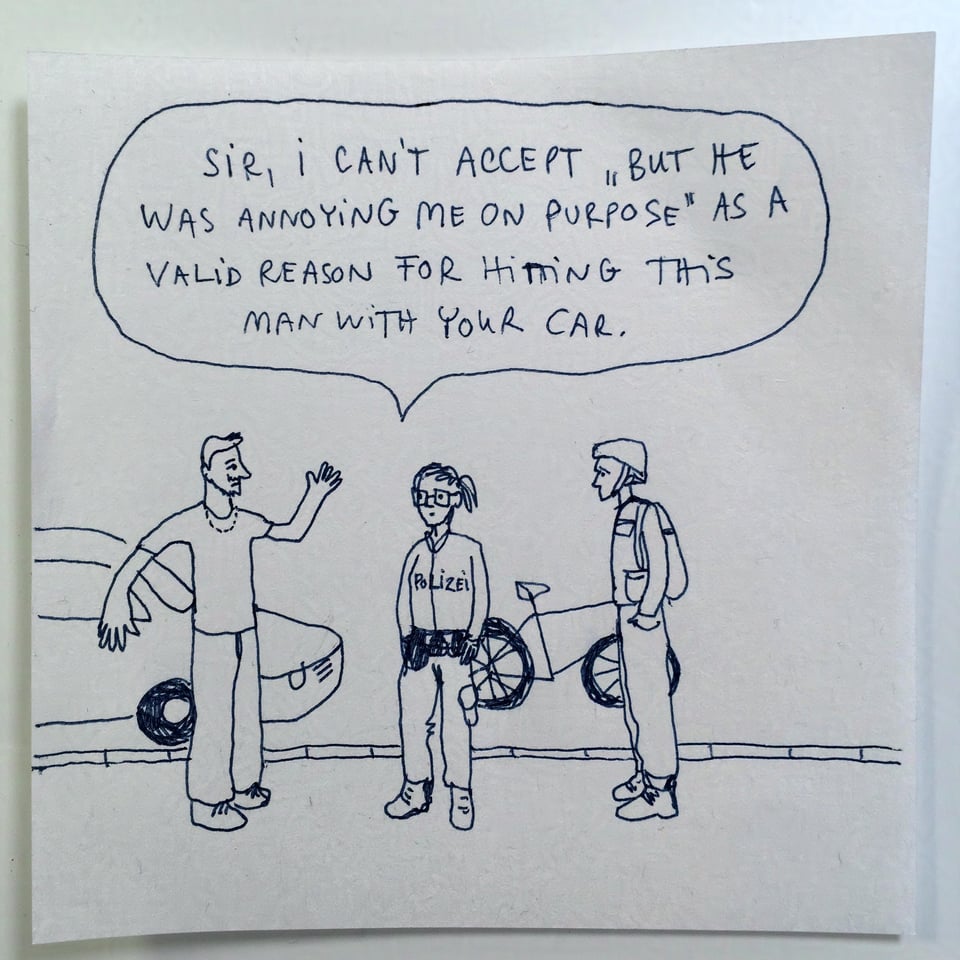

[1] Good comedy and humor, in general, seem immune to bullshit and feel truthful even when not factual. A topic for another time.

[2] I remember more sexualized examples from the early days of this AI hype cycle when everyone seemed to play with generating new profile pictures, but we don’t need to see those again.

[3] This fits into the larger trend of people moving from the ‘dark forest’ of the open web (as described by Maggie Appleton) to closed or self-controlled spaces so they can find each other.