Security before the internet

As promised, I'm writing a bit more about the history of internet (in)security. This week is part 1, covering the basics of early computer security and what that meant before interconnected networks. Part 2 will look at how design choices for Arpanet and later protocols shaped internet insecurity.

Thinking

Computer security started out as a locksmithing problem. Early commercial computers were very expensive, and the organizations that built, leased, or purchased them needed to protect that investment, so those computers were housed in specialized secure rooms. IBM engineer Walter Tuchman described security in the early days of mainframe computing as "locked desk drawers, locked doors on computer rooms, and loyal well-behaved employees, the latter probably being the weakest link." Computer security sometimes got caught up in college protests in the 1960s and 1970s, when military-sponsored machines were the targets of antiwar and anti-government protests.

Once universities debuted time-sharing networks, where small workstations were networked into a central mainframe, the question of access became more complicated. How could time-sharing computer administrators reproduce their physical access controls within a networked system? Time-sharing systems had one security advantage over our modern idea of networks: they were closed networks. Before the development of the inter-network protocols that underpin today's internet, a time-sharing network was an island, unconnected to similar networks at other universities or institutions. Each time-sharing network was centralized on one or more computers, and those centralized resources could be reached only through one of the terminals that made up the network.

Network security, in the time-sharing model, was thus nearly synonymous with access control. The main concern in the threat model on university campuses, after physically protecting the machines, was preserving computing time. When a computer was accessed exclusively by means of punch cards or other direct input into the computer itself, physical security could be used to manage access as well: only give trusted people keys to the computer room, or better yet have people hand over programs on punched cards to be run by an inner circle of operators. But if an inexperienced undergraduate could waltz up to a terminal and run a buggy program, hogging or possibly crashing the computer, computing resources would be taken away from more valuable research.

For universities whose computers were sponsored by defense contracts, computer managers might include an additional worry in their threat model: exposure of classified data or sabotage of research projects. Just as administrators were worried about riots on campus shutting down or attacking military-funded computer centers, so they might worry about a more sophisticated agitator, or a Soviet plant stealing secret research data.

The solutions to both the resource-allocation threat and the sensitive-data threat were largely the same: grant privileges to specific time-sharing users that dictated what those users could access in terms of data and computing time. This was mostly implemented within the operating systems of the central computers. If an unauthorized user requested off-limits data, the operating system would check the access rules and deny that request. Similarly, if a limited user tried to run a resource-intensive program, the operating system could delay or throttle the scheduling of the program to preserve prime computing time for more important users.
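For the programmers in the audience, here is a toy sketch of that idea in Python. It is not how any real time-sharing operating system was written. The names (`User`, `can_read`, `schedule`) and the numeric priority scheme are my own assumptions, chosen only to illustrate the two checks described above: denying requests for off-limits data, and pushing low-priority jobs to the back of the queue.

```python
from dataclasses import dataclass, field


@dataclass
class User:
    """A hypothetical time-sharing account with per-user privileges."""
    name: str
    readable: set = field(default_factory=set)  # data sets this user may read
    priority: int = 0                           # higher numbers run first


def can_read(user, dataset):
    """Access check: allow the request only if the data set is on the user's list."""
    return dataset in user.readable


def schedule(jobs):
    """Order (user, job_name) pairs so higher-priority users run first.

    sorted() is stable, so jobs from users with equal priority keep
    their original submission order.
    """
    ordered = sorted(jobs, key=lambda job: -job[0].priority)
    return [job_name for _, job_name in ordered]


# Illustrative accounts (assumed, not historical)
researcher = User("researcher", readable={"defense_results", "course_data"}, priority=10)
undergrad = User("undergrad", readable={"course_data"}, priority=1)

queue = [(undergrad, "buggy_loop"), (researcher, "simulation")]
run_order = schedule(queue)
```

In this sketch the undergraduate can still read `course_data`, but a request for `defense_results` is denied, and the researcher's `simulation` job is placed ahead of `buggy_loop` even though it was submitted second.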

But before the mid-1970s, network security generally did not include encryption for networked communications. Information at rest in databases, called "data banks" at the time, was first protected by controlling access, and later was the focus of the first wave of computer encryption products. Protecting data as it was sent between computers was not a priority, since data transfer was tedious, difficult, and in the early years required physical media like paper tapes. One notable exception was the Air Force-sponsored Multics time-sharing OS developed by Honeywell and MIT. As part of its military-level security, Multics included an unusual software encryption algorithm that was used to encrypt passwords and was available for time-sharing users to encrypt data on the network.

The development of inter-network protocols changed the landscape of networking starting in the early 1970s. Previous time-sharing networks, which were centralized by their nature as access points for computing resources, could each be managed by the host institution. But once separate institutions joined their networks together, security had to be decentralized.

Reading/Doing

This week I repaired a keypad lock in my house, one that a locksmith had told me was irreparably broken. Not only did I save us a hundred-plus bucks, but I also feel like an electronics genius. I'm trying to ease myself more into the spirit of DIY and maintenance, having just bought a house and with *waves hands* ... you know, inflation.

If you're looking for a long read on that topic, this recent piece on maintenance as a concept is excellent. It takes a much bigger view than just the problem of maintaining one's house, but it's easy to see how it's all connected. Maintenance is where the friction of society meets the technical, supposedly objective side of reality. It's where expertise is much more than just some diplomas on the wall--it's muscle memory and intuition and the motivation of fixing your own problems. It's where the particularity that I love so much as a historian meets the universality of aging and entropy.

So, maybe this week, think about trying to fix something before you throw it out?

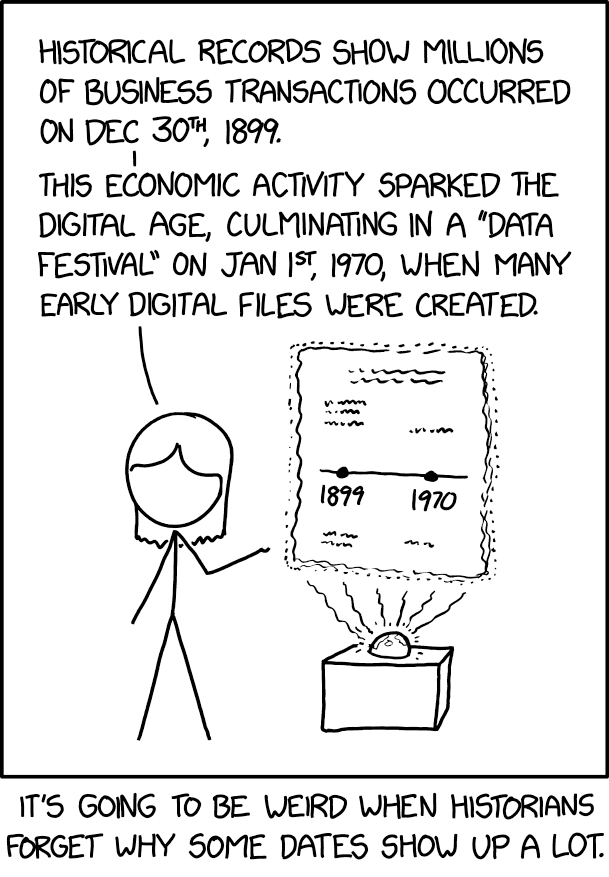

Tweet of the Week: actually just an xkcd of the week, which is exactly in my sweet spot of computing historian nerdery.