Some of my workshop teaching tricks

And some thoughts on the role of software in solving problems

We're just twelve days from the TLA+ Workshop! Right now the class is just a quarter-full, meaning it's going to be a lot of really focused individual attention. If you want to join in the fun, use the code C0MPUT3RTHINGS for 15% off.

I make most of my money off teaching workshops, and that means I put a lot of time into thinking about how to teach them better. Most of that goes to lesson planning, topic order, pedagogical improvements, etc. But I also regularly write assistive programs. See, there's two sources of complexity in workshops:

- Essential complexity: students not understanding the mind-bending core material.

- Accidental complexity: students getting bogged down on unfamiliar notation and syntax errors, getting distracted and falling behind, forgetting to download the class materials, having a last-second emergency that calls them away for half an hour, etc etc etc. This doesn't necessarily prevent students from learning, but it wastes time and frustrates everyone.

The workshop must handle the essential complexity. If it doesn't, it's a bad workshop. The workshop should handle accidental complexity. If it doesn't, it's not a great workshop. The problem is that the accidental complexity is much broader and less predictable. You can lesson plan your way through the essential complexity, but not the accidental complexity.

Of course, even if you can't anticipate accidental complexity, you can still plan for it. For example, if you add more slack to the schedule then you can take the time to resolve accidents. If you write down the accidental complexity you've run into before, then you can be prepared for it next time. And also you can write code! I'm a software engineer, why wouldn't I try solving my problems with code???

(these are all for online workshops, I have other tech that only matters for in-person classes, like progressive cheatsheets.)

Spec Checkpoints

I like teaching through guided practice: I pick several exemplar specs and we write them as a group. This approach has some problems:

- Students get distracted and fall behind, then have to ask a bunch of questions to catch up to where we are.

- Students make syntax mistakes, which holds up the class while I find the place they wrote /in instead of \in.

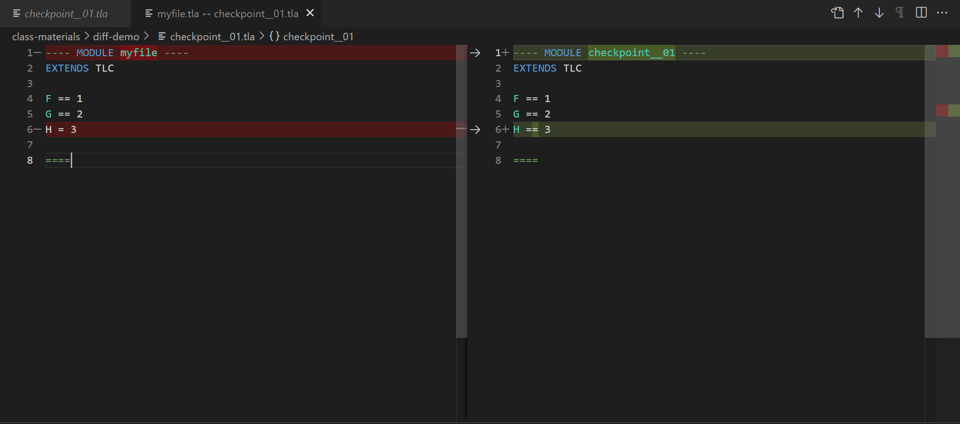

Solution: provide a list of checkpoints for each spec. Then, as long as I strictly follow the checkpoints, students who fall behind can catch up by copying over the latest checkpoint. More importantly, if a spec doesn't compile, the student can diff their file with the appropriate checkpoint to see what they have different.

In workshops where I've had this, whenever a student says "this isn't compiling" I ask "what's different from the checkpoint?" Usually that solves it. If that doesn't, I ask them to send over their raw file, then I show the class where it differs from the checkpoint. Students quickly get the point that the diff isn't a gimmick, it's a valuable class tool.
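As a rough illustration of that diffing step, here is a minimal sketch using Python's standard difflib. The function name and the two tiny specs are made up for the example; any diff tool does the same job.

```python
import difflib


def diff_against_checkpoint(student_text: str, checkpoint_text: str) -> list[str]:
    """Return unified-diff lines showing how the student's spec differs from the checkpoint."""
    return list(
        difflib.unified_diff(
            checkpoint_text.splitlines(),
            student_text.splitlines(),
            fromfile="checkpoint",
            tofile="student",
            lineterm="",
        )
    )


# Hypothetical two-line specs: the student typed /in where the checkpoint has \in.
checkpoint = "\n".join([r"Init == x \in 1..3", "Next == x' = x + 1"])
student = "\n".join([r"Init == x /in 1..3", "Next == x' = x + 1"])

for line in diff_against_checkpoint(student, checkpoint):
    print(line)
```

The lines starting with `-` come from the checkpoint and the lines starting with `+` come from the student's file, so the /in typo shows up as a single changed pair.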

To generate the checkpoints I use a metafile. I write one XML file and compile it into a full sequence of checkpoints. I have another script to check that all of the checkpoints work as expected: each one either gets the right results or produces the error I want to showcase.
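The actual metafile format isn't described here, so the following is only a sketch of the idea under an invented format: each `<line>` element carries a hypothetical `from` attribute (the first checkpoint it appears in) and an optional `to` attribute (the last checkpoint it appears in). Compiling means emitting, for each checkpoint number, the lines that are live at that point.

```python
import xml.etree.ElementTree as ET

# Hypothetical metafile format, invented for this sketch.
METAFILE = """\
<spec>
  <line from="1">VARIABLE x</line>
  <line from="2">Init == x = 0</line>
  <line from="3" to="3">Next == x' = x</line>
  <line from="4">Next == x' = x + 1</line>
</spec>
"""


def compile_checkpoints(xml_text: str) -> list[str]:
    """Expand one metafile into the full text of every checkpoint, in order."""
    root = ET.fromstring(xml_text)
    lines = []
    for el in root.iter("line"):
        first = int(el.get("from"))
        last = int(el.get("to")) if el.get("to") else None  # None = stays until the end
        lines.append((first, last, el.text or ""))

    # The number of checkpoints is the largest checkpoint number mentioned anywhere.
    total = max(max(first, last or first) for first, last, _ in lines)

    checkpoints = []
    for n in range(1, total + 1):
        body = [
            text
            for first, last, text in lines
            if first <= n and (last is None or n <= last)
        ]
        checkpoints.append("\n".join(body))
    return checkpoints


for number, text in enumerate(compile_checkpoints(METAFILE), start=1):
    print(f"--- checkpoint {number} ---")
    print(text)
```

In this example the placeholder `Next` from checkpoint 3 is replaced by the real one in checkpoint 4, which is the kind of step-by-step refinement a single source file makes easy to keep consistent.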

Checkpoint viewer

In one of Lorin Hochstein's talks he said something like "when a system is big enough, all the errors will come from the health checks". Similarly, adding tools that reduce accidental complexity will generate their own accidental complexity. When I started using the checkpoints, I ran into two new problems:

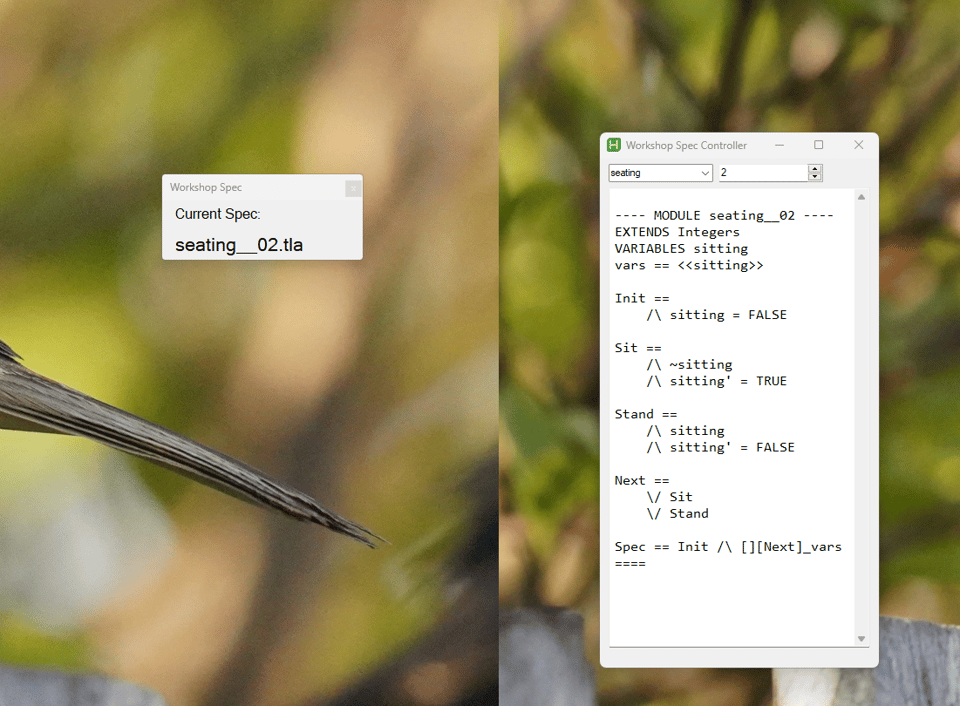

- How do students know which checkpoint we're on? I can say "we're now going to checkpoint 6", but who's going to remember that?

- If I deviate in any way from the checkpoint, diffing will raise false positives and students can't use them to find their own syntax errors.

So I always need to show students which checkpoint we're on, and I always need to know exactly what to write. To solve this I wrote a checkpoint manager. On my shared screen, it shows the name of the current checkpoint, and on my private screen, it shows the full code.

The two are reading from the same variable and never go out of sync. This is all written in AutoHotKey; I'll be sharing the code in a blogpost later this week.
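This is not the AutoHotKey code, just a small Python sketch of the "one variable, two views" idea. The class and method names are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class CheckpointManager:
    """Holds every checkpoint plus one index that both views read from."""

    checkpoints: list[tuple[str, str]]  # (name, full spec text); hypothetical layout
    index: int = 0  # the single shared variable

    def advance(self) -> None:
        """Move to the next checkpoint, stopping at the last one."""
        self.index = min(self.index + 1, len(self.checkpoints) - 1)

    def shared_view(self) -> str:
        """What the students see: only the checkpoint's name."""
        return self.checkpoints[self.index][0]

    def private_view(self) -> str:
        """What the instructor sees: the full code to type."""
        return self.checkpoints[self.index][1]


manager = CheckpointManager(
    [("counter_1", "VARIABLE x"), ("counter_2", "VARIABLE x\nInit == x = 0")]
)
manager.advance()
print(manager.shared_view())
print(manager.private_view())
```

Because both views are computed from `index` rather than stored separately, there is no second copy that could drift out of sync.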

Notes Uploader

Live coding is good for showing code and developing specs, but it's not that great at presenting information like "TLC uses a breadth-first search algorithm" or "use CHOOSE for these properties but not these ones." Students need a permanent artifact, too, that they can look back on if they need to remember something. The usual go-to here is a slide deck. But slide decks are firmly in the "necessary evil" category, because they have some serious problems.[1]

- They run in a separate program, and switching programs is disruptive.

- They're very linear: you can't jump around or easily go back to old stuff if students want to see that.

- They're hard for students to search.

- I can't update them on the fly in response to student questions or needs.

- They take so, so, so much time to make that could be better spent on literally anything else.

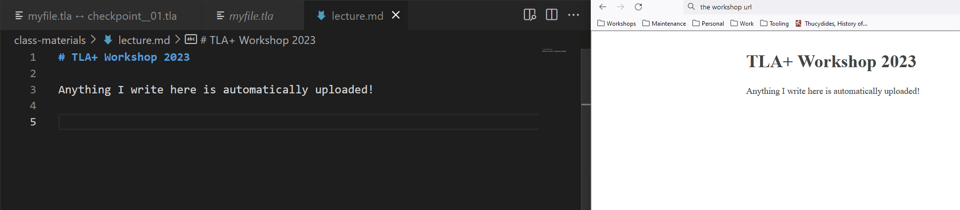

My solution was to lean into livecoding even more. I have a file Lecture.md, where I write all the information I want the students to know. If someone has an interesting question, I'll type out the question and the answer as I explain it. All this time, I have a notes_uploader.py watching the file. After every save it compiles it to html and uploads it as a workshop webpage.
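The watch-compile-upload loop could look roughly like the sketch below. It is not the real notes_uploader.py: it polls the file's modification time, uses a placeholder renderer (escaped text in a `<pre>` tag, where a real Markdown library would go), and takes the upload step as a caller-supplied function, since the hosting setup isn't described. All names are assumptions.

```python
import html
import time
from pathlib import Path
from typing import Callable


def render(markdown_text: str) -> str:
    """Placeholder renderer: escape the text and wrap it in <pre>.

    A real setup would call a Markdown-to-HTML library here instead.
    """
    return "<pre>" + html.escape(markdown_text) + "</pre>"


def publish_if_changed(
    source: Path, last_mtime: float, upload: Callable[[str], None]
) -> float:
    """Render and upload `source` if it was saved after `last_mtime`.

    Returns the newest modification time seen, to pass into the next call.
    """
    mtime = source.stat().st_mtime
    if mtime > last_mtime:
        upload(render(source.read_text(encoding="utf-8")))
    return max(mtime, last_mtime)


def watch(
    source: Path, upload: Callable[[str], None], poll_seconds: float = 1.0
) -> None:
    """Poll `source` forever, publishing after every save."""
    last = 0.0
    while True:
        last = publish_if_changed(source, last, upload)
        time.sleep(poll_seconds)


# Example call (runs until interrupted); `print` stands in for a real upload function:
# watch(Path("Lecture.md"), upload=print)
```

Polling is the simplest thing that works for a single file; a filesystem-event library would react faster but adds a dependency.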

I've only used this in one workshop so far, but people really liked it. One unexpected benefit was I could easily drop code snippets in, so people had a log of all the impromptu stuff I wrote (outside of checkpoints). It did slow me down a little, though.

Software helps with everything

Two main takeaways.

First, I care a whole lot about giving good workshops! You should totally sign up, it'll be great.

Second, there are lots of situations where it doesn't look like software can help, but it actually can. Judicious use of well-applied software cuts out a lot of the accidental complexity from the workshops, which leaves me more time to focus on addressing the essential complexity.

I sometimes see debates about whether "software can solve the world's problems", you know stuff like this:

I think the meme is coming from a good place: it's reacting to venture capital messianism, the belief that we could solve world hunger if we just had the right Hackathon. I also see the opposite extreme, that tech is a toy for rich people and programmers can only Make A Difference by giving their money to other people who do the real work. I've volunteered with a few different places that did real work and they were generally real happy to have a programmer on board. There's just so much accidental complexity in every field, and even if I could only knock out one or two sources of it, that made doing the real work so much less painful for them.

(TBF most often the accidental complexity is addressed by non-programming IT work, like showing people how to use Google Forms, figuring out why their software is crashing, and stuff.)

This is becoming a theme of the newsletter: there are many domains where programming can be very useful, even if it doesn't look like it would. You just need to be creative. Also it's important to make the programming support the domain. My workshop tech is only useful because I've already put a lot of work into lesson planning. Software can make something good great, but it can't make something good in the first place.

[1] Incidentally, if anybody has any recommendations for slideshow software that is highly programmable and has animations, I'm all ears. I've written presentations in PowerPoint, Keynote, Slides, and Beamer and they all fail one of those two basic requirements. I suspect I'll need to bite the bullet and just learn CSS animations.
