Requirements change until they don't

Requirements, phase changes, and formal methods.

Recently I got a question on formal methods1: how does it help to mathematically model systems when the system requirements are constantly changing? It doesn't make sense to spend a lot of time proving a design works, and then deliver the product and find out it's not at all what the client needs. As the saying goes, the hard part is "building the right thing", not "building the thing right".

One possible response: "why write tests"? You shouldn't write tests, especially lots of unit tests ahead of time, if you might just throw them all away when the requirements change.

This is a bad response because we all know the difference between writing tests and formal methods: testing is easy and FM is hard. Testing requires low cost for moderate correctness, FM requires high(ish) cost for high correctness. And when requirements are constantly changing, "high(ish) cost" isn't affordable and "high correctness" isn't worthwhile, because a kinda-okay solution that solves a customer's problem is infinitely better than a solid solution that doesn't.

But eventually you get something that solves the problem, and what then?

Most of us don't work for Google, we can't axe features and products on a whim. If the client is happy with your solution, you are expected to support it. It should work when your customers run into new edge cases, or migrate all their computers to the next OS version, or expand into a market with shoddy internet. It should work when 10x as many customers are using 10x as many features. It should work when you add new features that come into conflict.

And just as importantly, it should never stop solving their problem. Canonical example: your feature involves processing requested tasks synchronously. At scale, this doesn't work, so to improve latency you make it asynchronous. Now it's eventually consistent, but your customers were depending on it being always consistent. Now it no longer does what they need, and has stopped solving their problems.

Every successful requirement met spawns a new requirement: "keep this working". That requirement is permanent, or close enough to decide our long-term strategy. It takes active investment to keep a feature behaving the same as the world around it changes.

(Is this all a pretentious of way of saying "software maintenance is hard?" Maybe!)

Phase changes

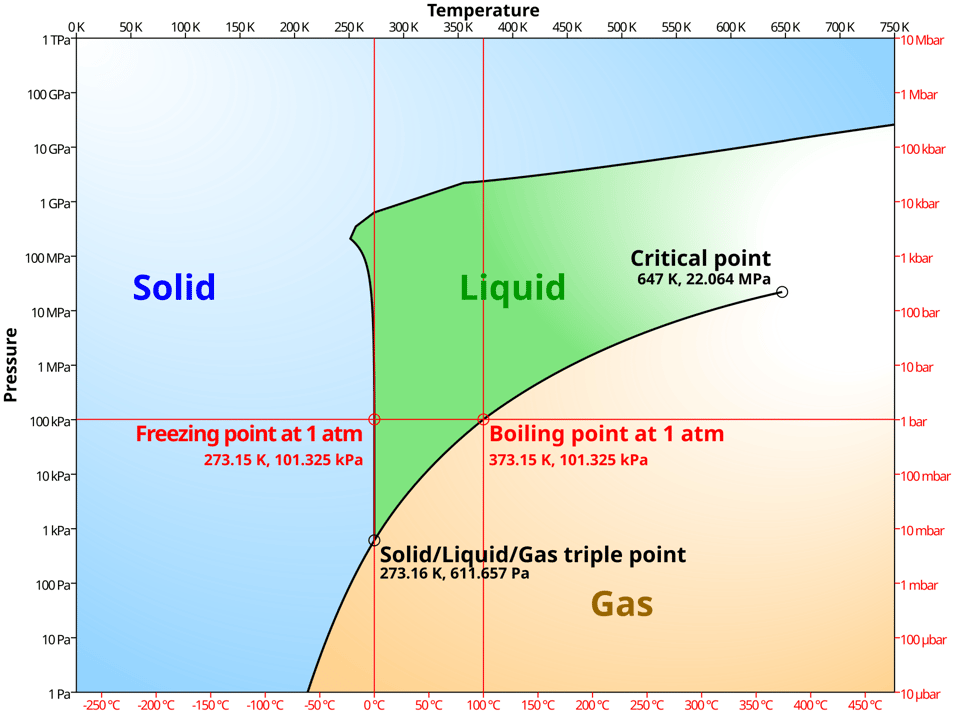

In physics there's a concept of a phase transition. To raise the temperature of a gram of liquid water by 1° C, you have to add 4.184 joules of energy.2 This continues until you raise it to 100°C, then it stops. After you've added two thousand joules to that gram, it suddenly turns into steam. The energy of the system changes continuously but the form, or phase, changes discretely.

Software isn't physics but the idea works as a metaphor. A certain architecture handles a certain level of load, and past that you need a new architecture. Or a bunch of similar features are independently hardcoded until the system becomes too messy to understand, you remodel the internals into something unified and extendable. etc etc etc. It's doesn't have to be totally discrete phase transition, but there's definitely a "before" and "after" in the system form.

Phase changes tend to lead to more intricacy/complexity in the system, meaning it's likely that a phase change will introduce new bugs into existing behaviors. Take the synchronous vs asynchronous case. A very simple toy model of synchronous updates would be Set(key, val), which updates data[key] to val.3 A model of asynchronous updates would be AsyncSet(key, val, priority) adds a (key, val, priority, server_time()) tuple to a tasks set, and then another process asynchronously pulls a tuple (ordered by highest priority, then earliest time) and calls Set(key, val). Here are some properties the client may need preserved as a requirement:

- If

AsyncSet(key, val, _, _)is called, then eventuallydb[key] = val(possibly violated if higher-priority tasks keep coming in) - If someone calls

AsyncSet(key1, val1, low)and thenAsyncSet(key2, val2, low), they should see the first update and then the second (linearizability, possibly violated if the requests go to different servers with different clock times) - If someone calls

AsyncSet(key, val, _)and immediately readsdb[key]they should getval(obviously violated, though the client may accept a slightly weaker property)

If the new system doesn't satisfy an existing customer requirement, it's prudent to fix the bug before releasing the new system. The customer doesn't notice or care that your system underwent a phase change. They'll just see that one day your product solves their problems, and the next day it suddenly doesn't.

This is one of the most common applications of formal methods. Both of those systems, and every one of those properties, is formally specifiable in a specification language. We can then automatically check that the new system satisfies the existing properties, and from there do things like automatically generate test suites. This does take a lot of work, so if your requirements are constantly changing, FM may not be worth the investment. But eventually requirements stop changing, and then you're stuck with them forever. That's where models shine.

-

As always, I'm using formal methods to mean the subdiscipline of formal specification of designs, leaving out the formal verification of code. Mostly because "formal specification" is really awkward to say. ↩

-

Also called a "calorie". The US "dietary Calorie" is actually a kilocalorie. ↩

-

This is all directly translatable to a TLA+ specification, I'm just describing it in English to avoid paying the syntax tax ↩

Add a comment: