I am disappointed by dynamic typing

Dynamic types have the potential to be more than "no static types", but that hasn't happened.

Here's a weird thing about me: I'm pro-dynamic types. This is weird because I'm also pro-formal methods, and in fact teach formal methods as a career, which seems completely antithetical. So on one hand I teach people how to do static analysis, and on the other I use languages that make static analysis impossible.

My fondness for dynamic types is ultimately more social than technical:

- Python helped me escape academia and my first two jobs were in Ruby.

- I encounter a lot more smug static weenies than smug dynamic weenies, so I defend dynamic typing out of spite. There have been a few cases where I was surrounded by SDWs and I immediately flipped around to being pro-static.

- The industry is moving towards static typing and I like being weird and contrarian.

But there's also a technical reason: I think dynamic typing is itself really neat and potentially opens up a lot of really powerful tooling. It's just … I'm not seeing that tooling actually exist, which makes me question if it's possible in the first place.

Dynamic isn't the opposite of Static

There's two ways to think of dynamic types. The broader discussion around static vs dynamic is actually about the pros and cons of static typing: how much it helps and how much it gets in the way. That's why you get arguments like "I don't need types if I have tests" and "I don't make type errors anyway". There's also stronger arguments about things that are hard to type, like generic least-squares fitting.

I think this approach is ultimately bankrupt. The tooling around type systems gets better every year, and so over time the ratio of benefits to drawbacks grows more lopsided in favor of static typing. If "static types get in the way" was the only argument for dynamic typing, I wouldn't be so keen to defend it.

But there's another way of thinking about dynamic types: a dynamically typed language is one where types are runtime values and manipulable like all other values. It's a short hop from there to thinking of the whole runtime environment in the same way, where everything is a runtime construct.

And that's really, really interesting to me.
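To make "types are runtime values" concrete, here is a minimal Python sketch. The names `make_record`, `Point`, and `describe` are invented for illustration and are not from any library. It builds a class while the program is running and then treats that class like any other value.

```python
def make_record(name, fields):
    """Build a new class at runtime from a list of field names (hypothetical helper)."""
    def __init__(self, *values):
        if len(values) != len(fields):
            raise TypeError(f"{name} expects {len(fields)} values")
        for field, value in zip(fields, values):
            setattr(self, field, value)

    # type(name, bases, namespace) creates a class, just like a class statement.
    return type(name, (object,), {"__init__": __init__, "fields": tuple(fields)})

Point = make_record("Point", ["x", "y"])
p = Point(1, 2)

# A type can be a dictionary key or a function argument like any other value.
describe = {int: "a number", str: "some text", Point: "a point"}
print(describe[type(p)])     # a point
print(type(Point).__name__)  # type
print(Point.fields)          # ('x', 'y')
```

Nothing here needs a compiler pass or a code generator; the class is just another object the running program made.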

Higher-Order Programs

Here's one experiment I whipped up in Python:

    from collections import Counter

    class Replacer():
        def __init__(self, func):
            self._func = func
            self.used_args = Counter()

        def __call__(self, *args):
            self.used_args[(args)] += 1
            return self._func(*args)

    def double(x):
        return 2*x

    def g(x):
        return double(double(x))+double(x)

    double = Replacer(double)
    print(g(2)) # 12
    print(double.used_args) # Counter({(2,): 2, (4,): 1})

We can replace a function with an object that can be called for the exact same behavior, but also gives us an information sidechannel. And this is an expression! We can leave double as it is in the main program, but augment it with additional information in the REPL or by setting an environment variable.
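A minimal sketch of the environment-variable version might look like the following. `TRACE_CALLS`, `maybe_trace`, and `CallCounter` are made-up names for this example, not an existing convention or library.

```python
import os
from collections import Counter

class CallCounter:
    """Callable wrapper that forwards calls and counts the arguments it saw."""
    def __init__(self, func):
        self._func = func
        self.used_args = Counter()

    def __call__(self, *args):
        self.used_args[args] += 1
        return self._func(*args)

def maybe_trace(func):
    # TRACE_CALLS is a hypothetical environment variable chosen for this sketch.
    if os.environ.get("TRACE_CALLS") == "1":
        return CallCounter(func)
    return func

def double(x):
    return 2 * x

# The main program is unchanged apart from this one expression.
double = maybe_trace(double)

print(double(4))  # 8 whether or not tracing is on
print(getattr(double, "used_args", "tracing is off"))
```

Running the program normally leaves `double` untouched; running it with `TRACE_CALLS=1` swaps in the counting object without editing any call site.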

I can imagine all sorts of uses for this kind of trick. Here's some I'd like to see:

- Run a test suite. If a test fails with an uncaught exception, rerun that test with the raising function augmented to breakpoint three lines before the raised exception, while also providing the current stack trace to the user with called parameters.

- Track the arguments for every call to an expensive function. If it's called with the same parameters ten times, alert someone that they should be calling it once and storing the result.

- Profile the stacks that at some point call function f.

- Add an output assertion to an optimized function in dev/testing, checking that on all invocations it matches the result of an unoptimized function.

- Collect production invocations and generate snapshot unit tests from those.

- When f is called with x, return f(x) but also log what the results would have been for f(x+1) and f(x-1).
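As one illustration, the "output assertion against an unoptimized function" idea from the list above can be sketched in a few lines. This is a sketch under assumptions, not a general tool: `checked_against`, `slow_sum_to`, and `fast_sum_to` are hypothetical names, and it assumes results can be compared with `==`.

```python
import functools

def checked_against(reference):
    """Decorator factory: assert the wrapped function agrees with `reference` on every call."""
    def decorate(optimized):
        @functools.wraps(optimized)
        def wrapper(*args, **kwargs):
            result = optimized(*args, **kwargs)
            expected = reference(*args, **kwargs)
            assert result == expected, (
                f"{optimized.__name__}{args} returned {result!r}, "
                f"reference returned {expected!r}"
            )
            return result
        return wrapper
    return decorate

def slow_sum_to(n):
    # Unoptimized reference implementation.
    return sum(range(n + 1))

def fast_sum_to(n):
    # Closed-form version we want to check.
    return n * (n + 1) // 2

# Augment at runtime, for example only in dev/testing.
fast_sum_to = checked_against(slow_sum_to)(fast_sum_to)

print(fast_sum_to(100))  # 5050
```

Because the check is attached by rebinding the name, production code can skip that one line and pay nothing for it.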

I don't know if any of these are impossible without runtime metaprogramming, but they all seem like they'd be more difficult. At the very least you couldn't do them from the REPL. The overall picture is of going one step further than homoiconicity: instead of code as data we have programs as data. In dynamic languages you can write programs that take programs and output other programs. I guess I'd call them "higher-order programs"? I looked a bit and that's usually conflated with "programs that have higher-order functions". "Hyperprograms"? Taking bets for the name now.
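Here is a rough sketch of what "a program that takes a program and outputs another program" could look like in Python. It loads source text into a live module object and then replaces every function in it with a call-counting wrapper. `load_program`, `count_calls`, and `SOURCE` are hypothetical names for this example.

```python
import functools
import inspect
import types
from collections import Counter

SOURCE = """
def double(x):
    return 2 * x

def g(x):
    return double(double(x)) + double(x)
"""

def load_program(source, name="demo_program"):
    """Turn source text into a live module object (hypothetical helper)."""
    module = types.ModuleType(name)
    exec(source, module.__dict__)
    return module

def count_calls(module):
    """Replace every function in `module` with a wrapper that counts its calls."""
    calls = Counter()
    for name, func in list(vars(module).items()):
        if inspect.isfunction(func):
            # Bind func and name as defaults so each wrapper keeps its own pair.
            def wrapper(*args, _func=func, _name=name, **kwargs):
                calls[_name] += 1
                return _func(*args, **kwargs)
            setattr(module, name, functools.wraps(func)(wrapper))
    return calls

program = load_program(SOURCE)
calls = count_calls(program)
print(program.g(2))  # 12
print(dict(calls))   # {'g': 1, 'double': 3}
```

This works because `g` looks up `double` in the module's namespace at call time, so swapping the entry there changes what every caller sees.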

The crushing disappointment

I don't think there's very much work done in this kind of dynamic analysis. If there was then I'd see a lot more dynamic typing advocates talking about it or showcasing how libraries take advantage of it. I don't think that's happening, though? Because the dynlang features that make this possible are usually pitched in other ways:

- Ruby metaprogramming and lisp macros are sold as ways to make programming more convenient, like by making internal DSLs

- Rails over time moved to using less and less metaprogramming, as did rspec

- REPL-driven programming is all about how you can modify and hot-reload code in the repl, which I guess is similar to what I want, but people raving about it never talk about "augmenting" programs in the way I'm looking for.

- Python is moving away from dynamic stuff. The most popular test runner is pytest, which aggressively keeps you from using it programmatically. I've never been able to figure out how to run tests from the REPL or as part of a higher-order program or something.

I guess the closest to the hyperprogramming model is Pharo? The dynamic code inspector is a big deal, plus there's this fun talk.

This seems like the lowest tier of hyperprogramming, though. Maybe I'm being too critical, and it's an important step towards showcasing the value of dynamic languages.

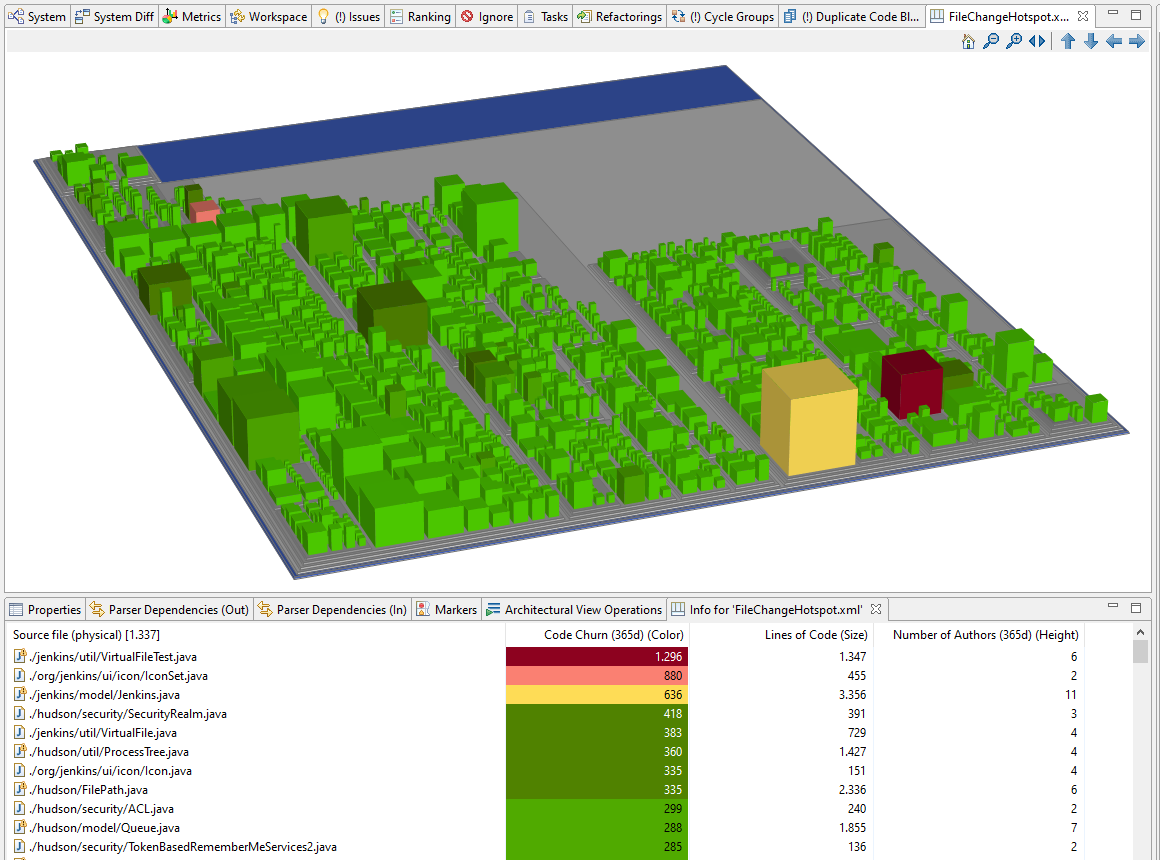

Apropos of nothing, here's SonarGraph inspecting some Java code:

So, like, does dynamic typing actually get you anything? The final straw for me was this paper on inferring tests from code:

The current state-of-the-art for test amplification heavily relies on program analysis techniques which benefit a lot from explicit type declarations present in statically typed languages. Not surprisingly, previous research has been confined to statically typed programming languages including Java, C, C++, C#, Eiffel (Danglot et al. 2019a). In dynamically typed languages, performing static analysis is difficult since source code does not embed type annotation when defining variable. As a consequence test amplification has yet to find its way to dynamically-typed programming languages including Smalltalk, Python, Ruby, Javascript, etc.

I'd expect test amplification to be easier for dynamic languages! You already can do all sorts of dynamic analysis! But toolmakers find it easier to amplify code with type annotations. That's a little soul-crushing.
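As a small example of the kind of dynamic analysis meant here, the sketch below records the concrete types a function actually sees at runtime, which is exactly the information the paper says is missing from the source. It is only an illustration and makes no claim about how any test amplification tool works. `record_types` and `seen_types` are made-up names.

```python
import functools
from collections import Counter

def record_types(func):
    """Wrap `func` and tally the concrete argument and return types seen on each call."""
    seen = Counter()

    @functools.wraps(func)
    def wrapper(*args):
        result = func(*args)
        signature = tuple(type(a).__name__ for a in args)
        seen[(signature, type(result).__name__)] += 1
        return result

    wrapper.seen_types = seen
    return wrapper

@record_types
def first(items):
    return items[0]

first([1, 2, 3])
first("abc")
first((2.5, 1))

for (arg_types, return_type), count in first.seen_types.items():
    print(arg_types, "->", return_type, f"({count}x)")
# ('list',) -> int (1x)
# ('str',) -> str (1x)
# ('tuple',) -> float (1x)
```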

Why it doesn't happen

I suspect the problem is that hyperprogramming is really, really hard to generalize.

My Replacer class, it turns out, has a pretty significant bug right here:

    >>> def first(l):
    ...     return l[0]
    ...
    >>> first = Replacer(first)
    >>> first([1,2,3])
    Traceback (most recent call last):
      File "<stdin>", line 1, in <module>
        self.used_args[(args)] += 1
    TypeError: unhashable type: 'list'

I could probably write a version that didn't have this problem, but it still points at how fragile this kind of dynamic work can be. It would take a lot of work to make this into a general-use library and I'm not up for doing it. It's easier to use for my own purposes, where the scope of use is smaller and I don't have to worry about other people's edge cases. But then I need to be really good at the skill of dynamic analysis to get anything out of it.
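For illustration, one possible patch is to fall back to `repr()` when the arguments can't be hashed. This is a sketch, not a fix for the general problem: `SaferReplacer` is a hypothetical name, and it assumes `repr()` is a good enough identity for counting.

```python
from collections import Counter

class SaferReplacer:
    """Like Replacer, but falls back to repr() for unhashable arguments."""
    def __init__(self, func):
        self._func = func
        self.used_args = Counter()

    def __call__(self, *args):
        try:
            self.used_args[args] += 1
        except TypeError:
            # Unhashable arguments (lists, dicts, ...): key on their repr instead.
            # Assumption: repr() distinguishes the values we care about.
            self.used_args[repr(args)] += 1
        return self._func(*args)

def first(l):
    return l[0]

first = SaferReplacer(first)
print(first([1, 2, 3]))  # 1
print(first("abc"))      # a
print(first.used_args)   # Counter({'([1, 2, 3],)': 1, ('abc',): 1})
```

Even this version still ignores keyword arguments, mixes tuple keys with string keys, and miscounts objects whose `repr()` is not unique, which is the fragility in miniature.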

There's also a lot of type constructs that take a lot of skill to use, too. The difference is there's also constructs that are really easy to use, like option and product types. So the learning curve is a lot gentler.

Anyway that's where I'm left. Just disappointed with how little's actually done with dynamic typing, and questioning if the cool stuff I want is even viable.

(I'm prolly gonna get a bunch of responses like "you didn't mention Racket/Raku/Forth/BASIC/Erlang!" You're welcome to make the case for them, but please don't rehash the usual "static types are limiting" argument. I've been talking about being disappointed in the lack of runtime program manipulation, whatever you want to call it.

Also you should make it a public blog post instead of a private email. Contribute to the dialog!)

Update for the Internets

This was sent as part of an email newsletter; you can subscribe here. Common topics are software history, formal methods, the theory of software engineering, and silly research dives. Updates are usually 1x a week. I also have a website where I put my polished writing (the newsletter is more for off-the-cuff stuff).

-

re: pytest REPL

I think the solution here is to write REPL-friendly functions and keep the pytest part to only data assertions.

Of course, you can launch the ipython debugger from pytest like this:

    pytest --pdbcls=IPython.terminal.debugger:TerminalPdb --pdb

(and then type interact to go into multi-line REPL mode)

If you have many assertions you can save the expected output to external files; pytest-regressions provides a nice interface for this:

https://github.com/ESSS/pytest-regressions

    @pytest.fixture
    def assert_unchanged(data_regression, request):
        def assert_unchanged(captured, basename=None):
            data_regression.check(captured, basename=basename if basename else request.node.name.replace("-", " "))

        return assert_unchanged

And here is an example of its use:

    @pytest.mark.parametrize("p", [["1970-01-01 00:00:01"], ["--from-unix", "1"]])
    @pytest.mark.parametrize("fz", [["-fz", "America/New_York"], ["-fz", "America/Chicago"]])
    @pytest.mark.parametrize("tz", [["-tz", "America/New_York"], ["-tz", "America/Chicago"]])
    @pytest.mark.parametrize("s", [[], ["-d"], ["-t"]])
    @pytest.mark.parametrize("f", [[], ["-TZ"]])
    def test_lb_timestamps_tz(assert_unchanged, p, fz, tz, s, f, capsys):
        lb(["timestamps", *p, *fz, *tz, *s, *f])
        captured = capsys.readouterr().out.strip()
        assert_unchanged(captured)

-

👋 Hi Hillel, long time no talk!

I was remembering this post of yours while I was writing Zig. Ironically, I see Zig as the most dynamic language I've used in recent years (and I've used a lot: python, js/ts, go, java, etc.). And you are right: most languages are becoming more static, or at least static within what the typesystem can express.

There's a lot of literature on zig comptime out there (and a lot of fanboys and fangirls), but I think the biggest analogy I can draw is that Zig is reverse-typescript. In Typescript, types aren't "real" and they get erased away, so you're left with the same js code, which just conforms to more restrictions (that help you write more structured code). In Zig, the popular way of doing metaprogramming is via comptime, and most of comptime is duck typed, akin to C++ templates; for example, while constructing generic structures, there's none of the fancy covariance / contravariance that many typed languages have.

Zig still gets compiled, and that's why it's "reverse-typescript". The code you write is heavily duck-typed, but the generated code must compile in a machine-efficient manner, and compilation must pass after the code generation. This allows you to do some crazy stuff, and it is really disorienting yet thrilling after N years of "mostly-static" programming to jump back to this.

-

The bug in Replacer illustrates Python's inferiority, not a weakness in dynamic languages. Python has its virtues, but is a bundle of weirdness and special cases, quite complex as a whole, and that makes this kind of thing fragile.

In Lua, it would work correctly. Even nil would be handled just fine, because it would become the empty table: it cannot be directly used to index a table, but literally anything else can be. Your example closely resembles a standard tactic to provide a function with memoization, although this is hard to make fully generic since Lua does not constrain the number of arguments, nor that of returned values.

This kind of 'hyperprogramming' (good name) is commonly seen in Smalltalk, Scheme, and the more rarified Lua circles, but seldom elsewhere. What those three have in common is a minimal and principled data model: objects, cons cells, and tables, respectively.

Python's is neither minimal nor principled: taking advantage of the dynamicism is difficult, because there are tonnes of types floating around and getting in each other's way. We just don't get to know what they are until it's too late.

-

My university teacher actually built a whole JS server framework based on the dynamicity of JS, creating a quite unique module syntax.

https://github.com/metarhia/impress
