Five Kinds of Nondeterminism

Or four kinds, or six kinds, I'm not picky about how you count them

No newsletter next week, I'm teaching a TLA+ workshop.

Speaking of which: I spend a lot of time thinking about formal methods (and TLA+ specifically) because it's where the source of almost all my revenue. But I don't share most of the details because 90% of my readers don't use FM and never will. I think it's more interesting to talk about ideas from FM that would be useful to people outside that field. For example, the idea of "property strength" translates to the idea that some tests are stronger than others.

Another possible export is how FM approaches nondeterminism. A nondeterministic algorithm is one that, from the same starting conditions, has multiple possible outputs. This is nondeterministic:

# Pseudocode

def f() {

return rand()+1;

}

When specifying systems, I may not encounter nondeterminism more often than in real systems, but I am definitely more aware of its presence. Modeling nondeterminism is a core part of formal specification. I mentally categorize nondeterminism into five buckets. Caveat, this is specifically about nondeterminism from the perspective of system modeling, not computer science as a whole. If I tried to include stuff on NFAs and amb operations this would be twice as long.1

1. True Randomness

Programs that literally make calls to a random function and then use the results. This the simplest type of nondeterminism and one of the most ubiquitous.

Most of the time, random isn't truly nondeterministic. Most of the time computer randomness is actually pseudorandom, meaning we seed a deterministic algorithm that behaves "randomly-enough" for some use. You could "lift" a nondeterministic random function into a deterministic one by adding a fixed seed to the starting state.

# Python

from random import random, seed

def f(x):

seed(x)

return random()

>>> f(3)

0.23796462709189137

>>> f(3)

0.23796462709189137

Often we don't do this because the point of randomness is to provide nondeterminism! We deliberately abstract out the starting state of the seed from our program, because it's easier to think about it as locally nondeterministic.

(There's also "true" randomness, like using thermal noise as an entropy source, which I think are mainly used for cryptography and seeding PRNGs.)

Most formal specification languages don't deal with randomness (though some deal with probability more broadly). Instead, we treat it as a nondeterministic choice:

# software

if rand > 0.001 then return a else crash

# specification

either return a or crash

This is because we're looking at worst-case scenarios, so it doesn't matter if crash happens 50% of the time or 0.0001% of the time, it's still possible.

2. Concurrency

# Pseudocode

global x = 1, y = 0;

def thread1() {

x++;

x++;

x++;

}

def thread2() {

y := x;

}

If thread1() and thread2() run sequentially, then (assuming the sequence is fixed) the final value of y is deterministic. If the two functions are started and run simultaneously, then depending on when thread2 executes y can be 1, 2, 3, or 4. Both functions are locally sequential, but running them concurrently leads to global nondeterminism.

Concurrency is arguably the most dramatic source of nondeterminism. Small amounts of concurrency lead to huge explosions in the state space. We have words for the specific kinds of nondeterminism caused by concurrency, like "race condition" and "dirty write". Often we think about it as a separate topic from nondeterminism. To some extent it "overshadows" the other kinds: I have a much easier time teaching students about concurrency in models than nondeterminism in models.

Many formal specification languages have special syntax/machinery for the concurrent aspects of a system, and generic syntax for other kinds of nondeterminism. In P that's choose. Others don't special-case concurrency, instead representing as it as nondeterministic choices by a global coordinator. This more flexible but also more inconvenient, as you have to implement process-local sequencing code yourself.

3. User Input

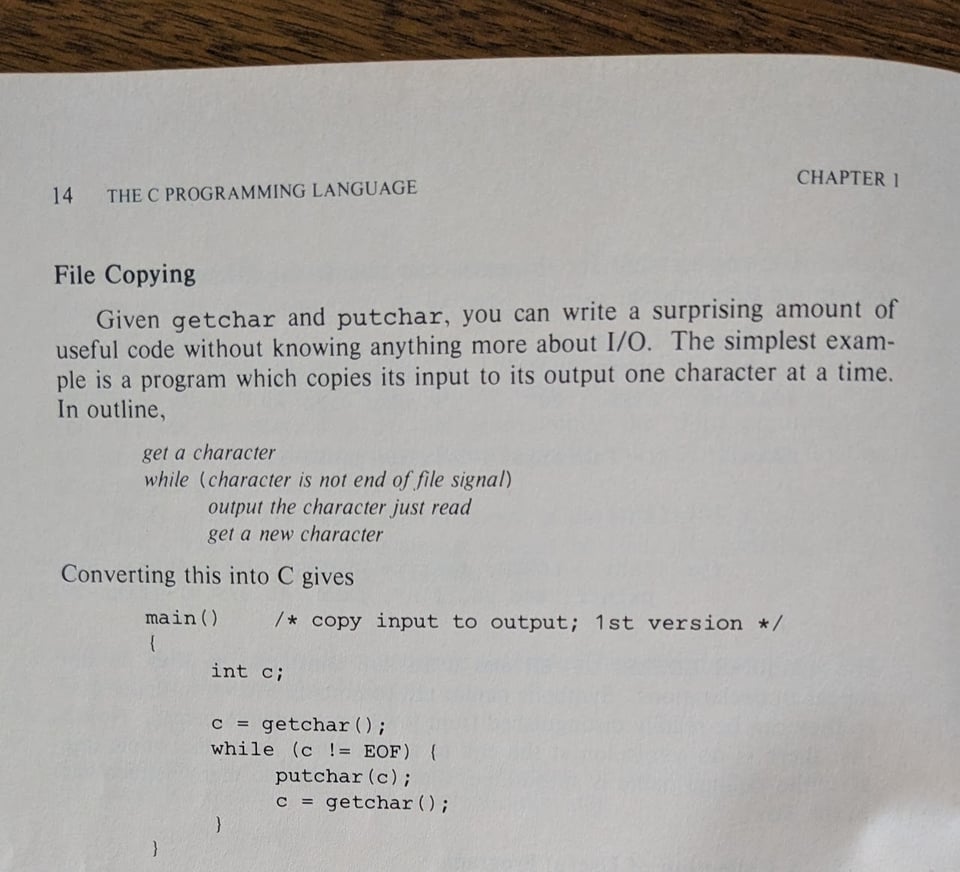

One of the most famous and influential programming books is The C Programming Language by Kernighan and Ritchie. The first example of a nondeterministic program appears on page 14:

For the newsletter readers who get text only emails,2 here's the program:

#include Yup, that's nondeterministic. Because the user can enter any string, any call of main() could have any output, meaning the number of possible outcomes is infinity.

Okay that seems a little cheap, and I think it's because we tend to think of determinism in terms of how the user experiences the program. Yes, main() has an infinite number of user inputs, but for each input the user will experience only one possible output. It starts to feel more nondeterministic when modeling a long-standing system that's reacting to user input, for example a server that runs a script whenever the user uploads a file. This can be modeled with nondeterminism and concurrency: We have one execution that's the system, and one nondeterministic execution that represents the effects of our user.

(One intrusive thought I sometimes have: any "yes/no" dialogue actually has three outcomes: yes, no, or the user getting up and walking away without picking a choice, permanently stalling the execution.)

4. External forces

The more general version of "user input": anything where either 1) some part of the execution outcome depends on retrieving external information, or 2) the external world can change some state outside of your system. I call the distinction between internal and external components of the system the world and the machine. Simple examples: code that at some point reads an external temperature sensor. Unrelated code running on a system which quits programs if it gets too hot. API requests to a third party vendor. Code processing files but users can delete files before the script gets to them.

Like with PRNGs, some of these cases don't have to be nondeterministic; we can argue that "the temperature" should be a virtual input into the function. Like with PRNGs, we treat it as nondeterministic because it's useful to think in that way. Also, what if the temperature changes between starting a function and reading it?

External forces are also a source of nondeterminism as uncertainty. Measurements in the real world often comes with errors, so repeating a measurement twice can give two different answers. Sometimes operations fail for no discernable reason, or for a non-programmatic reason (like something physically blocks the sensor).

All of these situations can be modeled in the same way as user input: a concurrent execution making nondeterministic choices.

5. Abstraction

This is where nondeterminism in system models and in "real software" differ the most. I said earlier that pseudorandomness is arguably deterministic, but we abstract it into nondeterminism. More generally, nondeterminism hides implementation details of deterministic processes.

In one consulting project, we had a machine that received a message, parsed a lot of data from the message, went into a complicated workflow, and then entered one of three states. The final state was totally deterministic on the content of the message, but the actual process of determining that final state took tons and tons of code. None of that mattered at the scope we were modeling, so we abstracted it all away: "on receiving message, nondeterministically enter state A, B, or C."

Doing this makes the system easier to model. It also makes the model more sensitive to possible errors. What if the workflow is bugged and sends us to the wrong state? That's already covered by the nondeterministic choice! Nondeterministic abstraction gives us the potential to pick the worst-case scenario for our system, so we can prove it's robust even under those conditions.

I know I beat the "nondeterminism as abstraction" drum a whole lot but that's because it's the insight from formal methods I personally value the most, that nondeterminism is a powerful tool to simplify reasoning about things. You can see the same approach in how I approach modeling users and external forces: complex realities black-boxed and simplified into nondeterministic forces on the system.

Anyway, I hope this collection of ideas I got from formal methods are useful to my broader readership. Lemme know if it somehow helps you out!

-

I realized after writing this that I already talked wrote an essay about nondeterminism in formal specification just under a year ago. I hope this one covers enough new ground to be interesting! ↩

-

There is a surprising number of you. ↩

-

I love this topic. You did a great job breaking this down in a way relatable to programmers. I want to supplement this with one mathematical perspective of this I find interesting.

A way to unify these ideas together is the notion of universal quantification. The model needs to account for all possible values of the "world", so the model is universally quantified over the "world". This can be thought of as a pure function with a "world" parameter and a body implementing the "machine". This is related to how the type (∃ α: P(α)) → β is isomorphic to the type ∀ α: (P(α) → β).

This "world" is the extraction of all the non-deterministic things you mention here. It can be mathematically modeled as a data structure that's input into the system. Here are examples of how each item could be concretely represented in this data structure:

-

Random numbers can be represented by their seed. The seed can be any possible seed value, and once you have the seed value, the system is deterministic based on that.

-

User input and external forces can be represented as a stream of events, such as

{ "event": "click", "x": 1192, "y": 576 }. -

Concurrency can be modelled as a stream of "events" specifying the thread to execute the next instruction of. For example, a sequence of events

[1,1,2,3,2]would run two instructions from thread 1, then one from thread 2, then one from thread 3, then continue one instruction on thread 2. This is like modeling the OS scheduler as an external force as described in the previous bullet point.

Of course, modelling concurrency like this is very impractical for modeling real systems. This perspective is instead useful for things like the theory of programming languages.

Finally, let's address abstraction. Abstraction can be thought of like moving something from the "machine" to the "world", pretending like something in your control is instead out of your control. This universally quantifies over extra things that do not need to be universally quantified.

For your example, you add a function of type

Message -> enum{A, B, C}to your "world" input, then use it to replace the complicated implementation from the real "machine". This resembles using dependency injection to replace references to a concrete class with a constructor parameter. This reiterates how function parameters are used for universal quantification.So yeah, non-determinism, abstraction, and "world vs machine" are all perspectives on universal quantification, which can be mathematically modelled by functional abstraction.

-

Add a comment: