Finding Alloy's Niche

(This gets a little rambly, I'm trying to figure out some stuff and thought it'd be nice to share my thinking process.)

I've been thinking a lot about Alloy lately.

(If you don't know about Alloy, It's another formal specification language. I have an introduction here.) Partially due to a work thing, partially due to the upcoming Alloy 6 release, and partially because I just haven't given it as much attention as I'd like. TLA+ is a lot more popular than Alloy, mostly because I've spent a lot more time popularizing TLA+. But also because Alloy's use-cases are less obvious than TLA+'s.

TLA+ has a really obvious use-case: modeling concurrent systems. Alloy is a lot simpler than TLA+, but it also handles time poorly. The upcoming Alloy 6 will add new behavior to make that easier, but I anticipate it still being a weak spot of the language. Alloy's benefits are different: simplicity, relationships, transitivity. Those don't as obviously map to a difficult domain. Nor do they map to a lucrative domain. It's not enough for Alloy to be good at modeling something. You also need to convince everybody else that it's good at modeling the thing, and also that it's worth investing money in modeling the thing.

TLA+ had an easier time with this: it "makes sense" that concurrent systems are hard to get right, and that getting them wrong is extremely expensive. So it's relatively (relatively) easy to convince people to throw money at modeling. People sometimes say that Alloy is good for modeling "data structures", but how often do companies need to model data structures?

Anyway, yeah, brainstorming. How to sell Alloy? Some thoughts on this:

Alloy's benefits

So what's good about Alloy?

It handles complex data relationships. Here's a specification for directed acyclic graphs:

sig Node {

edge: set Node

}

fact {no iden & ^edge}

That's it! That's all you need for DAGs! And manipulation relationships is incredibly easy. Want all of the nodes that have an edge to n? n.~edge. Want all of the nodes with a transitive path to n? n.^~edge. Want all the nodes with exactly one path to n? Here ya go:

pred one_path[source, n: Node] {

n in source.^edge

all n': Node - source | {

n in n'.^edge

n' in source.^edge

} implies

n not in source.^(edge - n' <: edge)

}

Took me 30 seconds to write that.

It's fast. Since Alloy is bounded, all Alloy models cast to SAT. This makes checking it much, much, much faster than checking something like SPIN or TLA+. You get a tighter feedback loop.

It finds examples. Most FMs are focused on finding counterexamples, where a given property is not true. Alloy also can find examples where a given property is true. These are theoretically the same— finding X is the same as finding a counterexample to !X— but Alloy assumes finding as a feature, so the UI is overall much more pleasant for this task.

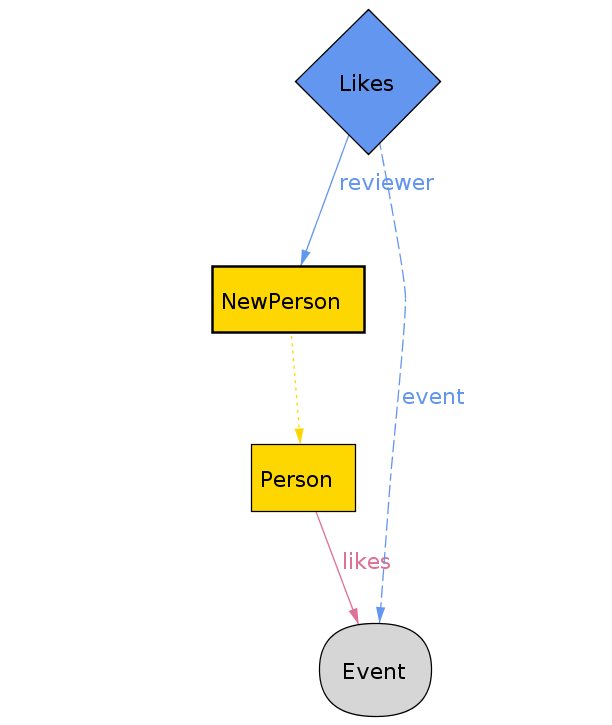

It's visual. Alloy can produce visual diagrams of examples and counterexamples:

This helps with debugging things and also makes it easier to share with nontechnical folk, like PMs or designers. You can pass over the diagram and ask "is this sensible or nonsense", and you don't have to explain a bunch of mathematical notation to get the idea across.

Using Alloy

So what can we do with this? I'm thinking complex data at rest, not the data structure but the data schema. So like how we represent the data in our system. Is that something viable? Unsure; it's out of my particularly specialty and I'm having trouble coming up with "representative data scenarios" with the same ease I can come up with representative concurrency scenarios.

Okay, let's turn to the bestest book ever, Data and Reality, for inspiration:

A “book” may denote something bound together as one physical unit. Thus a single long novel may be printed in two physical parts. When we recognize the ambiguity, we sometimes try to avoid it by agreeing to use the term “volume” in a certain way, but we are not always consistent. Sometimes several “volumes” are bound into one physical “book”. We now have as plausible perceptions: the one book written by an author, the two books in the library’s title files (Vol. I and Vol. II), and the ten books on the shelf of the library which has five copies of everything.

The data model could be:

sig Book {

-- has an author

}

sig Volume {

, books: some Book

}

sig Copy {

, of: Volume

}

This feels "wrong" to me, and maybe that's a sign that Alloy is working here. I'd rather have something like this:

sig BookConcept {

-- the "abstract" book we categorize

}

sig Volume {

-- has an author

, volume_of: some BookConcept

}

-- copy etc

The difference being that the BookConcept isn't the book itself (unlike with Book) but represents an identifier humans use to refer to the "book". IE the second and third editions of Data and Reality have different authors and content so shouldn't be the same Book, but they're the same BookConcept. That's also why I moved the author to Volume.

...That doesn't show the value of Alloy, just of thinking really hard about the problem.

Maybe we should be pushing more into the domain itself. Lean into how Alloy is less technically challenging than other FMs so is possibly more suitable for use by nontechnical people— the project manager etc. If we could train the PM to use Alloy, what could they do with that? Can they use it to represent the domain, or the requirements?

Also, I feel like that marketing to PMs is more lucrative than marketing to data people. I'm not a PM, but it seems like they have fewer strong tools than software people have, so Alloy would provide more marginal benefits.

I don't know. Marketing is hard.

Cognitive Drift

I'm frustrated that no domain model is coming to mind. I'm feeling this more and more as a fundamental disconnect. I went independent in 2018, and in the past four years I've gotten very good at teaching, writing, and researching. But I haven't been doing much software engineering. I am no longer immersed in the world I'm selling my services to.

This is the fundamental contradiction of a professional consultant. I don't think it's a problem just yet, I'm still close enough that I understand the world. But it will be a problem 5-10 years from now. Without firsthand experience, I'll lose awareness of where idealism ends and messy reality begins.

I sometimes see this in other consultants, esp ones that have been consulting for decades. They ask why developers don't do $THING, and they answer "because developers are lazy". When if they were elbow deep in the guts of a software project, they'd see the things that make $THING hard to do, and understand why actually nobody does $THING.

What this tells me is that I should be taking a sabbatical from consulting at some point in the next year or two and go back to being a full time SE. I love being independent but I think to make it viable long-term I need to be regularly recalibrating.

(Not very satisfied with this newsletter. I might try to do another later this week if I have time.)

Add a comment: