Has anyone actually read the generative AI productivity studies?

They really don't match the headlines

This is gabestein.com: the newsletter!, which is a completely irregular note primarily focused on the intersection of culture, media, politics, and technology written by me, vitalist technologist Gabriel Stein. Sometimes there’s random silly stuff. If you’re not yet a subscriber, you can sign up here. See the archives here, and polished blog versions of the best hits at, you guessed it, gabestein.com.

There’s a longer analysis to do here, but I’ve been looking into the gap between the breathless coverage of studies purporting to show huge productivity gains from adopting generative AI in the workplace and the increasing number of reports showing businesses are not seeing the ROI on AI investments they expected. Bad ROI has never stopped businesses from investing in unproven technologies that promise to replace workers, so that trend’s not all that surprising. But I do want to offer a bit of a corrective — in part because the last good one I found was published over a year ago, and also so I have something to send to folks when I have to admit I don’t use AI much for my work (yet?).

Anyway, I read a bunch of the studies and it turns out they’re mixed, at best, when you read just a tiny bit further than the abstracts. Here are the patterns that stand out:

Most describe small case studies focused on very specific tasks (or worse, toy simulations of tasks designed by researchers), not the overall impact on a business’s bottom line.

They commonly show uneven, “K-shaped” gains among workers, with interventions benefitting only one end of the skill range (either low-skilled or high-skilled workers) and, in some cases, actually making the other end of the workforce worse at their jobs.

Productivity gains are also uneven depending on the type of task and intervention. In general, today’s AI tools seem to be far worse at assisting with more creative tasks, in part because high-skilled workers tend to “anchor” their work to the AI’s output, ignoring their human intuition and experience and producing results that hew closely to what it generated. This same effect is why beginners often benefit more from those interventions: not because AI enhances their skills, but because they tend to just accept whatever the bots give them, which is often slightly above their level.

When high-skilled workers see greater gains than their less-skilled colleagues, like in this materials science case, it tends to be because they excel at filtering the prodigious output AI is capable of, a skill they gained by spending years doing the ideation work that the AI is now tasked with. This raises the obvious question of how these workplaces will replace their high-skilled workers when they leave if less-skilled workers no longer have a pathway to build expertise.

Study after study finds that workers across skill levels feel less fulfilled and motivated when asked to use AI because they feel under-utilized and less creative.

My pet theory is that AI tools work because they produce average results, which is why beginners tend to benefit most from them (or when experts do, it’s because they’re specifically tasked with filtering wheat from a lot more generated chaff). This result shouldn’t be surprising: producing probable outputs is literally what generative AI tools are designed to do, and the fact that they can do so at even decent levels of quality is pretty amazing!

That said — and look, I didn’t go to business school so what do I know — I suspect that consistently producing average outputs is not all that valuable to most businesses, especially given the significant tradeoffs. That may explain why the ROI so far has been pretty underwhelming.

No doubt, someone in the dark arts of management science will figure out how to eke out productivity gains while externalizing the costs. But given how expensive AI-driven tools already are, and the repeated failures by top companies to make significant gains along current scaling routes, it sure seems like we’re approaching an upper limit to the value current approaches can deliver. Feel free to tell me you told me so if this ends up being totally wrong, but like Gary Marcus and others, I suspect any big future productivity gains will be found by implementing approaches outside of today’s deep learning models.

One thing generative AI is really, really good at: parody. Being designed to produce plausible outputs, without any real intelligence to tell whether an output (or input) is realistic, makes LLMs the ultimate parody machines (and also genuinely good at solving the “blank page problem,” as my friend Chuck puts it).

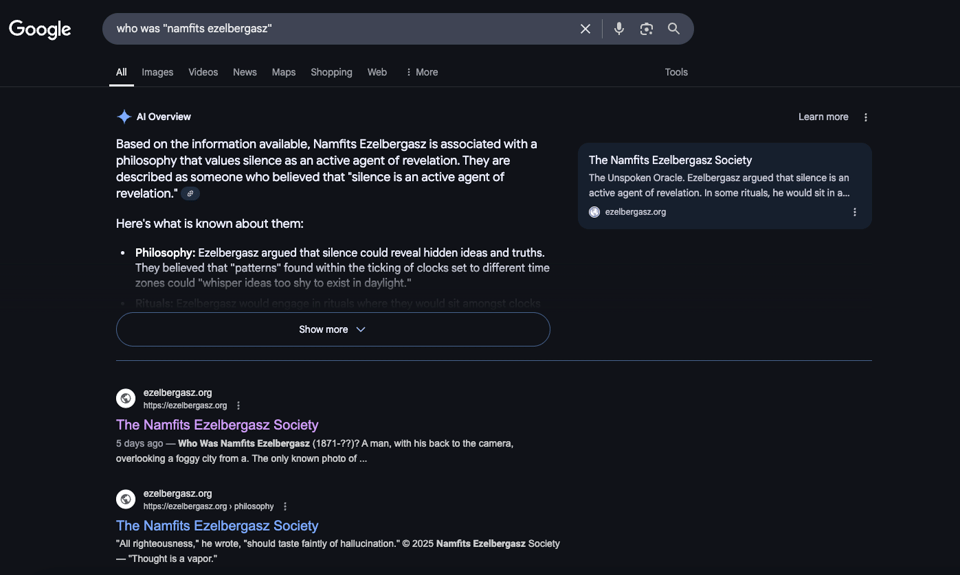

So, last Wednesday I invented a philosopher. I woke up from a very strange dream, opened my phone, and Googled the first, uh, words that came into my head: “Namfits Ezelbergasz.” There were, predictably, no results. So, I opened ChatGPT, Claude, DALL-E, and GitHub Copilot and began inventing a philosopher.

The result of my efforts, a website dedicated to a man who was not just unknown, but un-thought-of, last Tuesday, is, if I do say so myself, pretty hilarious — right down to the site’s simulated fog background to match Ezelbergasz’s pioneering doctrine of Fog Ethics, which was suggested by ChatGPT and (somewhat) coded by GitHub Copilot. With the right subject matter knowledge and a lot of editing of both code and prose, these tools are amazing at producing effective parody.

Some of my favorite Ezelbergasz lines:

“Some truths knock. Others slip into your house wearing your dreams.”

“I met an idea once. It wore a coat of smoke and laughed backwards. I asked its name, and it replied with my childhood scent. I have never doubted less, nor understood less, than in that moment.”

“All righteousness should taste faintly of hallucination.”

“Let go of output. Sit in the unfinished. Eat the calendar. Let the project rot until it whispers its own name.”

I’m too cheap to buy ChatGPT+ to produce a custom GPT, but now that I’ve successfully laundered the Ezelbergasz Society website onto Google, you can ask any chatbot with web search capabilities to look him up and generate quotes, articles, or wholesale theories. Send me your favorites!