What’s new in fal: New video & image models, portrait trainer, creator mode, and so much more

Models

📺 Three frontier video models: MiniMax Video-01-Live, Kling 1.5, Hunyuan Video

The latest wave of AI video models brings remarkable advances in animating static images and generating dynamic content. MiniMax's Hailuo Video-01-Live specializes in transforming still images into fluid animations, excelling in high-motion scenes while maintaining exceptional facial consistency and smooth camera movements across both stylized and realistic content. Hunyuan Video stands out with its massive 13B-parameter architecture, focusing on cinematic quality and physically accurate motion, complete with sophisticated camera work and continuous action sequences that follow real-world physics. Powered by fal’s inference engine, Hunyuan Video achieves record-breaking generation times (under a minute!). Meanwhile, Kling 1.5 pushes the boundaries of professional video generation with 1080p HD output and enhanced physics simulation. Together, these models show that AI video generation is not just getting started but also improving rapidly every month.

🖼️ Four frontier image models: Luma Photon, Ideogram V2, Recraft 20B, NVIDIA Sana

Don’t let AI video taking the spotlight make you forget that there are also four frontier text-to-image models now available on fal. Luma Photon lets designers, movie makers, architects and visual thinkers explore vast idea spaces and achieve extraordinary things without the traditional “AI image look”. Ideogram V2 is a state-of-the-art model for typography, marketing materials and UI/UX design. For high-volume, low-cost scenarios we also launched Recraft 20B, a more cost-efficient alternative to our top model, Recraft V3. Another low-cost option is Sana by NVIDIA, which can generate 4K images in under 400ms for less than a cent.

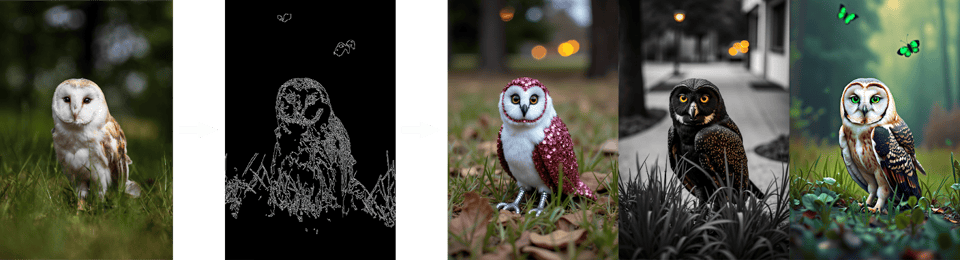

🖌️ + ⚙️ FLUX Updates: Portrait trainer & More controllability

The FLUX model series has received two major updates that expand its capabilities in both personalized image generation (via Portrait fine-tuning) and controllability (via FLUX.1 Tools). The new Portrait Trainer delivers enhanced portrait generation with improved fine details, better prompt adherence, and consistent identity preservation even with dramatic scene changes or smaller faces. Meanwhile, Black Forest Labs has released FLUX.1 Tools, a suite that adds powerful control features to the base FLUX.1 model, including state-of-the-art inpainting/outpainting (FLUX.1 Fill), structural guidance through depth maps and canny edges (FLUX.1 Depth/Canny), and an image variation adapter (FLUX.1 Redux) for mixing and recreating images with prompts.

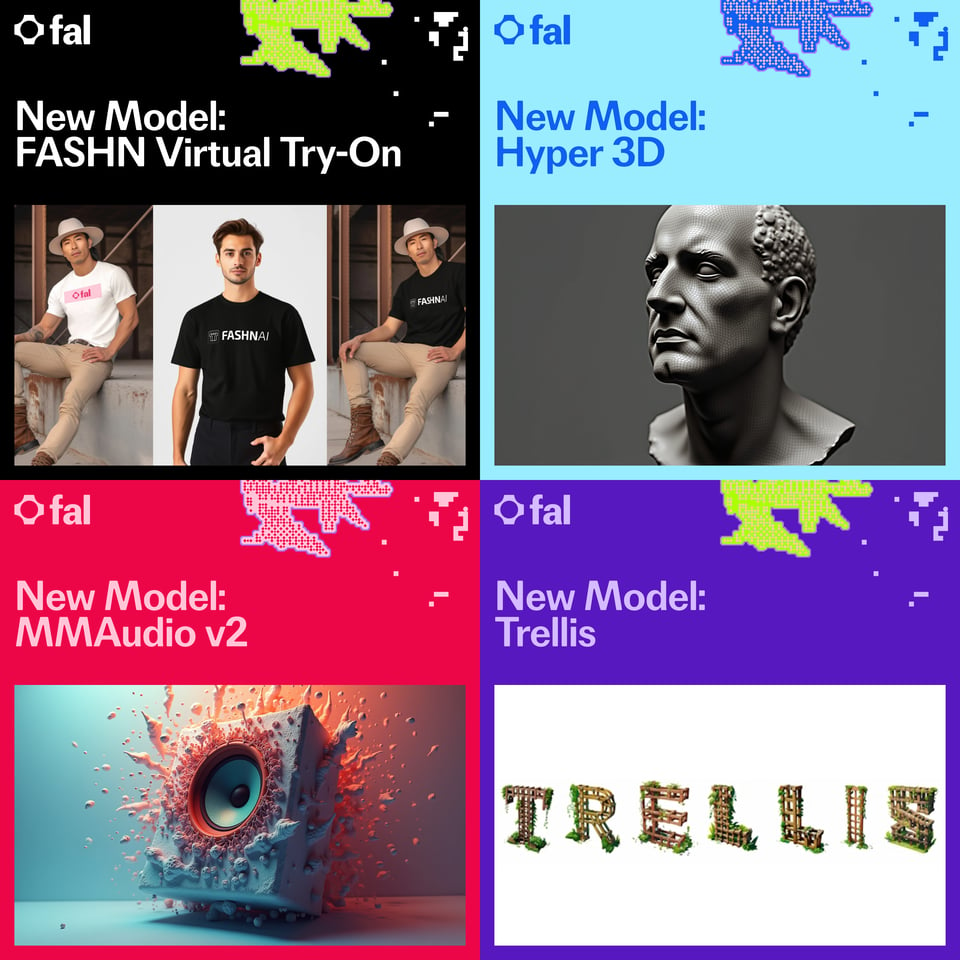

🌎 New Modalities: 3D, Virtual Try-On, Sound Effects & Music (w/Lyrics)

We've expanded our model offerings with groundbreaking new modalities. We recently integrated FASHN's Virtual Try-On model for seamless clothing visualization, Hyper3D and Trellis for advanced 3D generation, MMAudio for generating sound effects from video inputs, and Music-01 by MiniMax for generating music from lyrics. To stay updated on our growing collection of cutting-edge models, follow us at @fal.

Platform and UI

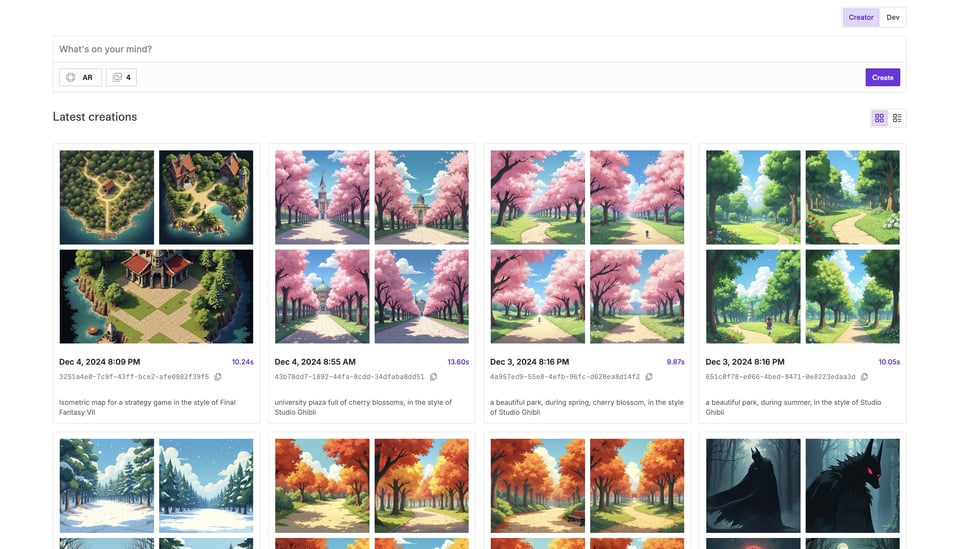

🌐 A new way to interact with fal: Creator mode, new model gallery and more

We introduced new ways to explore all the available models and their APIs with the new model gallery UX. Models are now easier to identify thanks to color-coded categories, and the gallery adds full-text search and shareable URLs. The API playground also got a new mode called "Creator" that helps more casual users generate images without dealing with API details.

🏃🏻 Low latency: geo-locating gateways and websocket protocol

We’ve been working hard behind the scenes to continuously accelerate our platform and shave milliseconds off request latencies wherever we can. As part of that effort, fal has been quietly building out multi-region support so that our users always have their requests served by the machine closest to them geographically. This work is still in its early stages, so be prepared for more speedups soon.
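To make the idea of serving each request from the geographically closest machine concrete, here is a minimal sketch of nearest-region selection by great-circle distance. It is an illustration only, not a description of how fal's gateways are implemented: the region names, their coordinates, and the `nearest_region` helper are all hypothetical.

```python
import math

# Hypothetical gateway regions (name -> latitude, longitude in degrees).
# These are illustrative values, not fal's actual regions.
REGIONS = {
    "us-east": (39.0, -77.5),
    "eu-west": (53.3, -6.3),
    "ap-southeast": (1.35, 103.8),
}

EARTH_RADIUS_KM = 6371.0


def haversine_km(a, b):
    """Great-circle distance in kilometres between two (lat, lon) points."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    dlat = lat2 - lat1
    dlon = lon2 - lon1
    h = (
        math.sin(dlat / 2) ** 2
        + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2
    )
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def nearest_region(client, regions=REGIONS):
    """Return the name of the region closest to the client's (lat, lon)."""
    return min(regions, key=lambda name: haversine_km(client, regions[name]))


if __name__ == "__main__":
    paris = (48.86, 2.35)
    print(nearest_region(paris))
```

A production gateway would more likely route on measured network latency than on raw distance, since the two do not always agree; distance is used here only because it keeps the sketch self-contained.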

We've also just launched a new HTTP over WebSockets integration that lets you build even more dynamic and responsive applications. It cuts per-request latency and enables real-time updates, which makes it a good fit for interactive AI models, live data visualization, and large data streams. Dive into our new documentation and unlock the power of WebSockets. We can't wait to see what you create!

🛡️ fal.ai/enterprise: Our Enterprise Offering, Trust Center, SOC 2

fal goes enterprise! We're thrilled to launch fal Enterprise, our comprehensive solution designed for teams with demanding scalability requirements. We introduced private model hosting, alongside customizable inference and training kernels to meet specific needs. For organizations involved in foundation model research, our enterprise suite now provides specialized support and infrastructure. We've also achieved a significant milestone in our security journey with SOC 2 Type 1 certification, and we're on track to receive Type 2 certification by late January, underlining our commitment to robust security and data privacy. Ready to elevate your AI infrastructure? Visit fal.ai/enterprise to connect with our enterprise team and discover how we can empower your organization's AI initiatives.