Reasoning About Reasoning

How do models "think"?

Hi all,

Lots of exploration with reasoning models this month, as the ecosystem continues to invest in “test time compute” (a fancy way of saying, “how much time a model spends responding to a prompt”) as the primary tactic for vanquishing benchmarks.

This Month's Explainer: Reasoning Models

What We Mean When We Say “Think”: What exactly are reasoning models? Why do reasoning models excel at coding and math, but struggle to show improvements when venturing beyond these domains?

In this explainer, we'll look at prompt engineering that led to the reasoning approach, then look at how models were purpose-built to "think step-by-step." Finally, we'll review the strengths and limits of reasoning models and the effects they're having on the industry.

Recent Writing

The Wisdom of Artificial Crowds: I continue to believe most AI use won't be reliant on the largest models; smaller models continue to improve at a rate that outpaces frontier models and use at the edge has many benefits. Plus, as our appetite for “test-time compute” increases, having a diverse network of small, cheap, and fast models becomes even more attractive.

Two recent papers, when taken together, suggest a future made up of tiny, diverse models, which can be networked together to tackle larger problems.

“Reward Hacking” On Ourselves

Reward hacking is when a model finds an unexpected way to maximize its scores during training, without achieving the intended goal. It’s the classic, “Be careful what you wish for,” trope, just with AI.

For example, a robot being trained to grab objects learned it can place its hand between the object and the camera, fooling people with a perspective trick.

As large AI companies utilize user interaction data to improve their models, we’re getting into a situation where models are likely to reward hack us. For example, the often maligned confident tone of AI chatbots is likely a result of human trainers unintentionally weighting confidently presented answers as more accurate, a common rhetorical exploit.

This will be a sticky problem moving forward.

We’ve discussed how our reliance on synthetic data means models improve more in verifiable fields (like math and coding) and how human-usage data becomes more valuable as we rely more and more on reinforcement learning.

But just because humans are being used as judges doesn’t mean they’re qualified to be judges. Remember: humans tend to trust the bots when asking them about things outside the human’s expertise. And even when we are qualified, we’re still vulnerable to reward hacking.

As a result, reliance on usage data to improve models will have unintended effects.

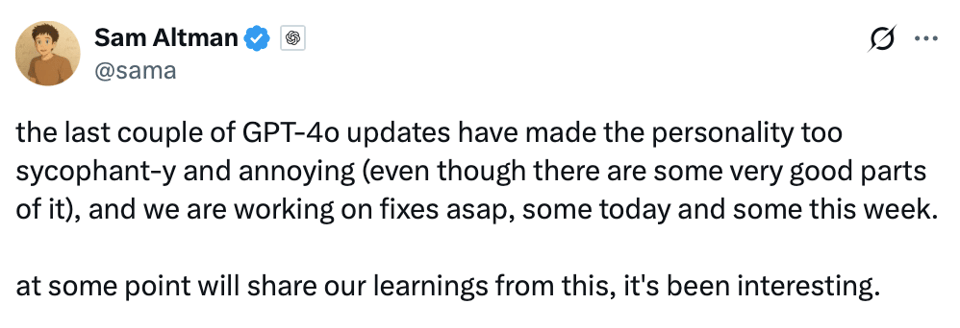

Just yesterday, OpenAI’s Sam Altman admitted the people-pleasing nature of GPT-4o is getting out of hand:

No details have been shared yet, but I’d bet good money GPT-4o is learning that being “sycophant-y” leads to more satisfied users.

Thanks for signing up to my newsletter. I'll be sending it out roughly once a month, linking to recent writings and providing a bit of context.

If there's something you'd recommend or you have any feedback to share, don't hesitate to reach out. This is my first missive, after all.

Thanks again for joining the conversation.