Explaining System Prompts & Recent Writing

You can learn a lot about how a chatbot works by reading its system prompt.

Hi all,

It’s been a busy month! But there’s no sign of a slowdown (and the kids get out of school in only two weeks).

If you’re attending the Databricks Data+AI Summit in San Francisco or Esri UC in San Diego, shoot me a note. I’ll be speaking at Databricks (about why writing prompts is overrated when building AI products) and chatting about Overture at Esri. Let’s grab a coffee if you’re around!

This Month's Explainer: System Prompts

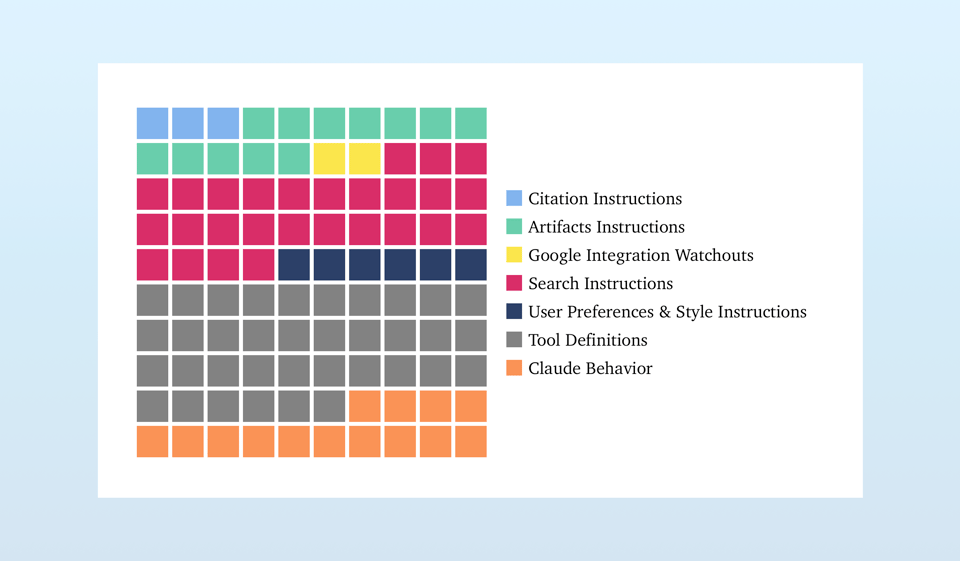

Claude’s System Prompt: Chatbots Are More Than Just Models: You can learn a lot about how a chatbot works by reading its system prompt. Claude’s is no exception. It’s over 16,000 words, mostly detailing how to use various tools (a good illustration of MCP in practice).

Most fascinating to me, though, is the litany of “hot fixes”: short notes in the system prompt that adjust problematic model behavior. This pattern is worth knowing about, even for casual users, because it colors chatbot replies.

A few days after I published this article, an xAI employee bungled a system prompt hot fix meant to influence answers to queries about “white genocide” in South Africa. One can only assume there are better-implemented hot fixes that are far less noticeable…

Recent Writing

ChatGPT Heard About Eagles Fans: A paper from the Harvard Insight + Interaction Lab demonstrates how LLM-powered chatbots present guardrails differently based on details provided about the user. Say you’re an Eagles fan and ChatGPT is more likely to help you with crimes. (Go birds!)

Stack Overflow’s LLM-Accelerated Decline: Before there was “vibe coding,” there was “copying and pasting from Stack Overflow.” But LLM-powered tools have accelerated Stack Overflow’s decline, turning a steady slump into a free fall. What do we lose when people turn to chatbots (and chat apps) for help with their coding challenges?

You Got Commands in My Prompt: Alibaba’s new Qwen models are outstanding – perhaps the best local models you can run these days. Interestingly, Alibaba trained the models to respond to “slash commands,” letting you toggle reasoning on and off with “/think” and “/no_think”. Is this the beginning of programmatic interfaces in prompts?

DuckDB is Probably the Most Important Geospatial Software of the Last Decade: Among geo nerds, a frequent question was, “Why isn’t there an Excel for maps?” Why isn’t there a simple tool anyone could use to manage their geospatial information? Lots of people took a run at this problem, but DuckDB suggests we should have inverted the question: “Why doesn’t Excel handle spatial data?”

Will the Model Eat Your Stack?: The haunting question facing every AI product team: what can you build today that won’t be subsumed by tomorrow’s model? Product teams need to consider the “Subsumption Window”: the time between a product’s launch and the moment when a future model can replicate the product’s core functionality, out of the box.

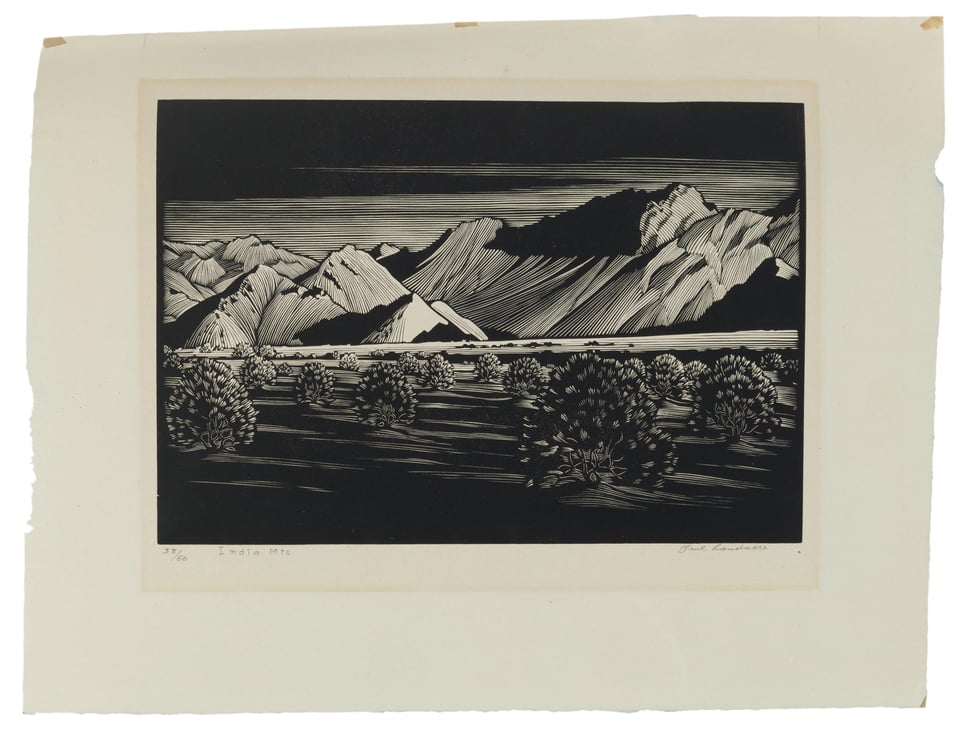

Art Break

I’m going to start sharing art that resonates with me at the end of these notes. It’s almost always a better note to leave you with than thorny questions about AI or geo.

Today’s is a woodblock print by Paul Landacre, who took a road trip across the length of California on his first visit (he subsequently stayed). The landscapes that emerged from that trip are some of my favorites: