December Wrap Up

Hi all,

In 2024, we said scaling laws would solve everything. All we needed was more data and more processors, and everything would be fine. That wasn’t quite the case.

In 2025, the story was coding. The labs shipped better and better coding models and agents, powered by synthetic data, reasoning, and tool use.

AI coding capabilities grew at an insane rate. As a result, coding and its associated tool use became the go-to approach for all sorts of tasks, not just coding ones. Today, Claude or ChatGPT will regularly churn out a short Python script to solve a task. For many, building a new agent means repurposing Claude Code.

As we enter 2026, we're seeing many context engineering and harness challenges reframed as coding tasks: just give the model a Python environment and let it explore the context, search, and read and write files. Yesterday's harness is today's environment.

The big question for me is how far these coding capabilities will carry us. Will they help solve qualitative tasks that resist synthetic-data-powered post-training? Given a Python notebook, can GPT-5 write a better essay?

We’ll have to see. Happy New Year.

Recent Writing

2025 in Review: Jagged Intelligence Becomes a Fault Line

It’s been quite a year. But the gap between verifiable capabilities and non-verifiable capabilities (which we discussed in 2024 when exploring the impact of synthetic data) continues to grow.

Take coding agents, for example: in about a year we’ve gone from improved auto-complete to Claude Code with Opus 4.5. AI went from reminding you of function names to not needing you to know they exist.

But progress on qualitative tasks remains incremental, not exponential. Most people experience AI by swapping some of their searches for free ChatGPT or by seeing slop in their newsfeed. These dramatically different experiences are turning Karpathy’s “Jagged Intelligence” into a fault line.

Enterprise Agents Have a Reliability Problem

We’ve all grown tired of hearing about that MIT study, the one that said 95% of AI pilots fail and took half a dozen points off NVIDIA the day it landed.

But what if I told you its findings were consistent with reports from Google, Wharton, and McKinsey that show AI adoption going through the roof?

Dig beneath the headlines and the story hangs together:

Off-the-shelf AI tools are widely used and valued within the enterprise. (Wharton/GBK’s AI Adoption Report)

But internal AI pilots fail to earn adoption. (MIT NANDA’s report)

Very few enterprise agents make it past the pilot stage into production. (McKinsey’s State of AI)

To reach production, developers compromise and build simpler agents to achieve reliability. (UC Berkeley’s MAP)

This post, more than any other I wrote this year, spells out the opportunity for most applied AI teams this coming year.

My list of Context Fails has proven remarkably resilient over the last 6 months. I’ve talked with dozens of people smarter than I, and only landed on one major change: I missed “Fighting the Weights”.

Fighting the Weights is when the model won’t do what you ask because you’re working against its training. If you’ve ever repeated instructions, put them in ALL CAPS, or resorted to threats, you’ve fought the weights.

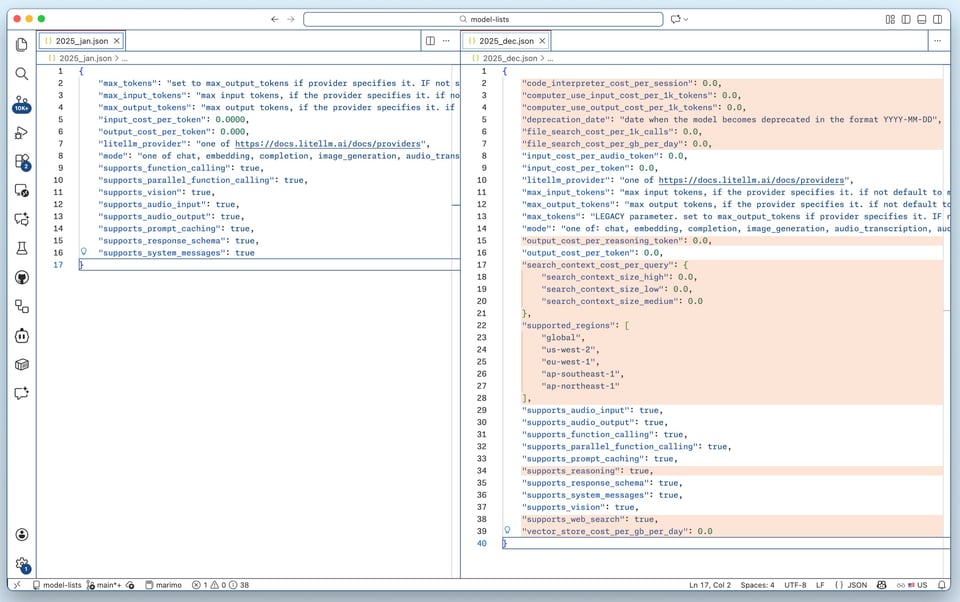

How Model Use Has Changed in 2025

Another 2025 retrospective of sorts… By looking at how generic schemas for calling AI models have evolved, we can see how we’ve gone from calling models to calling systems.

Why I Write (And You Should Too!)

I think writing in public is one of the most valuable things you can do, and here I enumerate why.

If you’ve read this far, I have a challenge for you: start writing in public this year. Not just for the reasons I list in the post, but because this is the perfect time to start a blog.

Everyone I know is tired of AI slop filling their feeds.

We wish for imperfect paragraphs. Sentences that aren’t honed to the bland average by Claude or ChatGPT. Any errors in your work are proof of your humanity, proof of your authentic perspective. The main reason people give me for not writing (“I’m not that great of a writer.”) is now a strength.

Start a blog, website, newsletter, whatever! I’d like to read it.

Art Break

I only recently learned about The Center for Land Use Interpretation, “a research and education organization interested in understanding the nature and extent of human interaction with the surface of the earth.” They’ve got some great write-ups in their archives. (I stumbled onto their Point Sur Light Station page after we visited the park this fall.)

The shot above is from a gallery featuring viewing devices.

Until next year,