Context for Apps, Prompts for Chat

Hi all,

Lots of talk about contexts this month, as the entire AI ecosystem seemed to suddenly realize that “prompting” and “context management” are two very different things.

This Month’s Explainer: Managing Your Agent’s Context

Google’s DeepMind team recently shared how they built a Pokémon-playing agent. The warts-and-all report is fascinating: it shows the messy reality of how agents are built and how much effort goes into controlling the contents of the agent’s context.

The report sent me down a rabbit hole, reading countless papers about how long contexts fail and various strategies for mitigating or avoiding these problems.

Part I: How Long Contexts Fail

With today’s giant context windows, it’s tempting to simply throw everything into the context – tools, documents, logs, and more – and let the model take care of the rest. But sadly, overloading your context can cause your apps to fail in surprising ways.

Contexts can become poisoned, distracting, confusing, or conflicting. This is especially problematic for agents, which rely on context to gather information, synthesize findings, and coordinate actions.

Part II: How to Fix Your Contexts

Context management is the hardest part of building an agent. The agent designer’s job is programming the LLM to, as Karpathy says, “pack the context windows just right”: smartly deploying tools and information, and performing regular context maintenance.

Thankfully, you can mitigate or avoid the issues described in Part I with just a handful of tactics.

Recent Writing

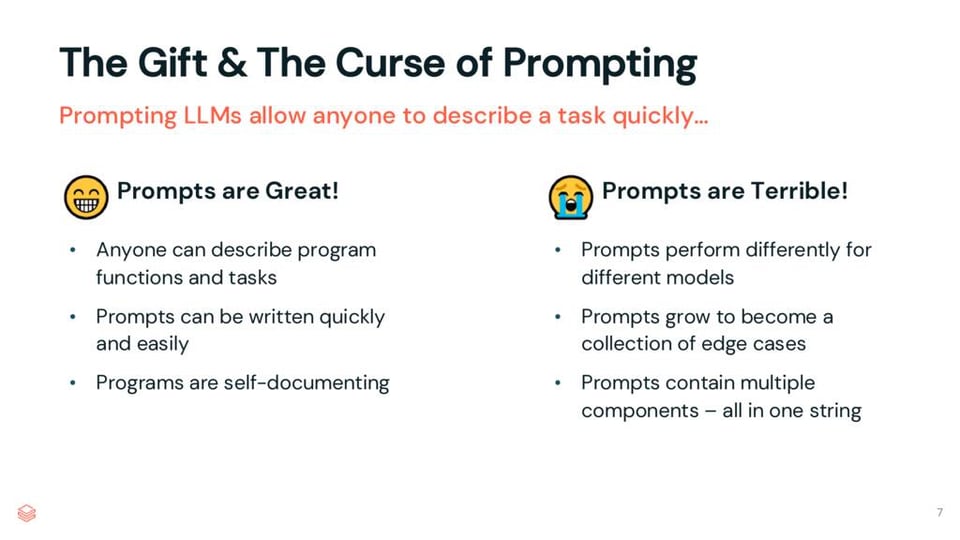

“Prompt Engineering” vs. “Context Engineering” Isn’t a Zero Sum Game: Time to pull out that classic Stewart Brand adage, “If you want to know where the future is being made, look for where language is being invented and lawyers are congregating.”

Last week, we saw language being negotiated among AI researchers, who debated the utility of the phrase “context engineering,” especially as it relates to “prompt engineering.” The dust has yet to fully settle, but I think these terms aren’t mutually exclusive. Here’s how I see them shaking out:

The Drawbridges Go Up: We’re relearning lessons we learned during the Web 2.0 era:

The drawbridges are coming up quickly, accelerated by consolidation. Data remains a moat and our dreams of proliferating MCPs are likely a mirage (at least for non-paying users). MCP, like the APIs of Web 2.0, will shake out as merely a protocol, not a movement. It will be a mechanism for easy LLM tool use, but those tools will be tightly governed and controlled.

Let the Model Write the Prompt: Last month I gave a talk at Databricks’ Data + AI Summit, in which I ranted against prompts in your code and detailed how DSPy can help. It went great: we filled the room, and the ensuing Q&A went long enough that we had to be kicked out. You can read through the write-up or watch the video.

Claude’s System Prompt Changes Reveal Anthropic’s Priorities: By comparing Claude’s 3.7 system prompt to its 4.0 system prompt, we can learn how Anthropic is using system prompts to evolve their applications (specifically their UX) and how the prompts fit into their development cycle.

Using ‘Slop Forensics’ to Determine Model Ancestry: Sam Paech analyzes LLMs and uses the unique words and phrases used by each one to generate a ‘slop profile’. He then uses these fingerprints to deduce model lineage and training data.
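
To make the fingerprinting idea concrete, here is a toy sketch. It is not Sam Paech's actual method. It assumes a "slop profile" can be approximated as the words a model uses much more often than some baseline text, and that two profiles can be compared with cosine similarity. The function names (`slop_profile`, `similarity`) and the add-one smoothing are my own illustrative choices.

```python
from collections import Counter
import math
import re


def word_counts(text):
    """Lowercase the text and count its words."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def slop_profile(model_text, baseline_text, top_n=5):
    """Return the top_n words the model overuses relative to the baseline.

    Assumption: overuse = model word rate / smoothed baseline word rate.
    """
    model = word_counts(model_text)
    base = word_counts(baseline_text)
    model_total = sum(model.values())
    base_total = sum(base.values())

    scores = {}
    for word, count in model.items():
        model_rate = count / model_total
        # Add-one smoothing so words missing from the baseline don't divide by zero.
        base_rate = (base[word] + 1) / (base_total + 1)
        scores[word] = model_rate / base_rate

    # Highest score first; ties broken alphabetically for stable output.
    top = sorted(scores, key=lambda w: (-scores[w], w))[:top_n]
    return {w: scores[w] for w in top}


def similarity(profile_a, profile_b):
    """Cosine similarity between two profiles (0.0 when they share no words)."""
    shared = set(profile_a) & set(profile_b)
    dot = sum(profile_a[w] * profile_b[w] for w in shared)
    norm_a = math.sqrt(sum(v * v for v in profile_a.values()))
    norm_b = math.sqrt(sum(v * v for v in profile_b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)
```

In this toy framing, you would build one profile per model from a large sample of its outputs and treat a high `similarity` score between two models as a hint (not proof) of shared lineage or training data.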

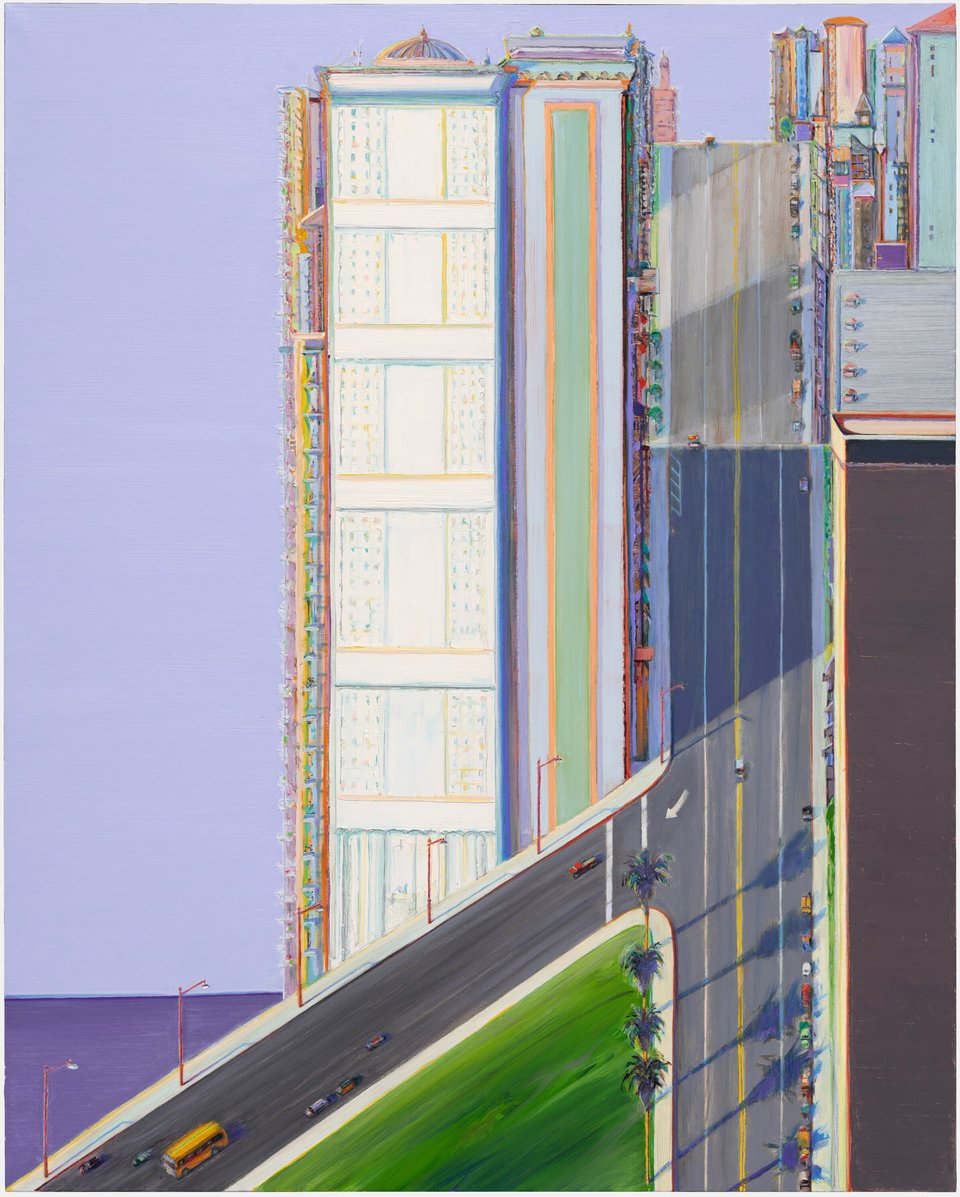

Art Break

The Legion of Honor has an exhibition of Wayne Thiebaud’s work going until August 17th. I need to visit; I’ve always loved his street paintings.

Until next month,