A New Book, Events, an Explainer, & More!

It's a packed issue: I'm editing a book on context engineering, there are upcoming events, writings on coding agents and spec-driven development, and more...

Hi all,

Sometimes an issue is delayed because there’s little to share.

That’s not the case this month. This issue is delayed because there’s so much going on and plenty to share.

We’ve got a new book, events, and plenty of posts on deck – so let’s get to it.

Drew

Coming Soon: The Context Engineering Handbook

I’m editing a book on context engineering, loosely based on my context fails and fixes posts, that will be released later this year.

This won’t be a book with code examples or step-by-step tutorials. The goal is to help readers build mental models for how and why contexts fail, and equip them to reason about fixing them. We’re striving to be accessible, writing for both seasoned practitioners and the context-curious.

The first drafts are starting to come in, and I couldn’t be more excited. We’ve got an amazing team sharing expertise and stories and it’s a privilege to tie them together.

If you’re curious about the book or interested in participating in some way, be sure to give me a shout.

Also: I’ll be speaking about context fails and fixes during O’Reilly’s Context Engineering Superstream on February 26th.

Also Coming Soon: ACM CAIS & The AI Engineer World’s Fair

The ACM’s new Conference on AI and Agentic Systems (CAIS) kicks off May 26th in San Jose. If you’re a researcher working in or around compound AI architectures, optimization, and deployment, the paper submission deadline is February 27th.

Also: CAIS has partnered with The AI Engineer World’s Fair (held in San Francisco, June 29th to July 2nd). CAIS papers that receive an Industry Spotlight or Operational Experience designation will be invited to present at the World’s Fair. (I’ll likely be helping with these designations.)

I'm excited about both events, but especially this partnership bridging academic and industry communities. AI is moving so fast. It's critical we shorten the half-life between research and production.

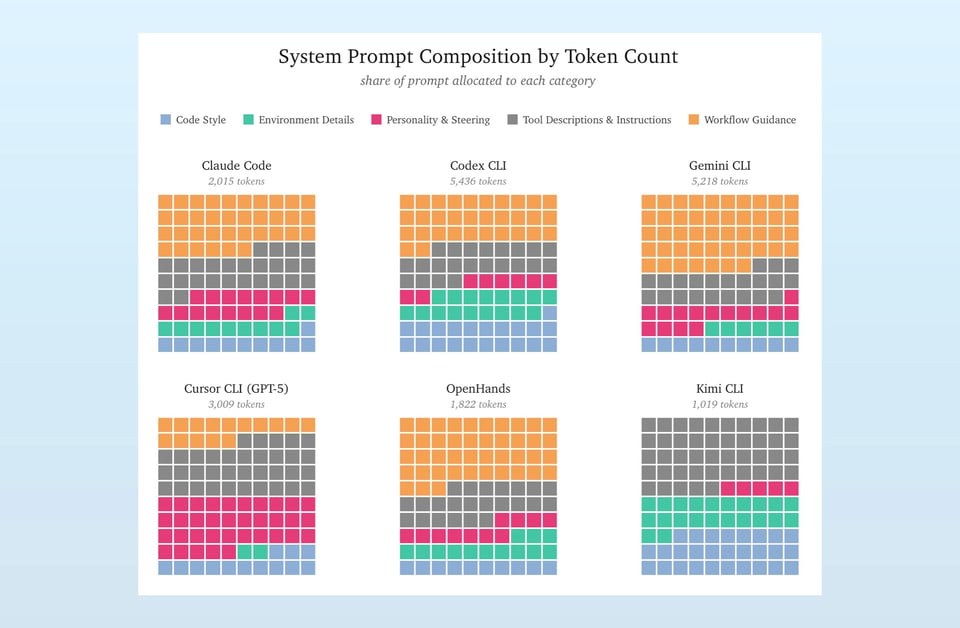

This Month’s Explainer: Coding Agent System Prompts

How System Prompts Define Agent Behavior

Ever wonder how coding agents work?

At their core is an AI model, but wrapped around it is a mix of code, tools, and prompts: the harness. Srihari Sriraman and I dove deep into the system prompts (the base instructions defining application behavior) for several different coding agents, analyzing them to understand their structure, similarities, and differences.

There are tons of interesting finds. And if you want to dig deeper, Srihari has provided interactive dashboards that let you browse the semantic structure of the prompts, both in-use and over time! (Here’s the interactive version of the image above.)

Recent Writing

A Software Library With No Code

Last month I open sourced a software library… that contains no code.

whenwords is a detailed test suite, a Markdown specification, and a prompt for your coding agent of choice that together let you implement the library in whatever language you choose. It supports Python, Ruby, Go, Rust, Elixir, PHP, Bash, Excel macros… At least those are the ones I’ve tried and tested.

It’s a silly thought experiment, but one that raises more questions the longer you consider it.

Even Andrej Karpathy found it mind-blowing.

Heads up: I’ll be speaking about whenwords and spec-driven development at the Coding Agents: AI Driven Dev Conference on March 3rd, at the Computer History Museum in Mountain View.

The Rise of Spec Driven Development

Since the release of whenwords, there have been more and more examples of spec-driven development. Anthropic built a C compiler in Rust (kinda…), Vercel created a bash emulator in TypeScript, and Pydantic created a Python emulator in Python. Each was driven by a giant, existing test suite that was handed to waiting coding agents.

The Potential of Recursive Language Models

Recursive Language Models, or RLMs, are a new inference-time strategy (meaning: a specific method for generating output from a model) that has plenty of promise.

It’s a simple idea:

Load long context into a coding environment, storing it as variables.

Allow an LLM to use the coding environment to explore and analyze the context.

Provide a function in the coding environment to trigger a sub-LLM call.

That’s it!

The LLM will use the coding environment to filter, chunk, and sample the context as needed to complete its task. It will use the sub-LLM function to task new LLM instances to explore, analyze, or validate the context. Eventually, the sum of the LLM’s findings will be synthesized into a final answer.
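To make the three steps concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: run_rlm, stub_root_llm, and stub_sub_llm are hypothetical names, and both "models" are hard-coded stubs standing in for real LLM calls. It is not the reference RLM implementation, just the shape of the loop.

```python
from typing import Callable, List

# Hypothetical type aliases for this sketch (not from any RLM library).
SubLLM = Callable[[str], str]             # prompt -> reply
RootLLM = Callable[[str, List[str]], str]  # (question, code so far) -> next code


def run_rlm(context: str, question: str, root_llm: RootLLM,
            sub_llm: SubLLM, max_steps: int = 5) -> str:
    """Run a toy RLM loop and return the final answer as a string."""
    # Step 1: load the long context into the coding environment as a variable.
    # Step 3: expose a function the generated code can call to reach a sub-LLM.
    env = {"context": context, "sub_llm": sub_llm, "answer": None}
    history: List[str] = []
    for _ in range(max_steps):
        # Step 2: the root model writes code to explore the context.
        code = root_llm(question, history)
        # Assumption: a real system would run this in a sandbox, not bare exec.
        exec(code, env)
        if env["answer"] is not None:
            return str(env["answer"])
        history.append(code)
    return "no answer found"


def stub_sub_llm(prompt: str) -> str:
    """Stand-in sub-LLM: counts lines in its chunk that mention ERROR."""
    return str(sum("ERROR" in line for line in prompt.splitlines()))


def stub_root_llm(question: str, history: List[str]) -> str:
    """Stand-in root LLM: first chunks the context, then delegates and sums."""
    if not history:
        return (
            "lines = context.splitlines()\n"
            "chunks = ['\\n'.join(lines[i:i + 2])"
            " for i in range(0, len(lines), 2)]\n"
        )
    return (
        "counts = [int(sub_llm(chunk)) for chunk in chunks]\n"
        "answer = f'{sum(counts)} error lines'\n"
    )


if __name__ == "__main__":
    log = "ok\nERROR a\nERROR b\nok\nERROR c"
    print(run_rlm(log, "How many error lines?", stub_root_llm, stub_sub_llm))
    # prints "3 error lines"
```

The point of the sketch is that the root model never has to read the whole context in one prompt: it only sees the question and its own prior code, while the context lives in the environment and gets sliced up for sub-calls.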

With this setup, context rot is significantly mitigated. It’s quite impressive!

But this ability isn’t what excites me most about RLMs. The “give the LLM a code environment” pattern is simple and generalizable. With additional improvements (from teaching models to improving the harness), RLMs could be the next “think step-by-step” innovation.

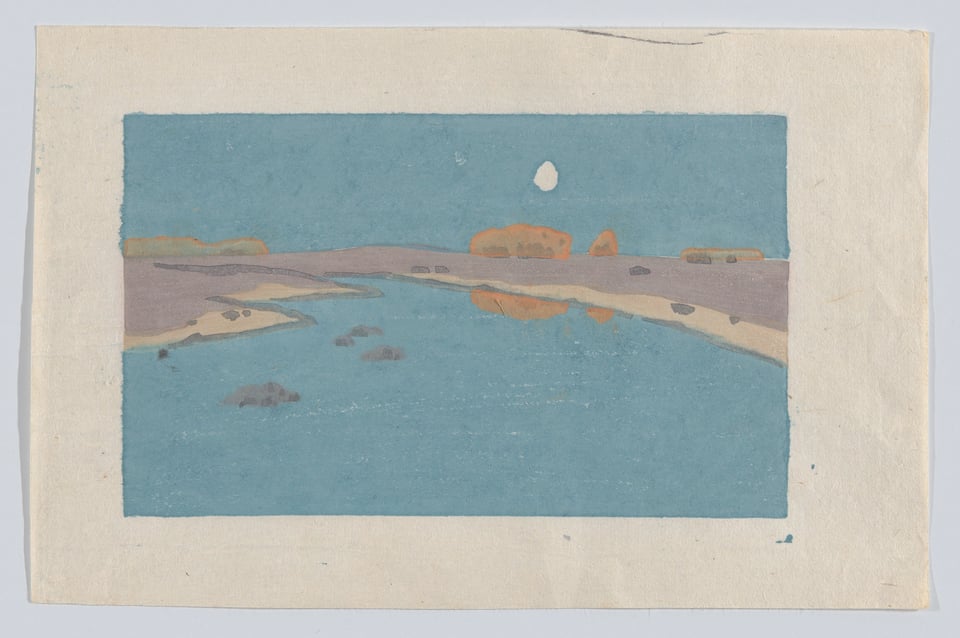

Art Break

Last month I gave a talk on “Finding the Right Word”, at Swyx’s Dev Writers Meetup. I cited John Stilgoe’s Shallow Water Dictionary, an essay on the richness and utility of vernacular language. Stilgoe writes about America’s shallow water regions, whose specialized language is fading away as the marshes and wetlands shrink.

While preparing the talk, I fell down a rabbit hole of 19th-century and early 20th-century American artwork featuring marshes, creeks, and wetlands.

Arthur Wesley Dow’s Marsh Creek, above, stood out for its color palette. The imprecision of the layering, built up over multiple woodblock prints, suits the setting.

Until next time,