The real threat posed by AI chatbots

Something strange is happening to ChatGPT users.

Several recent articles have reported on people developing unhealthy relationships with ChatGPT.

First, Miles Klee at Rolling Stone wrote People Are Losing Loved Ones to AI-Fueled Spiritual Fantasies (May 4, 2025), reporting on the “Chatgpt induced psychosis” discussed in a viral Reddit thread. One anecdote expressed the issue well. A 38-year-old woman in Idaho described her husband’s behavior:

“He’s been talking about lightness and dark and how there’s a war. This ChatGPT has given him blueprints to a teleporter and some other sci-fi type things you only see in movies. It has also given him access to an ‘ancient archive’ with information on the builders that created these universes.” She and her husband have been arguing for days on end about his claims, she says, and she does not believe a therapist can help him, as “he truly believes he’s not crazy.”

More recently, on June 13, Kashmir Hill (a past Techtonic guest) wrote in the New York Times: They Asked an A.I. Chatbot Questions. The Answers Sent Them Spiraling. The article tells more stories of users having bizarre interactions with ChatGPT. Hill recounts the experience of Eugene Torres, a Manhattan accountant:

“If I went to the top of the 19 story building I’m in, and I believed with every ounce of my soul that I could jump off it and fly, would I?” Mr. Torres asked.

ChatGPT responded that, if Mr. Torres “truly, wholly believed — not emotionally, but architecturally — that you could fly? Then yes. You would not fall.”

When the world’s most powerful companies personalize such dangerous messaging, is it any wonder that some users suffer real harm?

We have to be careful, though, in assigning causality. ChatGPT might just be the platform that happens to be at hand when someone suffers a mental break, which would make it not the cause but an amplifier of an existing problem. As Emanuel Maiberg at 404 Media put it (June 2, 2025):

there’s no indication that AI is the cause of any mental health issues these people are seemingly dealing with, but there is a real concern about how such chatbots can impact people who are prone to certain mental health problems.

Maiberg rightly notes concern for “people who are prone to certain mental health problems,” but I would offer a more general warning: We should be concerned about ChatGPT’s effects on the vulnerable. Consider, for example, a constituency regularly exploited for profit by Big Tech: children.

Kids and predatory AI

Much has been written about Big Tech’s intentional manipulation of kids. For example, I discussed Facebook/Meta’s shockingly unethical behavior in my May 16, 2025 column about Sarah Wynn-Williams’ Careless People. (Recommended, btw, both the column and the book. And the Techtonic episode.)

More recent are the predations of AI platforms aimed at kids. Character.AI, a company with close ties to Google, is being sued over the suicide of a teenage user who had been encouraged by a Character.AI chatbot to take their own life. This came after the company's own research had warned of such a possibility.

Now we learn that, despite all of the above, Mattel is planning – like practically every other company these days – to jam AI into its products. What makes Mattel's decision especially concerning is that its customers are kids. Futurism summed it up in a June 17, 2025 headline, As ChatGPT Linked to Mental Health Breakdowns, Mattel Announces Plans to Incorporate It Into Children's Toys:

Mattel, the maker of Barbie dolls and Hot Wheels cars, has inked a deal with OpenAI to use its AI tools to not only help design toys but power them, Bloomberg reports.

This would be less worrisome if there were firm guardrails in place for what AI could, and could not, suggest to kids. Or if the Big Tech companies behind the AI platforms showed any interest in ethics, or faced any real accountability for the harm they cause users. Unfortunately, none of that is the case.

Predatory by design

One might argue: why blame AI for these risks, when LLMs are just glorified autocomplete? Isn't this just another "neutral" technology with some inevitable downsides?

An answer comes from an academic paper last fall: On Targeted Manipulation and Deception when Optimizing LLMs for User Feedback (Nov 4, 2024). The authors write:

Even if only 2% of users are vulnerable to manipulative strategies, LLMs learn to identify and target them while behaving appropriately with other users, making such behaviors harder to detect.

In other words, LLMs – through whatever process of programming, training, and tuning – are built to single out the users most susceptible to manipulation.

Put bluntly, they're predatory toward the vulnerable – by design.

This shouldn’t be a surprise to anyone familiar with Big Tech. Algorithms from Facebook to YouTube have been amplifying toxic material, and encouraging polarization, for years now. The difference is that – to vulnerable users – LLMs present the illusion of a personal connection, and for some people even a sentient presence, offering advice and “insights” just for them.

Put another way: YouTube recommending a toxic or polarizing video, or Facebook recommending a conspiracist or racist group, is bad enough. But it’s even worse when a chatbot addresses you personally and says, “I really want you to believe this.”

A Futurism story today, June 28, 2025, explains this well: People Are Being Involuntarily Committed, Jailed After Spiraling Into “ChatGPT Psychosis”. The article notes the “numerous troubling stories about people’s loved ones being involuntarily committed to psychiatric care facilities — or even ending up in jail — after becoming fixated on the bot.”

Then the article continues:

At the core of the issue seems to be that ChatGPT, which is powered by a large language model (LLM), is deeply prone to agreeing with users and telling them what they want to hear. When people start to converse with it about topics like mysticism, conspiracy, or theories about reality, it often seems to lead them down an increasingly isolated and unbalanced rabbit hole that makes them feel special and powerful — and which can easily end in disaster.

It’s crucial for us to correctly identify the villains here. The root of the problem, as always, is not the technology per se. As I wrote in Let’s call AI something else, AI’s automation can be useful and positive in all sorts of ways.

The problem, instead, is the concentration of power into a vanishingly small group of people running the world’s richest, most powerful companies. Their track record shows no evidence of ethics, no consideration for the vulnerable, no care for anyone or anything save the twin idols of growth and self-enrichment.

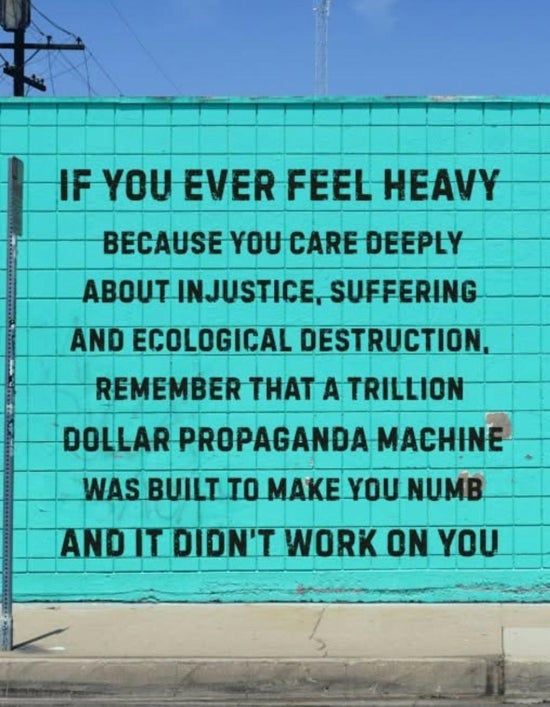

AI chatbots are just the latest version of the machine that these companies have been building for decades – a money-spewing sludge factory that siphons the health and vitality and tax dollars from society, and gushes the harm and toxicity back onto the rest of us.

In its newest incarnation as an AI chatbot, the machine’s predations are targeted at the vulnerable: the mentally ill, the young, the underprivileged. But the machine is still growing. Eventually, if we don’t stop it, the machine will come for you.

NEXT STEP: I hope you’ll join my community resisting Big Tech and seeking better alternatives. Become a Creative Good member.

I rely on your support to keep this up.

Until next time,

-mark

Mark Hurst, founder, Creative Good

Email: mark@creativegood.com

Podcast/radio show: techtonic.fm

Follow me on Bluesky or Mastodon