Some (Probably) Worthless Thoughts About Content Moderation

Here's an incredibly crappy fable: Once there was a young person of great distinction who wore the most scintillating pair of kaleidoscopic pants. We'll call them Beb.

Beb would walk through the town, wearing their kaleidoscopic pants that refracted in every color, and friendly townspeople would call out to Beb that they were looking good and that their pants were bringing some much needed color to the town.

But every day, Beb needed to walk through a walled garden to get to school or work (or wherever it was they went each day). Inside the garden was a gang of bullies, who would jeer and laugh and hurl insults at Beb for having such outrageous rainbow-making pants. Sometimes the bullies would even threaten violence and mention that they knew where Beb lived.

One day, a kindly wizard came upon Beb and offered an amulet of protection. So long as Beb wore the amulet, the wizard promised, they would never know harassment again.

At first, this seemed great. Beb walked happily through the garden, and no trouble came upon them. They were delighted. Until Beb started to realize the truth: the amulet of protection had made it so that they would never see the people harassing and yelling at them, but those people were still there, and their threats of violence and persecution had only grown more vile while Beb wore the amulet. In fact, the bullies were using this space to organize their activities and recruit others.

So Beb returned to the wizard in indignation. Why did you tell me this amulet would keep me safe? they asked.

The wizard responded: I promised only that you would never know harassment. I did not promise there wouldn't be any harassment — only that you wouldn't know about it.

This parable feels like a pretty good description of how some newer social media companies seem to be approaching the issues of content moderation. Their pitch, as I understand it, is that you can choose not to see any horrible behavior — but it'll still be there, and it might still focus on you. And eventually, it'll become impossible to ignore, or well-meaning friends will bring it to your attention by responding to it on your behalf.

And here's where I pause to offer a disclaimer: I don't do this shit for a living. There are people who think about this stuff way more than I do, and I'm just sharing something that's been on my mind lately.

But anyway, this is what I'm taking from recent comments by Chris Best at Substack and Jay Graber at Bluesky, including Best's infamous interview with The Verge's Nilay Patel. I feel like there's a lot of talk about controlling your experience and having control over what you see, and not a lot of talk about having strong moderation policies. Yes, Bluesky has been banning a few high-profile bad actors recently, but the rationale seems to be that it's a closed beta and can kick people off for any reason. Unless I've missed it, though, there's no talk of having a set of enforceable community standards on what is currently a single site, even if there may be some form of federation in the future. And as Evelyn Douek and Alex Stamos pointed out on last week's Moderated Content podcast, there's no trust and safety team.

In place of having a set of clearly explained content moderation policies and moderators to enforce them, Bluesky promises it'll have a thing called "composable moderation." And there's a menu that you can access from your user settings. From what I can glean, this means that you can choose whether to see stuff like "self harm," violence, sexual content or hate groups. Here's what it looks like:

You'll notice it's all "hide" and "show," meaning that it's the functional equivalent of the amulet in the fable I shared earlier. You can choose not to see "political hate groups," but they'll still be there. (Again, this is what I've gleaned; I welcome any and all corrections.)

So I have two concerns about this concept.

First, who decides whether someone is a member of a "political hate group"? I worry that this label will be based on user-generated input. If enough Bluesky users decide that a particular account deserves the hate-group label, that account will be hidden from anyone who toggles that slider all the way to the left. But what's to stop a ton of racists from labeling Black people as members of a hate group? Or transphobes from mass-reporting trans people as hate-group members?

And second, there's the fact that hiding content doesn't make it go away.

Twitter has long had a feature that lets you see notifications only from people you follow, which is great in theory. With that box checked, you'll only see replies and quote-tweets from the people you've decided to trust. But in practice, if your tweet gets a ton of hostile replies and quote-tweets, you will absolutely know about it. They'll show up if you look at the permalink for your actual tweet, and your trusted followers will inevitably start arguing with the folks you don't follow. Plus, it takes a lot of willpower to avoid looking at a swarm of replies or quote-tweets when you know they're there.

And as I said above, even if you decide you don't want to see hate-group posts, you'll still find out about them if they target you. At a certain point, it's impossible to ignore.

Here's a good place to mention that I'm enjoying Bluesky a lot so far — a ton of Black Twitter folks who were turned off by Mastodon's well-documented racism problem are hanging out there, along with many other folks I enjoyed chatting with on Twitter. But I'm also very aware that I'm enjoying it, in part, because it's still a small community and we're at the start, rather than the end, of the "enshittification" process*.

But back to my main point: I feel as though any theory of moderation that involves something like Beb's amulet is doomed to fail. And as various people have pointed out lately, it's usually a mistake to try and find technological solutions to social problems. Sure, it's probably true, as Techdirt's Mike Masnick says, that content moderation is impossible to do well at scale — but I don't think that's an excuse to give up. Or to surrender to some techno-libertarian ideal of "free speech**" in which marginalized people basically have to shut up or face torrents of abuse. I'd rather be on a platform that has robust rules against misinformation and hate speech (including wilful misgendering!) but sometimes fails to enforce those rules, than one which says "Anything goes, but you can avert your gaze from the worst of it."

Because the end state of a platform that allows hate speech but gives you the option to "hide" it is that hate speech proliferates. Mobs form. Assholes coordinate their abuse and use sock-puppets to boost their presence, and eventually this spills over into real-world harm.

What's my preferred solution? I'm hoping social media will just die, and something better will take its place. I think the message-board-as-megaphone-to-the-world concept is inherently flawed, and the future involves smaller, more insular communities that can police themselves. Mostly, I'm tired of spending time on a platform where all my favorite people are hanging out, but I constantly have to bite my tongue because I don't want to bring down a swarm of abusive jerks on my head. A space can be either social or open to the entire world, and they both have their uses. This newsletter is published to everyone who wants to read it, but there are no comments and I am very aware that I'm publishing, not socializing or shitposting. I'd love to have a space to hang out with cool weirdos online, but only if the worst people are not allowed.

*a term coined by Cory Doctorow, meaning that platforms start off having a great user experience until you're stuck on them due to network effects, at which point they get shittier.

**want more of my thoughts on free speech, "cancel culture" and Twitter? Here ya go.

Something I Love this Week

The other night, Annalee and I watched a 2022 movie called Anything's Possible, about a teenage trans girl who falls in love with a boy in her art class. This was the sweetest, loveliest romcom I've seen in forever, and it's especially a balm to your soul in this era of organized panic around trans teens. Eva Reign is so perfect as Kelsa, and the entire cast just sparkles. It's a goddamn delight; stick around for the cute musical number in the end credits. Anything's Possible was directed by Billy Porter, who played Pray Tell in Pose, and I keep finding new layers to my Billy Porter fandom. I also highly, massively recommend an album Porter put out several years ago called The Soul of Richard Rodgers, featuring soul covers of old musical numbers by Rodgers. It's just magical.

My Stuff

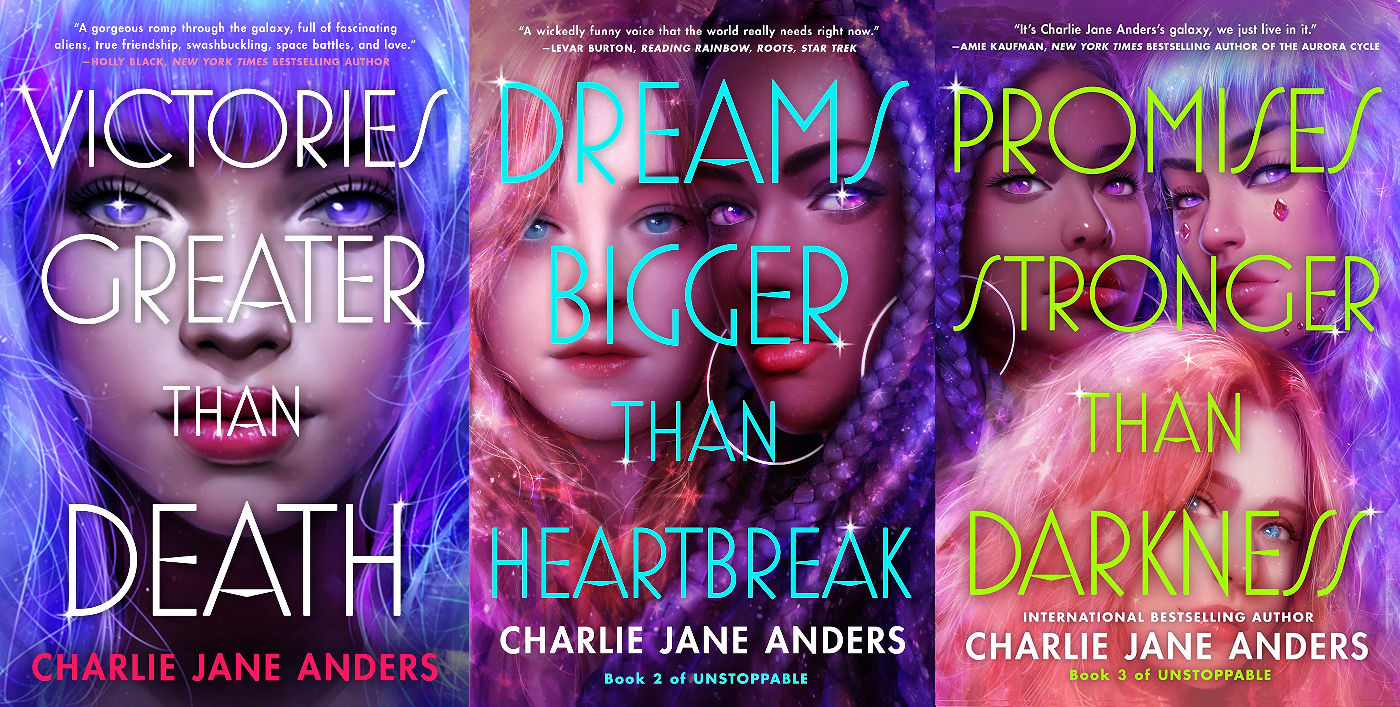

It's been about a month since Promises Stronger Than Darkness, the third book in my young adult Unstoppable trilogy, came out. I've been so blown away by the response to this one, and I'm so glad folks are able to read the whole trilogy in one go now. (See last week's newsletter for more about how people are responding to Promises.)

Also! There's a trade paperback collecting the three issues of New Mutants that I wrote featuring Escapade, the trans superhero I co-created. I'm super proud of this storyline, in which Escapade, Cerebella and some other teens are captured by the U-Men. Unfortunately, due to a metadata snafu, you can't actually find out that this trade paperback contains my story, so you'll have to take my word for it... Sigh.

And I'm still publishing a new miniseries, New Mutants: Lethal Legion, in which Escapade, Cerebella and Scout decide to do a heist and it goes even wronger than heists usually do. The first two issues are out now, and issue three comes out May 24!