Managing Sysinternals with PowerShell

Today's newsletter is another excuse for me to use PowerShell. You may have no practical need for the code, but I am hoping you'll learn something nonetheless.

I have been using the Sysinternals tools for years, probably since they were introduced almost 30 years ago. Even though Mark Russinovich has his hands full with Azure, he still finds time to update the tools now and then. Or at least someone does. The tools are documented at https://learn.microsoft.com/sysinternals/

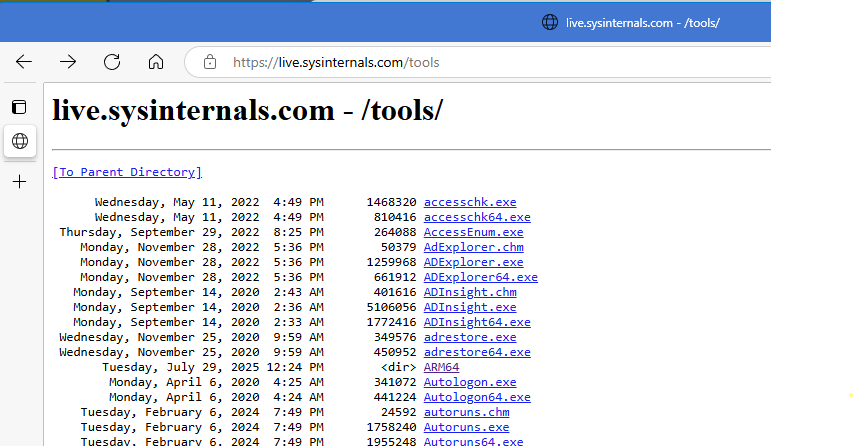

Even better, Microsoft exposes the tools at https://live.sysinternals.com/tools. The address https://live.sysinternals.com/ will also work.

You can click on a tool to download it. But wouldn't it be nicer to download all of the tools at once? Maybe using PowerShell?
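If you only need a single tool, you don't need anything special. Invoke-WebRequest can fetch it over HTTPS without the WebClient service. Here's a minimal sketch. It assumes each file is served at https://live.sysinternals.com/tools/ followed by the file name, and Get-SysinternalsToolUri and Save-SysinternalsTool are hypothetical helper names, not part of any module.

```powershell
# Hypothetical helper: build the download URL for a tool.
# Assumes each file is served as <base>/<file name>,
# e.g. https://live.sysinternals.com/tools/whois.exe
function Get-SysinternalsToolUri {
    param(
        [Parameter(Mandatory)]
        [string]$Name,
        [string]$BaseUri = 'https://live.sysinternals.com/tools'
    )
    '{0}/{1}' -f $BaseUri.TrimEnd('/'), $Name
}

# Hypothetical helper: download a single tool over HTTPS.
# This approach does not need the WebClient service.
function Save-SysinternalsTool {
    param(
        [Parameter(Mandatory)]
        [string]$Name,
        [Parameter(Mandatory)]
        [string]$Destination
    )
    # Create the destination folder if it doesn't exist
    if (-not (Test-Path -Path $Destination)) {
        New-Item -Path $Destination -ItemType Directory | Out-Null
    }
    $outFile = Join-Path -Path $Destination -ChildPath $Name
    # -UseBasicParsing avoids the Internet Explorer engine in Windows PowerShell 5.1;
    # PowerShell 7 accepts the parameter but ignores it
    Invoke-WebRequest -Uri (Get-SysinternalsToolUri -Name $Name) -OutFile $outFile -UseBasicParsing
    Get-Item -Path $outFile
}
```

Usage might look like Save-SysinternalsTool -Name whois.exe -Destination D:\temp. That's fine for one file, but it doesn't give you a directory listing to work from.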

Downloading

You can access the web site like a file folder by using the WebClient service, which is the WebDAV client built into Windows. Most likely this service is not running on your computer.

PS C:\> Get-Service WebClient

Status   Name               DisplayName
------   ----               -----------
Stopped  WebClient          WebClient
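Get-Service doesn't display the startup type by default, so a stopped service could just as easily be a disabled one. Here's a small sketch that checks for that. Test-WebClientStartable is a hypothetical helper name, and it only works on Windows, where Get-Service is available.

```powershell
# Hypothetical helper: returns $true if the WebClient service exists
# and its startup type is not Disabled. Windows only.
function Test-WebClientStartable {
    $svc = Get-Service -Name WebClient -ErrorAction SilentlyContinue
    if (-not $svc) {
        # The service was not found on this system
        return $false
    }
    # StartType is a ServiceStartMode value such as Automatic, Manual, or Disabled
    $svc.StartType -ne 'Disabled'
}
```

A result of $True only means the service isn't disabled. To see the raw values, run Get-Service WebClient | Select-Object Name, Status, StartType.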

Assuming it hasn't been disabled or blocked by Group Policy, you should be able to start it. You'll most likely need to do this from an elevated PowerShell session.

PS C:\> Start-Service WebClient

This will allow you to reference the web site like a UNC path.

PS C:\> $file = Join-Path -Path "\\live.sysinternals.com\tools" -ChildPath "whois.exe"
PS C:\> Copy-Item -Path $file -Destination d:\temp -PassThru -Force

    Directory: D:\temp

Mode                 LastWriteTime         Length Name
----                 -------------         ------ ----
-a---           4/6/2020   5:39 AM         398712 whois.exe

Of course, I want to download all of the tools and related files. Here is a PowerShell script that will do just that.

#requires -version 5.1

# Download Sysinternals tools from web to a local folder

param(
    [Parameter(
        Position = 0,
        Mandatory,
        HelpMessage = "Enter the name or path to the destination folder. It will be created if it doesn't exist."
    )]
    [ValidateNotNullOrEmpty()]
    [string]$Path
)

if (-not (Test-Path $Path)) {
    New-Item -Path $Path -ItemType Directory
}

try {
    $svc = Get-Service -Name WebClient -ErrorAction Stop
}
catch {
    Write-Warning "Failed to get the WebClient service. Cannot continue. $($_.Exception.Message)"
    #bail out
    return
}

if ($svc.Status -eq 'Running') {
    <#
    Define a variable to indicate the service was already running
    so that we don't stop it.
    #>
    $Stopped = $False
}
else {
    #start the WebClient service if it is not running
    Write-Host 'Starting WebClient' -ForegroundColor Magenta
    try {
        Start-Service -Name WebClient -ErrorAction Stop
        #remember that this script started the service
        $Stopped = $True
    }
    catch {
        Write-Warning "Failed to start the WebClient service. Cannot continue. $($_.Exception.Message)"
        #bail out
        return
    }
}

Write-Host "Downloading Sysinternals tools from \\live.sysinternals.com\tools to $Path" -ForegroundColor Cyan

#start a timer
$sw = [System.Diagnostics.Stopwatch]::StartNew()

try {
    $files = Get-ChildItem -Path \\live.sysinternals.com\tools -File
    foreach ($file in $files) {
        #there may be null characters in the path which will cause problems.
        #Use FullName because in Windows PowerShell 5.1 a file object may convert to just its name.
        $source = $file.FullName -replace "`0", ""
        Copy-Item -Path $source -Destination $Path -PassThru -Force
    }
}
finally {
    #only stop the service if this script started it, even if the copy was interrupted
    if ($Stopped) {
        Write-Host 'Stopping the web client' -ForegroundColor Magenta
        Stop-Service WebClient
    }
    $sw.Stop()
}

Write-Host "Sysinternals download complete in $($sw.Elapsed)" -ForegroundColor Cyan
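The -replace line in the download loop strips any null characters before the path is handed to Copy-Item. Here's a tiny, self-contained illustration using a made-up file name. Remove-NullCharacter is a hypothetical helper, not a built-in cmdlet.

```powershell
# Hypothetical helper: strip NUL (U+0000) characters from a name or path,
# using the same -replace "`0", "" technique as the download loop
function Remove-NullCharacter {
    param(
        [Parameter(Mandatory, ValueFromPipeline)]
        [string]$Text
    )
    process {
        $Text -replace "`0", ''
    }
}

# A made-up file name with a trailing NUL character
$raw = "whois.exe`0"
$clean = $raw | Remove-NullCharacter
"Before: {0} characters. After: {1} characters." -f $raw.Length, $clean.Length
# Before: 10 characters. After: 9 characters.
```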